AI could help with the next pandemic—but not with this one

Some things need to change if we want AI to be useful next time, and you might not like them.

It was an AI that first saw it coming, or so the story goes. On December 30, an artificial-intelligence company called BlueDot, which uses machine learning to monitor outbreaks of infectious diseases around the world, alerted clients—including various governments, hospitals, and businesses—to an unusual bump in pneumonia cases in Wuhan, China. It would be another nine days before the World Health Organization officially flagged what we’ve all come to know as Covid-19.

BlueDot wasn’t alone. An automated service called HealthMap at Boston Children’s Hospital also caught those first signs, as did a model run by Metabiota, based in San Francisco. That AI could spot an outbreak on the other side of the world is pretty amazing, and early warnings save lives.

But how much has AI really helped in tackling the current outbreak? That’s a hard question to answer. Companies like BlueDot are typically tight-lipped about exactly who they provide information to and how it is used. And human teams say they spotted the outbreak the same day as the AIs. Other projects in which AI is being explored as a diagnostic tool or used to help find a vaccine are still in their very early stages. Even if they are successful, it will take time—possibly months—to get those innovations into the hands of the health-care workers who need them.

The hype outstrips the reality. In fact, the narrative that has appeared in many news reports and breathless press releases, that AI is a powerful new weapon against diseases, is only partly true and risks becoming counterproductive. For example, too much confidence in AI’s capabilities could lead to ill-informed decisions that funnel public money to unproven AI companies at the expense of proven interventions such as drug programs. It’s also bad for the field itself: inflated expectations that went unmet have led to a crash of interest in AI, and a consequent loss of funding, more than once in the past.

So here’s a reality check: AI will not save us from the coronavirus—certainly not this time. But there’s every chance it will play a bigger role in future epidemics—if we make some big changes. Most won’t be easy. Some we won’t like.

There are three main areas where AI could help: prediction, diagnosis, and treatment.

Prediction

Companies like BlueDot and Metabiota use a range of natural-language processing (NLP) algorithms to monitor news outlets and official health-care reports in different languages around the world, flagging whether they mention high-priority diseases, such as coronavirus, or more endemic ones, such as HIV or tuberculosis. Their predictive tools can also draw on air-travel data to assess the risk that transit hubs might see infected people either arriving or departing.
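Neither company has published its pipeline, so what follows is only a toy sketch of the two ideas in the paragraph above: flag reports that mention a watch-list disease, then rank destination airports by how many travelers arrive from flagged cities. The watch list, report text, routes, and passenger counts are all invented for illustration, and plain keyword matching stands in for real NLP.

```python
from collections import defaultdict

# Hypothetical watch list; real systems track many diseases in many languages.
PRIORITY_TERMS = {"pneumonia", "coronavirus", "influenza"}


def flag_report(text: str) -> set[str]:
    """Return the watch-list terms that appear in a report (case-insensitive)."""
    words = {word.strip(".,;:!?()").lower() for word in text.split()}
    return PRIORITY_TERMS & words


def hub_risk(flagged_cities: set[str], flights: list[tuple[str, str, int]]) -> dict[str, int]:
    """Total passengers arriving at each destination from a flagged city, highest first."""
    risk: dict[str, int] = defaultdict(int)
    for origin, destination, passengers in flights:
        if origin in flagged_cities:
            risk[destination] += passengers
    return dict(sorted(risk.items(), key=lambda item: item[1], reverse=True))


# Invented example data: report snippets by city and monthly passengers per route.
reports = {
    "Wuhan": "Cluster of unexplained pneumonia cases reported.",
    "Lyon": "Local football results.",
}
flights = [("Wuhan", "Bangkok", 900), ("Wuhan", "Tokyo", 600), ("Lyon", "Paris", 5000)]

flagged = {city for city, text in reports.items() if flag_report(text)}
print(hub_risk(flagged, flights))  # {'Bangkok': 900, 'Tokyo': 600}
```

The Lyon route carries the most passengers but drops out entirely, because nothing reported there matched the watch list.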

The results are reasonably accurate. For example, Metabiota’s latest public report, on February 25, predicted that on March 3 there would be 127,000 cumulative cases worldwide. It overshot by around 30,000, but Mark Gallivan, the firm’s director of data science, says this is still well within the margin of error. It also listed the countries most likely to report new cases, including China, Italy, Iran, and the US. Again: not bad.
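The figures quoted above imply an actual count of roughly 97,000 cases on March 3. Because the overshoot is itself given only approximately, treat the result as a ballpark:

```python
# Figures as quoted from Metabiota's February 25 report.
predicted = 127_000  # predicted cumulative cases for March 3
overshoot = 30_000   # "overshot by around 30,000" (approximate)

implied_actual = predicted - overshoot       # roughly 97,000 cases
relative_error = overshoot / implied_actual  # overshoot as a share of the actual count

print(f"implied actual: {implied_actual:,}")    # implied actual: 97,000
print(f"relative error: {relative_error:.0%}")  # relative error: 31%
```

In other words, the forecast came in roughly 30 percent high, which Gallivan still counts as within the model's margin of error.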

Others keep an eye on social media too. Stratifyd, a data analytics company based in Charlotte, North Carolina, is developing an AI that scans posts on sites like Facebook and Twitter and cross-references them with descriptions of diseases taken from sources such as the National Institutes of Health, the World Organisation for Animal Health, and the global microbial identifier database, which stores genome sequencing information.
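Stratifyd hasn’t said how its matching works. As a rough sketch of the general idea, one simple approach scores each post against each disease description with bag-of-words cosine similarity. The two abbreviated descriptions below are invented, not taken from the NIH or any other source named above.

```python
import math
from collections import Counter

# Invented, heavily abbreviated symptom descriptions (not from any real database).
DISEASES = {
    "respiratory infection": "fever dry cough shortness of breath fatigue",
    "food poisoning": "nausea vomiting diarrhea stomach cramps",
}


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts with surrounding punctuation stripped."""
    return Counter(word.strip(".,;:!?").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_match(post: str) -> tuple[str, float]:
    """Return the disease description most similar to a post, with its score."""
    post_vector = bag_of_words(post)
    scores = {name: cosine(post_vector, bag_of_words(desc)) for name, desc in DISEASES.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]


print(best_match("Awful fever and a dry cough since Tuesday"))
```

A real system would also have to cope with slang, misspellings, and many languages, and would usually weight informative words more heavily than common ones (TF-IDF is a typical choice).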

Work by these companies is certainly impressive, and it shows how far machine learning has advanced in recent years. A few years ago Google tried to predict outbreaks with its ill-fated Flu Trends service, which badly overestimated the 2013 flu peak and was shelved in 2015. What changed? Mostly, the latest software can listen in on a much wider range of sources.

Unsupervised machine learning is also key. Letting an AI identify its own patterns in the noise, rather than training it on preselected examples, highlights things you might not have thought to look for. “When you do prediction, you're looking for new behavior,” says Stratifyd’s CEO, Derek Wang.
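Production systems are far more elaborate, but the core unsupervised idea (learn what normal looks like from unlabeled data, then flag departures from it) can be sketched with a rolling baseline. Strictly speaking this is a simple statistical anomaly detector rather than a trained model; the daily counts, seven-day window, and three-standard-deviation threshold are assumptions chosen for illustration.

```python
import statistics


def flag_spikes(counts: list[int], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices of days whose count sits more than `threshold` standard
    deviations above the mean of the preceding `window` days.

    No labeled outbreak examples are used: the baseline comes from the data itself.
    """
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mean = statistics.mean(history)
        spread = statistics.pstdev(history) or 1.0  # avoid dividing by zero on a flat history
        if (counts[i] - mean) / spread > threshold:
            flagged.append(i)
    return flagged


# Invented daily counts of, say, news items mentioning unexplained pneumonia.
daily_mentions = [4, 5, 3, 6, 4, 5, 4, 5, 21, 6]
print(flag_spikes(daily_mentions))  # [8]
```

Nobody told the detector that 21 mentions is alarming; it inferred that from the quieter days before it.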

But what do you do with these predictions? The initial prediction by BlueDot correctly pinpointed a handful of cities in the virus’s path. This could have let authorities prepare, alerting hospitals and putting containment measures in place. But as the scale of the epidemic grows, predictions become less specific. Metabiota’s warning that certain countries would be affected in the following week might have been correct, but it is hard to know what to do with that information.

What’s more, all these approaches will become less accurate as the epidemic progresses, largely because reliable data of the sort that AI needs to feed on has been hard to get about Covid-19. News sources and official reports offer inconsistent accounts. There has been confusion over symptoms and how the virus passes between people. The media may play things up; authorities may play things down. And predicting where a disease may spread from hundreds of sites in dozens of countries is a far more daunting task than making a call on where a single outbreak might spread in its first few days. “Noise is always the enemy of machine-learning algorithms,” says Wang. Indeed, Gallivan acknowledges that Metabiota’s daily predictions were easier to make in the first two weeks or so.

One of the biggest obstacles is the lack of diagnostic testing, says Gallivan. “Ideally, we would have a test to detect the novel coronavirus immediately and be testing everyone at least once a day,” he says. We also don’t really know what behaviors people are adopting—who is working from home, who is self-quarantining, who is or isn’t washing hands—or what effect it might be having. If you want to predict what’s going to happen next, you need an accurate picture of what’s happening right now.

It’s not clear what’s going on inside hospitals, either. Ahmer Inam at Pactera Edge, a data and AI consultancy, says prediction tools would be a lot better if public health data wasn’t locked away within government agencies as it is in many countries, including the US. This means an AI must lean more heavily on readily available data like online news. “By the time the media picks up on a potentially new medical condition, it is already too late,” he says.

But if AI needs much more data from reliable sources to be useful in this area, strategies for getting it can be controversial. Several people I spoke to highlighted this uncomfortable trade-off: to get better predictions from machine learning, we need to share more of our personal data with companies and governments.

Darren Schulte, an MD and CEO of Apixio, which has built an AI to extract information from patients’ records, thinks that medical records from across the US should be opened up for data analysis. This could allow an AI to automatically identify individuals who are most at risk from Covid-19 because of an underlying condition. Resources could then be focused on those people who need them most. The technology to read patient records and extract life-saving information exists, says Schulte. The problem is that these records are split across multiple databases and managed by different health services, which makes them harder to analyze. “I’d like to drop my AI into this big ocean of data,” he says. “But our data sits in small lakes, not a big ocean.”

Health data should also be shared between countries, says Inam: “Viruses don’t operate within the confines of geopolitical boundaries.” He thinks countries should be forced by international agreement to release real-time data on diagnoses and hospital admissions, which could then be fed into global-scale machine-learning models of a pandemic.

Of course, this may be wishful thinking. Different parts of the world have different privacy regulations for medical data. And many of us already balk at making our data accessible to third parties. New data-processing techniques, such as differential privacy and training on synthetic data rather than real data, might offer a way through this debate. But this technology is still being finessed. Finding agreement on international standards will take even more time.
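Differential privacy has a standard textbook building block, the Laplace mechanism: before releasing a count, add random noise scaled to how much any one person could change it. Below is a minimal sketch for a count such as daily hospital admissions, assuming sensitivity 1 (adding or removing one patient changes the count by at most 1). The admission figure and the privacy budget `epsilon` are invented, and nothing here describes a deployed system.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise of scale 1/epsilon.

    Assumes sensitivity 1: adding or removing one person's record changes
    the true count by at most 1.
    """
    scale = 1.0 / epsilon
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(0)  # seeded only so the example is repeatable
print(round(dp_count(1_284, epsilon=0.5, rng=rng)))  # hypothetical daily admissions
```

Smaller `epsilon` means more noise and stronger privacy but less precise numbers for the models downstream, which is the trade-off still being worked out.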

For now, we must make the most of what data we have. Wang’s answer is to keep humans on hand to interpret what machine-learning models spit out and to discard predictions that don’t ring true. “If one is overly optimistic or reliant on a fully autonomous predictive model, it will prove problematic,” he says. AIs can find hidden signals in the data, but humans must connect the dots.

Early diagnosis

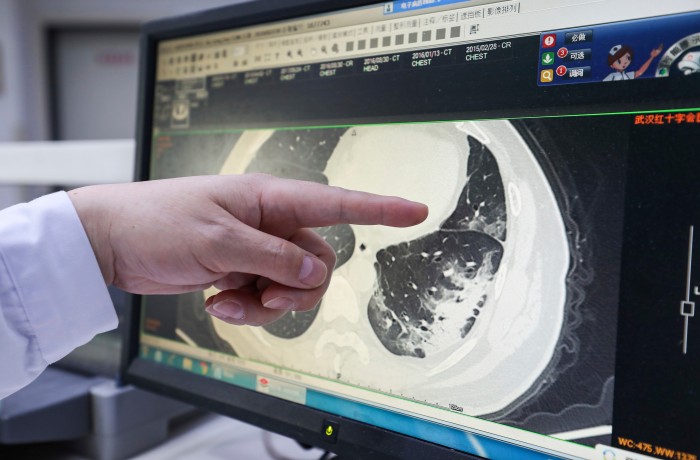

As well as predicting the course of an epidemic, many hope that AI will help identify people who have been infected. AI has a proven track record here. Machine-learning models for examining medical images can catch early signs of disease that human doctors miss, from eye disease to heart conditions to cancer. But these models typically require a lot of data to learn from.

A handful of preprint papers have been posted online in the last few weeks suggesting that machine learning can diagnose Covid-19 from CT scans of lung tissue if trained to spot telltale signs of the disease in the images. Alexander Selvikvåg Lundervold at the Western Norway University of Applied Sciences in Bergen, Norway, who is an expert on machine learning and medical imaging, says we should expect AI to be able to detect signs of Covid-19 in patients eventually. But it is unclear whether imaging is the way to go. For one thing, physical signs of the disease may not show up in scans until some time after infection, which limits the value of imaging as an early diagnostic.

What’s more, since so little training data is available so far, it’s hard to assess the accuracy of the approaches posted online. Most image recognition systems—including those trained on medical images—are adapted from models first trained on ImageNet, a widely used data set encompassing millions of everyday images. “To classify something simple that's close to ImageNet data, such as images of dogs and cats, can be done with very little data,” says Lundervold. “Subtle findings in medical images, not so much.”

That’s not to say it won’t happen, and AI tools could potentially be built to detect early stages of disease in future outbreaks. But we should be skeptical about many of today’s claims of AI doctors diagnosing Covid-19. Again, sharing more patient data will help, and so will machine-learning techniques that allow models to be trained even when little data is available. For example, few-shot learning, where an AI can learn patterns from only a handful of examples, and transfer learning, where an AI already trained to do one thing can be quickly adapted to do something similar, are promising advances but still works in progress.
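One common few-shot recipe combines both ideas: a model pretrained elsewhere (the transfer-learning part) turns each image into a feature vector, and a new case is labeled by whichever class’s handful of examples it sits closest to. The sketch below assumes that feature extraction has already happened; the three-number vectors and class labels are invented, and real features would have hundreds of dimensions.

```python
import math

Vector = list[float]


def centroid(vectors: list[Vector]) -> Vector:
    """Average a few example vectors into a single class prototype."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def classify(query: Vector, support: dict[str, list[Vector]]) -> str:
    """Assign `query` to the class whose prototype is nearest (Euclidean distance)."""
    prototypes = {label: centroid(examples) for label, examples in support.items()}
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Invented "features" for three labeled scans per class: the few shots.
support = {
    "finding present": [[0.9, 0.8, 0.1], [0.8, 0.9, 0.2], [0.85, 0.7, 0.15]],
    "no finding": [[0.1, 0.2, 0.9], [0.2, 0.1, 0.8], [0.15, 0.25, 0.85]],
}
print(classify([0.7, 0.75, 0.3], support))  # finding present
```

The hard part Lundervold describes lives in the feature extractor: if features learned from everyday photos don’t capture subtle findings, no amount of clever averaging downstream will recover them.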

Cure-all

Data is also essential if AI is to help develop treatments for the disease. One technique for identifying possible drug candidates is to use generative design algorithms, which produce a vast number of potential results and then sift through them to highlight those that are worth looking at more closely. This technique can be used to quickly search through millions of biological or molecular structures, for example.
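The generate-then-sift pattern can be shown in miniature. SRI’s tool uses deep learning as the generator; here the generator is plain random sampling over a hypothetical alphabet of building blocks and the scorer is a made-up rule, so only the overall shape of the technique carries over, not any chemistry.

```python
import random

# Hypothetical alphabet of building blocks; real tools work with molecular structures.
BUILDING_BLOCKS = "ABCDEFGH"


def generate(n: int, length: int, rng: random.Random) -> list[str]:
    """Stand-in generator: produce n random candidate strings."""
    return ["".join(rng.choice(BUILDING_BLOCKS) for _ in range(length)) for _ in range(n)]


def toy_score(candidate: str) -> float:
    """Hypothetical property predictor: fraction of 'A' and 'B' blocks."""
    return sum(candidate.count(block) for block in "AB") / len(candidate)


def shortlist(candidates: list[str], keep: int) -> list[str]:
    """Sift the pool, keeping only the highest-scoring candidates for closer study."""
    return sorted(candidates, key=toy_score, reverse=True)[:keep]


rng = random.Random(7)  # seeded so the example is repeatable
pool = generate(10_000, length=12, rng=rng)
top = shortlist(pool, keep=5)
for candidate in top:
    print(candidate, round(toy_score(candidate), 2))
```

Everything of value hinges on the scorer: a shortlist is only as good as the predictor that ranks it, which is why promising candidates still need months of laboratory testing.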

SRI International is collaborating on such an AI tool, which uses deep learning to generate many novel drug candidates that scientists can then assess for efficacy. This is a game-changer for drug discovery, but it can still take many months before a promising candidate becomes a viable treatment.

In theory, AIs could be used to predict the evolution of the coronavirus too. Inam imagines running unsupervised learning algorithms to simulate all possible evolution paths. You could then add potential vaccines to the mix and see if the viruses mutate to develop resistance. “This will allow virologists to be a few steps ahead of the viruses and create vaccines in case any of these doomsday mutations occur,” he says.

It’s an exciting possibility, but a far-off one. We don’t yet have enough information about how the virus mutates to be able to simulate it this time around.

In the meantime, the ultimate barrier may be the people in charge. “What I’d most like to change is the relationship between policymakers and AI,” says Wang. AI will not be able to predict disease outbreaks by itself, no matter how much data it gets. Getting leaders in government, businesses, and health care to trust these tools will fundamentally change how quickly we can react to disease outbreaks, he says. But that trust needs to come from a realistic view of what AI can and cannot do now—and what might make it better next time.

Making the most of AI will take a lot of data, time, and smart coordination between many different people. All of which are in short supply right now.
