Inside Amazon’s plan for Alexa to run your entire life

I started using Alexa before it was cool. I bought a first-generation Echo a few months after its launch because Amazon.com showed me a banner ad as I was shopping for new speakers. After it arrived, my then-roommate, a software engineer at Google, eagerly compared Alexa’s capabilities with those of her Google Assistant. Alexa didn’t really measure up. But as far as I was concerned, it did everything I wanted: it played my favorite songs, sounded my morning alarms, and sometimes told me the news and weather.

Five years later, my simple desires have been eclipsed by Amazon’s ambitions. Alexa is now distributed everywhere, capable of controlling more than 85,000 smart home products from TVs to doorbells to earbuds. It can execute over 100,000 “skills” and counting. It processes billions of interactions a week, generating huge quantities of data about your schedule, your preferences, and your whereabouts. Alexa has turned into an empire, and Amazon is only getting started.

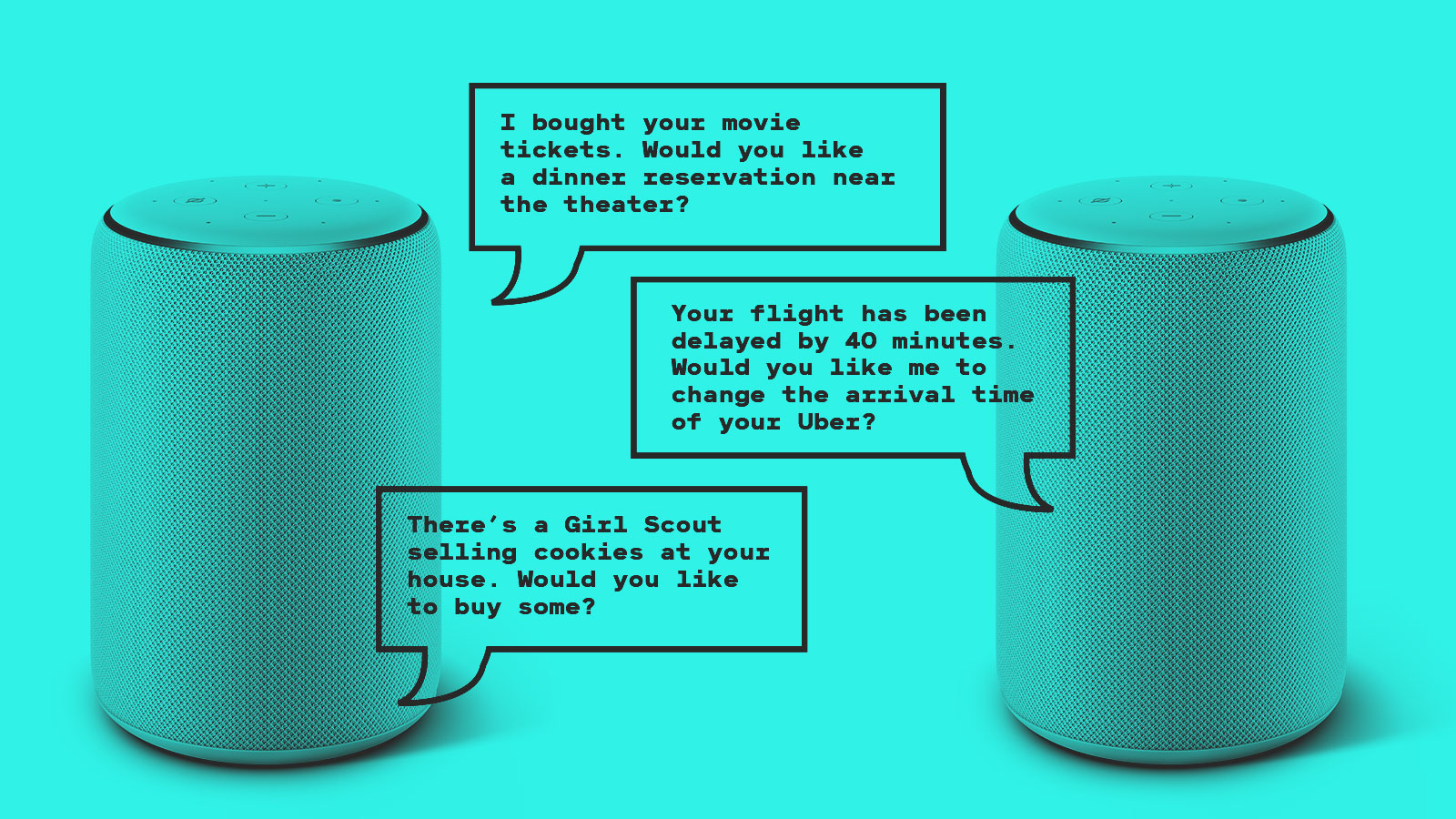

Speaking with MIT Technology Review, Rohit Prasad, Alexa’s head scientist, has now revealed further details about where Alexa is headed next. The crux of the plan is for the voice assistant to move from passive to proactive interactions. Rather than wait for and respond to requests, Alexa will anticipate what the user might want. The idea is to turn Alexa into an omnipresent companion that actively shapes and orchestrates your life. This will require Alexa to get to know you better than ever before.

In fact, Prasad, who will outline his vision for Alexa’s future at Web Summit in Lisbon, Portugal, later today, has already given the world a sneak preview of what this shift might look like. In June at the re:Mars conference, he demoed a feature called Alexa Conversations, showing how it might be used to help you plan a night out. Instead of manually initiating a new request for every part of the evening, you would need only to begin the conversation, for example by asking to book movie tickets. Alexa would then follow up to ask whether you also wanted to make a restaurant reservation or call an Uber.

To power this transition, Amazon needs both hardware and software. In September, the tech giant launched a suite of “on the go” Alexa products, including the Echo Buds (wireless earphones) and Echo Loop (a smart ring). All these new products let Alexa listen to and log data about a dramatically larger portion of your life, the better to offer assistance informed by your whereabouts, your actions, and your preferences.

From a software perspective, these abilities will require Alexa to use new methods for processing and understanding all the disparate sources of information. In the last five years, Prasad’s team has focused on building the assistant’s mastery of AI fundamentals, like basic speech and video recognition, and expanding its natural-language understanding. On top of this foundation, they have now begun developing Alexa’s intelligent prediction and decision-making abilities and—increasingly—its capacity for higher-level reasoning. The goal, in other words, is for Alexa’s AI abilities to get far more sophisticated within a few years.

A more intelligent Alexa

Here’s how Alexa’s software updates will come together to execute the night-out planning scenario. To follow up on a movie ticket request with prompts for dinner and an Uber, Alexa relies on a neural network that learns, from billions of user interactions a week, which skills are commonly used with one another. This is where intelligent prediction comes into play. When enough users book a dinner after a movie, Alexa will package the skills together and recommend them in conjunction.
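To make the idea of learning which skills go together more concrete, here is a minimal sketch. It is not Amazon's method: the article describes a neural network, while this example swaps in plain co-occurrence counting, which captures the same intuition in a few lines. The skill names, the session data, and the `min_support` threshold are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def count_skill_pairs(sessions):
    """Count how often each unordered pair of skills appears in the same session."""
    pair_counts = Counter()
    for skills in sessions:
        # Deduplicate and sort so each pair has one canonical ordering.
        for pair in combinations(sorted(set(skills)), 2):
            pair_counts[pair] += 1
    return pair_counts


def suggest_follow_ups(skill, pair_counts, min_support=2):
    """Return skills seen alongside `skill` at least `min_support` times, most frequent first."""
    scores = Counter()
    for (a, b), n in pair_counts.items():
        if n < min_support:
            continue
        if a == skill:
            scores[b] = n
        elif b == skill:
            scores[a] = n
    return [other for other, _ in scores.most_common()]


# Hypothetical usage sessions; each list is the set of skills one user invoked in one evening.
sessions = [
    ["movie_tickets", "restaurant", "ride"],
    ["movie_tickets", "restaurant"],
    ["movie_tickets", "ride"],
    ["weather"],
    ["movie_tickets", "restaurant", "ride"],
]

pair_counts = count_skill_pairs(sessions)
follow_ups = suggest_follow_ups("movie_tickets", pair_counts)
print(follow_ups)
```

In this toy data, a movie ticket request would be followed by suggestions for both a restaurant and a ride, because each appears alongside movie tickets in three of the five sessions.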

But reasoning is required to know what time to book the Uber. Taking into account your and the theater’s location, the start time of your movie, and the expected traffic, Alexa figures out when the car should pick you up to get you there on time.
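The arithmetic behind that pickup time can be sketched by working backward from the movie's start. This is only an illustration under stated assumptions: the function name, the 15-minute buffer before the movie, the date, and the 25-minute drive estimate are all made up for the example, and a real system would get the drive time from a traffic service.

```python
from datetime import datetime, timedelta


def pickup_time(movie_start, drive_minutes, buffer_minutes=15):
    """Work backward from the movie start to the moment the car should pick you up.

    drive_minutes: assumed traffic-adjusted travel time to the theater.
    buffer_minutes: assumed slack for finding seats before the movie begins.
    """
    arrive_by = movie_start - timedelta(minutes=buffer_minutes)
    return arrive_by - timedelta(minutes=drive_minutes)


# Hypothetical evening: a 7:30 pm movie and a 25-minute drive in expected traffic.
movie_start = datetime(2019, 11, 5, 19, 30)
pickup = pickup_time(movie_start, drive_minutes=25)
print(pickup.strftime("%H:%M"))  # 18:50
```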

Prasad imagines many other scenarios that might require more complex reasoning. One such skill, for example, would allow you to ask your Echo Buds where the tomatoes are while you’re standing in Whole Foods. The Buds would need to register that you’re in a Whole Foods, access a map of its floor plan, and then tell you the tomatoes are in aisle seven.

In another scenario, you might ask Alexa through your communal home Echo to send you a notification if your flight is delayed. When it’s time to do so, perhaps you are already driving. Alexa needs to realize (by identifying your voice in your initial request) that you, not a roommate or family member, need the notification—and, based on the last Echo-enabled device you interacted with, that you are now in your car. Therefore, the notification should go to your car rather than your home.
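The routing decision in this scenario comes down to two lookups: who made the request, and which device that person used most recently. The sketch below assumes speaker identification has already happened and produced a user ID; the `Interaction` record, the device names, and the fallback device are hypothetical and not drawn from any Alexa API.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    user_id: str      # speaker, assumed to be already identified by voice
    device_id: str    # device that heard the interaction
    timestamp: float  # seconds since an arbitrary reference point


def route_notification(requesting_user, interactions, default_device):
    """Pick the device the requesting user interacted with most recently."""
    own = [i for i in interactions if i.user_id == requesting_user]
    if not own:
        # No history for this user: fall back to the device that took the request.
        return default_device
    return max(own, key=lambda i: i.timestamp).device_id


# Hypothetical log: Alice asks at home, a roommate uses the same Echo, then Alice talks to her car.
interactions = [
    Interaction("alice", "home_echo", 100.0),
    Interaction("bob", "home_echo", 250.0),
    Interaction("alice", "car", 300.0),
]

target = route_notification("alice", interactions, default_device="home_echo")
print(target)  # car
```

Filtering by user before comparing timestamps is what keeps the roommate's later use of the home Echo from pulling the notification back to the house.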

This level of prediction and reasoning will also need to account for video data as more and more Alexa-compatible products include cameras. Let’s say you’re not home, Prasad muses, and a Girl Scout knocks on your door selling cookies. The Alexa on your Amazon Ring, a camera-equipped doorbell, should register (through video and audio input) who is at your door and why, know that you are not home, send you a note on a nearby Alexa device asking how many cookies you want, and order them on your behalf.

To make this possible, Prasad’s team is now testing a new software architecture for processing user commands. It involves filtering audio and visual information through many more layers. First Alexa needs to register which skill the user is trying to access among the roughly 100,000 available. Next it will have to understand the command in the context of who the user is, what device that person is using, and where. Finally it will need to refine the response on the basis of the user’s previously expressed preferences.

“This is what I believe the next few years will be about: reasoning and making it more personal, with more context,” says Prasad. “It’s like bringing everything together to make these massive decisions.”

The elephant in the room

From a technical perspective, all this would be an incredible achievement. What Prasad is talking about—combining various data sources and machine-learning methods to conduct high-level reasoning—has been a goal of artificial-intelligence researchers for decades.

From a consumer’s perspective, however, these changes also have critical privacy implications. Prasad’s vision effectively assumes Alexa will follow you everywhere, know a fair bit about what you’re up to at any given moment, and be the primary interface for how you coordinate your life. At a baseline, this requires hoovering up enormous amounts of intimate details about your life. Some worry that Amazon will ultimately go far beyond that baseline by using your data to advertise and market to you. “This is ultimately about monetizing the daily lives of individuals and groups of people,” says Jeffrey Chester, the executive director of the Center for Digital Democracy, a consumer privacy advocacy organization based in Washington, DC.

When pressed on this point, Prasad emphasized that his team has made it easier for users to periodically auto-delete their data and opt out of human review. Neither option actually keeps the data from being used to train Alexa’s myriad machine-learning models, though. In fact, Prasad alluded to ongoing research that would switch Alexa’s training process to one where models can quickly be updated anytime there is new user data, more or less guaranteeing that the value from said data will be captured before it’s disposed of. In other words, auto-deleting your data will mean only that it won’t still be around to train future models once training algorithms have been updated; for current models, your data would be used in roughly the same way. (In response to follow-up requests, an Amazon spokesperson said the company did not sell data collected by Alexa to third-party advertisers or use it to target advertising, unless the user was accessing a service through Alexa, such as Amazon.com.)

Jen King, the director for privacy at Stanford Law School’s Center for Internet and Society, says these types of data controls are far too superficial. “If you want to give people meaningful control, then you have to be able to respect their decision to completely opt out or give them more choices over how their data is being used,” she says. “Giving somebody functional help in a location-specific way could be done in an extremely privacy-preserving manner. I don’t think that scenario has to be inherently problematic.”

In practice, King says, this would mean several things. First, at a bare minimum, Amazon should have users opt in rather than opt out to letting their data be used. Second, Amazon should be more transparent about what that data is being used for. Currently, when you delete your data, it’s not clear what the company may have already done with it. “Imagine that you have an AI surveillance camera in your home and you forgot it was on and you were walking around the house naked,” she says. “As a consumer it would be useful to know, when you delete those files, if the system has already used them to train whatever algorithm it’s using.”

Finally, Amazon should give users more flexibility about when and where it can use their data. Users may be happy, for example, to give up their own data while wanting their kids’ to be off limits. “Tech companies tend to design these products with this idea that it’s all or nothing,” she says. “I think that’s a really misguided way to approach it. People may want some of the convenience of these things, but that doesn’t mean they want them in every facet of their life.”

Prasad’s ultimate vision is to make Alexa available and useful for everyone. Even in developing countries, he imagines cheaper versions that people can access on their smartphones. “To me we are on a journey of shifting the cognitive load on routine tasks,” he says. “I want Alexa to be a productivity enhancer ... to be truly ubiquitous so that it works for everyone.”
