Why AI is a threat to democracy—and what we can do to stop it

Amy Webb, futurist, NYU professor, and award-winning author, has spent much of the last decade researching, discussing, and meeting with people and organizations about artificial intelligence. “We’ve reached a fever pitch in all things AI,” she says. Now it’s time to step back to see where it’s going.

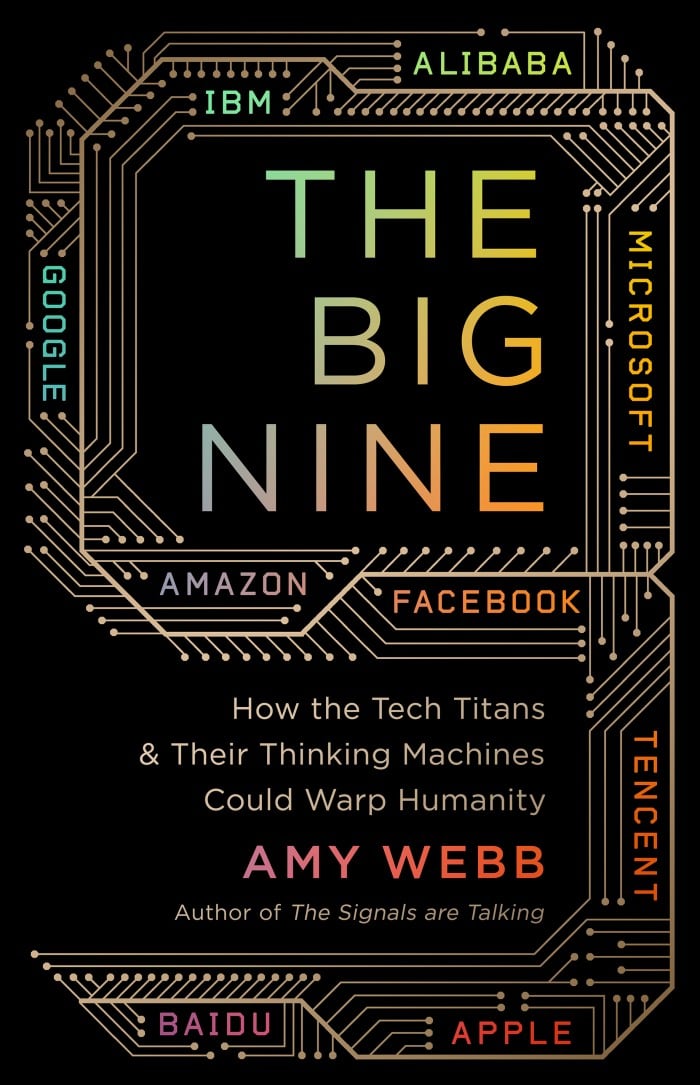

This is the task of her new book, The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity, where she takes a bird’s-eye view of trends that, she warns, have put the development of technology on a dangerous path. In the US, Google, Microsoft, Amazon, Facebook, IBM, and Apple (the “G-MAFIA”) are hamstrung by the relentless short-term demands of a capitalistic market, making long-term, thoughtful planning for AI impossible. In China, Tencent, Alibaba, and Baidu are consolidating and mining massive amounts of data to fuel the government’s authoritarian ambitions.

If we don’t change this trajectory, argues Webb, we could be headed straight for catastrophe. But there is still time to act, and a role for everyone to play. MIT Technology Review sat down with her to speak about why she’s worried and what she thinks we can do about it.

The following Q&A has been condensed and edited for clarity.

You mention that we’re currently seeing a convergence of worrying technological, political, and economic trends. Can you expand on what the technological trends are?

When you talk to a researcher working in the field, they will tell you that it’s going to be a very long time before we see a lot of the promises that have been made about AI: things like full automation in vehicles, absolute recognition, or artificial general intelligence—AGI—systems that are capable of cognition and more human-like thought.

From my vantage point, looking to the horizon for the day we have some kind of walking, talking machine, or a machine with a disembodied voice making autonomous decisions, somewhat misses the point. We’re already seeing billions of tiny advancements that will have a compounding effect over time and lead to systems that can autonomously make many decisions at once.

The DeepMind team, for example, has been hard at work on teaching machines how to beat humans playing games. They’ve leapt pretty far ahead in areas like hierarchical reinforcement learning and multitask learning. The latest version of its AlphaGo algorithm, AlphaZero, is capable of learning how to play three games at once without a human in the loop. That’s a fairly big leap. There’s also the very new field of generative adversarial networks, where with a decent-sized corpus of images, you can now generate human faces that look very, very realistic.

These advancements are not as sexy or as exciting as what we’ve been told about AGI. But if you’re able to take the 40,000-foot view, you can see that we’re heading into a situation in which systems will be making choices for us. And we have to stop and ask ourselves what happens when those systems put aside human strategy in favor of something totally unknown to us.

What about the political and economic trends? Can you describe the ones most worrying to you?

In the United States, ideas can flow freely and spread unencumbered. This is the way Silicon Valley was founded. It has bred both competition and innovation, which is how we got to where we are now with AI among other kinds of technologies.

However, in the US, we also suffer from a tragic lack of foresight. Rather than create a grand strategy for AI or for our long-term futures, the federal government has stripped funding from science and tech research. So the money must come from the private sector. But investors also expect some kind of return. That’s a problem. You can’t schedule your R&D breakthroughs when you’re working on fundamental technology and research. It would be terrific if the big tech companies had the luxury of working really hard without having to produce an annual conference to show off their latest and greatest whiz-bang thing. Instead, we now have countless examples of bad decisions that somebody in the G-MAFIA made, probably because they were working fast. We’re starting to see the negative effects of the tension between doing research that’s in the best interest of humanity and making investors happy.

Now that would be bad enough as it is, right? But this is all happening at the same time that there’s a tremendous amount of power being consolidated in China. China has a sovereign wealth fund devoted to fundamental basic research in AI. They’re throwing huge amounts of money at AI. And they have a totally different idea than the US when it comes to privacy and data. This means they have way more data that can be mined and refined. With a central authority, it’s super easy for the government to test and build AI services that incorporate data from 1.3 billion people. And that’s just within their own country.

Then they have the Belt and Road Initiative, which looks like a traditional infrastructure program but is partly also digital. It isn’t just about building roads and bridges; it’s also about building 5G networks and laying fiber, and about mining and refining data abroad. The deployment of these technologies is a risk to people who care about things like freedom of speech and Western democratic ideals.

Why should we be striving for Western democratic ideals?

It’s a great question. I’ve lived in China, in Japan, and obviously, in the United States. And you could look at the state of our country right now and what’s happening in China and wonder, is that really the worst thing? China’s social credit scoring system, by the way, sounds freakish and awful to Americans, but what a lot of people don’t realize is that self-reporting and monitoring behavior within villages and communities has been part of Chinese culture forever. The social credit score kind of just automates that. So yeah, that’s a terrific question.

I guess what I would say is if I were to look at the idealized version of Chinese communism and the idealized version of Western democracy, I would pick the Western democracy because I think there is a better opportunity for the free flow of ideas and for everyday people to succeed. I think that giving people incentives for individual and personal achievement is a great way to lift up a society and helps us reach our individual potential.

With the direction that the world is headed with AI today, is that a fair comparison? Should we be comparing the idealized versions of Chinese communism with Western democracy, or the worst versions of the two?

That’s a great question because you could argue that pieces of the AI ecosystem are already impacting our Western democratic ideals in a truly negative way. Obviously, everything that’s happened with Facebook serves as an example. But also look what’s going on with the anti-vaxxer community. They’re spreading totally incorrect information about vaccines and basic science. Our American traditions will say freedom of speech, platforms are platforms, we need to let people express themselves. Well, the challenge with that is that algorithms are making choices about editorial content that are leading people to make very bad decisions and getting children sick as a result.

The problem is our technology has become more and more sophisticated, but our thinking on what free speech is and what a free market economy looks like has not become as sophisticated. We tend to resort to very basic interpretations: Free speech means all speech is free unless it butts up against libel law, and that’s the end of the story. That’s not the end of the story. We need to start having a more sophisticated and intelligent conversation about our current laws, our emerging technology, and how we can get those two to meet in the middle.

In other words, you have faith that we will evolve from where we are now to a more idealized version of Western democracy. And you would much prefer that to idealized Chinese communism.

Yeah, I have faith that it’s possible. My huge concern is that everybody is waiting, that we’re dragging our heels and it’s going to take a true catastrophe to make people take action, as though the place we’ve arrived at isn’t catastrophic. But the fact that measles is back in the state of Washington to me is a catastrophic outcome. So is what’s happened in the wake of the election. Regardless of what side of the political spectrum you’re on, I cannot imagine anybody today thinks that the current political climate is good for our futures.

So I absolutely believe that there is a path forward. But we need to get together and bridge the gap between Silicon Valley and DC so that we can all steer the boat in the same direction.

What do you recommend government, companies, universities, and individual consumers do?

The developmental track of AI is a problem, and every one of us has a stake. You, me, my dad, my next-door neighbor, the guy at the Starbucks that I’m walking past right now. So what should everyday people do? Be more aware of who’s using your data and how. Take a few minutes to read work written by smart people and spend a couple minutes to figure out what it is we’re really talking about. Before you sign your life away and start sharing photos of your children, do that in an informed manner. If you’re okay with what it implies and what it could mean later on, fine, but at least have that knowledge first.

Businesses and investors can’t expect to rush a product over and over again. It is setting us up for problems down the road. So they can do things like shore up their hiring processes, significantly increase their efforts to improve inclusivity, and make sure their staff are more representative of what the real world looks like. They can also put on some brakes. Any investment that’s made into an AI company or project or whatever it might be should also include funding and time for checking things like risk and bias.

Universities must create space in their programs for hybrid degrees. They should incentivize CS students to study comparative literature, world religions, microeconomics, cultural anthropology, and similar courses in other departments. They should champion dual degree programs in computer science and international relations, theology, political science, philosophy, public health, education, and the like. Ethics should not be taught as a stand-alone class, something to simply check off a list. Schools must incentivize even tenured professors to weave complicated discussions of bias, risk, philosophy, religion, gender, and ethics into their courses.

One of my biggest recommendations is the formation of GAIA, what I call the Global Alliance on Intelligence Augmentation. At the moment people around the world have very different attitudes and approaches when it comes to data collection and sharing, what can and should be automated, and what a future with more generally intelligent systems might look like. So I think we should create some kind of central organization that can develop global norms and standards, some kind of guardrails to imbue not just American or Chinese ideals inside AI systems, but worldviews that are much more representative of everybody.

Most of all, we have to be willing to think about this much longer-term, not just five years from now. We need to stop saying, “Well, we can’t predict the future, so let’s not worry about it right now.” It’s true, we can’t predict the future. But we can certainly do a better job of planning for it.

An abridged version of this story originally appeared in our AI newsletter The Algorithm.
