We tried teaching an AI to write Christmas movie plots. Hilarity ensued. Eventually.

Perhaps more than anyone, research scientist Janelle Shane popularized AI humor. You may have seen her work. During Halloween this year, she partnered with the New York Times to generate costume names with a neural network. The results—and illustrations—are pretty fantastic: “Sexy Minecraft Person” and “Vampire Chick Shark,” to name a few.

Shane has made an art out of AI-generated comedy on her delightful blog AI Weirdness. She’s fed neural networks everything from cocktail recipes and pie names to horror movie titles and Disney song lyrics. The results they churn out are always hilarious.

Inspired, senior AI editor Will Knight and I embarked on a challenge to create a comedic masterpiece in the style of Shane. So we fed plot summaries of 360 Christmas movies, courtesy of Wikipedia, into a machine-learning algorithm to see if we could get it to spit out the next big holiday blockbuster. Suffice it to say I now empathize with researchers who describe training neural nets as more of an art than a science. As I also discovered, getting them to be funny is actually pretty damn hard.

The process

The algorithm we used is called textgenrnn, the same one Shane used in her collaboration with the Times. Textgenrnn has two modes: you can either use letters to generate words in the style of other words, or use words to generate sentences in the style of other sentences.

Each mode comes with the same settings, which you can tune in various attempts to coax out good results. I primarily focused on three of the settings: the number of layers, the number of epochs, and the temperature.

Allow me to explain. Layers, here, refers to the complexity of the neural network: the more layers it has, the more complicated the data it can handle. The number of epochs is the number of times it gets to look at the training data before spitting out its final results. And the temperature is like a creativity setting: the lower the temperature, the more the network will choose common words in the training data set versus those that rarely appear.
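To make the temperature knob concrete, here is a minimal sketch of the standard temperature-scaling trick, assuming a made-up set of next-word probabilities. The word list, the numbers, and the function names are all hypothetical, and this is not necessarily how textgenrnn does it internally; it only illustrates the general idea.

```python
import math
import random


def apply_temperature(probs, temperature):
    """Rescale a probability distribution by raising each p to 1/T, then renormalize."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {
        word: math.exp(math.log(p) / temperature)
        for word, p in probs.items()
        if p > 0
    }
    total = sum(scaled.values())
    return {word: value / total for word, value in scaled.items()}


def sample_word(probs, temperature, rng=random):
    """Draw one word from the temperature-adjusted distribution."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    weights = [adjusted[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical probabilities for the next word (invented for illustration).
next_word_probs = {"christmas": 0.6, "santa": 0.3, "toothless": 0.1}

cold = apply_temperature(next_word_probs, 0.2)  # low temperature: plays it safe
hot = apply_temperature(next_word_probs, 2.0)   # high temperature: takes more risks

print("low temperature: ", cold)
print("high temperature:", hot)
print("sampled at 2.0:  ", sample_word(next_word_probs, 2.0, random.Random(0)))
```

At a low temperature the common word soaks up nearly all the probability; at a high temperature the rare words get a much better shot, which is where both the weirdness and the gibberish come from.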

Of course, I knew none of this while I was futzing around. Instead, I uploaded my movie plots in a text file and began blindly tweaking the knobs. Here are some examples of what I began to see, with high creativity settings and a growing number of epochs:

Epoch 4

Mary must friends from magic putting christmas stop nathan endeavors the.

Rubs serious a resort bet elves cared the a in day tallen shady with christmas unveiling retrieve died california awaits is groundhog after back of the wise janitor christmas traumatized the to to discover popular to his community.

A survive before show in town they the.

Epoch 6

And boy son working whose issues born religious can a.

Max mccallister evie to who true to her christmas the in partner question.

Orphan and mccallister apartment thief most holiday.

Epoch 8

Suburban owner away team short evil to at that she the naughty attempt naughty into of escape life learns neighborhood were their house circumstances visit you to.

WWII find retriever to to to the friends for.

A mother couple a a takes pacifist three cheap family tells cozy presents clone to toothless.

If you’re reading these and thinking, Those are incomprehensible and not funny, then you, friend, and I are of the same mind.

At first I assumed I was doing something wrong; I hadn’t quite cracked the art of training the neural network correctly. But after dozens of attempts on different settings, I finally came to the conclusion that this is as good as it gets. Most of the sentences will be flat-out terrible, and on the rare occasion, you will get a gem.

The moral

Part of the problem, Shane explained when I spoke to her, was my small training data set: 360 data points is small potatoes compared with the millions typically used for high-quality results. Part of it was also textgenrnn itself: the algorithm, she said, just isn’t that good at constructing sentences compared with alternatives. (“Do we know why?” I asked Shane. “I don’t think even the guy who made textgenrnn really knows,” she said. “It could even be a bug.” Ah, the beauty of black box algorithms.)

But the main reason is the inherent limitation of generating sentences with a neural net. Even if I’d used better data and a better algorithm, struggling to achieve coherence would have been entirely normal.

This makes sense if you think about what’s happening under the hood. Machine-learning algorithms are really good at using statistics to find and apply patterns in data. But that’s about it. So in the context of constructing sentences, you’re choosing each consecutive word based only on the probability that it would appear after the previous word. It’s like trying to compose an e-mail with predictive text. The result would be riddled with non sequiturs, switches between singular and plural, and a whole lot of confusion over parts of speech.
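The "previous word only" idea can be sketched with a toy bigram model. To be clear about the assumptions: this is a simple word-counting stand-in rather than a neural network, the three training plots are invented for illustration, and the function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def build_bigram_counts(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the start and end of a sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, rng, max_words=20):
    """Build a sentence by repeatedly sampling a follower of the previous word."""
    word, output = "<s>", []
    while len(output) < max_words:
        followers = counts[word]
        word = rng.choices(list(followers), weights=list(followers.values()), k=1)[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


# Hypothetical training data (not the Wikipedia summaries from the article).
toy_plots = [
    "a lonely elf must save christmas",
    "a family must stop a thief before christmas",
    "santa must save a lonely family",
]

counts = build_bigram_counts(toy_plots)
sentence = generate(counts, random.Random(0))
print(sentence)
```

Every pair of neighboring words in the output appeared somewhere in the training text, but nothing keeps the sentence as a whole on track, which is exactly how you end up with non sequiturs.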

So really, it takes a lot of manual labor to make a neural network spit out gibberish that humans would consider remotely humorous.

“For some data sets, I’m only showing people maybe one out of a hundred things it generates,” Shane admitted. “I’m doing really well if one out of ten is actually funny and worth showing.” In many instances, she continued, it takes her more time to curate the results than to train the algorithm.

Lesson learned: neural networks aren’t that funny. It’s the humans who are.

The results

As a bonus, here are some of the best algorithmically generated plot summaries Will and I were able to come up with, lightly cleaned up. We also generated Christmas movie titles using word mode for good measure.

Synopses

A family of the Christmas terrorist and offering the first time to be a charlichhold for a new town to fight.

A story of home-life father of the Christmas story.

The reclusive from Christmas.

A woman from chaos adopted home believes.

A princess ogre nearby cross by on the Christmas.

A gardener detective but country murderer magical suddenly Christmas the near elf.

A intercepting suffers and a friends up change Christmas with his and save Christmas time.

A family man and a special estranged for Christmas.

A stranded on Christmas Eve to the New York family before Christmas.

Santa.

The Scrooge-leads Bad by Santa, since Anima.

A man returns to the singer who is forced to return his life with a couple to help her daughter for Christmas.

An angel of Santa’s hitch from the plant.

Lonely courier village newspaper by home destroy Christmas Christmas Christmas the prancer.

Babysitter boy tries to party the Christmas in of for more Christmas.

Titles

The Christmas Store

Santa Christmas Christmas

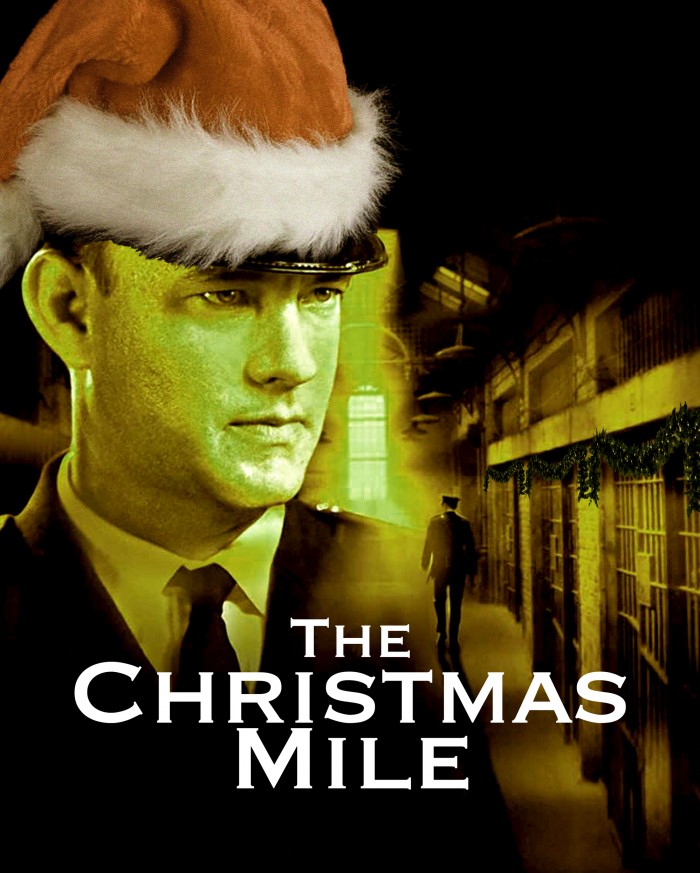

The Christmas Mile

Fight Christmas

The Nighht Claus

I Santa Manta Christmas Porie

Babee Christmas

A Christmas StorK

The Grange Christmas

The Santa Christmas Pastie Christmas

Christmas Caper

A Christmas Mister

The Lick Christmas

Mrack Me Christmas Satra

The Christmas Catond 2

Santa Bach Christmas

Christmas Pinta

Christmas Cast

A Christmas to Come

It Santa

Fromilly
