Fake America great again

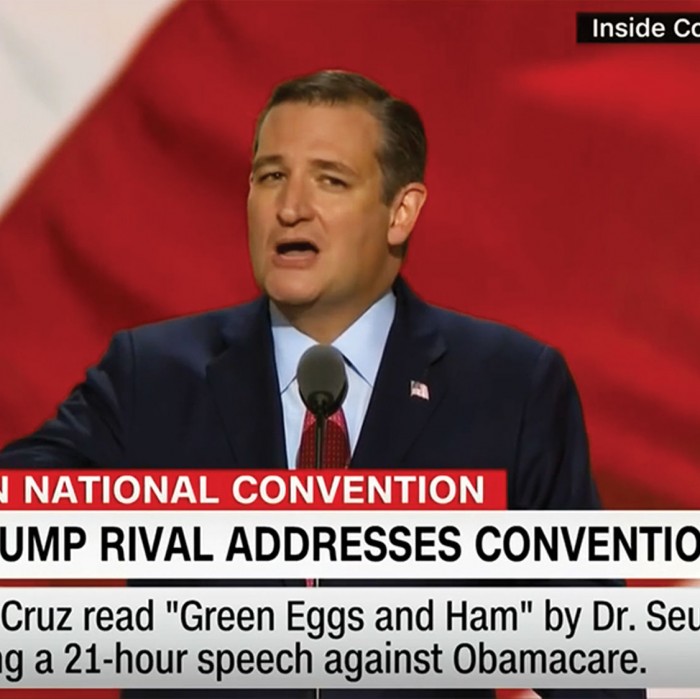

Guess what? I just got hold of some embarrassing video footage of Texas senator Ted Cruz singing and gyrating to Tina Turner. His political enemies will have great fun showing it during the midterms. Donald Trump will call him “Dancin’ Ted.”

Okay, I’ll admit it—I created the video myself. But here’s the troubling thing: making it required very little video-editing skill. I downloaded and configured software that uses machine learning to perform a convincing digital face-swap. The resulting video, known as a deepfake, shows Cruz’s distinctively droopy eyes stitched onto the features of actor Paul Rudd doing lip-sync karaoke. It isn’t perfect—there’s something a little off—but it might fool some people.

Photo fakery is far from new, but artificial intelligence will completely change the game. Until recently only a big-budget movie studio could carry out a video face-swap, and it would probably have cost millions of dollars. AI now makes it possible for anyone with a decent computer and a few hours to spare to do the same thing. Further machine-learning advances will make even more complex deception possible—and make fakery harder to spot.

These advances threaten to further blur the line between truth and fiction in politics. Already the internet accelerates and reinforces the dissemination of disinformation through fake social-media accounts. “Alternative facts” and conspiracy theories are common and widely believed. Fake news stories, aside from their possible influence on the last US presidential election, have sparked ethnic violence in Myanmar and Sri Lanka over the past year. Now imagine throwing new kinds of real-looking fake videos into the mix: politicians mouthing nonsense or ethnic insults, or getting caught behaving inappropriately on video—except it never really happened.

“Deepfakes have the potential to derail political discourse,” says Charles Seife, a professor at New York University and the author of Virtual Unreality: Just Because the Internet Told You, How Do You Know It’s True? Seife confesses to astonishment at how quickly things have progressed since his book was published, in 2014. “Technology is altering our perception of reality at an alarming rate,” he says.

Are we about to enter an era when we can’t trust anything, even authentic-looking videos that seem to capture real “news”? How do we decide what is credible? Whom do we trust?

These still images of Ted Cruz and Paul Rudd were taken from the footage that was fed to a face-swapping program.

Real fake

Several technologies have converged to make fakery easier, and they’re readily accessible: smartphones let anyone capture video footage, and powerful computer graphics tools have become much cheaper. Add artificial-intelligence software, which allows things to be distorted, remixed, and synthesized in mind-bending new ways. AI isn’t just a better version of Photoshop or iMovie. It lets a computer learn how the world looks and sounds so it can conjure up convincing simulacra.

I created the clip of Cruz using OpenFaceSwap, one of several face-switching programs that you can download for free. You need a computer with an advanced graphics chip, and this can set you back a few thousand bucks. But you can also rent access to a virtual machine for a few cents per minute using a cloud machine-learning platform like Paperspace. Then you simply feed in two video clips and sit back for a few hours as an algorithm figures out how each face looks and moves so that it can map one onto the other. Getting things to work is a bit of an art: if you choose clips that are too different, the result can be a nightmarish mishmash of noses, ears, and chins.

But the process is easy enough.

Face-swapping was, predictably, first adopted for making porn. In 2017, an anonymous Reddit user known as Deepfakes used machine learning to swap famous actresses’ faces into scenes featuring adult-movie stars, and then posted the results to a subreddit dedicated to leaked celebrity porn. Another Reddit user then released an easy-to-use interface, which led to a proliferation of deepfake porn as well as, for some odd reason, endless clips of the actor Nicolas Cage in movies he wasn’t really in. Even Reddit, a notoriously freewheeling hangout, banned such nonconsensual pornography. But the phenomenon persists in the darker corners of the internet.

OpenFaceSwap uses an artificial neural network, by now the go-to tool in AI. Very large, or “deep,” neural networks that are fed enormous amounts of training data can do all sorts of useful things, including finding a person’s face among millions of images. They can also be used to manipulate and synthesize images.

OpenFaceSwap trains a deep network to “encode” a face (a process similar to data compression), thereby creating a representation that can be decoded to reconstruct the full face. The trick is to feed the encoded data for one face into the decoder for the other. The neural network will then conjure, often with surprising accuracy, one face mimicking the other’s expressions and movements. The resulting video can seem wonky, but OpenFaceSwap will automatically blur the edges and adjust the coloring of the newly transplanted face to make things look more genuine.
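To make the encode-and-swap trick concrete, here is a minimal sketch in plain Python. It is not OpenFaceSwap's code. It assumes a toy setup in which each "face" is a four-number vector built from two "expression" values, and it swaps the deep networks such tools use for tiny linear maps trained by gradient descent. One encoder is shared by both identities, each identity gets its own decoder, and the swap is simply encoding a face of A and decoding it with B's decoder. The names `ToyFaceSwapper` and `make_faces` are hypothetical.

```python
import random

# Hypothetical toy: each "face" is a 4-number vector, not an image.


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class ToyFaceSwapper:
    """One shared linear encoder plus one linear decoder per identity."""

    def __init__(self, dim, latent, seed=0):
        rng = random.Random(seed)
        self.encoder = rand_matrix(latent, dim, rng)
        self.decoders = {"A": rand_matrix(dim, latent, rng),
                         "B": rand_matrix(dim, latent, rng)}

    def reconstruct(self, x, who):
        # Encode with the shared encoder, then decode with `who`'s decoder.
        return matvec(self.decoders[who], matvec(self.encoder, x))

    def loss(self, x, who):
        # Squared reconstruction error for one face.
        return sum((y - t) ** 2 for y, t in zip(self.reconstruct(x, who), x))

    def train_step(self, x, who, lr=0.05):
        # One gradient-descent step on the reconstruction error.
        dec = self.decoders[who]
        z = matvec(self.encoder, x)
        r = [y - t for y, t in zip(matvec(dec, z), x)]  # residual
        # Backpropagate through the decoder before updating it.
        back = [2 * sum(dec[i][k] * r[i] for i in range(len(r)))
                for k in range(len(z))]
        for i in range(len(dec)):
            for k in range(len(z)):
                dec[i][k] -= lr * 2 * r[i] * z[k]
        for k in range(len(z)):
            for j in range(len(x)):
                self.encoder[k][j] -= lr * back[k] * x[j]

    def swap(self, face_a):
        # The face-swap trick: encode a face of A, decode it as B.
        return self.reconstruct(face_a, "B")


def make_faces(basis1, basis2, n, rng):
    """Hypothetical faces: two random "expression" values mixed into a fixed look."""
    faces = []
    for _ in range(n):
        e1, e2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        faces.append([e1 * p + e2 * q for p, q in zip(basis1, basis2)])
    return faces


rng = random.Random(1)
faces_a = make_faces([1, 0.5, 0, 0], [0, 1, 0, 0.5], 20, rng)
faces_b = make_faces([0, 0, 1, 0.5], [0.5, 0, 0, 1], 20, rng)
model = ToyFaceSwapper(dim=4, latent=2)


def mean_loss():
    losses = [model.loss(x, "A") for x in faces_a] + [model.loss(x, "B") for x in faces_b]
    return sum(losses) / len(losses)


initial_loss = mean_loss()
for _ in range(300):
    for xa, xb in zip(faces_a, faces_b):
        model.train_step(xa, "A")
        model.train_step(xb, "B")
final_loss = mean_loss()
swapped = model.swap(faces_a[0])  # A's expression rendered by B's decoder
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}; swapped face {swapped}")
```

Because the encoder is shared, it is pushed to describe what the two faces have in common (here, the expression values), while each decoder learns what makes its own face look the way it does. That division of labor is what lets B's decoder "wear" A's expression.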

Left: OpenFaceSwap previews attempted face swaps during training. Early tries can often be a bit weird and grotesque.

Right: The software takes several hours to produce a good face swap. The more training data, the better the end result.

Similar technology can be used to re-create someone’s voice, too. A startup called Lyrebird has posted convincing demos of Barack Obama and Donald Trump saying entirely made-up things. Lyrebird says that in the future it will limit its voice duplications to people who have given their permission—but surely not everyone will be so scrupulous.

There are well-established methods for identifying doctored images and video. One option is to search the web for images that might have been mashed together. A more technical solution is to look for telltale changes to a digital file, or to the pixels in an image or a video frame. An expert can search for visual inconsistencies—a shadow that shouldn’t be there, or an object that’s the wrong size.

Dartmouth College’s Hany Farid, one of the world’s foremost experts, has shown how a scene can be reconstructed in 3-D in order to discover physical oddities. He has also demonstrated that subtle changes in pixel intensity in a video, indicating a person’s pulse rate, can be used to spot the difference between a real person and a computer-generated one. Recently one of Farid’s former students, now a professor at the State University of New York at Albany, has shown that irregular eye blinking can give away a face that’s been manipulated by AI.
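The pulse idea can be illustrated with a few lines of signal processing. This is a simplified stand-in, not Farid's published method: it assumes you already have the average brightness of a face region for every frame (the hypothetical `face_brightness` list), removes the mean, and uses a plain discrete Fourier transform to find the strongest rhythm between 0.7 and 4 Hz, roughly 42 to 240 beats per minute. The idea, as described above, is that a real face carries this faint rhythm and a computer-generated one may not. The function name, the frequency band, and the `peak_share` score are assumptions for illustration.

```python
import math


def estimate_pulse_bpm(brightness, fps, lo_hz=0.7, hi_hz=4.0):
    """Return (bpm, peak_share) for the strongest rhythm in the heart-rate band.

    brightness: mean pixel intensity of a face region, one value per frame.
    peak_share: fraction of in-band power held by the peak (hypothetical score).
    """
    n = len(brightness)
    mean = sum(brightness) / n
    signal = [v - mean for v in brightness]  # drop the constant (DC) level
    powers = {}
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k, in Hz
        if lo_hz <= freq <= hi_hz:
            re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
            im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
            powers[k] = re * re + im * im
    best = max(powers, key=powers.get)
    total = sum(powers.values())
    return best * fps / n * 60.0, powers[best] / total


# Synthetic example: 10 s at 30 fps, a faint 1.2 Hz "pulse" plus slow 0.2 Hz lighting drift.
fps = 30
face_brightness = [
    100 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
    + 2.0 * math.sin(2 * math.pi * 0.2 * t / fps)
    for t in range(10 * fps)
]
bpm, share = estimate_pulse_bpm(face_brightness, fps)
print(f"estimated pulse: {bpm:.1f} bpm (peak holds {share:.0%} of band power)")
```

The slow lighting drift is much stronger than the pulse, but it falls outside the heart-rate band, so the sketch still picks out the 1.2 Hz rhythm (72 beats per minute). Real footage is far noisier, which is why practical methods need careful face tracking and filtering.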

Still, most people can’t do this kind of detective work and don’t have time to study every image or clip that pops up on Facebook. So as visual fakery has become more common, there’s been a push to automate the analysis. And it turns out that deep learning is not only good at making stuff up but also well suited to scrutinizing images and videos for signs of fakery. This effort has only just begun, though, and it may ultimately be hindered by how realistic automated fakes become.

Networks of deception

One of the latest ideas in AI research involves turning neural networks against each other in order to produce even more realistic fakes. A “generative adversarial network,” or GAN, uses two deep neural networks: one trained to tell real images or video apart from generated ones, and another that learns over time how to outwit its counterpart. GANs can be trained to produce surprisingly realistic fake imagery.
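The tug-of-war between the two networks can be shown with a deliberately tiny example. This sketch is an illustration, not a real image GAN: the "real data" are numbers drawn from a bell curve centered on 3, the generator can only shift random noise by a learned offset `b`, and the discriminator is a one-variable logistic classifier. In each round the discriminator takes a gradient step to get better at telling real from fake, then the generator takes a step to look more real to that discriminator. The function `train_toy_gan` and all of its settings are hypothetical.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean=3.0, steps=2000, batch=64, lr_d=0.05, lr_g=0.2, seed=0):
    """Hypothetical 1-D GAN: returns discriminator weights (w, c) and generator offset b."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c): estimated chance x is real
    b = 0.0          # generator G(z) = z + b, with noise z ~ N(0, 1)
    for _ in range(steps):
        # Discriminator step: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            g = 1.0 - sigmoid(w * x + c)
            gw += g * x
            gc += g
        for x in fake:
            g = -sigmoid(w * x + c)
            gw += g * x
            gc += g
        w += lr_d * gw / batch
        c += lr_d * gc / batch
        # Generator step: gradient ascent on mean log D(G(z)), i.e. try to fool D.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gb = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr_g * gb
    return w, c, b


w, c, b = train_toy_gan()
print(f"generator offset b = {b:.2f} (real data centered on 3.0)")
```

Starting from 0, the generator's offset should settle near 3, the point where the discriminator can no longer separate real samples from fake ones. Image GANs play the same game with deep networks on both sides and millions of pixels instead of a single number.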

Beyond copying and swapping faces, GANs may make it possible to synthesize entire scenes and people that look quite real, turning a daytime scene into a nighttime one and dreaming up imaginary celebrities. GANs don’t work perfectly, but they are getting better all the time, and this is a hot area of research (MIT Technology Review named GANs one of its “10 Breakthrough Technologies” for 2018).

Most worrying, the technique could also be used to evade digital forensics. The US’s Defense Advanced Research Projects Agency invited researchers to take part in a contest this summer in which some developed fake videos using GANs and others tried to detect them. “GANs are a particular challenge to us in the forensics community because they can be turned against our forensic techniques,” says Farid. “It remains to be seen which side will prevail.”

This is the end

If we aren’t careful, this might result in the end of the world, or at least what seems like it.

In April, a supposed BBC news report announced the opening salvos of a nuclear conflict between Russia and NATO. The clip, which began circulating on the messaging platform WhatsApp, showed footage of missiles blasting off as a newscaster told viewers that the German city of Mainz had been destroyed along with parts of Frankfurt.

It was, of course, entirely fake, and the BBC rushed to denounce it. The video wasn’t generated using AI, but it showed the power of fake video, and how it can spread rumors at warp speed. The proliferation of AI programs will make such videos far easier to make, and even more convincing.

Even if we aren’t fooled by fake news, it might have dire consequences for political debate. Just as we are now accustomed to questioning whether a photograph might have been Photoshopped, AI-generated fakes could make us more suspicious about events we see shared online. And this could contribute to the further erosion of rational political debate.

In The Death of Truth, published this year, the literary critic Michiko Kakutani argues that alternative facts, fake news, and the general craziness of modern politics represent the culmination of cultural currents that stretch back decades. Kakutani sees hyperreal AI fakes as just the latest heavy blow to the concept of objective reality.

“Before the technology even gets good, the fact that it exists and is a way to erode confidence in legitimate material is deeply problematic,” says Renee DiResta, a researcher at Data for Democracy and one of the first people to identify the phenomenon of politically motivated Twitter misinformation campaigns.

Perhaps the greatest risk with this new technology, then, is not that it will be misused by state hackers, political saboteurs, or Anonymous, but that it will further undermine truth and objectivity itself. If you can’t tell a fake from reality, then it becomes easy to question the authenticity of anything. This already serves as a way for politicians to evade accountability.

President Trump has turned the idea of fake news upside down by using the term to attack any media reports that criticize his administration. He has also suggested that an incriminating clip of him denigrating women, released during the 2016 campaign, might have been digitally forged. This April, the Russian government accused Britain of faking video evidence of a chemical attack in Syria to justify proposed military action. Neither accusation was true, but the possibility of sophisticated fakery is increasingly diminishing the credibility of real information. In Myanmar and Russia new legislation seeks to prohibit fake news, but in both cases the laws may simply serve as a way to crack down on criticism of the government.

As the powerful become increasingly aware of AI fakery, it will become easy to dismiss even clear-cut video evidence of wrongdoing as nothing more than GAN-made digital deception.

The truth will still be out there. But will you know it when you see it?

Will Knight is a senior editor at MIT Technology Review who covers artificial intelligence.
