Why even a moth’s brain is smarter than an AI

One of the curious features of the deep neural networks behind machine learning is that they are surprisingly different from the neural networks in biological systems. While there are similarities, some critical machine-learning mechanisms have no analogue in the natural world, where learning seems to occur in a different way.

These differences probably account for why machine-learning systems lag so far behind natural ones in some aspects of performance. Insects, for example, can recognize odors after just a handful of exposures. Machines, on the other hand, need huge training data sets to learn. Computer scientists hope that understanding more about natural forms of learning will help them close the gap.

Enter Charles Delahunt and colleagues at the University of Washington in Seattle, who have created an artificial neural network that mimics the structure and behavior of the olfactory learning system in Manduca sexta moths. They say their system provides some important insights into the way natural networks learn, with potential implications for machines.

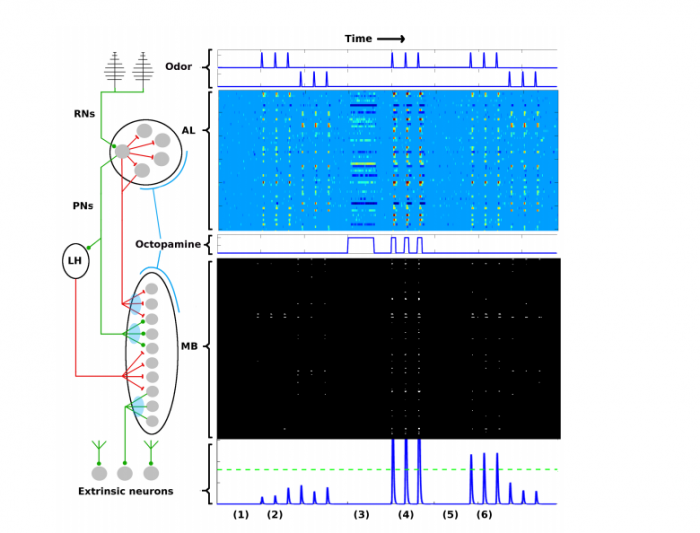

First some background. The olfactory learning system in moths is relatively simple and well mapped by neuroscientists. It consists of five distinct networks that feed information forward from one to the next.

The first is a system of around 30,000 chemical receptors that detect odors and send a rather noisy set of signals to the next level, known as the antenna lobe. This contains about 60 units, known as glomeruli, that each focus on specific odors.

The antenna lobe then sends neural odor codes to the mushroom body, which contains some 4,000 Kenyon cells and is thought to encode odors as memories.

Finally, the result is read out by a layer of extrinsic neurons, which number in the tens. These interpret the signals from the mushroom body as actions, such as “fly upwind.”

Several aspects of this system are entirely different from what’s found in machine-learning networks. For example, the antenna lobe encodes information in a low-dimensional parameter space but sends it to the mushroom body, which encodes it in a high-dimensional parameter space. By contrast, the layers in artificial neural networks tend to have similar dimensions.
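To make the jump in dimension concrete, here is a minimal Python sketch of a 60-unit odor code being expanded into a sparse 4,000-unit code. Only the two layer sizes come from the description above. The random wiring, the fan-in of 10 glomeruli per Kenyon cell, the 5 percent activity level, and the function names are assumptions chosen for illustration, not details of the researchers' model.

```python
import random

# Layer sizes quoted in the article; everything else is an illustrative assumption.
N_GLOMERULI = 60
N_KENYON = 4000
INPUTS_PER_KENYON = 10   # assumed fan-in, not from the article
ACTIVE_FRACTION = 0.05   # assumed sparsity of the mushroom-body code


def build_projection(rng):
    """For each Kenyon cell, pick a random subset of glomeruli to listen to."""
    return [rng.sample(range(N_GLOMERULI), INPUTS_PER_KENYON)
            for _ in range(N_KENYON)]


def encode(glomeruli, projection):
    """Expand a 60-dim odor code into a sparse 4,000-dim binary code."""
    # Each Kenyon cell sums the activity of the glomeruli it is wired to.
    drive = [sum(glomeruli[i] for i in inputs) for inputs in projection]
    # Only the most strongly driven cells fire (ties may add a few extra).
    k = int(ACTIVE_FRACTION * N_KENYON)
    threshold = sorted(drive, reverse=True)[k - 1]
    return [1 if d >= threshold else 0 for d in drive]


rng = random.Random(0)
projection = build_projection(rng)
odor = [rng.random() for _ in range(N_GLOMERULI)]  # made-up glomerular activity
code = encode(odor, projection)
print(len(code), sum(code))  # code length and number of active Kenyon cells
```

The point of the sketch is only the shape of the computation: a small, dense input is spread across a much larger layer in which few units are active at once.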

And in moths, the successful recognition of an odor triggers a reward mechanism in which neurons spray a chemical neurotransmitter called octopamine into the antenna lobe and mushroom body.

This is a crucial part of the learning process. Octopamine seems to help reinforce the neural wiring that leads to success. It is a key part of Hebbian learning, in which “cells that fire together wire together.” Indeed, neuroscientists have long known that moths do not learn without octopamine. But the role it plays isn’t well understood.
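The “fire together, wire together” rule can be written in a few lines. The sketch below is the textbook Hebbian update, in which a connection grows in proportion to the product of the activity on either side of it. It is not taken from the paper, and the function name, learning rate, and toy activity values are assumptions.

```python
def hebbian_update(weights, pre, post, learning_rate=0.01):
    """Return new weights after one Hebbian step.

    weights[i][j] connects input neuron j to output neuron i, and it grows by
    learning_rate * post[i] * pre[j], so only co-active pairs are strengthened.
    """
    return [[w + learning_rate * post_i * pre_j for w, pre_j in zip(row, pre)]
            for row, post_i in zip(weights, post)]


# Toy example: three input neurons, two output neurons, all weights start at zero.
weights = [[0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0]]
pre = [1.0, 0.0, 1.0]   # inputs 0 and 2 fire
post = [1.0, 0.0]       # only output 0 fires
weights = hebbian_update(weights, pre, post)
print(weights)  # only the connections from inputs 0 and 2 to output 0 have grown
```

Note that the update uses only locally available activity. Nothing in it requires an error signal to be sent back through the network.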

Learning in machines is very different. It relies on a process called backpropagation, which tweaks the neural connections in a way that improves outcomes. But information essentially travels backward through the network in this process, and there is no known analogue of it in nature.

To better understand the way moths learn, Delahunt and co created an artificial neural network that mimics the behavior of the natural one. “We constructed an end-to-end computational model of the Manduca sexta moth olfactory system which includes the interaction of the Antenna Lobe and Mushroom Body under octopamine stimulation,” they say.

The model is specifically designed to reproduce the behavior of the natural system at every level. In particular, the model simulates the noisy signals generated by the odor receptors and the change in dimension as information flows from the antenna lobe to the mushroom body, and it includes an analogue of the role played by octopamine.

And the results make for interesting reading. The model shows how the odor receptors produce a noisy signal that is pre-amplified by the antenna lobe. However, the change in dimension as the signal travels to the mushroom body has the effect of removing noise, and this allows the system to generate specific, unambiguous action signals like “fly upwind.”

The role of octopamine looks clearer, too. The simulations show that learning can occur without octopamine, but it is so slow as to be effectively useless. This implies that octopamine acts as a powerful accelerant to learning.
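One simple way to picture an accelerant is as a multiplier on the size of each Hebbian step. The toy Python sketch below counts how many co-activations a single connection needs to reach a target strength with and without such a multiplier. Treating octopamine as a plain gain factor, and every number used here, are assumptions for illustration. The model in the paper is considerably more detailed.

```python
def steps_to_learn(gain, target=1.0, base_rate=0.001, pre=1.0, post=1.0):
    """Count Hebbian steps needed for one weight to grow from 0 to `target`.

    `gain` stands in for octopamine: 1.0 means none, larger values mean each
    co-activation strengthens the connection more (an assumed simplification).
    """
    weight, steps = 0.0, 0
    while weight < target:
        weight += base_rate * gain * pre * post
        steps += 1
    return steps


slow = steps_to_learn(gain=1.0)    # no accelerant
fast = steps_to_learn(gain=50.0)   # hypothetical 50-fold boost
print(slow, fast)
```

In this toy setting the connection still reaches the target without the boost, but it needs far more exposures to do so, which mirrors the qualitative finding described above.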

But just how it does this is still up for discussion. Delahunt and co have their own ideas. “Perhaps it is a mechanism that allows the moth to work around intrinsic organic constraints on Hebbian growth of new synapses, constraints which would otherwise restrict the moth to an unacceptably slow learning rate,” they suggest.

Octopamine also has another role. Hebbian learning only reinforces connections that already exist, and that raises the question of how new wiring occurs. Delahunt and co say that octopamine opens new transmitting channels for wiring. “This expands the solution space the system can explore during learning,” they say.

And most impressive is that the simulated network learns in a similar way to the natural network. “Our model is able to robustly learn new odours, and our simulations of integrate-and-fire neurons match the statistical features of in vivo firing rate data,” say Delahunt and co.

This work could have significant implications for the design of synthetic neural networks that need to learn quickly. “From a machine learning perspective, the model yields bioinspired mechanisms that are potentially useful in constructing neural nets for rapid learning from very few samples,” say the team.

So the machine-learning networks of the future may contain simulated versions of octopamine and other neurotransmitters.

Of course, it is not just in learning that neurotransmitters are important. Neuroscientists are well aware of the role they play in emotions, mood regulation, and so on. Therein lies another avenue of research that machine-learning teams will be interested to explore.

Ref: arxiv.org/abs/1802.02678 : Biological Mechanisms for Learning: A Computational Model of Olfactory Learning in the Manduca sexta Moth, with Applications to Neural Nets
