Forget Killer Robots—Bias Is the Real AI Danger

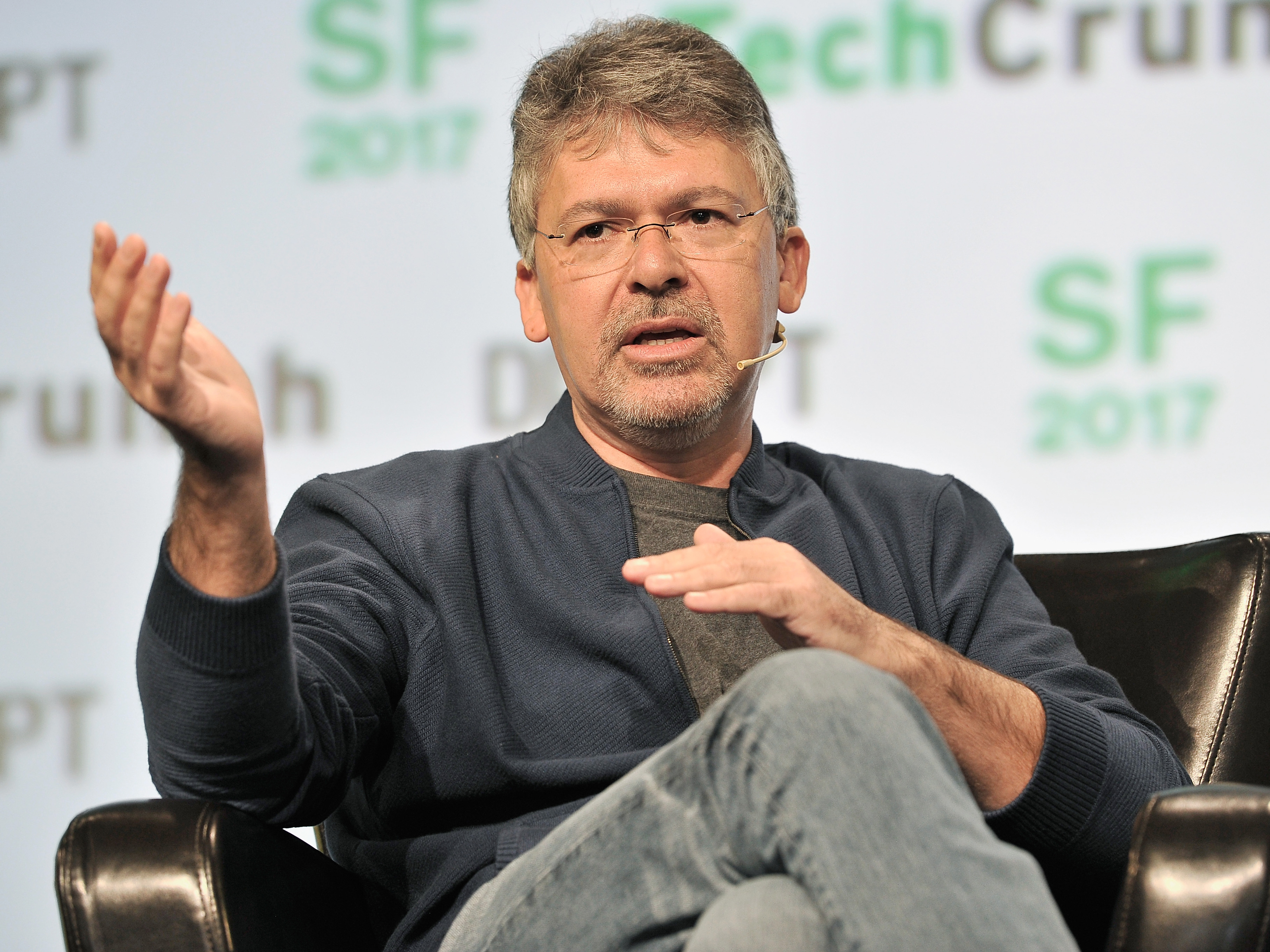

Google’s AI chief isn’t fretting about super-intelligent killer robots. Instead, John Giannandrea is concerned about the danger that may be lurking inside the machine-learning algorithms used to make millions of decisions every minute.

“The real safety question, if you want to call it that, is that if we give these systems biased data, they will be biased,” Giannandrea said before a recent Google conference on the relationship between humans and AI systems.

The problem of bias in machine learning is likely to become more significant as the technology spreads to critical areas like medicine and law, and as more people without a deep technical understanding are tasked with deploying it. Some experts warn that algorithmic bias is already pervasive in many industries, and that almost no one is making an effort to identify or correct it (see “Biased Algorithms Are Everywhere, and No One Seems to Care”).

“It’s important that we be transparent about the training data that we are using, and are looking for hidden biases in it, otherwise we are building biased systems,” Giannandrea added. “If someone is trying to sell you a black box system for medical decision support, and you don’t know how it works or what data was used to train it, then I wouldn’t trust it.”

Black box machine-learning models are already having a major impact on some people’s lives. A system called COMPAS, made by a company called Northpointe, offers to predict defendants’ likelihood of reoffending, and is used by some judges to determine whether an inmate is granted parole. The workings of COMPAS are kept secret, but an investigation by ProPublica found evidence that the model may be biased against minorities.

It may not always be as simple as publishing details of the data or the algorithm employed, however. Many of the most powerful emerging machine-learning techniques are so complex and opaque in their workings that they defy careful examination (see “The Dark Secret at the Heart of AI”). To address this issue, researchers are exploring ways to get these systems to offer engineers and end users some approximation of how they work.

Giannandrea has good reason to highlight the potential for bias to creep into AI. Google is among several big companies touting the AI capabilities of its cloud computing platforms to all sorts of businesses. These cloud-based machine-learning systems are designed to be much easier to use than the underlying algorithms. That will make the technology more accessible, but it could also make it easier for bias to slip in. It will also be important to offer tutorials and tools that help less experienced data scientists and engineers identify and remove bias from their training data.

Several of the speakers invited to the conference organized by Google also highlighted the issue of bias. Google researcher Maya Gupta described her efforts to build less opaque algorithms as part of a team known internally as “GlassBox.” And Karrie Karahalios, a professor of computer science at the University of Illinois, presented research highlighting how tricky it can be to spot bias in even the most commonplace algorithms. Karahalios showed that users don’t generally understand how Facebook filters the posts shown in their news feed. While this might seem innocuous, it is a neat illustration of how difficult it is to interrogate an algorithm.

Facebook’s news feed algorithm can certainly shape public perception of social interactions and even major news events. Other algorithms may already be subtly distorting the kinds of medical care a person receives, or how they are treated in the criminal justice system. This is surely a lot more important than killer robots, at least for now.

Giannandrea has certainly been a voice of reason in recent years amid some of the more fanciful warnings about the risks posed by AI. Elon Musk, in particular, has generated countless headlines by warning recently that AI is a bigger threat than North Korea and could result in World War III.

“What I object to is this assumption that we will leap to some kind of super-intelligent system that will then make humans obsolete,” Giannandrea said. “I understand why people are concerned about it but I think it’s gotten way too much airtime. I just see no technological basis as to why this is imminent at all.”
