Nvidia CEO: Software Is Eating the World, but AI Is Going to Eat Software

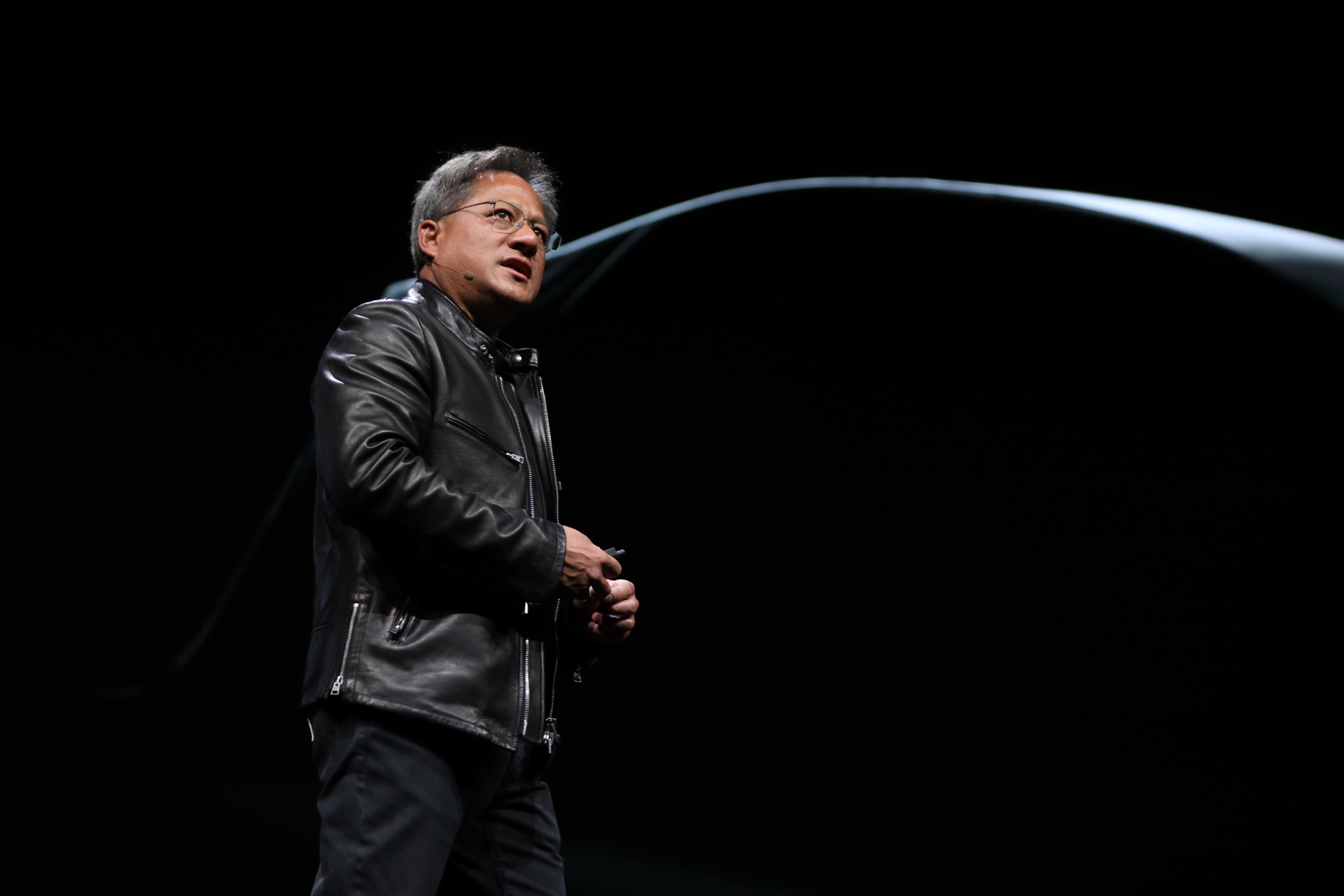

Tech companies and investors have recently been piling money into artificial intelligence, and plenty of it has been trickling down to chip maker Nvidia. The company’s revenues have climbed as it has started making hardware customized for machine-learning algorithms and for use cases such as autonomous cars. At Nvidia’s annual developer conference in San Jose, California, this week, CEO Jensen Huang spoke to MIT Technology Review about why the machine-learning revolution is only just starting.

Nvidia has benefited from an explosion of investment in machine learning by tech companies. Can this rapid growth in the use cases for machine learning continue?

We’re very early on. Very few lines of code in the enterprises and industries all over the world use AI today. It’s quite pervasive in Internet service companies, particularly two or three of them. But there's a whole bunch of others in tech and other industries that are trying to catch up. Software is eating the world, but AI is going to eat software.

What industry will be transformed by machine learning next?

One is the automotive industry. Ten of the world’s top car companies are here with us at the conference. The second is health care, and the impact on society is going to be very great. Health information is messy and unstructured, but now computers can understand it to augment doctors’ diagnoses and predictions.

Recent research results from applying machine learning to diagnosis are impressive (see “An AI Ophthalmologist Shows How Machine Learning May Transform Medicine”). But it’s not clear how regulators will test and approve these new kinds of systems.

When we're talking about human lives, there are always regulatory challenges. But we can't ignore the impact of a technology that brings 10 or 1,000 times better results. I have confidence that reasonable minds will realize the benefits of this technology and put it in the hands of doctors and clinicians and radiologists so that they can do better work. Arterys recently got FDA approval for their cardiac imaging [which annotates scans of the heart], and I know of many others that are in the pipeline.

Using machine learning in cars will also create new challenges for regulators. Nvidia has demonstrated software that learns to drive just by watching what a human driver does—but it’s difficult to explain exactly how it works or would behave in different scenarios (see “The Dark Secret at the Heart of AI”).

The power and promise of this end-to-end approach is very enticing. We really believe that long-term, the way AI will drive is similar to the way humans drive—we don't break the problem down into objects and vision and localization and planning. But how long it will take us to get there is questionable. Getting it to do everything properly is a great challenge, [and] when it doesn't do one thing right, how do you patch it, because you're trying to train the whole thing together. We probably have to break down some of these problems into smaller chunks.

Your chips are already driving some cars: all Tesla vehicles now use Nvidia’s Drive PX 2 computer to power the Autopilot feature that automates highway driving. Does that function use the hardware’s full capacity? Could it power fully autonomous driving?

Drive PX 2 is a computing platform with a lot of computation capacity reserved—the idea is to have enough so that you can continuously update the software and be delighted with enhancements over time. For full autonomy, meaning a driverless car, there are still some unknowns, but there's a lot of software development that's going on. I'm not exactly sure, but we'll find out.

Intel, Google, and several other companies are now working on chips designed to accelerate machine learning (see “Battle to Provide Chips for the AI Boom Heats Up”). How will you stay ahead?

A lot of people recognize the importance of this market, and I think it's going to be very large. We're going to steer our years of investment in our GPU chips, and a two and a half billion dollar R&D budget, into deep learning. And we will make our architecture available everywhere: in PCs, in servers, in the cloud, in cars, in robots.

You agree with researchers who say that the physical challenges of making transistors smaller and more power-efficient are slowing progress in the power of computer processors (see “Moore’s Law Is Dead. Now What?”). But you claim Nvidia’s chips can keep advancing because they are specialized to particular use cases. Surely you can’t resist physics forever.

No question about it, we can’t. Right now we’re recapturing the inefficiencies of CPUs and software into our more specialized GPUs. My sense is we'll continue to benefit from that for a couple of decades. But somewhere we’ll have to find something new. We have an amazing engineering team in the company pushing the limits of device physics and some great partners in manufacturing. Between all of us we will find the way.
