Neural Network Learns to Identify Criminals by Their Faces

Soon after the invention of photography, a few criminologists began to notice patterns in mugshots they took of criminals. Offenders, they said, had particular facial features that allowed them to be identified as law breakers.

One of the most influential voices in this debate was Cesare Lombroso, an Italian criminologist, who believed that criminals were “throwbacks” more closely related to apes than law-abiding citizens. He was convinced he could identify them by ape-like features such as a sloping forehead, unusually sized ears and various asymmetries of the face and long arms. Indeed, he measured many subjects in an effort to prove his view although he did not analyze his data statistically.

This shortcoming eventually led to his downfall. Lombroso’s views were discredited by the English criminologist Charles Goring, who statistically analyzed the data relating to physical abnormalities in criminals versus noncriminals. He concluded that there was no statistical difference.

And there the debate rested until 2011, when a group of psychologists from Cornell University showed that people were actually quite good at distinguishing criminals from noncriminals just by looking at photos of them. How could that be if there are no statistically different features?

Today, we get an answer of sorts, thanks to the work of Xiaolin Wu and Xi Zhang from Shanghai Jiao Tong University in China. These guys have used a variety of machine-vision algorithms to study faces of criminals and noncriminals and then tested it to find out whether it could tell the difference.

Their method is straightforward. They take ID photos of 1856 Chinese men between the ages of 18 and 55 with no facial hair. Half of these men were criminals.

They then used 90 percent of these images to train a convolutional neural network to recognize the difference and then tested the neural net on the remaining 10 percent of the images.

The results are unsettling. Xiaolin and Xi found that the neural network could correctly identify criminals and noncriminals with an accuracy of 89.5 percent. “These highly consistent results are evidences for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic,” they say.

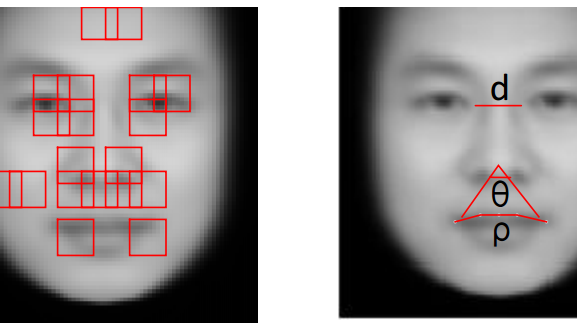

Xiaolin and Xi say there are three facial features that the neural network uses to make its classification. These are: the curvature of upper lip which is on average 23 percent larger for criminals than for noncriminals; the distance between two inner corners of the eyes, which is 6 percent shorter; and the angle between two lines drawn from the tip of the nose to the corners of the mouth, which is 20 percent smaller.

They go on to plot the variance in the data from criminal and noncriminal faces in a simplified parameter space called a manifold. And this process reveals why the difference has been hard to pin down.

Xiaolin and Xi show that these datasets are concentric but that the data for criminal faces has much greater variance. “In other words, the faces of general law-biding public have a greater degree of resemblance compared with the faces of criminals, or criminals have a higher degree of dissimilarity in facial appearance than normal people,” say Xiaolin and Xi.

This may also explain why certain kinds of statistical test cannot distinguish between these data sets. Indeed, Xiaolin and Xi show that when they combine criminal and noncriminal faces to create “average” faces, they look almost identical.

Although controversial, that result is not entirely unexpected. If humans can spot criminals by looking at their faces, as psychologists found in 2011, it should come as no surprise that machines can do it, too.

The worry, of course, is how humans might use these machines. It’s not hard to imagine how this process could be applied to data sets of, say, passport or driving license photos for an entire country. It would then be possible to pick out those people identified as law-breakers, whether or not they had committed a crime.

That’s a kind of Minority Report scenario in which law-breakers could be identified before they had committed a crime.

Of course, this work needs to be set on a much stronger footing. It needs to be reproduced with different ages, sexes, ethnicities, and so on. And on much larger data sets. That should help to tease apart the complexities of the findings. For example, Xiaolin and Xi find that criminal faces can be sub-divided into four subgroups, but noncriminal faces into only three. How come? And how does this vary in other groups?

And the work raises important questions. If the result does hold water, how is it to be explained? Why would the faces of criminals have much greater variance than those of noncriminals? And how are we able to spot these faces—is it learned behavior or hard-wired behavior that has evolved?

All this heralds a new era of anthropometry, criminal or otherwise. Last week, researchers revealed how they had trained a deep-learning machine to judge in the same way as humans whether somebody was trustworthy by looking at a snapshot of their face. This work is another take on the same topic. And there is room for much more research as machines become more capable. Examining what our clothes or hair say about us is one obvious angle. And machines will soon be able to study movement, too. That raises the possibility of studying how we move, how we interact, and so on.

Ref: arxiv.org/abs/1611.04135: Automated Inference on Criminality Using Face Images

Deep Dive

Artificial intelligence

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

Google DeepMind’s new generative model makes Super Mario–like games from scratch

Genie learns how to control games by watching hours and hours of video. It could help train next-gen robots too.

What’s next for generative video

OpenAI's Sora has raised the bar for AI moviemaking. Here are four things to bear in mind as we wrap our heads around what's coming.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.