This AI Algorithm Learns Simple Tasks as Fast as We Do

Taking inspiration from the way humans seem to learn, scientists have created AI software capable of picking up new knowledge in a far more efficient and sophisticated way.

The new AI program can recognize a handwritten character about as accurately as a human can, after seeing just a single example. The best existing machine-learning algorithms, which employ a technique called deep learning, need to see many thousands of examples of a handwritten character in order to learn the difference between an A and a Z.

The software was developed by Brenden Lake, a researcher at New York University, together with Ruslan Salakhutdinov, an assistant professor of computer science at the University of Toronto, and Joshua Tenenbaum, a professor in the Department of Brain and Cognitive Sciences at MIT. Details of the program, and the ideas behind it, are published today in the journal Science.

Computers have become much cleverer over the past few years, learning to recognize faces, understand speech, and even drive cars safely, among many other things. And most of the progress has been made using large, or deep, neural networks. But there is a crucial drawback to these systems: they require oodles of data to learn how to do even the simplest task.

This limitation is largely due to the fact that the algorithms do not process information the way we do. Although deep learning is modeled on a virtual network of neurons—and the approach has produced very impressive results in perceptual tasks—it is a very rough imitation of the way the brain works. A deep-learning algorithm associates the pixels in an image with a particular character. The brain may process some visual stimuli in a similar way, but humans also use higher forms of cognitive function in order to interpret the contents of an image.

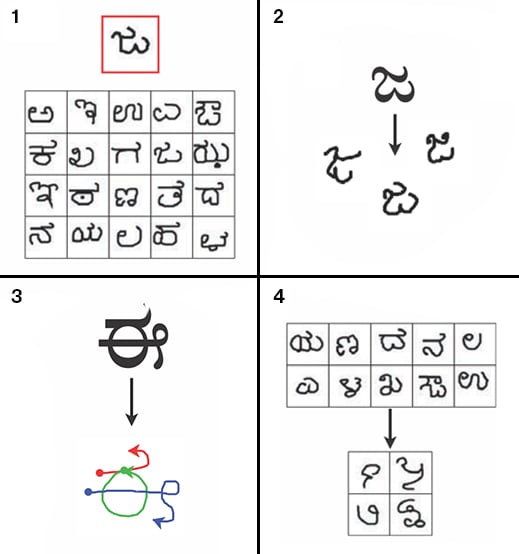

The researchers used a technique they call the Bayesian program learning framework, or BPL. Essentially, the software generates a unique program for every character using strokes of an imaginary pen. A probabilistic programming technique is then used to match a program to a particular character, or to generate a new program for an unfamiliar one. The software is not mimicking the way children acquire the ability to read and write but, rather, the way adults, who already know how, learn to recognize and re-create new characters.
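To make the idea of "a program for every character" concrete, here is a deliberately tiny sketch in Python. It is not the researchers' BPL code. It assumes each character is a hand-written list of straight pen strokes, and it swaps the paper's probabilistic inference for a simple point-distance score with a uniform prior. All names (`PROGRAMS`, `render`, `sample`, `log_score`, `classify`) are hypothetical and chosen only for illustration.

```python
import math
import random

# Assumption: one hand-written stroke "program" per character, standing in for
# the single example the system sees. Each stroke is ((x0, y0), (x1, y1)).
PROGRAMS = {
    "L": [((0.0, 1.0), (0.0, 0.0)), ((0.0, 0.0), (1.0, 0.0))],
    "T": [((0.0, 1.0), (1.0, 1.0)), ((0.5, 1.0), (0.5, 0.0))],
    "V": [((0.0, 1.0), (0.5, 0.0)), ((0.5, 0.0), (1.0, 1.0))],
}

def render(program, samples_per_stroke=20):
    """Run a program: trace each stroke and return the ink points it leaves."""
    points = []
    for (x0, y0), (x1, y1) in program:
        for i in range(samples_per_stroke):
            t = i / (samples_per_stroke - 1)
            points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return points

def sample(program, rng, jitter=0.03):
    """Re-create a character: the same strokes with slightly wobbly endpoints."""
    return [
        ((x0 + rng.gauss(0, jitter), y0 + rng.gauss(0, jitter)),
         (x1 + rng.gauss(0, jitter), y1 + rng.gauss(0, jitter)))
        for (x0, y0), (x1, y1) in program
    ]

def _chamfer(a, b):
    """Sum over points in a of the squared distance to the nearest point in b."""
    return sum(min((ax - bx) ** 2 + (ay - by) ** 2 for bx, by in b) for ax, ay in a)

def log_score(image_points, program, sigma=0.1):
    """Unnormalized log-likelihood stand-in: how well the program explains the ink."""
    ink = render(program)
    mismatch = _chamfer(image_points, ink) + _chamfer(ink, image_points)
    return -mismatch / (2 * sigma ** 2)

def classify(image_points, programs):
    """Bayes' rule with a uniform prior: pick the program with the best posterior score."""
    log_prior = math.log(1.0 / len(programs))
    scores = {label: log_prior + log_score(image_points, prog)
              for label, prog in programs.items()}
    return max(scores, key=scores.get), scores

if __name__ == "__main__":
    rng = random.Random(0)
    new_drawing = render(sample(PROGRAMS["T"], rng))  # a fresh, wobbly "T"
    label, _ = classify(new_drawing, PROGRAMS)
    print("classified as:", label)
```

The point of the sketch is the direction of inference: the classifier asks which stroke program would most plausibly have produced the ink, and the same program can be rerun with small variations to draw a new example. The real system also has to infer the strokes from an image, which this toy skips.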

“The key thing about probabilistic programming—and rather different from the way most of the deep-learning stuff is working—is that it starts with a program that describes the causal processes in the world,” says Tenenbaum. “What we’re trying to learn is not a signature of features, or a pattern of features. We’re trying to learn a program that generates those characters.”

Tenenbaum and colleagues tested the approach by having both humans and the software draw new characters after seeing one handwritten example, and then asking a group of people to judge whether a character was written by a person or a machine. They found that fewer than 25 percent of judges were able to tell the difference.

The team says the technique could be extended to more practical applications. For example, it might enable computers to quickly learn to recognize, and make use of, new words in spoken language. Or it could enable a computer to recognize new instances of a particular object. More generally, the approach points to an important new direction in artificial intelligence, as researchers take inspiration from research into human cognition.

Geoffrey Hinton, a professor of psychology at the University of Toronto who played a key role in the development of deep learning, says the work is an important step for the field. “It’s a beautiful paper, and a very impressive example of learning from not many examples,” he says.

Hinton, who was also the PhD advisor of one of the paper’s authors, Salakhutdinov, says AI researchers can learn many useful things from both neuroscience and cognitive science. He also suggests that approaches like the one developed for handwriting recognition can, in fact, be compatible with deep learning. “I think you can have the best of both worlds,” he says.

Gary Marcus, a cognitive scientist at New York University and the cofounder of a company called Geometric Intelligence, which is also developing machine-learning approaches inspired by human behavior, says he doesn’t entirely agree that the human mind works in the way described in the Science paper. But he thinks the approach highlights an important goal for AI, because in many situations there aren’t huge quantities of data for a machine to learn from.

“The issue with the dominant paradigm is that it’s very, very data-hungry,” Marcus says. “This is proof you can learn faster. And I think that’s something people are going to think about a lot.”

Marcus adds that language could be the killer app for such systems. Many deep-learning researchers are already working on this challenge (see “Teaching Machines to Understand Us”), but Marcus believes machines will need to learn in more efficient and flexible ways in order to crack it. “The real inflection point in AI is going to come when machines can actually understand language,” he says. “Not just doing mediocre translations, but really understanding what you mean.”
