The Face Detection Algorithm Set to Revolutionize Image Search

Back in 2001, two computer scientists, Paul Viola and Michael Jones, triggered a revolution in the field of computer face detection. After years of stagnation, their breakthrough was an algorithm that could spot faces in an image in real time. Indeed, the so-called Viola-Jones algorithm was so fast and simple that it was soon built into standard point and shoot cameras.

Part of their trick was to ignore the much more difficult problem of face recognition and concentrate only on detection. They also focused only on faces viewed from the front, ignoring any seen from an angle. Given these bounds, they realized that the bridge of the nose usually formed a vertical line that was brighter than the eye sockets nearby. They also noticed that the eyes were often in shadow and so formed a darker horizontal band.

So Viola and Jones built an algorithm that looks first for vertical bright bands in an image that might be noses, it then looks for horizontal dark bands that might be eyes, it then looks for other general patterns associated with faces.

Detected by themselves, none of these features are strongly suggestive of a face. But when they are detected one after the other in a cascade, the result is a good indication of a face in the image. Hence the name of this process: a detector cascade. And since these tests are all simple to run, the resulting algorithm can work quickly in real-time.

But while the Viola-Jones algorithm was something of a revelation for faces seen from the front, it cannot accurately spot faces from any other angle. And that severely limits how it can be used for face search engines.

Which is why Yahoo is interested in this problem. Today, Sachin Farfade and Mohammad Saberian at Yahoo Labs in California and Li-Jia Li at Stanford University nearby, reveal a new approach to the problem that can spot faces at an angle, even when partially occluded. They say their new approach is simpler than others and yet achieves state-of-the-art performance.

Farfade and co use a fundamentally different approach to build their model. They capitalize on the advances made in recent years on a type of machine learning known as a deep convolutional neural network. The idea is to train a many-layered neural network using a vast database of annotated examples, in this case pictures of faces from many angles.

To that end, Farfade and co created a database of 200,000 images that included faces at various angles and orientations and a further 20 million images without faces. They then trained their neural net in batches of 128 images over 50,000 iterations.

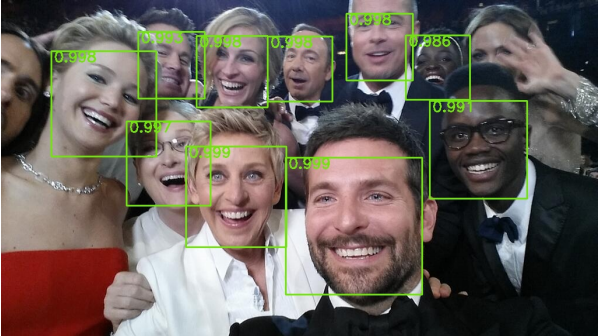

The result is a single algorithm that can spot faces from a wide range of angles, even when partially occluded. And it can spot many faces in the same image with remarkable accuracy.

The team call this approach the Deep Dense Face Detector and say it compares well with other algorithms. “We evaluated the proposed method with other deep learning based methods and showed that our method results in faster and more accurate results,” they say.

What’s more, their algorithm is significantly better at spotting faces when upside down, something other approaches haven’t perfected. And they say that it can be made even better with datasets that include more upside down faces. “We are planning to use better sampling strategies and more sophisticated data augmentation techniques to further improve performance of the proposed method for detecting occluded and rotated faces.”

That’s interesting work that shows how fast face detection is progressing. The deep convolutional neural network technique is only a couple of years old itself and already it has led to major advances in object and face recognition.

The great promise of this kind of algorithm is in image search. At the moment, it is straightforward to hunt for images taken at a specific place or at a certain time. But it is hard to find images taken of specific people. This is step in that direction. It is inevitable that this capability will be with us in the not too distant future.

And when it arrives, the world will become a much smaller place. It’s not just future pictures that will become searchable but the entire history of digitized images including vast stores of video and CCTV footage. That’s going to be a powerful force, one way or another.

Ref: arxiv.org/abs/1502.02766 : Multi-view Face Detection Using Deep Convolutional Neural Networks

Deep Dive

Artificial intelligence

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

Google DeepMind’s new generative model makes Super Mario–like games from scratch

Genie learns how to control games by watching hours and hours of video. It could help train next-gen robots too.

What’s next for generative video

OpenAI's Sora has raised the bar for AI moviemaking. Here are four things to bear in mind as we wrap our heads around what's coming.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.