Watch this robot as it learns to stitch up wounds

The robot was able to sew six stitches all on its own, and the work holds lessons for robotics as a whole.

An AI-trained surgical robot that can make a few stitches on its own is a small step toward systems that can aid surgeons with such repetitive tasks.

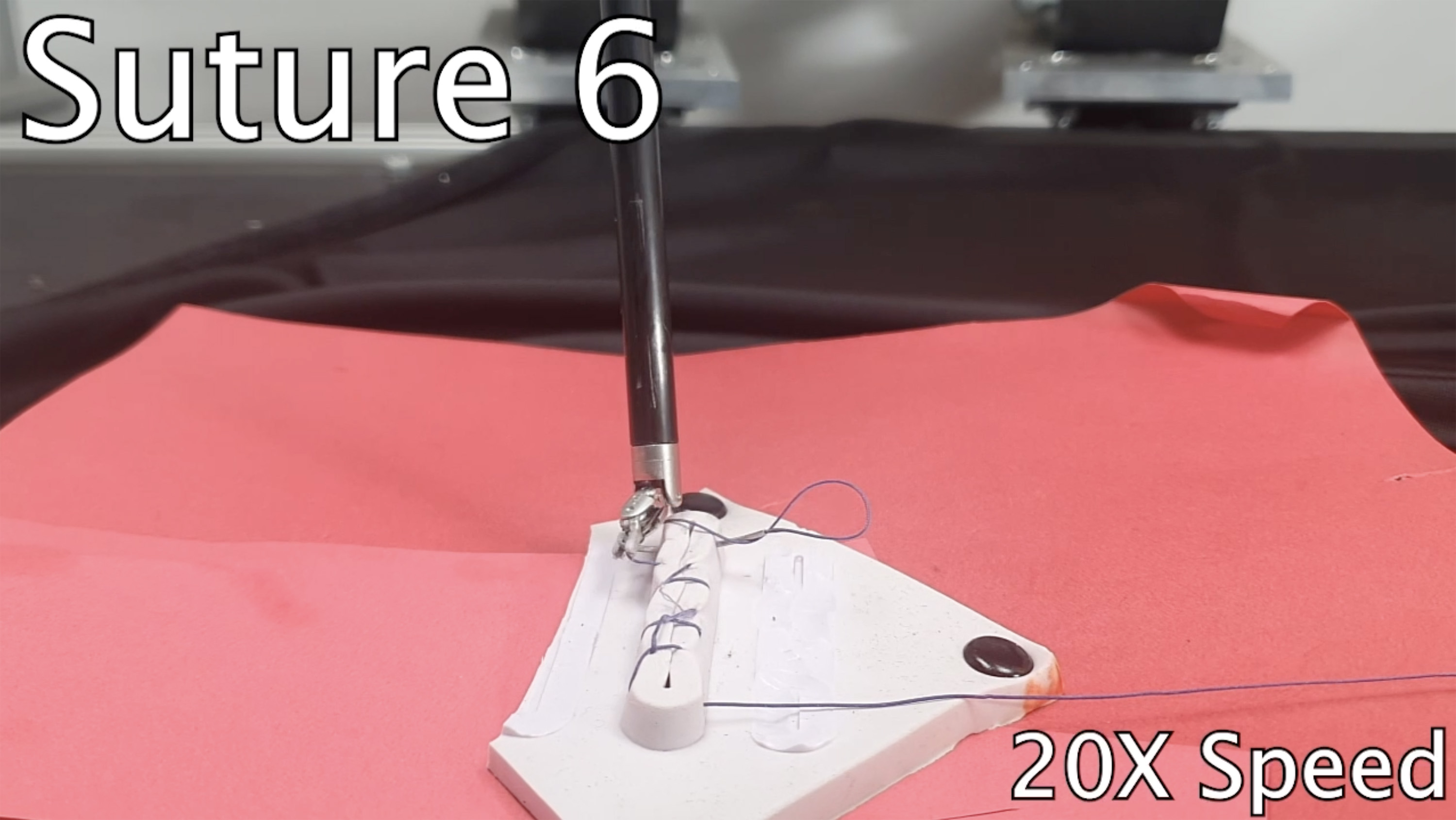

A video taken by researchers at the University of California, Berkeley, shows the two-armed robot completing six stitches in a row on a simple wound in imitation skin, passing the needle through the tissue and from one robotic arm to the other while maintaining tension on the thread.

Though many doctors today get help from robots for procedures ranging from hernia repairs to coronary bypasses, those robots are used to assist surgeons, not replace them. This new research marks progress toward robots that can operate more autonomously on intricate, complicated tasks like suturing. The lessons learned in its development could also be useful in other fields of robotics.

“From a robotics perspective, this is a really challenging manipulation task,” says Ken Goldberg, a researcher at UC Berkeley and director of the lab that worked on the robot.

One issue is that shiny or reflective objects like needles can throw off a robot’s image sensors. Computers also have a hard time modeling how “deformable” objects, like skin and thread, react when poked and prodded. Unlike transferring a needle from one human hand to another, moving a needle between robotic arms is an immense challenge in dexterity.

The robot uses a pair of cameras to take in its surroundings. A trained neural network then identifies where the needle is, and a motion controller plans all six motions involved in making a stitch.

Though we’re a long way from seeing these sorts of robots used in operating rooms to sew up wounds and organs on their own, the goal of automating part of the suturing process holds serious medical potential, says Danyal Fer, a physician and researcher on the project.

“There’s a lot of work within a surgery,” Fer says, “and oftentimes, suturing is the last task you have to do.” That means doctors are more likely to be fatigued when doing stitches, and if they don’t close the wound properly, it can mean a longer healing time and a host of other complications. Because suturing is also a fairly repetitive task, Goldberg and Fer saw it as a good candidate for automation.

“Can we show that we actually get better patient outcomes?” Goldberg says. “It’s convenient for the doctor, yes, but most importantly, does this lead to better sutures, faster healing, and less scarring?”

That’s an open question, since the success of the robot comes with caveats. The machine made a record of six complete stitches before a human had to intervene, but it could only complete an average of about three across the trials. The test wound was limited to two dimensions, unlike a wound on a rounded part of the body like the elbow or knuckle. Also, the robot has only been tested on “phantoms,” a sort of fake skin used in medical training settings—not on organ tissue or animal skin.

Axel Krieger, a researcher at Johns Hopkins University who was not involved in the study, says the robot made impressive advancements, especially in its ability to find and grasp the needle and transfer it between arms.

“It’s quite like finding a needle in a haystack,” Krieger says. “It’s a very difficult task, and I’m very impressed with how far they got.”

Krieger’s lab is a leader in robotic suturing, albeit with a different approach. Whereas the Berkeley researchers worked with the da Vinci Research Kit, a shared robotics system used for laparoscopic surgeries in a long list of operating rooms, Krieger’s lab built its own system, called the Smart Tissue Autonomous Robot (STAR).

A 2022 paper on the STAR showed it could successfully put stitches in pig intestines. That was notable because robots have a hard time differentiating colors within a sample of animal tissue and blood. But the STAR system also benefited from unique tech, like infrared sensors placed in the tissue that helped tell the robot where to go, and a purpose-built suturing mechanism to throw the stitches. The Berkeley robot was instead designed to stitch by hand, using the less specialized da Vinci system.

Both researchers have a laundry list of challenges they plan to present to their robot surgeons in the future. Krieger wants to make the robot easier for surgeons to operate (its operations are currently obscured behind a wall of code) and train it to handle much smaller sutures.

Goldberg wants to see his lab’s robot successfully stitch more complicated wound shapes and complete suturing tasks faster and more accurately. Pretty soon, the lab will move from testing on imitation skin to animal skin.

Chicken is preferred. “The nice thing is you just go out and buy some chicken from the grocery store,” he says. “No approval needed.”
