Emotion AI researchers say overblown claims give their work a bad name

A lack of government regulation isn’t just bad for consumers. It’s bad for the field, too.

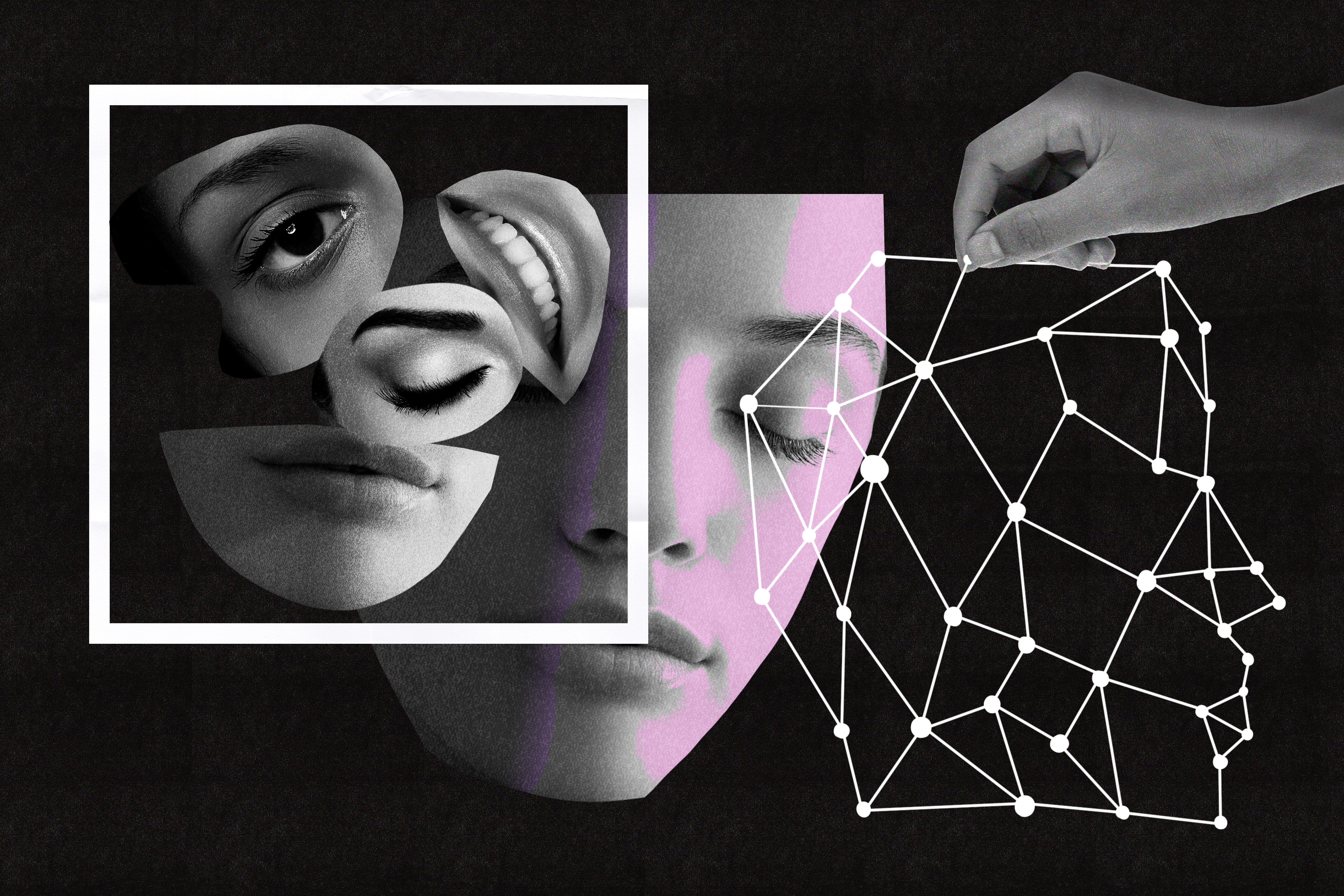

Perhaps you’ve heard of AI conducting interviews. Or maybe you’ve been interviewed by one yourself. Companies like HireVue claim their software can analyze video interviews to figure out a candidate’s “employability score.” The algorithms don’t just evaluate face and body posture for appearance; they also tell employers whether the interviewee is tenacious, or good at working on a team. These assessments could have a big effect on a candidate’s future. In the US and South Korea, where AI-assisted hiring has grown increasingly popular, career consultants now train new grads and job seekers on how to interview with an algorithm. This technology is also being deployed on kids in classrooms and has been used in studies to detect deception in courtroom videos.

But many of these promises are unsupported by scientific consensus. There are no strong, peer-reviewed studies proving that analyzing body posture or facial expressions can help pick the best workers or students (in part because companies are secretive about their methods). As a result, the hype around emotion recognition, which is projected to be a $25 billion market by 2023, has created a backlash from tech ethicists and activists who fear that the technology could raise the same kinds of discrimination problems as predictive sentencing or housing algorithms for landlords deciding whom to rent to.

The hype worries the researchers too. Many agree that their work—which uses various methods (like analyzing micro-expressions or voice) to discern and interpret human expressions—is being co-opted and used in commercial applications that have a shaky basis in science. They say that a lack of government regulation isn’t just bad for consumers. It’s bad for them, too.

The good and the bad

Emotion recognition, a subset of affective computing, is still a nascent technology. As AI researchers have tested the boundaries of what we can and can’t quantify about human behavior, the underlying science of emotions has continued to develop. There are still multiple theories, for example, about whether emotions can be distinguished discretely or fall on a continuum. Meanwhile, the same expressions can mean different things in different cultures. In July, a meta-study concluded that it isn’t possible to judge emotion by just looking at a person’s face. The study was widely covered (including in this publication), often with headlines suggesting that “emotion recognition can’t be trusted.”

Emotion recognition researchers are already aware of this limitation. The ones we spoke to were careful in their claims about what their work can and cannot do. Many emphasized that emotion recognition cannot actually assess an individual’s internal emotions and experience. It can only estimate how that individual’s emotions might be perceived by others, or suggest broad, population-based trends (such as one film eliciting, on average, a more positive reaction than another). “No serious researcher would claim that you can analyze action units in the face and then you actually know what people are thinking,” says Elisabeth André, an affective computing expert at the University of Augsburg.

Researchers also note that emotion recognition involves far more than just looking at someone’s face. It can also involve observing body posture, gait, and other characteristics, as well as using biometric sensors and audio to gather more holistic data.

Such distinctions are fine but important: they disqualify applications like HireVue, which claim to assess an individual’s inherent competence, but support others, such as technologies aiming to make machines into more intelligent collaborators and companions for humans. (HireVue did not respond to a request for comment.) A humanoid robot could smile when you smiled—a mirroring action humans often use to make interactions feel more natural. A wearable device could remind you to rest if it detected higher than baseline levels of cortisol, the body’s stress hormone. None of these applications require an algorithm to assess your private thoughts and feelings; they only require an estimation of an appropriate response to cortisol levels or body language. They also do not make high-stakes decisions about an individual’s life—unlike unproven hiring algorithms. “If we want computers and computing systems to help us, it would be positive if they had a sense of how we are feeling,” says Nuria Oliver, the chief data scientist at the nonprofit DataPop Alliance.

But much of this nuance gets lost when emotion recognition research is used to make lucrative commercial applications. The same stress-monitoring algorithms in a wearable could be used by a company trying to make sure you’re working hard enough. Even for companies like Affectiva, founded by researchers who speak about the importance of privacy and ethics, the boundaries are tough to define. It has sold its technology to HireVue. (Affectiva declined to comment on specific companies.)

A call for regulation

In December, the AI Now research institute called for a ban on emotion recognition technologies “in important decisions that impact people’s lives.” It’s one of the first calls to ban a technology that has received less regulatory attention than other forms of artificial intelligence, even though its use in job screening and classrooms could have serious effects.

In contrast, Congress just held its third hearing on facial recognition in less than a year, and it has become an issue in the 2020 election. Activists are working to boycott facial recognition technologies, and several representatives are acknowledging the need for regulation in both the private and public sectors. For affective computing, there haven’t been as many dedicated campaigns and working groups, and attempts at regulation have been limited. An Illinois law regulating AI analysis of job interview videos went into effect in January, and the Federal Trade Commission has been asked to investigate HireVue (though there’s no word on whether it intends to do so).

Though many researchers believe a ban is too broad, they agree that a regulatory vacuum is also harmful. “We have clearly defined processes to certify that certain products that we consume—be it food that we eat, be it medications that we take—they are safe for us to take them, and they actually do whatever they claim that they do,” says Oliver. “We don't have the same processes for technology.” She thinks companies whose technologies can significantly affect people’s lives should have to prove that they meet a certain standard of safety.

Rosalind Picard, a professor at the MIT Media Lab who cofounded Affectiva and another affective computing startup, Empatica, echoes this sentiment. For an existing model of regulation, she points to the Employee Polygraph Protection Act, which limits the use of lie detectors, devices she says are essentially an affective computing technology. The law prohibits most private employers from using polygraphs and doesn’t let employers ask about the results of lie detector tests.

She suggests that all use of such technologies should be opt-in and that companies should be required to disclose how their technologies were tested and what their limitations are. “What we have today is that [companies] can make these outrageous claims which are just false, because right now the buyer is not that well educated,” she says. “And we shouldn’t require the buyers to be well educated.” (Picard, who says she left Affectiva in 2013, doesn’t support the claims that HireVue is making.)

For her part, Meredith Whittaker, a research scientist at NYU and co-director of AI Now, emphasizes the difference between research and commercialization. “We are not impugning the entire field of affective computing,” she says. “We are particularly calling out the unregulated, unvalidated, scientifically unfounded deployment of commercial affect recognition technologies. Commercialization is hurting people right now, potentially, because it’s making claims that are determining people’s access to resources.”

A ban on using emotion recognition in applications such as job screening would help stop commercialization from outpacing science. Halt the deployment of the technologies first, Whittaker says, and then invest in research. If the research confirms that the technologies work as companies claim, then consider loosening the ban.

Other regulations would still be needed, however, to keep people safe: there’s ultimately more to consider, Whittaker argues, than just scientific credibility. “We need to ensure, when these systems are used in sensitive contexts, that they are contestable, that they are used fairly,” she says, “and that they are not leading to increased power asymmetries between the people who use them and the people on whom they're used.”
