Powerful computer vision algorithms are now small enough to run on your phone

Researchers have shrunk state-of-the-art computer vision models to run on low-power devices.

Growing pains: Visual recognition is deep learning’s strongest skill. Computer vision algorithms are analyzing medical images, enabling self-driving cars, and powering face recognition. But training models to recognize actions in videos has grown increasingly expensive. This has fueled concerns about the technology’s carbon footprint and its increasing inaccessibility in low-resource environments.

The research: Researchers at the MIT-IBM Watson AI Lab have now developed a new technique for training video recognition models on a phone or other device with very limited processing capacity. Typically, an algorithm will process video by splitting it up into image frames and running recognition algorithms on each of them. It then pieces together the actions shown in the video by seeing how the objects change over subsequent frames. The method requires the algorithm to “remember” what it has seen in each frame and the order in which it has seen it. That bookkeeping makes the approach needlessly inefficient.

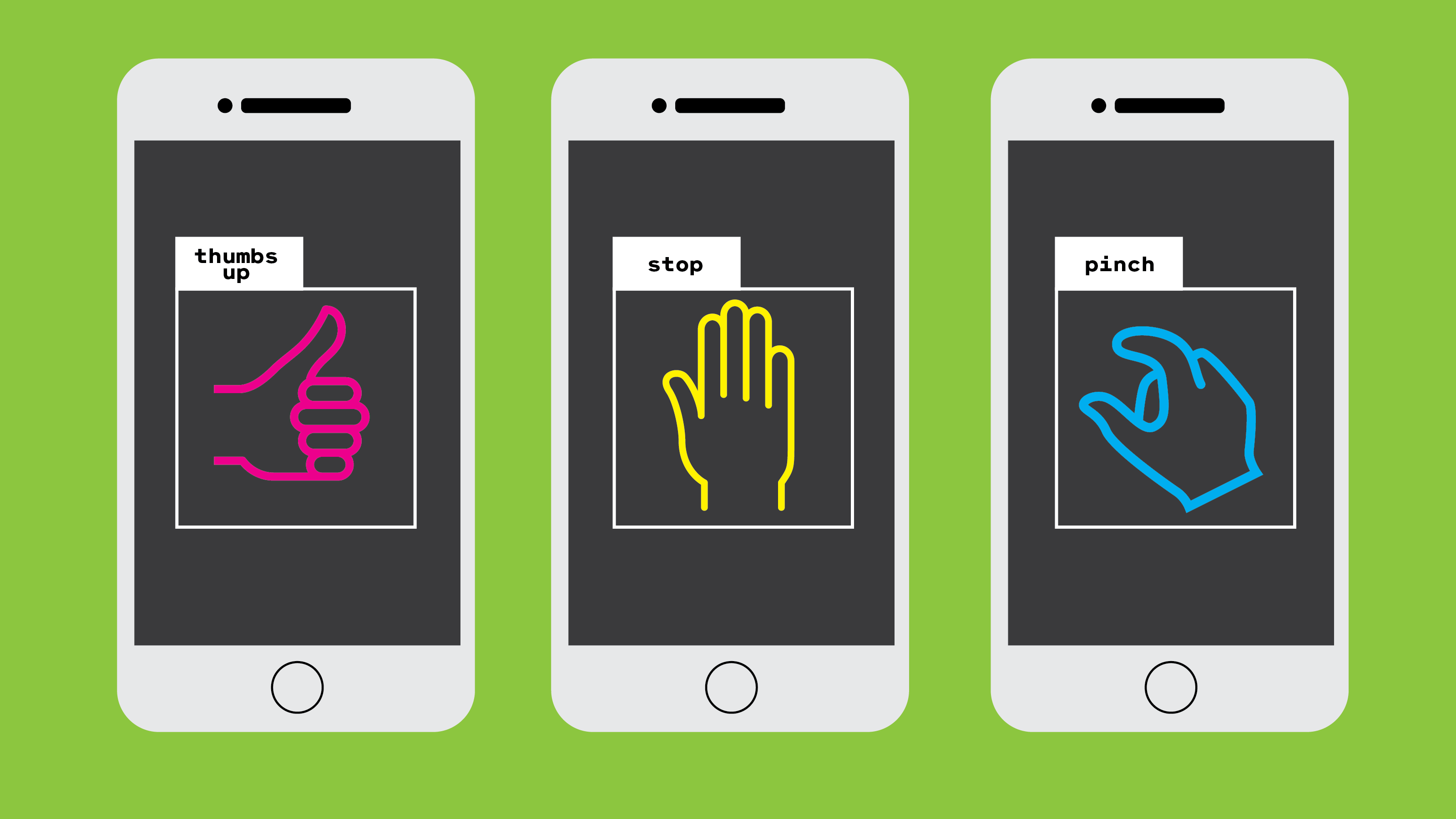

In the new approach, the algorithm instead extracts basic sketches of the objects in each frame, and overlays them on top of one another. Rather than remember what happened when, the algorithm can get an impression of the passing of time by looking at how the objects shift through space in the sketches. In testing, the researchers found that the new approach trained video recognition models three times faster than the state of the art. It was also able to quickly classify hand gestures with a small computer and camera running only on enough energy to power a bike light.
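To make the overlay idea concrete, here is a toy sketch in Python with NumPy. It is not the researchers' method, which this article does not spell out. The gradient-based edge detector, the recency weighting, and every function name below are assumptions made purely for illustration. The point is only that a single overlaid image can carry a sense of time: newer strokes sit in different positions than older ones, so direction of motion can be read off without storing each frame separately.

```python
import numpy as np


def edge_sketch(frame, threshold=0.2):
    """Crude stand-in for a 'sketch': mark pixels where brightness changes sharply."""
    gy, gx = np.gradient(frame.astype(float))
    return np.hypot(gx, gy) > threshold


def overlay_sketches(frames, threshold=0.2):
    """Overlay per-frame sketches into one image.

    Each sketch is stamped with a recency weight in (0, 1], so later frames
    overwrite earlier ones where they overlap (an assumed weighting scheme).
    """
    overlay = np.zeros(frames[0].shape, dtype=float)
    for t, frame in enumerate(frames):
        weight = (t + 1) / len(frames)
        overlay = np.where(edge_sketch(frame, threshold), weight, overlay)
    return overlay


def horizontal_drift(overlay):
    """Mean column of the newest strokes minus mean column of the oldest visible ones.

    Positive means the object moved right, negative means it moved left.
    """
    stamped = overlay[overlay > 0]
    if stamped.size == 0:
        return 0.0
    newest_cols = np.nonzero(overlay == stamped.max())[1]
    oldest_cols = np.nonzero(overlay == stamped.min())[1]
    return float(newest_cols.mean() - oldest_cols.mean())


def moving_square_frames(n_frames=4, size=16, step=2):
    """Synthetic grayscale clip (hypothetical test data): a bright square sliding right."""
    frames = []
    for t in range(n_frames):
        frame = np.zeros((size, size))
        left = 2 + step * t
        frame[6:10, left:left + 4] = 1.0
        frames.append(frame)
    return frames


if __name__ == "__main__":
    clip = moving_square_frames()
    print("left-to-right drift:", horizontal_drift(overlay_sketches(clip)))
    print("right-to-left drift:", horizontal_drift(overlay_sketches(clip[::-1])))
```

Playing the synthetic clip forward should give a positive drift and playing it in reverse a negative one, even though the function only ever looks at one overlaid image. A real video model would learn far richer features than this hand-built measure.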

Why it matters: The new technique could help reduce lag and computation costs in existing commercial applications of computer vision. It could, for example, make self-driving cars safer by speeding up their reaction to incoming visual information. The technique could also unlock applications that previously weren’t possible, such as phones that help diagnose patients or analyze medical images.

Distributed AI: As more and more AI research gets translated into applications, the need for tinier models will increase. The MIT-IBM paper is part of a growing trend to shrink state-of-the-art models to a more manageable size.
