This is what happens when you see the face of someone you love

The moment we recognize someone, a lot happens all at once. We aren’t aware of any of it.

Adam is on his way over.

My apartment doesn’t have a door buzzer, so Adam always calls when he’s two minutes away. He never says he’s two minutes away; he says he’s already at my door, because he knows I’m always trying to finish something before I open up.

Over the noise of the shower, I hear my phone buzz. I reach around the plastic curtain. It’s 6:31 p.m.

“Hi, I’m here,” he says.

Shit.

I bound down the stairs holding the towel nest on top of my head. I can see the shape of his face through the window. Adam resembles a Viking who works in finance. I see the beginnings of a smile. (0 milliseconds)

I see a tan, scruffiness and boyishness. (40 milliseconds) I register the shape of his face, his small bright almond eyes, his overbite (which I find darling), and his hairline. (50 milliseconds) The skin at the edge of his eyes is starting to wrinkle into little creases, and his strong forehead suggests an aggressive masculinity that is at odds with his personality. (70 milliseconds) I know it’s Adam from a flight away. (90 milliseconds)

I know his hair is starting to thin because I remember our very first fight when I asked whether he was balding. I can almost smell the patchouli of his beard oil—which he leaves at my apartment every other week—through the door. It reminds me of our mornings together before heading to our offices from a different lifetime. (400 milliseconds)

We exchange smiles while I unlock the pair of doors between us. We kiss on the cheek. We hug.

“How long have you been waiting?” I ask.

“Oh, just got here. I called from two blocks away.”

0 milliseconds

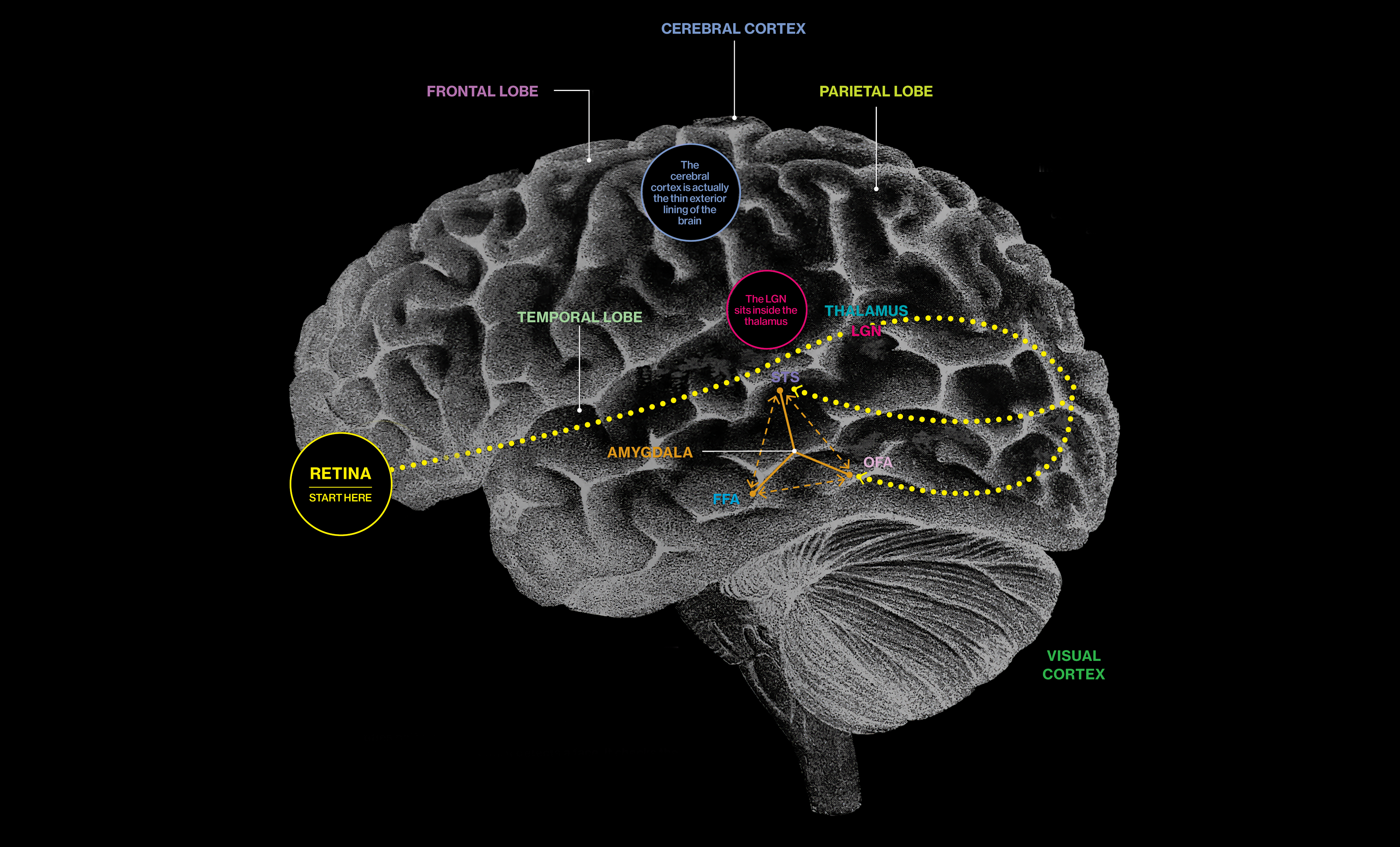

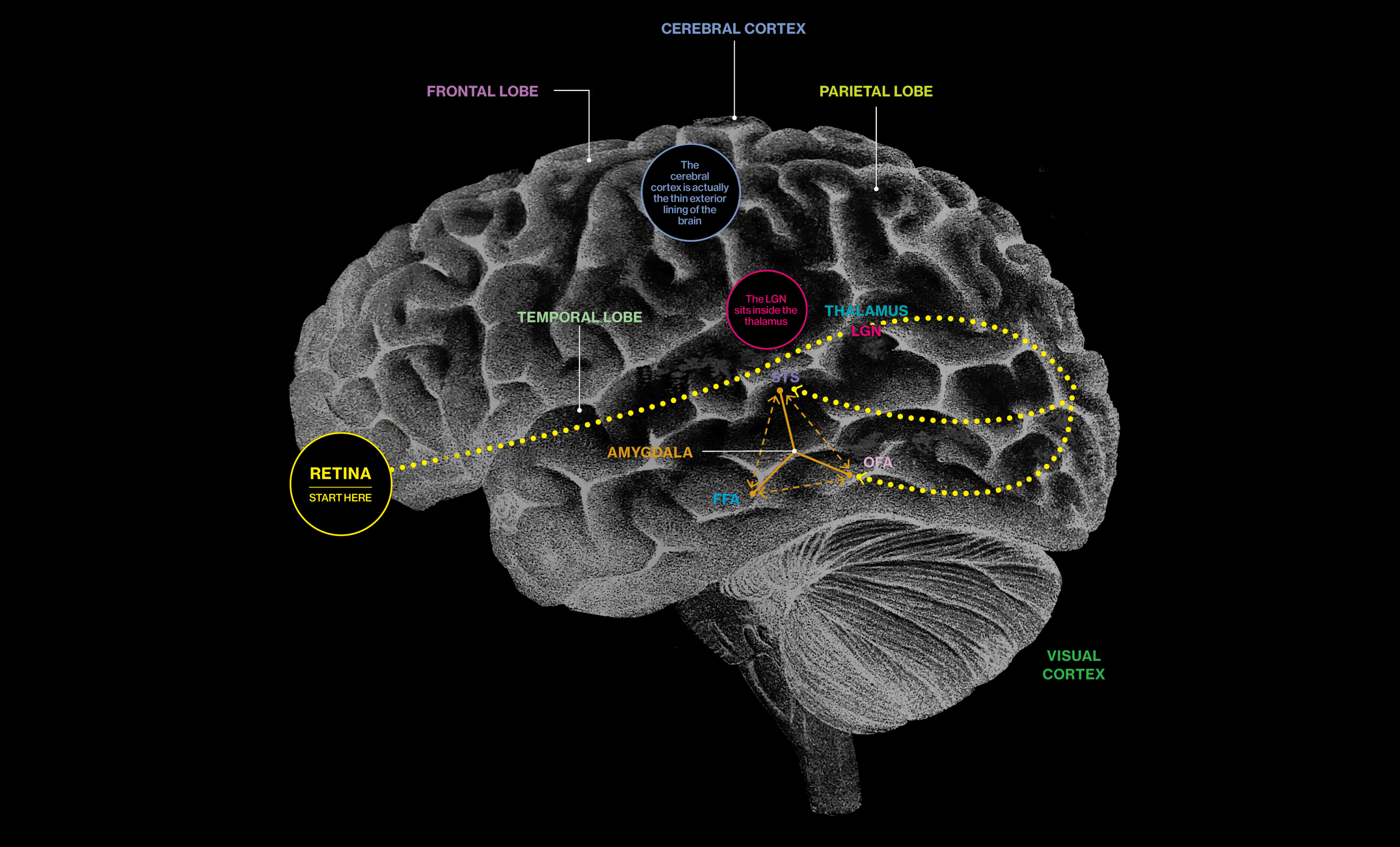

Light reflected from Adam’s face is absorbed by my retina, which sends signals down the optic nerve toward a relay center called the lateral geniculate nucleus (LGN). Here, visual information is passed on to other parts of the brain. The LGN is housed in the thalamus, a small region above the brain stem that sends sensory information to the cerebral cortex, the brain’s main control center.

40 milliseconds

The LGN starts to build a representation of what I am looking at for the visual cortex by combining information from both eyes.

50 milliseconds

The visual cortex registers what I am seeing outside my door.

70 milliseconds

Structures in the back of my temporal lobe, called the face patches—the occipital face area (OFA), the fusiform face area (FFA), and the superior temporal sulcus (STS)—tell me that I am looking at a face and start to categorize its gender and age.

90 milliseconds

The face patches tell me this face belongs to a single person and then compare it with faces I have seen before.

400 milliseconds

By now, my brain has recruited portions of the frontal, parietal, and temporal lobes that store memory and emotion to discern whether Adam’s face is familiar. The amygdala, which handles much of my emotional processing, is also involved. Together, these areas help me recall core information about him.
