10 Breakthrough Technologies 2009

Emerging Technologies: 2009

Each year, Technology Review selects what it believes are the 10 most important emerging technologies. The winners are chosen based on the editors’ coverage of key fields. The question that we ask is simple: is the technology likely to change the world? Some of these changes are on the largest scale possible: better biofuels, more efficient solar cells, and green concrete all aim at tackling global warming in the years ahead. Other changes will be more local and involve how we use technology: for example, 3-D screens on mobile devices, new applications for cloud computing, and social television. And new ways to implant medical electronics and develop drugs for diseases will affect us on the most intimate level of all, with the promise of making our lives healthier.

This story was part of our March/April 2009 issue.

Biological Machines

Michel Maharbiz’s novel interfaces between machines and living systems could give rise to a new generation of cyborg devices.

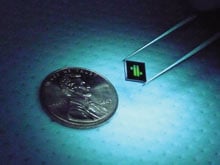

Cyborg beetle: By equipping a giant flower beetle with a processor and implanting electrodes that deliver electrical jolts to its brain and to its wing muscles, scientists have created a living machine whose flight can be wirelessly controlled. JOHN BURGOYNE

A giant flower beetle flies about, veering up and down, left and right. But the insect isn’t a pest, and it isn’t steering its own path. An implanted receiver, microcontroller, microbattery, and six carefully placed electrodes–a payload smaller than a dime and weighing less than a stick of gum–allow an engineer to control the bug wirelessly. By remotely delivering jolts of electricity to its brain and wing muscles, the engineer can make the cyborg beetle take off, turn, or stop midflight.

The beetle’s creator, Michel Maharbiz, hopes that his bugs will one day carry sensors or other devices to locations not easily accessible to humans or the terrestrial robots used in search-and-rescue missions. The devices are cheap: materials cost as little as five dollars, and the electronics are easy to build with mostly off-the-shelf components. “They can fly into tiny cracks and could be fitted with heat sensors designed to find injured survivors,” says Maharbiz, an assistant professor at the University of California, Berkeley. “You cannot do that now with completely synthetic systems.”

Maharbiz’s specialty is designing interfaces between machines and living systems, from individual cells to entire organisms. His goal is to create novel “biological machines” that take advantage of living cells’ capacity for extremely low-energy yet exquisitely precise movement, communication, and computation. Maharbiz envisions devices that can collect, manipulate, store, and act on information from their environments. Tissue for replacing damaged organs might be an example, or tables that can repair themselves or reconfigure their shapes on the basis of environmental cues. In 100 years, Maharbiz says, “I bet this kind of machine will be everywhere, derived from cells but completely engineered.”

The remote-controlled beetles are an early success story. Beetles integrate visual, mechanical, and chemical information to control flight, all using a modicum of energy–a feat that’s almost impossible to reproduce from scratch. In order to deploy a beetle as a useful and sophisticated tool like a search-and-rescue “robot,” Maharbiz’s team had to create input and output mechanisms that could efficiently communicate with and control the insect’s nervous system. Such interfaces are now possible thanks to advances in microfabrication techniques, the availability of ever smaller power sources, and the growing sophistication of microelectromechanical systems (MEMS)–tiny mechanical devices that can be assembled to make things like radios and microcontrollers.

Stuck to the beetle’s back is a commercial radio receiver atop a custom-made circuit board. Six electrode stimulators snake from the circuit board into the insect’s optic lobes, brain, and left and right basilar flight muscles. A transmitter attached to a laptop running custom software sends messages to the receiver, delivering small electric pulses to the optic lobes to initiate flight and to the left or right flight muscle to trigger a turn. Because the receiver sends very high-level instructions to the beetle’s nervous system, it can simply signal the beginning and end of a flight, rather than sending continuous messages to keep the beetle flying.

Others have created interfaces that make it possible to remotely control the movements of rats and other animals. But insects are much smaller, and thus more challenging. Maharbiz is one of the few scientists with a sufficiently deep knowledge of both biology and engineering to successfully mesh an animal’s nervous system with MEMS technologies. His team previously modified beetles during the pupal stage, so that their implants are invisible in adulthood–a valuable property if they are to be used in covert missions. The researchers are now working on novel microstimulators and MEMS radio receivers that will allow for more precise neural targeting and even smaller systems.

The cyborg beetle is just one of an array of new technologies incubating in Maharbiz’s lab, including microfluidic chips that can deliver controlled amounts of oxygen and other chemicals–even DNA–to individual cells. This kind of system could be used to precisely control the development of cell populations. Ultimately, Maharbiz wants to develop programmable cell-based materials, like those required for the fantastical self-healing table. For now, his team focuses on finding the best ways to manipulate devices such as the beetles. “We want to find out,” says Maharbiz, “what are the limits of control?”

$100 Genome

Han Cao’s nanofluidic chip could cut DNA sequencing costs dramatically.

Nanoscale sorting: A tiny nanofluidic chip is the key to BioNanomatrix’s effort to sequence a human genome for just $100. BIONANOMATRIX

In the corner of the small lab is a locked door with a colorful sign taped to the front: “$100 Genome Room–Authorized Persons Only.” BioNanomatrix, the startup that runs the lab, is pursuing what many believe to be the key to personalized medicine: sequencing technology so fast and cheap that an entire human genome can be read in eight hours for $100 or less. With the aid of such a powerful tool, medical treatment could be tailored to a patient’s distinct genetic profile.

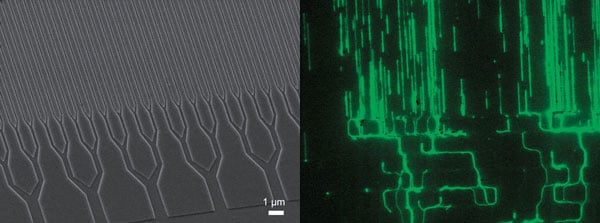

Despite many experts’ doubt that whole-genome sequencing could be done for $1,000, let alone a 10th that much, BioNanomatrix believes it can reach the $100 target in five years. The reason for its optimism: company founder Han Cao has created a chip that uses nanofluidics and a series of branching, ever-narrowing channels to allow researchers, for the first time, to isolate and image very long strands of individual DNA molecules.

If the company succeeds, a physician could biopsy a cancer patient’s tumor, sequence all its DNA, and use that information to determine a prognosis and prescribe treatment– all for less than the cost of a chest x-ray. If the ailment is lung cancer, for instance, the doctor could determine the particular genetic changes in the tumor cells and order the chemotherapy best suited to that variant.

Cao’s chip, which neatly aligns DNA, is essential to cheaper sequencing because double-stranded DNA, when left to its own devices, winds itself up into tight balls that are impossible to analyze. To sequence even the smallest chromosomes, researchers have had to chop the DNA up into millions of smaller pieces, anywhere from 100 to 1,000 base pairs long. These shorter strands can be sequenced easily, but the data must be pieced back together like a jigsaw puzzle. The approach is expensive and time consuming. What’s more, it becomes problematic when the puzzle is as large as the human genome, which consists of about three billion pairs of nucleotides. Even with the most elegant algorithms, some pieces get counted multiple times, while others are omitted completely. The resulting sequence may not include the data most relevant to a particular disease.

In contrast, Cao’s chip untangles stretches of delicate double-stranded DNA molecules up to 1,000,000 base pairs long–a feat that researchers had previously thought impossible. The series of branching channels gently prompts the molecules to relax a bit more at each fork, while also acting as a floodgate to help distribute them evenly. A mild electrical charge drives them through the chip, ultimately coaxing them into spaces that are less than 100 nanometers wide. With tens of thousands of channels side by side, the chip allows an entire human genome to flow through in about 10 minutes. The data must still be pieced together, but the puzzle is much smaller (imagine a jigsaw puzzle of roughly 100 pieces versus 10,000), leaving far less room for error.
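The size of the assembly puzzle can be estimated with simple division. The sketch below is a rough illustration only: it assumes the three-billion-base-pair genome size quoted above and counts the fewest non-overlapping fragments needed to cover the genome once. Real sequencing relies on overlapping, redundant reads, so actual piece counts are higher; the function name `min_pieces` is made up for this example.

```python
GENOME_BP = 3_000_000_000  # "about three billion pairs of nucleotides"


def min_pieces(genome_bp: int, fragment_bp: int) -> int:
    """Fewest non-overlapping fragments of one length that cover the genome once."""
    return -(-genome_bp // fragment_bp)  # ceiling division using integers only


# Conventional approach: fragments of 100 to 1,000 base pairs.
short_fragment_pieces = (min_pieces(GENOME_BP, 1_000), min_pieces(GENOME_BP, 100))

# Strands of up to 1,000,000 base pairs, as described for the nanofluidic chip.
long_strand_pieces = min_pieces(GENOME_BP, 1_000_000)

print(short_fragment_pieces)  # (3000000, 30000000)
print(long_strand_pieces)     # 3000
```

Under these simplifying assumptions, the longer strands shrink the puzzle from millions of pieces to a few thousand.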

Sequencing DNA: Thousands of branching channels just nanometers wide (left) untangle very long DNA strands; bright fluorescent labels allow researchers to easily visualize these molecules (right).

The chip meets only half the $100-genome challenge: it unravels DNA but does not sequence it. To achieve that, the company is working with Silicon Valley-based Complete Genomics, which has developed bright, fluorescently labeled probes that bind to the 4,096 possible combinations of six-letter DNA “words.” Along with BioNanomatrix’s chip, the probes could achieve the lightning-fast sequencing necessary for the $100 genome. But the probes can’t stick to double-stranded DNA, so Complete Genomics will need to figure out how to open up small sections of DNA without uncoupling the entire molecule.
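The figure of 4,096 follows from counting: each position in a six-letter word can hold one of the four DNA bases, giving 4 × 4 × 4 × 4 × 4 × 4 combinations. A short sketch that enumerates them (the variable names are chosen for this example and say nothing about how the probes themselves are designed):

```python
from itertools import product

BASES = "ACGT"    # the four DNA bases
WORD_LENGTH = 6   # six-letter "words"

# Every possible ordered combination of six bases.
words = ["".join(letters) for letters in product(BASES, repeat=WORD_LENGTH)]

print(len(words))            # 4096
print(words[0], words[-1])   # AAAAAA TTTTTT
```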

BioNanomatrix is keeping its options open. “At this point, we don’t have any exclusive ties to any sequencing chemistry,” says Gary Zweiger, the company’s vice president of development. “We want to make our chip available to sequencers, and we feel that it is an essential component to driving the costs down to the $100 level. We can’t do it alone, but we feel that they can’t do it without this critical component.”

Whether or not BioNanomatrix reaches its goal of $100 sequencing in eight hours, its technology could play an important role in medicine. Because the chips can process long pieces of DNA, the molecules retain information about gene location; they can thus be used to quickly identify new viruses or bacteria causing an outbreak, or to map new genes linked to specific diseases. And as researchers learn more about the genetic variations implicated in different diseases, it might be possible to biopsy tissue and sequence only those genes with variants known to cause disease, says Colin Collins, a professor at the Prostate Center at Vancouver General Hospital, who plans to use BioNanomatrix chips in his lab. “Suddenly,” Collins says, “you can sequence extremely rapidly and very, very inexpensively, and provide the patient with diagnosis and prognosis and, hopefully, a drug.”

Paper Diagnostics

George Whitesides has created a cheap, easy-to-use diagnostic test out of paper.

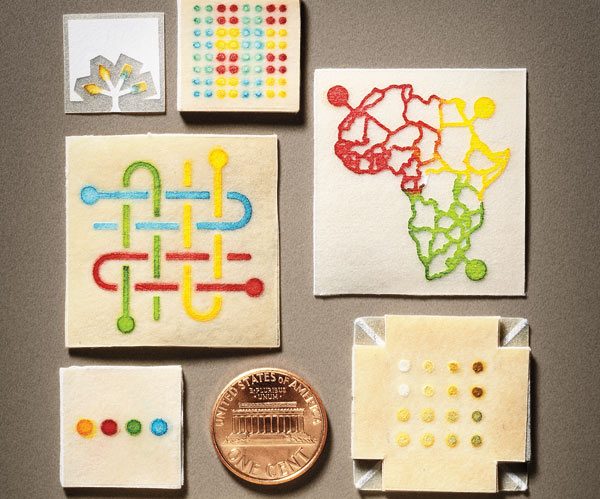

Color change: Paper tests, such as those shown here, could make it possible to diagnose a range of diseases quickly and cheaply. A small drop of liquid, such as blood or urine, wicks in through the corner or back of the paper and passes through channels to special testing zones. Substances in these zones react with specific chemicals in the sample to indicate different conditions; results show up as varying colors. These tests are small, simple, and inexpensive. BRUCE PETERSON

Diagnostic tools that are cheap to make, simple to use, and rugged enough for rural areas could save thousands of lives in poor parts of the world. To make such devices, Harvard University professor George Whitesides is coupling advanced microfluidics with one of humankind’s oldest technologies: paper. The result is a versatile, disposable test that can check a tiny amount of urine or blood for evidence of infectious diseases or chronic conditions.

The finished devices are squares of paper roughly the size of postage stamps. The edge of a square is dipped into a urine sample or pressed against a drop of blood, and the liquid moves through channels into testing wells. Depending on the chemicals present, different reactions occur in the wells, turning the paper blue, red, yellow, or green. A reference key is used to interpret the results.

The squares take advantage of paper’s natural ability to rapidly soak up liquids, thus circumventing the need for pumps and other mechanical components common in microfluidic devices. The first step in building the devices is to create tiny channels, about a millimeter in width, that direct the fluid to the test wells. Whitesides and his coworkers soak the paper with a light-sensitive photoresist; ultraviolet light causes polymers in the photoresist to cross-link and harden, creating long, waterproof walls wherever the light hits it. The researchers can even create the desired channels and wells by simply drawing on the paper with a black marker and laying it in sunlight. “What we do is structure the flow of fluid in a sheet, taking advantage of the fact that if the paper is the right kind, fluid wicks and hence pulls itself along the channels,” says Whitesides. Each well is then brushed with a different solution that reacts with specific molecules in blood or urine to trigger a color change.

Paper is easily incinerated, making it easy to safely dispose of used tests. And while paper-based diagnostics (such as pregnancy tests) already exist, Whitesides’s device has an important advantage: a single square can perform many reactions, giving it the potential to diagnose a range of conditions. Meanwhile, its small size means that blood tests require only a tiny sample, allowing a user to simply prick a finger.

Currently, Whitesides is developing a test to diagnose liver failure, which is indicated by elevated levels of certain enzymes in blood. In countries with advanced health care, people who take certain medications undergo regular blood tests to screen for liver problems that the drugs can cause. But people without consistent access to health care do not have that luxury; a paper-based test could give them the same safety margin. Whitesides also wants to develop tests for infectious diseases such as tuberculosis.

To disseminate the technology, Whitesides cofounded the nonprofit Diagnostics for All in Brookline, MA, in 2007. It plans to deploy the liver function tests in an African country around the end of this year. The team hopes that eventually, people with little medical training can administer the tests and photograph the results with a cell phone. Whitesides envisions a center where technicians and doctors can evaluate the images and send back treatment recommendations.

“This is one of the most deployable devices I have seen,” says Albert Folch, an associate professor of bioengineering at the University of Washington, who works with microfluidics. “What is so incredibly clever is that they were able to create photoresist structures embedded inside paper. At the same time, the porosity of the paper acts as the cheapest pump on the planet.”

Recently, the Harvard researchers have made the paper chips into a three-dimensional diagnostic device by layering them with punctured pieces of waterproof tape. A drop of liquid can move across channels and into wells on the first sheet, diffuse down through the holes in the tape, and react in test wells on the second paper layer. The ability to perform many more tests and even carry out two-step reactions with a single sample will enable the device to detect diseases (like malaria or HIV) that require more complicated assays, such as those that use antibodies. Results appear after five minutes to half an hour, depending on the test.

The researchers hope the advanced version of the test can eventually be mass produced using the same printing technology that churns out newspapers. Cost for the materials should be three to five cents. At that price, says Folch, the tests “will have a big impact on health care in areas where transportation and energy access is difficult.”

Traveling-Wave Reactor

A new reactor design could make nuclear power safer and cheaper, says John Gilleland.

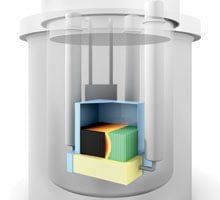

Wave of the future: Unlike today’s reactors, a traveling-wave reactor requires very little enriched uranium, reducing the risk of weapons proliferation. The reactor uses depleted-uranium fuel packed inside hundreds of hexagonal pillars (shown in black and green). In a “wave” that moves through the core at only a centimeter per year, this fuel is transformed (or bred) into plutonium, which then undergoes fission. The reaction requires a small amount of enriched uranium (not shown) to get started and could run for decades without refueling. The reactor uses liquid sodium as a coolant; core temperatures are very high, about 550 °C, versus the 330 °C typical of conventional reactors. BRYAN CHRISTIE DESIGN

Enriching the uranium for reactor fuel and opening the reactor periodically to refuel it are among the most cumbersome and expensive steps in running a nuclear plant. And after spent fuel is removed from the reactor, reprocessing it to recover usable materials has the same drawbacks, plus two more: the risks of nuclear-weapons proliferation and environmental pollution.

These problems are mostly accepted as a given, but not by a group of researchers at Intellectual Ventures, an invention and investment company in Bellevue, WA. The scientists there have come up with a preliminary design for a reactor that requires only a small amount of enriched fuel–that is, the kind whose atoms can easily be split in a chain reaction. It’s called a traveling-wave reactor. And while government researchers intermittently bring out new reactor designs, the traveling-wave reactor is noteworthy for having come from something that barely exists in the nuclear industry: a privately funded research company.

As it runs, the core in a traveling-wave reactor gradually converts nonfissile material into the fuel it needs. Nuclear reactors based on such designs “theoretically could run for a couple of hundred years” without refueling, says John Gilleland, manager of nuclear programs at Intellectual Ventures.

Gilleland’s aim is to run a nuclear reactor on what is now waste. Conventional reactors use uranium-235, which splits easily to carry on a chain reaction but is scarce and expensive; it must be separated from the more common, nonfissile uranium-238 in special enrichment plants. Every 18 to 24 months, the reactor must be opened, hundreds of fuel bundles removed, hundreds added, and the remainder reshuffled to supply all the fissile uranium needed for the next run. This raises proliferation concerns, since an enrichment plant designed to make low-enriched uranium for a power reactor differs trivially from one that makes highly enriched material for a bomb.

But the traveling-wave reactor needs only a thin layer of enriched U-235. Most of the core is U-238, millions of pounds of which are stockpiled around the world as leftovers from natural uranium after the U-235 has been scavenged. The design provides “the simplest possible fuel cycle,” says Charles W. Forsberg, executive director of the Nuclear Fuel Cycle Project at MIT, “and it requires only one uranium enrichment plant per planet.”

The trick is that the reactor itself will convert the uranium-238 into a usable fuel, plutonium-239. Conventional reactors also produce Pu-239, but using it requires removing the spent fuel, chopping it up, and chemically extracting the plutonium–a dirty, expensive process that is also a major step toward building an atomic bomb. The traveling-wave reactor produces plutonium and uses it at once, eliminating the possibility of its being diverted for weapons. An active region less than a meter thick moves along the reactor core, breeding new plutonium in front of it.

The traveling-wave idea dates to the early 1990s. However, Gilleland’s team is the first to develop a practical design. Intellectual Ventures has patented the technology; the company says it is in licensing discussions with reactor manufacturers but won’t name them. Although there are still some basic design issues to be worked out–for instance, precise models of how the reactor would behave under accident conditions–Gilleland thinks a commercial unit could be running by the early 2020s.

While Intellectual Ventures has caught the attention of academics, the commercial industry–hoping to stimulate interest in an energy source that doesn’t contribute to global warming–is focused on selling its first reactors in the U.S. in 30 years. The designs it’s proposing, however, are essentially updates on the models operating today. Intellectual Ventures thinks that the traveling-wave design will have more appeal a bit further down the road, when a nuclear renaissance is fully under way and fuel supplies look tight.

“We need a little excitement in the nuclear field,” says Forsberg. “We have too many people working on 1/10th of 1 percent change.”

A. Coolant pumps; B. Expansion area for fission gases; C. Fuel (depleted uranium) inside the hexagonal pillars (green represents unused fuel, black spent fuel); D. Fission wave (red); E. Breeding wave (yellow); F. Liquid sodium coolant

Racetrack Memory

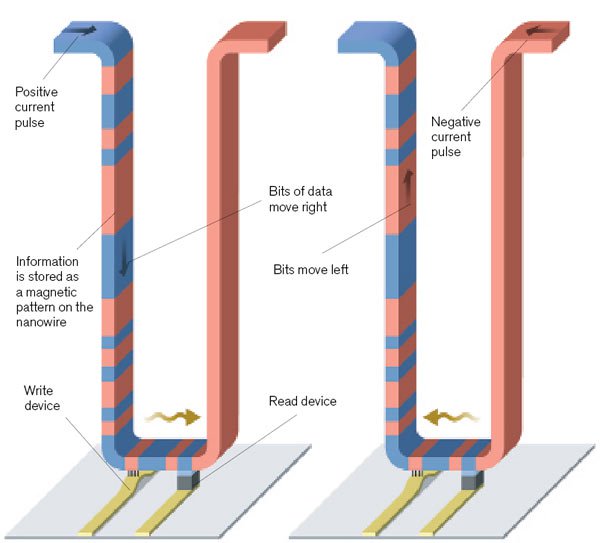

Stuart Parkin is using nanowires to create an ultradense memory chip. ARTHUR MOUNT; SOURCE: IBM

When IBM sold its hard-drive business to Hitachi in April 2002, IBM fellow Stuart Parkin wondered what to do next. He had spent his career studying the fundamental physics of magnetic materials, making a series of discoveries that gave hard-disk drives thousands of times more storage capacity. So Parkin set out to develop an entirely new way to store information: a memory chip with the huge storage capacity of a magnetic hard drive, the durability of electronic flash memory, and speed superior to both. He dubbed the new technology “racetrack memory.”

Both magnetic disk drives and existing solid-state memory technologies are essentially two-dimensional, Parkin says, relying on a single layer of either magnetic bits or transistors. “Both of these technologies have evolved over the last 50 years, but they’ve done it by scaling the devices smaller and smaller or developing new means of accessing bits,” he says. Parkin sees both technologies reaching their size limits in the coming decades. “Our idea is totally different from any memory that’s ever been made,” he says, “because it’s three-dimensional.”

The key is an array of U-shaped magnetic nanowires, arranged vertically like trees in a forest. The nanowires have regions with different magnetic polarities, and the boundaries between the regions represent 1s or 0s, depending on the polarities of the regions on either side. When a spin-polarized current (one in which the electrons’ quantum-mechanical “spin” is oriented in a specific direction) passes through the nanowire, the whole magnetic pattern is effectively pushed along, like cars speeding down a racetrack. At the base of the U, the magnetic boundaries encounter a pair of tiny devices that read and write the data.
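The idea of sliding a stored pattern past a fixed read/write device resembles a shift register, and a toy model may make it concrete. The sketch below is a loose analogy rather than a description of IBM’s device: it assumes each magnetic region holds one bit (a simplification of the boundary-based encoding described above), treats the wire as a closed loop so no bits fall off the end, and uses invented names such as `ToyRacetrack` and `pulse`.

```python
from collections import deque


class ToyRacetrack:
    """Toy model: a pattern of bits that shifts past one fixed read/write head."""

    def __init__(self, bits):
        self.domains = deque(bits)  # one entry per magnetic region (assumption)
        self.head = 0               # the head never moves; the data does

    def pulse(self, steps=1):
        """Model a current pulse by shifting the whole pattern toward the head."""
        self.domains.rotate(-steps)

    def read(self):
        return self.domains[self.head]

    def write(self, bit):
        self.domains[self.head] = bit


def read_all(track):
    """Read every bit by repeatedly shifting the pattern past the head."""
    bits = []
    for _ in range(len(track.domains)):
        bits.append(track.read())
        track.pulse()
    return bits


track = ToyRacetrack([1, 0, 1, 1, 0, 0])
print(read_all(track))  # [1, 0, 1, 1, 0, 0]
```

The point of the analogy is that reaching a given bit means shifting the pattern rather than moving the head, which is why no mechanical parts are involved.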

This simple design has the potential to combine the best qualities of other memory technologies while avoiding their drawbacks. Because racetrack memory stores data in vertical nanowires, it can theoretically pack 100 times as much data into the same area as a flash-chip transistor, and at the same cost. There are no mechanical parts, so it could prove more reliable than a hard drive. Racetrack memory is fast, like the dynamic random-access memory (DRAM) used to hold frequently accessed data in computers, yet it can store information even when the power is off. This is because no atoms are moved in the process of reading and writing data, eliminating wear on the wire.

Just as flash memory ushered in ultrasmall devices that can hold thousands of songs, pictures, and other types of data, racetrack promises to lead to whole new categories of electronics. “An even denser, smaller memory could make computers more compact and more energy efficient,” Parkin says. Moreover, chips with huge data capacity could be shrunk to the size of a speck of dust and sprinkled about the environment in tiny sensors or implanted in patients to log vital signs.

When Parkin first proposed racetrack memory, in 2003, “people thought it was a great idea that would never work,” he says. Before last April, no one had been able to shift the magnetic domains along the wire without disturbing their orientations. However, in a paper published that month in Science, Parkin’s team showed that a spin-polarized current would preserve the original magnetic pattern.

The Science paper proved that the concept of racetrack memory is sound, although at the time, the researchers had moved only three bits of data down a nanowire. Last December, Parkin’s team successfully moved six bits along the wire. He hopes to reach 10 bits soon, which he says would make racetrack memory competitive with flash storage. If his team can manage 100 bits, racetrack could replace hard drives.

Parkin has already found that the trick to increasing the number of bits a wire can handle is to precisely control its diameter: the narrower and more uniform the wire, the more bits it can hold. Another challenge will be to find the best material for the job: it needs to be one that can survive the manufacturing process and one that allows the magnetic domains to move quickly along the wire, with the least amount of electrical current possible.

If the design proves successful, racetrack memory could replace all other forms of memory, and Parkin will bolster his status as a magnetic-memory genius. After all, his work on giant magnetoresistance, which led to today’s high-capacity hard drives, transformed the computing industry. With racetrack memory, Parkin could revamp computing once more.

Liquid Battery

Donald Sadoway conceived of a novel battery that could allow cities to run on solar power at night.

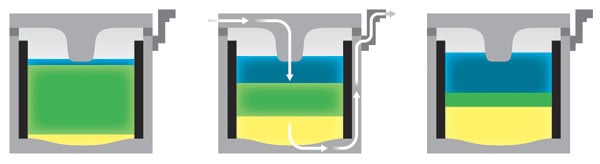

Conventional battery: Ordinary batteries use at least one solid active material. In the lead-acid battery shown here, the electrodes are solid plates immersed in a liquid electrolyte. Solid materials limit the conductivity of batteries and therefore the amount of current that can flow through them. They’re also vulnerable to cracking, disintegrating, and otherwise degrading over time, which reduces their useful lifetimes. ARTHUR MOUNT

Without a good way to store electricity on a large scale, solar power is useless at night. One promising storage option is a new kind of battery made with all-liquid active materials. Prototypes suggest that these liquid batteries will cost less than a third as much as today’s best batteries and could last significantly longer.

The battery is unlike any other. The electrodes are molten metals, and the electrolyte that conducts current between them is a molten salt. This results in an unusually resilient device that can quickly absorb large amounts of electricity. The electrodes can operate at electrical currents “tens of times higher than any [battery] that’s ever been measured,” says Donald Sadoway, a materials chemistry professor at MIT and one of the battery’s inventors. What’s more, the materials are cheap, and the design allows for simple manufacturing.

The first prototype consists of a container surrounded by insulating material. The researchers add molten raw materials: antimony on the bottom, an electrolyte such as sodium sulfide in the middle, and magnesium at the top. Since each material has a different density, they naturally remain in distinct layers, which simplifies manufacturing. The container doubles as a current collector, delivering electrons from a power supply, such as solar panels, or carrying them away to the electrical grid to supply electricity to homes and businesses.

Discharged, charging, charged: The molten active components (colored bands: blue, magnesium; green, electrolyte; yellow, antimony) of a new grid-scale storage battery are held in a container that delivers and collects electrical current (left). Here, the battery is ready to be charged, with positive magnesium and negative antimony ions dissolved in the electrolyte. As electric current flows into the cell (center), the magnesium ions in the electrolyte gain electrons and form magnesium metal, which joins the molten magnesium electrode. At the same time, the antimony ions give up electrons to form metal atoms at the opposite electrode. As metal forms, the electrolyte shrinks and the electrodes grow (right), an unusual property for batteries. During discharge, the process is reversed, and the metal atoms become ions again. ARTHUR MOUNT

As power flows into the battery, magnesium and antimony metal are generated from magnesium antimonide dissolved in the electrolyte. When the cell discharges, the metals of the two electrodes dissolve to again form magnesium antimonide, which dissolves in the electrolyte, causing the electrolyte to grow larger and the electrodes to shrink (see above).

Sadoway envisions wiring together large cells to form enormous battery packs. One big enough to meet the peak electricity demand in New York City–about 13,000 megawatts–would fill nearly 60,000 square meters. Charging it would require solar farms of unprecedented size, generating not only enough electricity to meet daytime power needs but enough excess power to charge the batteries for nighttime demand. The first systems will probably store energy produced during periods of low electricity demand for use during peak demand, thus reducing the need for new power plants and transmission lines.
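To put the New York figures in perspective, a back-of-the-envelope calculation helps. The sketch below uses only the two numbers quoted above (13,000 megawatts and roughly 60,000 square meters); treating the footprint as a single square is an assumption made purely for illustration.

```python
import math

PEAK_DEMAND_MW = 13_000   # peak demand cited for New York City
FOOTPRINT_M2 = 60_000     # "nearly 60,000 square meters"

# Power delivered per square meter of battery footprint, in kilowatts.
power_density_kw_per_m2 = PEAK_DEMAND_MW * 1_000 / FOOTPRINT_M2

# Side length if the whole pack were laid out as one square (illustrative only).
side_length_m = math.sqrt(FOOTPRINT_M2)

print(round(power_density_kw_per_m2))  # 217
print(round(side_length_m))            # 245
```

In other words, the pack would deliver a little over 200 kilowatts for every square meter it occupies, on a plot roughly 245 meters on a side.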

Many other ways of storing energy from intermittent power sources have been proposed, and some have been put to limited use. These range from stacks of lead-acid batteries to systems that pump water uphill during the day and let it flow back to spin generators at night. The liquid battery has the advantage of being cheap, long-lasting, and (unlike options such as pumping water) useful in a wide range of places. “No one had been able to get their arms around the problem of energy storage on a massive scale for the power grid,” says Sadoway. “We’re literally looking at a battery capable of storing the grid.”

Since creating the initial prototypes, the researchers have switched the metals and salts used; it wasn’t possible to dissolve magnesium antimonide in the electrolyte at high concentrations, so the first prototypes were too big to be practical. (Sadoway won’t identify the new materials but says they work along the same principles.) The team hopes that a commercial version of the battery will be available in five years.

Intelligent Software Assistant

Adam Cheyer is leading the design of powerful software that acts as a personal aide.

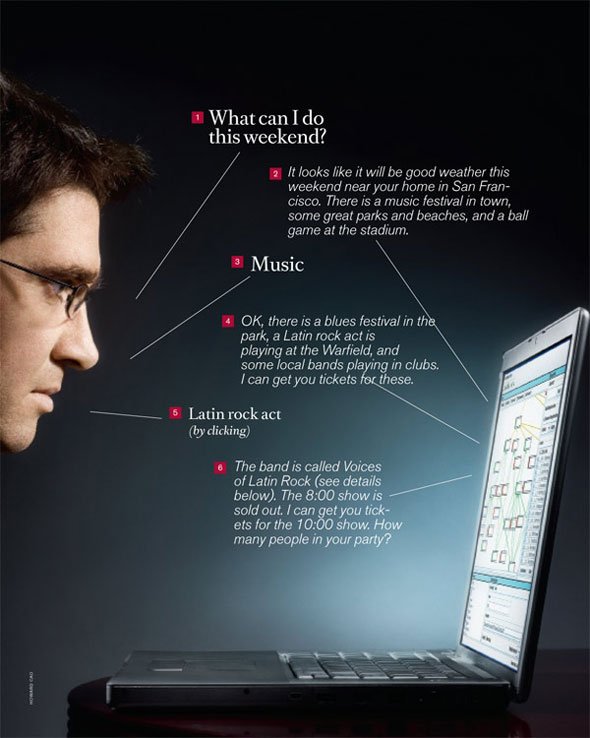

Weekend plans: Adam Cheyer participates in a conversation with the software. HOWARD CAO

Search is the gateway to the Internet for most people; for many of us, it has become second nature to distill a task into a set of keywords that will lead to the required tools and information. But Adam Cheyer, cofounder of Silicon Valley startup Siri, envisions a new way for people to interact with the services available on the Internet: a “do engine” rather than a search engine. Siri is working on virtual personal-assistant software, which would help users complete tasks rather than just collect information.

Cheyer, Siri’s vice president of engineering, says that the software takes the user’s context into account, making it highly useful and flexible. “In order to get a system that can act and reason, you need to get a system that can interact and understand,” he says.

Siri traces its origins to a military-funded artificial-intelligence project called CALO, for “cognitive assistant that learns and organizes,” that is based at the research institute SRI International. The project’s leaders–including Cheyer–combined traditionally isolated approaches to artificial intelligence to try to create a personal-assistant program that improves by interacting with its user. Cheyer, while still at SRI, took a team of engineers aside and built a sample consumer version; colleagues finally persuaded him to start a company based on the prototype. Siri licenses its core technology from SRI.

Mindful of the sometimes spectacular failure of previous attempts to create a virtual personal assistant, Siri’s founders have set their sights conservatively. The initial version, to be released this year, will be aimed at mobile users and will perform only specific types of functions, such as helping make reservations at restaurants, check flight status, or plan weekend activities. Users can type or speak commands in casual sentences, and the software deciphers their intent from the context. Siri is connected to multiple online services, so a quick interaction with it can accomplish several small tasks that would normally require visits to a number of websites. For example, a user can ask Siri to find a midpriced Chinese restaurant in a specific part of town and make a reservation there.

Recent improvements in computer processor power have been essential in bringing this level of sophistication to a consumer product, Cheyer says. Many of CALO’s abilities still can’t be crammed into such products. But the growing power of mobile phones and the increasing speed of networks make it possible to handle some of the processing at Siri’s headquarters and pipe the results back to users, allowing the software to take on tasks that just couldn’t be done before.

“Search does what search does very well, and that’s not going anywhere anytime soon,” says Dag Kittlaus, Siri’s cofounder and CEO. “[But] we believe that in five years, everyone’s going to have a virtual assistant to which they delegate a lot of the menial tasks.”

While the software will be intelligent and useful, the company has no aspiration to make it seem human. “We think that we can create an incredible experience that will help you be more efficient in your life, in solving problems and the tasks that you do,” Cheyer says. But Siri is always going to be just a tool, not a rival to human intelligence: “We’re very practical minded.”

Weekend Plans

Siri cofounder Tom Gruber volunteered Adam Cheyer to participate in a conversation with the software (shown above). Gruber explains the artificial-intelligence tasks behind its responses.

1. “The user can ask a broad question like this because Siri has information that gives clues about what the user intends. For example, the software might store data about the user’s location, schedule, and past activities. Siri can deal with open-ended questions within specific areas, such as entertainment or travel.”

2. “Siri pulls information relevant to the user’s question from a variety of Web services and tools. In this case, it checks the weather, event listings, and directories of local attractions and uses machine learning to select certain options based on the user’s past preferences. Siri can connect to various Web applications and then integrate the results into a single response.”

3. “Siri interprets this reply in the context of the existing conversation, using it to refine the user’s request.”

4. “The software offers specific suggestions based on the user’s personal preferences and its ability to categorize. Because Siri is task-oriented, rather than a search engine, it offers to buy tickets that the user selects.”

5. “By now, the conversation has narrowed enough that all the user has to do is click on his choice.”

6. “Siri compiles information about the event, such as band members, directions, and prices, and structures it in a logical way. It also handles the task of finding out what’s available and getting the tickets.”

Nanopiezoelectronics

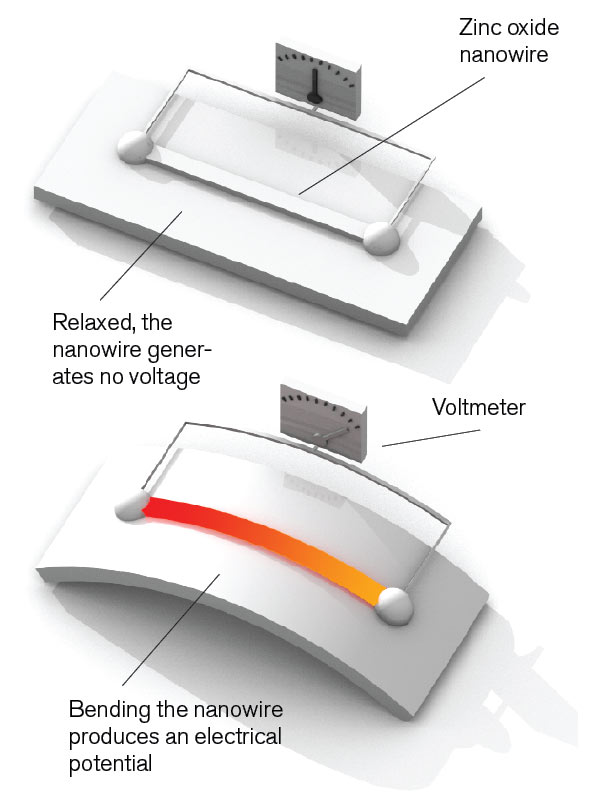

Zhong Lin Wang thinks piezoelectric nanowires could power implantable medical devices and serve as tiny sensors. BRYAN CHRISTIE DESIGN

Nanoscale sensors are exquisitely sensitive, very frugal with power, and, of course, tiny. They could be useful in detecting molecular signs of disease in the blood, minute amounts of poisonous gases in the air, and trace contaminants in food. But the batteries and integrated circuits necessary to drive these devices make them difficult to fully miniaturize. The goal of Zhong Lin Wang, a materials scientist at Georgia Tech, is to bring power to the nano world with minuscule generators that take advantage of piezoelectricity. If he succeeds, biological and chemical nano sensors will be able to power themselves.

The piezoelectric effect–in which crystalline materials under mechanical stress produce an electrical potential–has been known for more than a century. But in 2005, Wang was the first to demonstrate it at the nanoscale by bending zinc oxide nanowires with the probe of an atomic-force microscope. As the wires flex and return to their original shape, the potential produced by the zinc and oxide ions drives an electrical current. The current that Wang coaxed from the wires in his initial experiments was tiny; the electrical potential peaked at a few millivolts. But Wang rightly suspected that with enough engineering, he could design a practical nanoscale power source by harnessing the tiny vibrations all around us–sound waves, the wind, even the turbulence of blood flow over an implanted device. These subtle movements would bend nanowires, generating electricity.

Piezoelectric wires: The mechanical stress produced by bending a zinc oxide nanowire creates an electrical potential across the wire. This drives current through a circuit. The conversion of mechanical energy to electrical energy is called the piezoelectric effect. It’s harnessed in the devices on the next page, which might be made from the nanowires. BRYAN CHRISTIE DESIGN

Last November, Wang embedded zinc oxide nanowires in a layer of polymer; the resulting sheets put out 50 millivolts when flexed. This is a major step forward in powering tiny sensors.

And Wang hopes that these generators could eventually be woven into fabric; the rustling of a shirt could generate enough power to charge the batteries of devices like iPods. For now, the nanogenerator’s output is too low for that. “We need to get to 200 millivolts or more,” says Wang. He’ll get there by layering the wires, he says, though it might take five to ten more years of careful engineering.

Meanwhile, Wang has demonstrated the first components for a new class of nanoscale sensors. Nanopiezotronics, as he calls this technology, exploit the fact that zinc oxide nanowires not only exhibit the piezoelectric effect but are semiconductors. The first property lets them act as mechanical sensors, because they produce an electrical response to mechanical stress. The second means that they can be used to make the basic components of integrated circuits, including transistors and diodes. Unlike traditional electronic components, nanopiezotronics don’t need an external source of electricity. They generate their own when exposed to the same kinds of mechanical stresses that power nanogenerators.

Nanogenerator: (Left, clockwise) Arrays of zinc oxide nanowires packaged in a thin polymer film generate electrical current when flexed. The nanogenerator could be embedded in clothing and used to convert the rustling of fabric into current to power portable devices such as cell phones. Hearing aid: An array of vertically aligned piezoelectric nanowires could serve as a hearing aid. When sound waves hit them, the wires bend, generating an electrical potential. The electrical signal can then be amplified and sent directly to the auditory nerve. Signature verification: A grid of piezoelectric wires underneath a signature pad would record the pattern of pressure applied by each person signing. Combined with a database of such patterns, the system could authenticate signatures. Bone-loss monitor: A mesh of piezoelectric nanowires could monitor mechanical strain indicative of bone loss. Dangerous stress to the bone would generate an electrical current in the wires; this would cause the device to beam an alert signal outside the body. The sensor could be implanted in a minimally invasive procedure. BRYAN CHRISTIE DESIGN

Freeing nanoelectronics from outside power sources opens up all sorts of possibilities. A nanopiezotronic hearing aid integrated with a nanogenerator might use an array of nanowires, each tuned to vibrate at a different frequency over a large range of sounds. The nanowires would convert sounds into electrical signals and process them so that they could be conveyed directly to neurons in the brain. Not only would such implanted neural prosthetics be more compact and more sensitive than traditional hearing aids, but they wouldn’t need to be removed so their batteries could be changed. Nanopiezotronic sensors might also be used to detect mechanical stresses in an airplane engine; just a few nanowire components could monitor stress, process the information, and then communicate the relevant data to an airplane’s computer. Whether in the body or in the air, nano devices would at last be set loose in the world all around us.

HashCache

Vivek Pai’s new method for storing Web content could make Internet access more affordable around the world.

Closing the divide: Students surf the Web at Ghana’s Kokrobitey Institute, a conference center with an Internet connection only about four times as fast as dial-up. The link is enhanced by Princeton’s low-cost, low-power HashCache technology, which stores frequently accessed Web content. OLIVIER ASSELIN/WPN

Throughout the developing world, scarce Internet access is a more conspicuous and stubborn aspect of the digital divide than a dearth of computers. “In most places, networking is more expensive–not only in relative terms but even in absolute terms–than it is in the United States,” says Vivek Pai, a computer scientist at Princeton University. Often, even universities in poor countries can afford only low-bandwidth connections; individual users receive the equivalent of a fraction of a dial-up connection. To boost the utility of these connections, Pai and his group created HashCache, a highly efficient method of caching–that is, storing frequently accessed Web content on a local hard drive instead of using precious bandwidth to retrieve the same information repeatedly.

Despite the Web’s protean nature, a surprising amount of its content doesn’t change often or by very much. But current caching technologies require not only large hard disks to hold data but also lots of random-access memory (RAM) to store an index that contains the “address” of each piece of content on the disk. RAM is expensive relative to hard-disk capacity, and it works only when supplied with electricity–which, like bandwidth, is often both expensive and scarce in the developing world.

HashCache abolishes the index, slashing RAM and electricity requirements by roughly a factor of 10. It starts by transforming the URL of each stored Web “object”–an image, graphic, or block of text on a Web page–into a shorter number, using a bit of math called a hash function. While most other caching systems do this, they also store each hash number in a RAM-hogging table that correlates it with a hard-disk memory address. Pai’s technology can skip this step because it uses a novel hash function: the number that the function produces defines the spot on the disk where the corresponding Web object can be found. “By using the hash to directly compute the location, we can get rid of the index entirely,” Pai says.
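The idea of computing a disk location directly from a hash can be sketched in a few lines of Python. This is only an illustration of the general technique, not HashCache's actual design, whose details are not given here: the fixed slot size, the slot count, the choice of SHA-1, the on-disk record layout, and the function names are all assumptions made for the example. The point to notice is that nothing resembling a lookup table is held in memory; the URL alone determines where to seek.

```python
import hashlib

SLOT_SIZE = 4096   # bytes reserved per cached object (illustrative assumption)
NUM_SLOTS = 1024   # slots in the cache file (illustrative assumption)


def slot_offset(url: str) -> int:
    """Hash the URL straight to a byte offset on disk; no index is consulted."""
    digest = hashlib.sha1(url.encode("utf-8")).digest()
    slot = int.from_bytes(digest[:8], "big") % NUM_SLOTS
    return slot * SLOT_SIZE


def create_cache(path: str) -> None:
    """Preallocate a zero-filled file with room for every slot."""
    with open(path, "wb") as f:
        f.truncate(NUM_SLOTS * SLOT_SIZE)


def put(path: str, url: str, body: bytes) -> None:
    """Write the object into the slot its URL hashes to, replacing any occupant."""
    key = url.encode("utf-8")
    record = len(key).to_bytes(2, "big") + key + len(body).to_bytes(4, "big") + body
    if len(record) > SLOT_SIZE:
        raise ValueError("object too large for one slot in this sketch")
    with open(path, "r+b") as f:
        f.seek(slot_offset(url))
        f.write(record.ljust(SLOT_SIZE, b"\0"))


def get(path: str, url: str):
    """Return the cached bytes, or None on a miss (empty slot or a different URL)."""
    with open(path, "rb") as f:
        f.seek(slot_offset(url))
        slot = f.read(SLOT_SIZE)
    key = url.encode("utf-8")
    key_len = int.from_bytes(slot[:2], "big")
    if key_len == 0 or slot[2:2 + key_len] != key:
        return None
    start = 2 + key_len
    body_len = int.from_bytes(slot[start:start + 4], "big")
    return slot[start + 4:start + 4 + body_len]
```

In this sketch the URL is stored alongside the object so that a read can tell a genuine hit from a collision, and two URLs that hash to the same slot simply overwrite each other. A real cache would need a more careful policy for collisions and for objects larger than one slot.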

To be sure, some RAM is still needed, but only enough to run the hash function and to actually retrieve a specific Web object, Pai says. Though still at a very early stage of development, HashCache is being field-tested at the Kokrobitey Institute in Ghana and Obafemi Awolowo University in Nigeria.

The technology ends a long drought in fundamental caching advances, says Jim Gettys, a coauthor of the HTTP specification that serves as the basis of Internet communication. While it’s increasingly feasible for a school in a poor country to buy hundreds of gigabytes of hard-disk memory, Gettys says, those same schools–if they use today’s best available software–can typically afford only enough RAM to support tens of gigabytes of cached content. With HashCache, a classroom equipped with pretty much any kind of computers, even castoff PCs, could store and cheaply access one terabyte of Web data. That’s enough to store all of Wikipedia’s content, for example, or all the coursework freely available from colleges such as Rice University and MIT.

Even with new fiber-optic cables connecting East Africa to the Internet, thousands of students at some African universities share connections that have roughly the same speed as a home DSL line, says Ethan Zuckerman, a fellow at the Berkman Center for Internet and Society at Harvard University. “These universities are extremely bandwidth constrained,” he says. “All their students want to have computers but almost never have sufficient bandwidth. This innovation makes it significantly cheaper to run a very large caching server.”

Pai plans to license HashCache in a way that makes it free for nonprofits but leaves the door open to future commercialization. And that means that it could democratize Internet access in wealthy countries, too.

Software-Defined Networking

Nick McKeown believes that remotely controlling network hardware with software can bring the Internet up to speed. SUPERBROTHERS

For years, computer scientists have dreamed up ways to improve networks’ speed, reliability, energy efficiency, and security. But their schemes have generally remained lab projects, because it’s been impossible to test them on a large enough scale to see if they’d work: the routers and switches at the core of the Internet are locked down, their software the intellectual property of companies such as Cisco and Hewlett-Packard.

Frustrated by this inability to fiddle with Internet routing in the real world, Stanford computer scientist Nick McKeown and colleagues developed a standard called OpenFlow that essentially opens up the Internet to researchers, allowing them to define data flows using software–a sort of “software-defined networking.” Installing a small piece of OpenFlow firmware (software embedded in hardware) gives engineers access to flow tables, rules that tell switches and routers how to direct network traffic. Yet it protects the proprietary routing instructions that differentiate one company’s hardware from another.

With OpenFlow installed on routers and switches, researchers can use software on their computers to tap into flow tables and essentially control a network’s layout and traffic flow with the click of a mouse. This software-based access allows computer scientists to inexpensively and easily test new switching and routing protocols. “Today, security, routing, and energy management are dictated by the box, and it’s very hard to change,” says McKeown. “That’s why the infrastructure hasn’t changed for 40 years.”

Normally, when a data packet arrives at a switch, firmware checks the packet’s destination and forwards it according to predefined rules over which network operators have no control. All packets going to the same place are routed along the same path and treated the same way.

On a network running OpenFlow, computer scientists can add to, subtract from, and otherwise meddle with these rules. This means that researchers could, say, give video priority over e-mail, reducing the annoying stops and starts that sometimes plague streaming video. They could set up rules for traffic coming from or going to a certain destination, allowing them to quarantine traffic from a computer suspected of harboring viruses.
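A toy model in Python gives a feel for what editing these rules means. It is not the OpenFlow protocol or its message format; the class names, the packet fields (`app`, `src_ip`), the action strings, and the address 10.0.0.66 are all hypothetical, chosen only to mirror the two examples above. Each rule pairs a match on packet fields with an action, and the highest-priority matching rule wins; packets that match nothing fall back to ordinary forwarding.

```python
from dataclasses import dataclass


@dataclass
class FlowRule:
    priority: int   # higher number wins when several rules match
    match: dict     # header fields that must all equal the packet's values
    action: str     # what the switch should do with a matching packet


class FlowTable:
    def __init__(self, default_action: str = "forward"):
        self.rules = []
        self.default_action = default_action

    def add_rule(self, rule: FlowRule) -> None:
        """Insert a rule and keep the table ordered from highest priority down."""
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def remove_rules(self, action: str) -> None:
        """Drop every rule that carries the given action."""
        self.rules = [r for r in self.rules if r.action != action]

    def lookup(self, packet: dict) -> str:
        """Return the action of the first (highest-priority) matching rule."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return self.default_action


table = FlowTable()
# Give streaming video a high-priority queue.
table.add_rule(FlowRule(10, {"app": "video"}, "queue:high"))
# Quarantine everything from a machine suspected of carrying a virus.
table.add_rule(FlowRule(100, {"src_ip": "10.0.0.66"}, "quarantine"))
```

With these two rules in place, e-mail from an ordinary machine is forwarded as usual, video is sent to the fast queue, and anything from the suspect machine is quarantined, video included, because the quarantine rule carries the higher priority.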

And OpenFlow can be used to improve cellular networks as well. Mobile-service providers have begun to expand their networks using commodity hardware built for the Internet. But such hardware is horrible at maintaining connections when a user is moving: just think about the less-than-seamless way that a laptop’s data connection is transferred from one wireless base station to another. OpenFlow, says McKeown, offers service providers a way to try out new solutions to the mobility problem.

McKeown’s group receives funding and equipment from networking companies such as Cisco, Juniper, HP, and NEC, as well as cellular providers including T-Mobile, Ericsson, and NTT DoCoMo. Ideas tested on switches running OpenFlow could be incorporated into the firmware of new routers, or they could be added to old ones through firmware updates. McKeown expects that within the year, one or more of these companies will ship products with OpenFlow built in.