Why adding bugs to software can make it safer

When it comes to radar tracking, one of the most effective countermeasures is to release a cloud of aluminum strips or metallized plastic, known as chaff. These strips strongly reflect radar, creating thousands of false targets that swamp the radar returns and confuse anything trying to track you, such as a radar-guided missile.

Most military aircraft and warships, and many ballistic missiles, have decoy systems that include chaff. And now cybersecurity researchers are applying the same idea to software.

The idea is simple in principle. Software often contains bugs, most of which go unnoticed by its creators and by legitimate users. But malicious actors actively search for these bugs so that they can exploit them for targeted attacks. Their goal is to take over the computer or otherwise manipulate it.

But not all bugs are equal. Some cannot be exploited for malicious purposes and do nothing worse than cause a program to crash. This can be serious, but there is a large class of software, such as background microservices, that is designed to handle crashes gracefully by restarting the software while the user is none the wiser. These bugs are much less serious than ones that allow malicious control.
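The restart behaviour described here can be sketched as a minimal POSIX supervisor in C. This is an illustration under stated assumptions, not how any particular service works: it assumes a Unix-like system, the names `supervise`, `always_crashes` and `runs_cleanly` are hypothetical, and real deployments usually hand this job to an init system or container orchestrator.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical worker signature: returns 0 on a clean exit. */
typedef int (*worker_fn)(void);

/* Run `work` in a child process and start a fresh copy whenever it
 * crashes (dies from a signal) or exits with a nonzero status.
 * Returns the number of restarts performed, or -1 on fork/wait failure. */
int supervise(worker_fn work, int max_restarts)
{
    int restarts = 0;
    for (;;) {
        pid_t pid = fork();
        if (pid < 0)
            return -1;
        if (pid == 0)
            _exit(work());              /* child: run the service */

        int status = 0;
        if (waitpid(pid, &status, 0) < 0)
            return -1;

        int clean = WIFEXITED(status) && WEXITSTATUS(status) == 0;
        if (clean || restarts == max_restarts)
            return restarts;            /* finished, or give up */
        restarts++;                     /* crashed: restart it */
    }
}

/* Hypothetical demo workers. */
int always_crashes(void) { abort(); }
int runs_cleanly(void)   { return 0; }
```

Calling `supervise(always_crashes, 3)` restarts the crashing worker three times before giving up, while `supervise(runs_cleanly, 3)` performs no restarts. Running each attempt in a fresh process is what makes a crash cheap: whatever state the bug corrupted dies with the child.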

But telling them apart is not always simple. After malicious coders find bugs, they have to distinguish those that are truly dangerous from those that are relatively benign, and that process is generally difficult and time consuming.

That’s the basis for a new approach developed by Zhenghao Hu and colleagues at New York University. Why not fill ordinary code with benign bugs as a way of fooling potential attackers?

The idea is to force attackers to use up their resources finding and testing bugs that will be of no use to them. Hu and co call these decoys “chaff bugs,” in analogy to the aluminum strips used to fool radar operators.

The idea is just the latest move in an increasingly complex cat-and-mouse game pitting security experts against attackers. In recent years, various groups have developed programs that hunt through code, looking for vulnerabilities that an attacker might exploit. Security experts use this approach to find and remove these vulnerabilities before the code becomes public, while malicious attackers use the same approach to find bugs they can exploit.
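One widely used family of these bug hunters is fuzzers, which throw large numbers of slightly mutated inputs at a program and watch for crashes. The sketch below is a deliberately tiny random-mutation fuzzer in C; the target, its planted bug, and the names `toy_target` and `fuzz` are hypothetical, and real fuzzers add coverage feedback, corpus management and much more.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Toy target with a planted bug. It stands in for code like
 *     uint8_t buf[8]; memcpy(buf, in + 1, in[0]);
 * which overflows whenever the length byte exceeds 8. To keep the demo
 * free of undefined behaviour, it only reports (returns 1) when that
 * copy would have overflowed. */
int toy_target(const uint8_t *in, size_t len)
{
    return len > 0 && in[0] > 8;
}

/* xorshift32: a tiny deterministic PRNG so runs are reproducible. */
static uint32_t xorshift32(uint32_t *state)
{
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Repeatedly copy `seed` (len >= 1 bytes), overwrite one random byte,
 * and run the target. Returns the attempt number of the first "crash"
 * (the crashing input is left in `out`), or -1 if none is found within
 * `budget` attempts. */
long fuzz(const uint8_t *seed, size_t len, uint8_t *out, long budget)
{
    uint32_t state = 0x12345678u;       /* fixed seed */
    for (long i = 0; i < budget; i++) {
        memcpy(out, seed, len);
        uint32_t r = xorshift32(&state);
        out[r % len] = (uint8_t)(r >> 8);   /* mutate one byte */
        if (toy_target(out, len))
            return i;
    }
    return -1;
}
```

Starting from the well-formed seed `{4, 'a', 'b', 'c', 'd'}`, the fuzzer soon stumbles on a length byte above 8. At scale the hard part is what comes next: sorting the many crashes found into those that matter and those that do not.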

But for security researchers, these programs are hard to develop and test, because testing them requires software that contains vulnerabilities in the first place. So researchers have developed another tool that automatically adds bugs to software so that they can later be “discovered” by the vulnerability-hunting program.

It turns out that adding bugs is by no means straightforward. Random changes to the code tend to make it useless, rather than introducing interesting anomalies. Instead, the process involves running the code with different inputs and monitoring what happens to these inputs as the code progresses.
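Monitoring inputs in this way is generally known as dynamic taint analysis. A minimal sketch, assuming a toy program simulated in C: every value carries a bitmask recording which input bytes influenced it, operations merge those masks, and any mask that reaches a branch is logged. All names are hypothetical, and real tools instrument the compiled program rather than relying on hand-written wrappers like these.

```c
#include <stdint.h>

/* A value plus its taint mask: bit i set means input byte i influenced
 * it. Supports inputs of up to 32 bytes. */
typedef struct {
    uint32_t val;
    uint32_t taint;
} tval;

/* Taint source: reading input byte i yields a value tainted by byte i. */
tval read_byte(const uint8_t *in, int i)
{
    return (tval){ in[i], 1u << i };
}

/* Propagation: a result depends on every byte either operand did. */
tval t_add(tval a, tval b)
{
    return (tval){ a.val + b.val, a.taint | b.taint };
}

/* Every input byte that has influenced a branch during this run. */
static uint32_t branch_taint;

int t_branch(tval cond)
{
    branch_taint |= cond.taint;
    return cond.val != 0;
}

typedef struct {
    uint32_t branched;   /* bytes that steered a decision */
    uint32_t carried;    /* bytes the carried-along data depends on */
} trace_result;

/* Hypothetical program under analysis: it branches on bytes 0-1 and
 * merely carries bytes 2-5 along as data. */
trace_result trace_toy_program(const uint8_t in[6])
{
    branch_taint = 0;
    tval header = t_add(read_byte(in, 0), read_byte(in, 1));
    if (t_branch(header)) {
        /* ...real program logic would run here... */
    }
    tval data = read_byte(in, 2);
    for (int i = 3; i < 6; i++)
        data = t_add(data, read_byte(in, i));
    return (trace_result){ branch_taint, data.taint };
}
```

For a six-byte input, the trace reports that bytes 0 and 1 reached a branch (mask `0x03`) while bytes 2 to 5 were only carried along (mask `0x3C`), which is the distinction the bug-injection process relies on.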

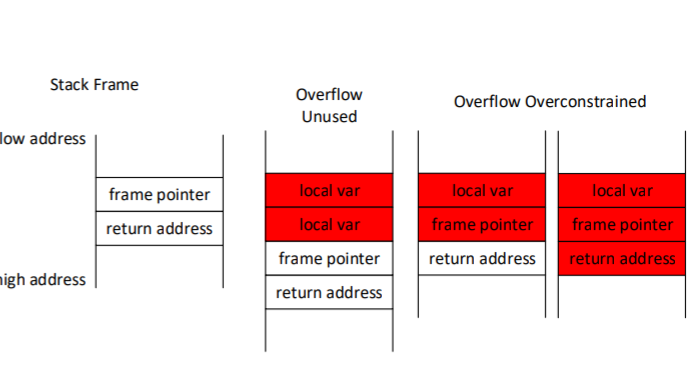

This process looks for points in the program where an input is no longer used to make any future decisions. Because altering such dead input does not change the path the program takes, it can be manipulated to corrupt or overflow memory while the rest of the program behaves normally.

The bug-injection tool records where these dead zones are so that they can be used later to trigger the bugs it adds.
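Recording these dead zones can be sketched as follows, assuming the analysis already knows, for one traced run, which input bytes ever influenced a branch and which bytes the data in scope at a given point derives from. The `site_log` structure, `record_if_dead` and the site labels are hypothetical illustrations, not the researchers' data format.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_SITES 16

/* One recorded dead zone: a program point plus the input bytes that are
 * available there but never steered a branch during the run. */
typedef struct {
    const char *where;      /* hypothetical label for the program point */
    uint32_t dead_bytes;    /* bitmask of input bytes usable for a bug */
} dead_site;

typedef struct {
    dead_site sites[MAX_SITES];
    size_t count;
} site_log;

/* `available`: taint mask of the data in scope at this point.
 * `branched`:  every input byte that influenced a branch in the run.
 * Records the site if any available byte is dead and the log has room.
 * Returns the recorded dead-byte mask, or 0 if nothing was recorded. */
uint32_t record_if_dead(site_log *slog, const char *where,
                        uint32_t available, uint32_t branched)
{
    uint32_t dead = available & ~branched;
    if (dead == 0 || slog->count >= MAX_SITES)
        return 0;
    slog->sites[slog->count].where = where;
    slog->sites[slog->count].dead_bytes = dead;
    slog->count++;
    return dead;
}
```

With data derived from bytes 0 to 5 in scope (`0x3F`) and only bytes 0 and 1 ever reaching a branch (`0x03`), the site is logged with dead bytes `0x3C`; a point where every available byte has steered a decision is skipped. A single traced run cannot show that a byte is dead for every possible input, so a sketch like this yields only candidate sites.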

It turns out that these potential bugs are common in code written in languages such as C and C++, which do not automatically check memory accesses, such as whether a write stays within the bounds of a buffer.

Hu and co simply use this approach to add memory-corruption bugs throughout the code. In ordinary circumstances, these bugs are benign. But if found by a malicious actor, they can be exploited only to crash the program, not for anything more sinister. That’s why they act as chaff.
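The toy model below, written in C, illustrates how an injected memory-corruption bug can do real damage yet still stop short of handing over control. It is a sketch of two simple ideas for keeping such bugs harmless, overwriting only data the program never uses again and pinning the value written so an attacker cannot choose it; it is not the authors' code. The frame layout, the `TRIGGER` constant and the function names are hypothetical, and the "stack" is a plain byte array so the example itself contains no undefined behaviour.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical frame layout: [BUF, UNUSED) is an 8-byte buffer,
 * [UNUSED, RET) is a slot the program never reads again, and
 * [RET, FRAME) stands in for a saved return address. */
enum { BUF = 0, UNUSED = 8, RET = 16, FRAME = 24 };

/* Hypothetical magic value that normal inputs never contain. */
#define TRIGGER 0x6c617661u

/* Unused-data idea: when the trigger matches, the injected bug lifts
 * the 8-byte limit, but the copy is still capped at RET, so the extra
 * bytes land only in the slot nobody reads. */
void chaff_unused(uint8_t frame[FRAME], const uint8_t *src, size_t n,
                  uint32_t trigger)
{
    size_t limit = (trigger == TRIGGER) ? RET : UNUSED;
    memcpy(frame + BUF, src, n < limit ? n : limit);
}

/* Pinned-value idea: when the trigger matches, the bug overwrites the
 * return-address slot, but with (trigger - TRIGGER), which the check
 * forces to zero, so the attacker cannot choose what gets written. */
void chaff_constrained(uint8_t frame[FRAME], uint32_t trigger)
{
    if (trigger == TRIGGER)
        memset(frame + RET, (int)(uint8_t)(trigger - TRIGGER), FRAME - RET);
}
```

With the trigger supplied, `chaff_unused` lets a 24-byte copy spill into the unused slot but stops before `RET`, and `chaff_constrained` does overwrite `RET`, but only with zeros. Either way an attacker sees genuine memory corruption, yet neither bug lets them choose where execution goes next.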

“Attackers who attempt to find and exploit bugs in software will, with high probability, find an intentionally placed non-exploitable bug and waste precious resources in trying to build a working exploit,” say Hu and co.

The team go on to show that the triage tools currently used to separate exploitable bugs from benign ones are fooled by this approach. “We show that the functionality of the software is not harmed and demonstrate that our bugs look exploitable to current triage tools,” they say.

That’s an interesting approach that has the potential to significantly sidetrack malicious attackers. “We believe that chaff bugs can serve as an effective deterrent against both human attackers and automated Cyber Reasoning Systems,” say Hu and co.

But it also raises some interesting questions. For example, there is no actual proof that triaging bugs to find the ones that are exploitable is necessarily hard and time consuming. It is theoretically possible that somebody, somewhere has found a quick way to do it.

If it turns out that there is a way to easily distinguish chaff bugs from exploitable ones, then this approach becomes less valuable. Indeed, Hu and co do not attempt to hide or disguise their bugs. “This means that they currently contain many artifacts that attackers could use to identify and ignore them,” they say.

There is also very little variation in these injected bugs. “[This] could allow an attacker to identify patterns in the bugs we produce and exclude those that match the pattern,” they say.

But there is significant potential ahead. The idea of adding bugs rather than removing them is a deliciously malevolent approach to fighting cybercrime, and one that should open up some interesting avenues of future research.

Ref: arxiv.org/abs/1808.00659 : Chaff Bugs: Deterring Attackers by Making Software Buggier
