The productivity paradox

To become wealthier, a country needs strong growth in productivity—the output of goods or services from given inputs of labor and capital. For most people, in theory at least, higher productivity means the expectation of rising wages and abundant job opportunities.

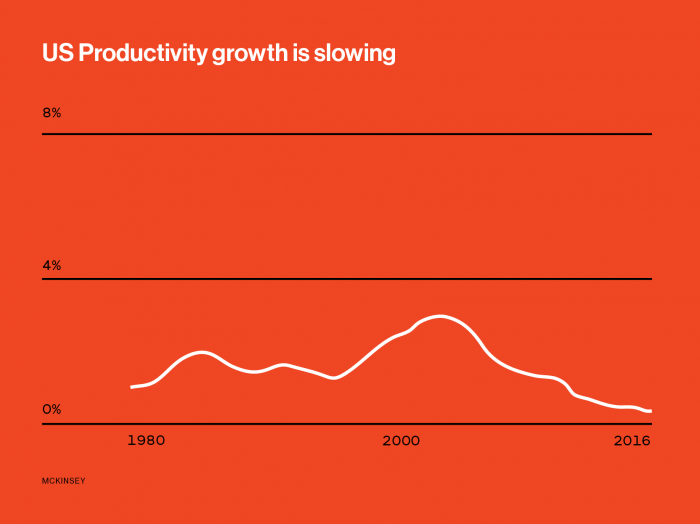

Productivity growth in most of the world’s rich countries has been dismal since around 2004. Especially vexing is the sluggish pace of what economists call total factor productivity, the part of output growth that added labor and capital cannot explain and that is generally attributed to innovation and technology. In a time of Facebook, smartphones, self-driving cars, and computers that can beat a person at just about any board game, how can the key economic measure of technological progress be so pathetic? Economists have tagged this the “productivity paradox.”

Some argue that it’s because today’s technologies are not nearly as impressive as we think. The leading proponent of that view, Northwestern University economist Robert Gordon, contends that compared with breakthroughs like indoor plumbing and the electric motor, today’s advances are small and of limited economic benefit. Others think productivity is in fact increasing but we simply don’t know how to measure things like the value delivered by Google and Facebook, particularly when many of the benefits are “free.”

Both views probably misconstrue what is actually going on. It’s likely that many new technologies are used to simply replace workers and not to create new tasks and occupations. What’s more, the technologies that could have the most impact are not widely used. Driverless vehicles, for instance, are still not on most roads. Robots are rather dumb and remain rare outside manufacturing. And AI is mysterious for most companies.

We’ve seen this before. In 1987 MIT economist Robert Solow, who won that year’s Nobel Prize for defining the role of innovation in economic growth, quipped to the New York Times that “you can see the computer age everywhere but in the productivity statistics.” But within a few years that had changed, as productivity climbed throughout the mid- and late 1990s.

What’s happening now may be a “replay of the late ’80s,” says Erik Brynjolfsson, another MIT economist. Breakthroughs in machine learning and image recognition are “eye-popping”; the delay in implementing them only reflects how much change that will entail. “It means swapping in AI and rethinking your business, and it might mean whole new business models,” he says.

In this view, AI is what economic historians consider a “general-purpose technology.” These are inventions like the steam engine, electricity, and the internal-combustion engine. Eventually they transformed how we lived and worked. But businesses had to be reinvented, and other complementary technologies had to be created to exploit the breakthroughs. That took decades.

Illustrating the potential of AI as a general-purpose technology, Scott Stern of MIT’s Sloan School of Management describes it as a “method for a new method of invention.” An AI algorithm can comb through vast amounts of data, finding hidden patterns and predicting possibilities for, say, a better drug or a material for more efficient solar cells. It has, he says, “the potential to transform how we do innovation.”

But he also warns against expecting such a change to show up in macroeconomic measurements anytime soon. “If I tell you we’re having an innovation explosion, check back with me in 2050 and I’ll show you the impacts,” he says. General-purpose technologies, he adds, “take a lifetime to reorganize around.”

Even as these technologies appear, huge gains in productivity aren’t guaranteed, says John Van Reenen, a British economist at Sloan. Europe, he says, missed out on the dramatic 1990s productivity boost from the IT revolution, largely because European companies, unlike US-based ones, lacked the flexibility to adapt.
