US conservatives spread tweets by Russian trolls over 30 times more often than liberals

Toward the end of last year, the US Congress released a list of online accounts associated with the Internet Research Agency, a Russian troll farm that systematically attempted to interfere with the 2016 presidential election. The list included over 2,700 troll accounts that had spread fake news and malicious rumors designed to manipulate opinion in the US.

These accounts have long since been deactivated, but their unveiling raises important questions. What kind of information did they post, whom did it influence, and how did the misinformation spread so widely?

Today we get an answer thanks to the work of Adam Badawy and colleagues at the University of Southern California in Los Angeles, who have studied the messages created by trolls and followed their passage through cyberspace. They’ve even analyzed the political leanings reflected in the messages and the accounts that spread them.

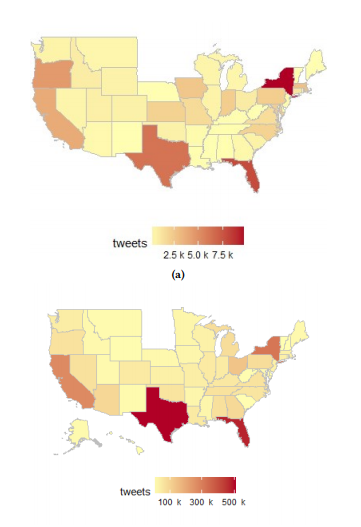

They say that the most retweets of Russian misinformation came from two US states: Tennessee and Texas. They also conclude that conservatives were significantly more likely than liberals to retweet the messages.

The team’s method is relatively straightforward. In the month before the election in 2016, the researchers took the temperature of the Twittersphere by downloading over 43 million election-related tweets generated by about 5.7 million users.

They worked out the political ideology of each of these accounts using a technique known as label propagation. For this, they created a map of the retweet network and assigned each user a political ideology based on that of the user's nearest neighbors.

They then repeated this procedure to see how the ideologies propagated through the network, until the system settled into a steady state.

The process is based on the idea that people seek out others like themselves. So liberals are more likely to be linked to other liberals, and likewise for conservatives. The process gives good results so long as it is seeded with labels from users with known political affiliations.
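To make the idea concrete, here is a minimal sketch of label propagation in Python. It is not the team's code: the function name `propagate_labels`, the toy network, and the simple majority-vote update rule are all assumptions chosen for illustration, and the paper's actual variant may differ in its details.

```python
from collections import Counter

def propagate_labels(edges, seeds, max_iters=100):
    """Spread seed labels over an undirected graph by neighbor majority vote.

    edges: iterable of (user, user) links (hypothetical retweet ties).
    seeds: dict mapping users with a known ideology to that label.
    """
    # Build the adjacency map of the network.
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)

    labels = dict(seeds)
    for _ in range(max_iters):
        updated = dict(labels)
        for node in neighbors:
            if node in seeds:
                continue  # seed labels stay fixed
            # Count the labels currently held by this user's neighbors.
            votes = Counter(labels[n] for n in neighbors[node] if n in labels)
            if not votes:
                continue  # no labeled neighbors yet
            best = max(votes.values())
            winners = sorted(lbl for lbl, count in votes.items() if count == best)
            # On a tie, keep the current label if it is among the winners;
            # otherwise pick the alphabetically first winner (an arbitrary rule).
            if labels.get(node) not in winners:
                updated[node] = winners[0]
        if updated == labels:
            break  # steady state: a full pass changed nothing
        labels = updated
    return labels

# Toy network: two tight triangles joined by a single link (c, d).
edges = [("a", "b"), ("b", "c"), ("a", "c"),
         ("d", "e"), ("e", "f"), ("d", "f"),
         ("c", "d")]
seeds = {"a": "liberal", "f": "conservative"}
result = propagate_labels(edges, seeds)
```

In this toy network, each triangle ends up sharing the label of its seed user, which is the "people seek out others like themselves" assumption at work. The single link between the two groups is outvoted on both sides.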

“We were able to successfully determine the political ideology of most of the users using label propagation on the retweet network with precision and recall exceeding 90 percent,” say Badawy and co.
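For readers unfamiliar with the two measures: precision is the share of users labeled with an ideology who really hold it, and recall is the share of users who hold it that the method found. The short sketch below computes both for one label. The function name `precision_recall` and the six-user sample are invented for illustration and are not data from the study.

```python
def precision_recall(true_labels, predicted_labels, positive):
    """Return (precision, recall) for one label treated as the positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against division by zero when the label is never predicted or never present.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall

# Invented sample: known ideologies versus the labels a classifier assigned.
known = ["C", "C", "C", "L", "L", "C"]
assigned = ["C", "C", "L", "L", "C", "C"]
precision, recall = precision_recall(known, assigned, positive="C")
```

Here three of the four users labeled "C" really are conservative, and three of the four true conservatives were found, so both measures come out at 0.75.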

The team then studied the tweets created by the 2,700 Russian trolls and analyzed how they spread. Of these, 221 troll accounts appeared in the database that Badawy and co gathered. And 85 of these troll accounts produced original tweets—861 of them.

Some of these trolls created messages with a liberal bias, but “text analysis on the content shared by trolls reveals that they had a mostly conservative, pro-Trump agenda,” say Badawy and co.

These messages were widely retweeted. Russian trolls themselves retweeted the messages over 6,000 times, but in total the messages were retweeted more than 80,000 times by more than 40,000 different users.

So who were the retweeters? Badawy and co say the answer is clear. “Conservatives retweeted Russian trolls about 31 times more often than liberals and produced 36 times more tweets,” they conclude. “Additionally, most retweets of troll content originated from two Southern states: Tennessee and Texas.”

“Although an ideologically broad swath of Twitter users were exposed to Russian Trolls in the period leading up to the 2016 U.S. Presidential election, it was mainly conservatives who helped amplify their message,” the team says. “These accounts helped amplify the misinformation produced by trolls to manipulate public opinion during the period leading up to the 2016 U.S. Presidential election.”

Of course, the work raises important questions for the future. Chief among these is how this kind of activity can be spotted and stopped in real time. This kind of work will help, but it is by no means clear how trolls can be effectively nullified.

Ref: arxiv.org/abs/1802.04291 : Analyzing the Digital Traces of Political Manipulation: The 2016 Russian Interference Twitter Campaign
