Inside the Moonshot Effort to Finally Figure Out the Brain

AI is only loosely modeled on the brain. So what if you wanted to do it right? You’d need to do what has been impossible until now: map what actually happens in neurons and nerve fibers.

"Here’s the problem with artificial intelligence today," says David Cox. Yes, it has gotten astonishingly good, from near-perfect facial recognition to driverless cars and world-champion Go-playing machines. And it’s true that some AI applications don’t even have to be programmed anymore: they’re based on architectures that allow them to learn from experience.

Yet there is still something clumsy and brute-force about it, says Cox, a neuroscientist at Harvard. “To build a dog detector, you need to show the program thousands of things that are dogs and thousands that aren’t dogs,” he says. “My daughter only had to see one dog”—and has happily pointed out puppies ever since. And the knowledge that today’s AI does manage to extract from all that data can be oddly fragile. Add some artful static to an image—noise that a human wouldn’t even notice—and the computer might just mistake a dog for a dumpster. That’s not good if people are using facial recognition for, say, security on smartphones (see “Is AI Riding a One-Trick Pony?”).

To overcome such limitations, Cox and dozens of other neuroscientists and machine-learning experts joined forces last year for the Machine Intelligence from Cortical Networks (MICrONS) initiative: a $100 million effort to reverse-engineer the brain. It will be the neuroscience equivalent of a moonshot, says Jacob Vogelstein, who conceived and launched MICrONS when he was a program officer for the Intelligence Advanced Research Projects Agency, the U.S. intelligence community’s research arm. (He is now at the venture capital firm Camden Partners in Baltimore.) MICrONS researchers are attempting to chart the function and structure of every detail in a small piece of rodent cortex.

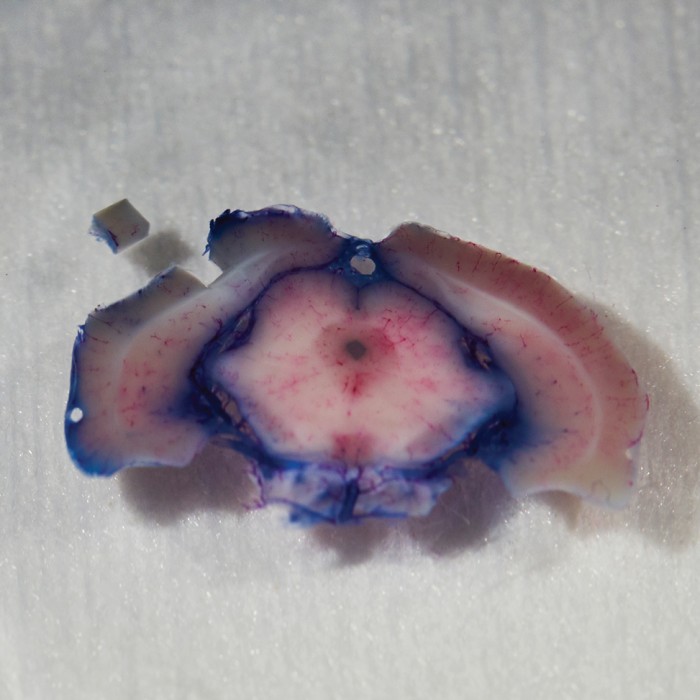

It’s a testament to the brain’s complexity that a moonshot is needed to map even this tiny piece of cortex, a cube measuring one millimeter on a side—the size of a coarse grain of sand. But this cube is thousands of times bigger than any chunk of brain anyone has tried to detail. It will contain roughly 100,000 neurons and something like a billion synapses, the junctions that allow nerve impulses to leap from one neuron to the next.
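As a rough sanity check on those figures, the arithmetic below divides the quoted synapse count by the quoted neuron count. It uses only the article's rounded numbers; the function and constant names are illustrative.

```python
# Rounded figures quoted above for the one-cubic-millimeter sample.
NEURONS = 100_000
SYNAPSES = 1_000_000_000


def synapses_per_neuron(synapses: int, neurons: int) -> float:
    """Average number of synapses per neuron in the sample."""
    return synapses / neurons


if __name__ == "__main__":
    average = synapses_per_neuron(SYNAPSES, NEURONS)
    print(f"{average:,.0f} synapses per neuron on average")
```

On these rounded numbers, each neuron takes part in something like 10,000 synapses on average.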

It’s an ambition that leaves other neuroscientists awestruck. “I think what they are doing is heroic,” says Eve Marder, who has spent her entire career studying much smaller neural circuits at Brandeis University. “It’s among the most exciting things happening in neuroscience,” says Konrad Kording, who does computational modeling of the brain at the University of Pennsylvania.

The ultimate payoff will be the neural secrets mined from the project’s data—principles that should form what Vogelstein calls “the computational building blocks for the next generation of AI.” After all, he says, today’s neural networks are based on a decades-old architecture and a fairly simplistic notion of how the brain works. Essentially, these systems spread knowledge across thousands of densely interconnected “nodes,” analogous to the brain’s neurons. The systems improve their performance by adjusting the strength of the connections. But in most computer neural networks the signals always cascade forward, from one set of nodes to the next. The real brain is full of feedback: for every bundle of nerve fibers conveying signals from one region to the next, there is an equal or greater number of fibers coming back the other way. But why? Are those feedback fibers the secret to one-shot learning and so many other aspects of the brain’s immense power? Is something else going on?

MICrONS should provide at least some of the answers, says Princeton University neuroscientist Sebastian Seung, who is playing a key role in the mapping effort. In fact, he says, “I don’t think we can answer these questions without a project like this.”

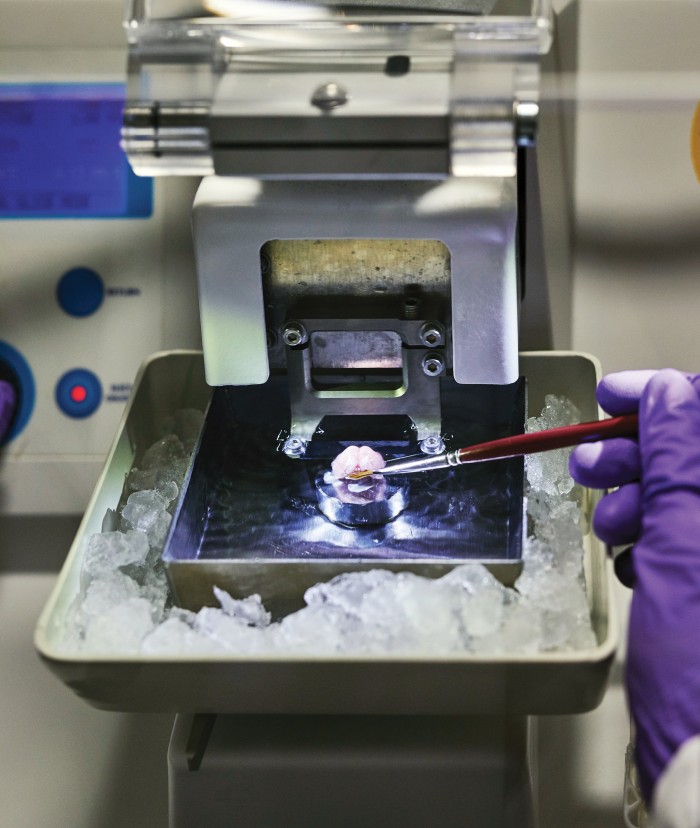

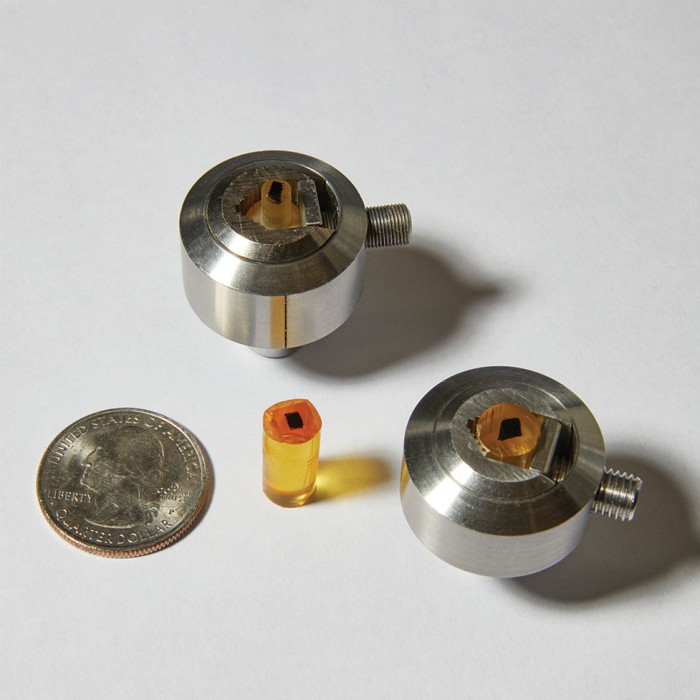

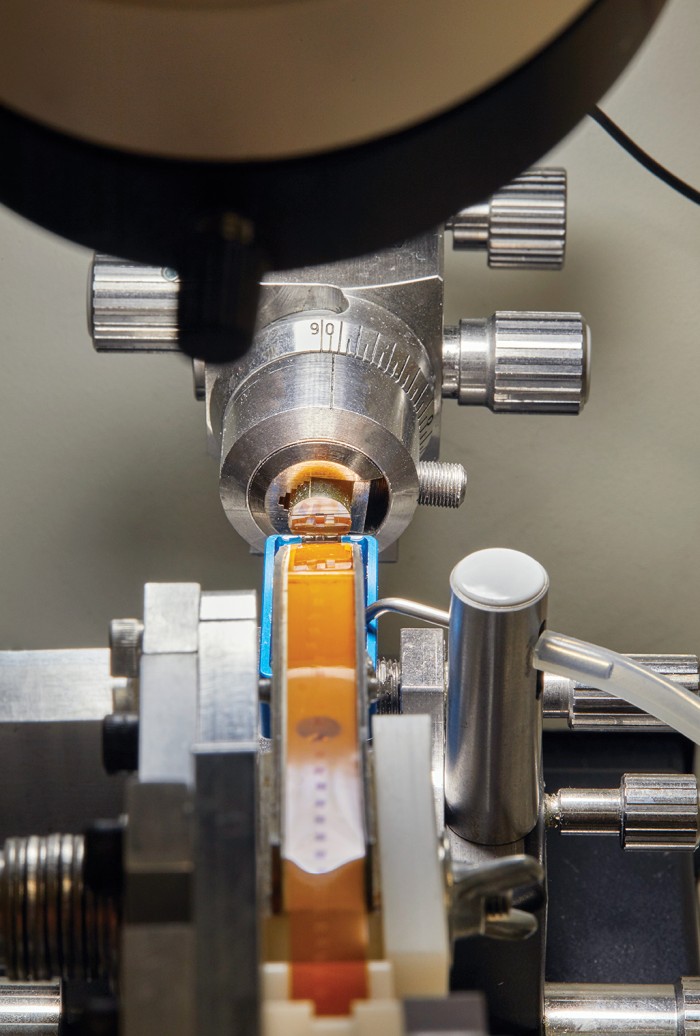

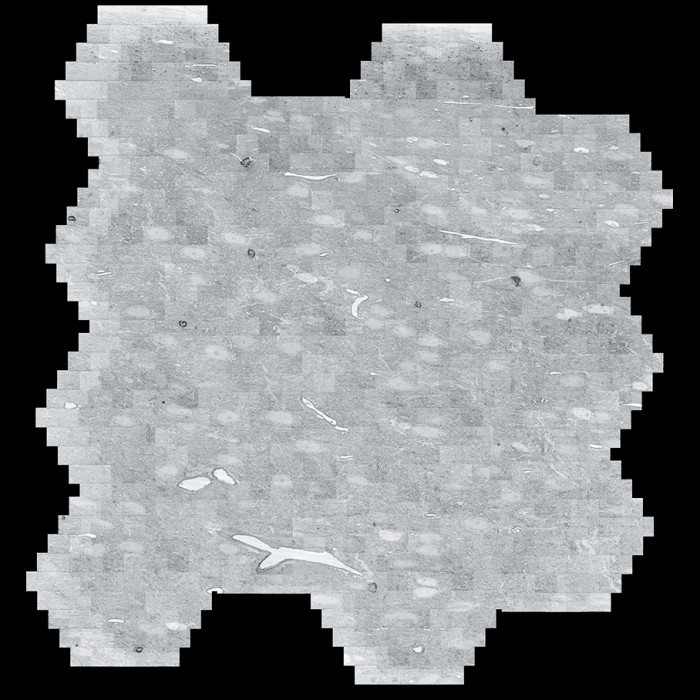

Image 1: The small cube in the upper left is the portion of the brain that will be mapped. Image 2: That piece of the brain is encased in acrylic in preparation for being sliced extremely thin.

Zooming in

The MICrONS teams—one led by Cox, one based at Rice University and the Baylor College of Medicine, and a third at Carnegie Mellon—are each pursuing something that is remarkably comprehensive: a reconstruction of all the cells in a cubic millimeter of a rat’s brain, plus a wiring diagram—a “connectome”—showing how every cell is connected to every other cell, and data showing exactly which situations make neurons fire and influence other neurons.

The first step is to look into the rats’ brains and figure out what neurons in that cubic millimeter are actually doing. When the animal is given a specific visual stimulus, such as a line oriented a certain way, which neurons suddenly start firing off impulses, and which neighbors respond?

As recently as a decade ago, capturing that kind of data ranged from difficult to impossible: “The tools just never existed,” Vogelstein says. It’s true that researchers could slide ultrathin wires into the brain and get beautiful recordings of electrical activity in individual neurons. But they couldn’t record from more than a few dozen at a time because the cells are packed so tightly together. Researchers could also map the overall geography of neural activity by putting humans and other animals in MRI machines. But researchers couldn’t monitor individual neurons that way: the spatial resolution was about a millimeter at best.

What broke that impasse was the development of techniques for making neurons light up when they fire in a living brain. To do it, scientists typically seed the neurons with fluorescent proteins that glow in the presence of calcium ions, which surge in abundance whenever a cell fires. The proteins can be inserted into a rodent’s brain chemically, carried in by a benign virus, or even encoded into the neurons’ genome. The fluorescence can then be triggered in several ways—perhaps most usefully, by a pair of lasers that pump infrared light into the rat through a window set into its skull. The infrared frequencies allow the photons to penetrate the comparatively opaque neural tissue without damaging anything, before getting absorbed by the fluorescent proteins. The proteins, in turn, combine the energy from two of the infrared photons and release it as a single visible-light photon that can be seen under an ordinary microscope as the animal looks at something or performs any number of other actions.

Andreas Tolias, who leads part of the team at Baylor, says this is “revolutionary” because “you can record from every single neuron, even those that are right next to one another.”

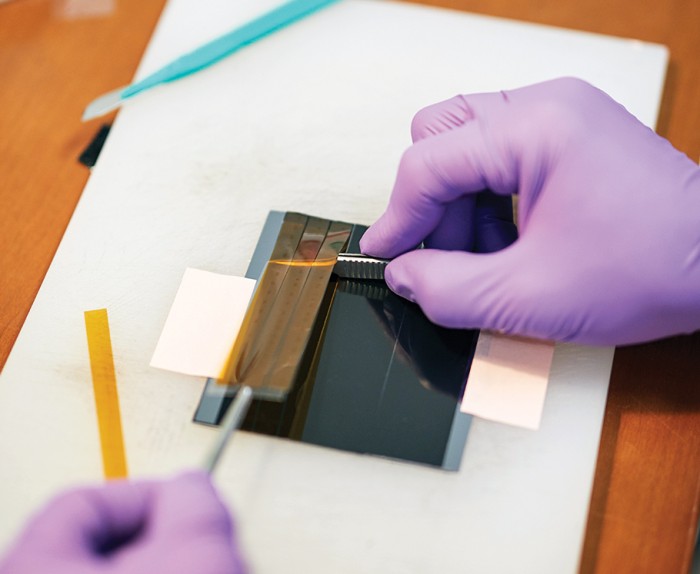

Once a team in Cox’s lab has mapped a rat’s neural activity, the animal is killed and its brain is infused with the heavy metal osmium. Then a team headed by Harvard biologist Jeff Lichtman cuts the brain into slices and figures out exactly how the neurons are organized and connected.

That process starts in a basement lab with a desktop machine that works like a delicatessen salami slicer. A small metal plate rises and falls, methodically carving away the tip of what seems to be an amber-colored crayon and adhering the slices to a conveyor belt made of plastic tape. The difference is that the “salami” is actually a tube of hard resin that encases and supports the fragile brain tissue, the moving plate contains an impossibly sharp diamond blade, and the slices are about 30 nanometers thick.

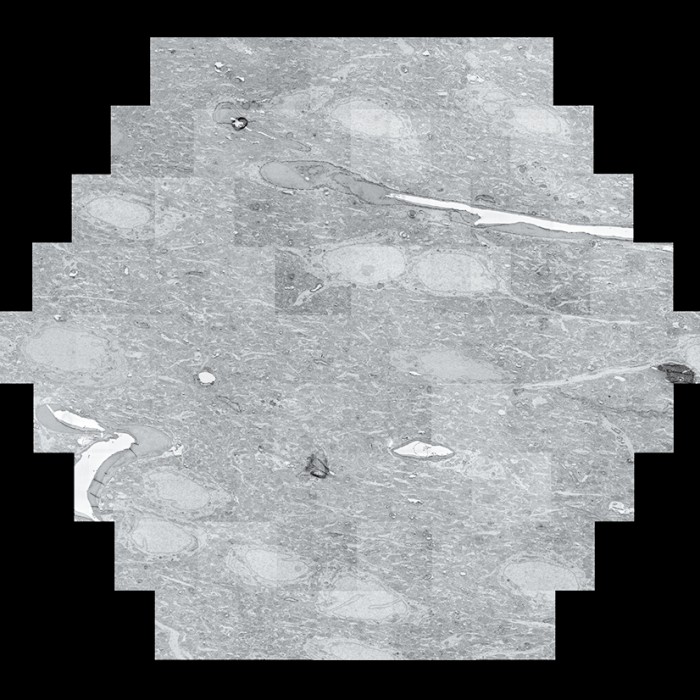

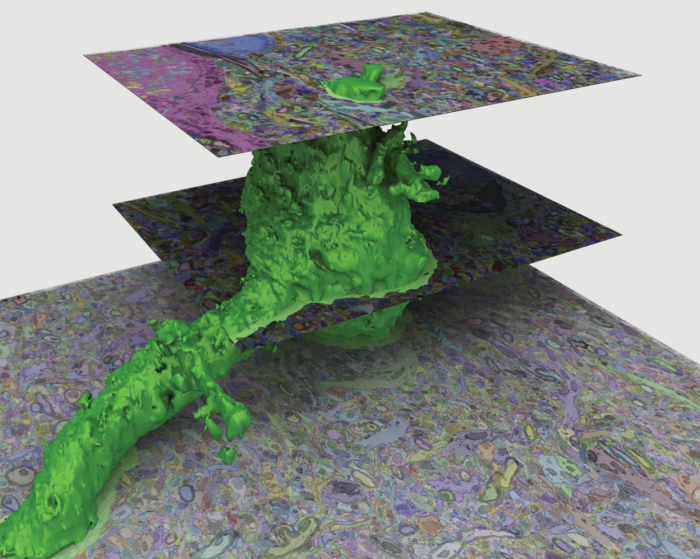

Scans of brain slices are stitched together by an algorithm.

A “multibeam field of view,” made of 61 images taken by the electron microscope, is seen at left; 14 multibeam fields of view are combined at right.

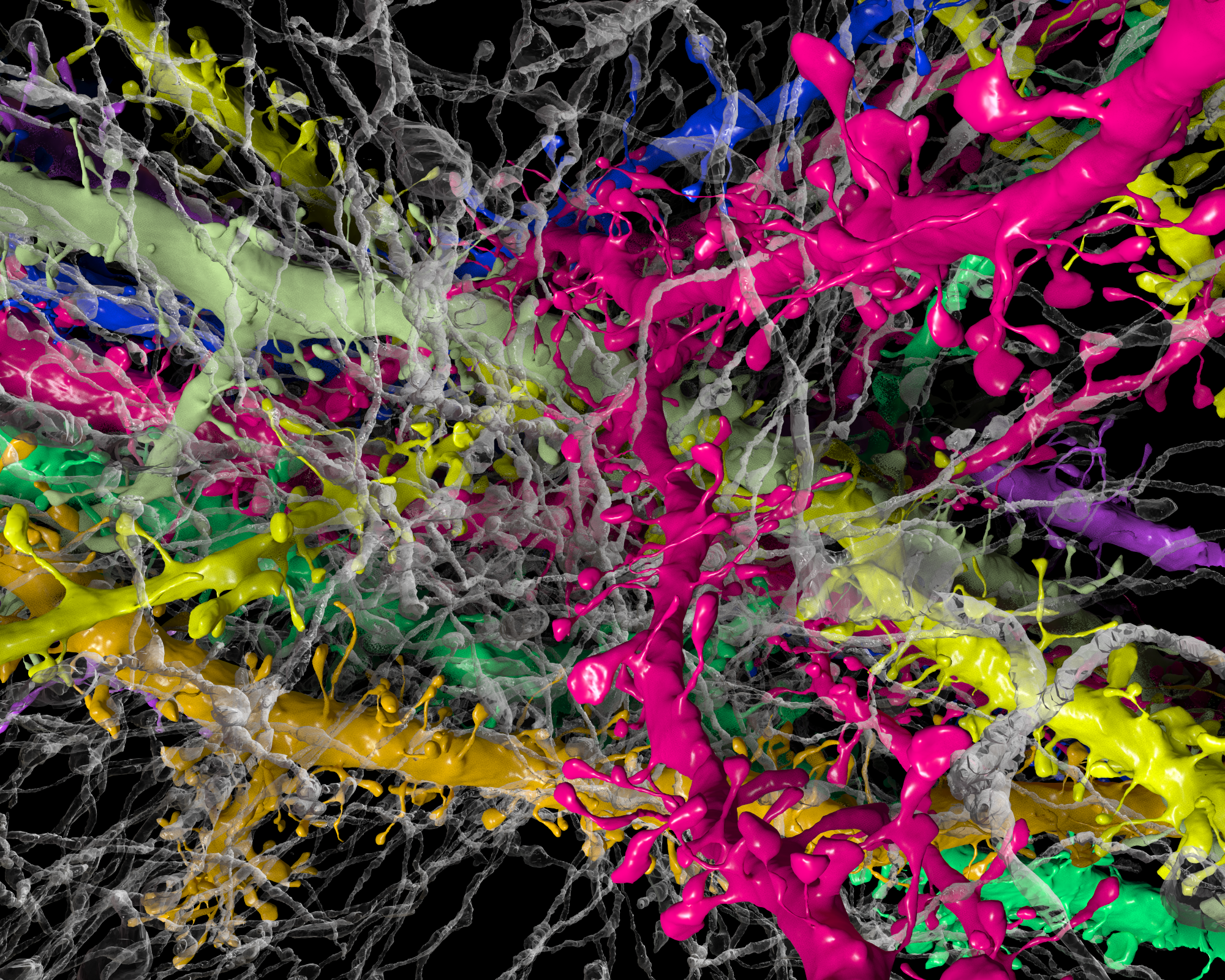

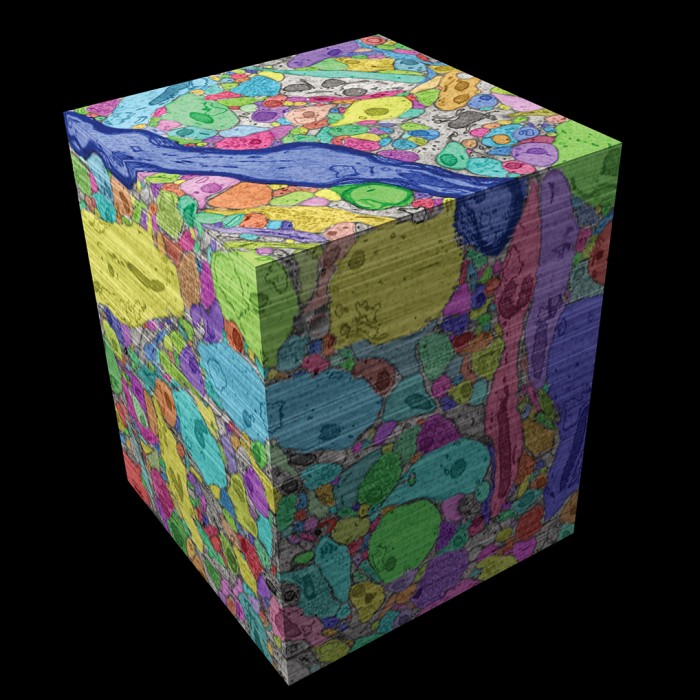

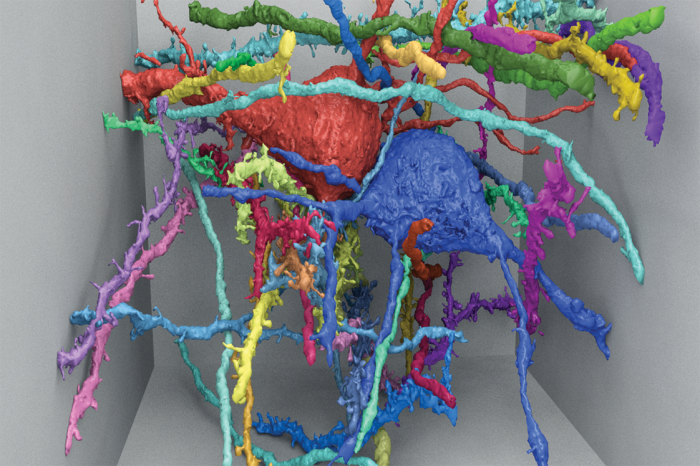

Scans are assembled into a cube and colorized.

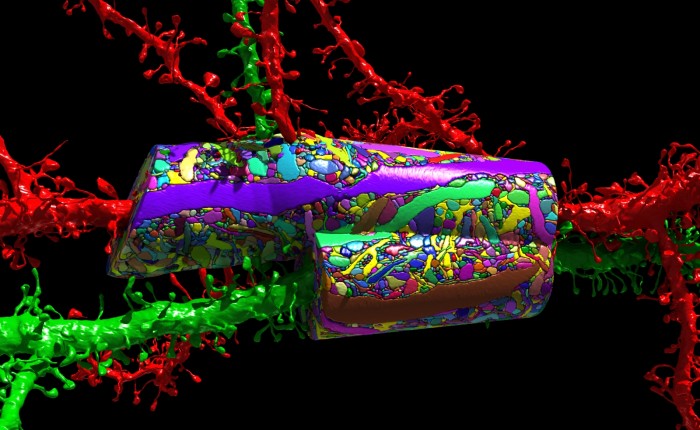

Next, at another lab down the hall, lengths of tape containing several brain slices each are mounted on silicon wafers and placed inside what looks like a large industrial refrigerator. The device is an electron microscope: it uses 61 electron beams to scan 61 patches of brain tissue simultaneously at a resolution of four nanometers.

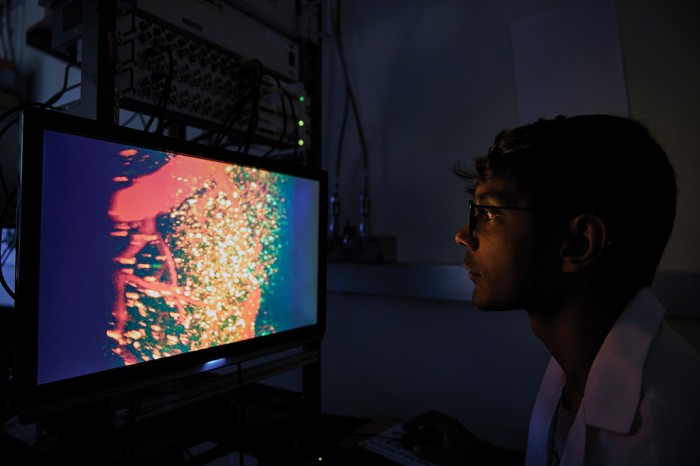

Each wafer takes about 26 hours to scan. Monitors next to the microscope show the resulting images as they build up in awe-inspiring detail—cell membranes, mitochondria, neurotransmitter-filled vesicles crowding at the synapses. It’s like zooming in on a fractal: the closer you look, the more complexity you see.
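To get a feel for the volume of imagery involved, the sketch below combines the figures quoted above: a one-millimeter cube, 30-nanometer slices, and four-nanometer resolution. The one-byte-per-pixel storage size is an assumption, not a number from the project, and overlap between image tiles is ignored, so treat the result as an order-of-magnitude estimate only.

```python
CUBE_SIDE_NM = 1_000_000   # one millimeter, expressed in nanometers
SLICE_THICKNESS_NM = 30    # slice thickness quoted in the article
PIXEL_SIZE_NM = 4          # scan resolution quoted in the article
BYTES_PER_PIXEL = 1        # ASSUMPTION: 8-bit grayscale, not stated in the article


def scan_estimate(side_nm, slice_nm, pixel_nm, bytes_per_pixel):
    """Return (slice count, pixels per slice, total bytes) for a cubic sample."""
    slices = side_nm // slice_nm
    pixels_per_side = side_nm // pixel_nm
    pixels_per_slice = pixels_per_side ** 2
    total_bytes = slices * pixels_per_slice * bytes_per_pixel
    return slices, pixels_per_slice, total_bytes


if __name__ == "__main__":
    slices, pixels_per_slice, total_bytes = scan_estimate(
        CUBE_SIDE_NM, SLICE_THICKNESS_NM, PIXEL_SIZE_NM, BYTES_PER_PIXEL
    )
    print(f"slices:           {slices:,}")
    print(f"pixels per slice: {pixels_per_slice:,}")
    print(f"raw image data:   {total_bytes / 1e15:.2f} petabytes")
```

Under these assumptions the cube yields roughly 33,000 slices and on the order of two petabytes of raw pixels.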

Slicing is hardly the end of the story. Even as the scans come pouring out of the microscope—“You’re sort of making a movie where each slice is deeper,” says Lichtman—they are forwarded to a team led by Harvard computer scientist Hanspeter Pfister. “Our role is to take the images and extract as much information as we can,” says Pfister.

That means reconstructing all those three-dimensional neurons—with all their organelles, synapses, and other features—from a stack of 2-D slices. Humans could do it with paper and pencil, but that would be hopelessly slow, says Pfister. So he and his team have trained neural networks to track the real neurons. “They perform a lot better than all the other methods we’ve used,” he says.

Each neuron, no matter its size, puts out a forest of tendrils known as dendrites, and each has another long, thin fiber called an axon for transmitting nerve impulses over long distances—completely across the brain, in extreme cases, or even all the way down the spinal cord. But by mapping a cubic millimeter as MICrONS is doing, researchers can follow most of these fibers from beginning to end and thus see a complete neural circuit. “I think we’ll discover things,” Pfister says. “Probably structures we never suspected, and completely new insights into the wiring.”

The power of anticipation

Among the questions the MICrONS teams hope to begin answering: What are the brain’s algorithms? How do all those neural circuits actually work? And in particular, what is all that feedback doing?

Many of today’s AI applications don’t use feedback. Electronic signals in most neural networks cascade from one layer of nodes to the next, but generally not backward. (Don’t be thrown by the term “backpropagation,” which is a way to train neural networks.) That’s not a hard-and-fast rule: “recurrent” neural networks do have connections that go backward, which helps them deal with inputs that change with time. But none of them use feedback on anything like the brain’s scale. In one well-studied part of the visual cortex, says Tai Sing Lee at Carnegie Mellon, “only 5 to 10 percent of the synapses are listening to input from the eyes.” The rest are listening to feedback from higher levels in the brain.
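The distinction can be made concrete with a toy sketch in plain Python. It is not drawn from any MICrONS code; the function names and the tiny one-node weights are hypothetical. They are chosen only to show that a feedforward pass sees just the current input, while a recurrent pass carries earlier inputs forward through a backward connection.

```python
import math


def layer(weights, inputs):
    """One layer of nodes: weighted sum of the inputs, squashed by tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def feedforward(x, w1, w2):
    """Signals cascade strictly forward: input -> hidden -> output."""
    return layer(w2, layer(w1, x))


def recurrent(sequence, w_in, w_back):
    """At each step the previous hidden state is fed back in with the new input."""
    hidden = [0.0] * len(w_in)
    for x in sequence:
        hidden = [
            math.tanh(
                sum(w * xi for w, xi in zip(row_in, x))
                + sum(w * h for w, h in zip(row_back, hidden))
            )
            for row_in, row_back in zip(w_in, w_back)
        ]
    return hidden


if __name__ == "__main__":
    w_in, w_back = [[1.0]], [[0.5]]  # hypothetical one-node weights
    # Both sequences end with the same input, 0.0, but differ one step earlier.
    print("feedforward on last input:", feedforward([0.0], w_in, [[1.0]]))
    print("recurrent after [1, 0]:   ", recurrent([[1.0], [0.0]], w_in, w_back))
    print("recurrent after [0, 0]:   ", recurrent([[0.0], [0.0]], w_in, w_back))
```

The feedforward output depends only on the final input, whereas the two recurrent runs end in different states because the backward connection preserves a trace of what came before.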

There are two broad theories about what the feedback is for, says Cox, and “one is the notion that the brain is constantly trying to predict its own inputs.” While the sensory cortex is processing this frame of the movie, so to speak, the higher levels of the brain are trying to anticipate the next frame, and passing their best guesses back down through the feedback fibers.

This is the only way the brain can deal with a fast-moving environment. “Neurons are really slow,” Cox says. “It can take up to 170 to 200 milliseconds to go from light hitting the retina through all the stages of processing up to the level of conscious perception. In that time, Serena Williams’s tennis serve travels nine meters.” So anyone who manages to return that serve must be swinging her racket on the basis of prediction.

And if you’re constantly trying to predict the future, Cox says, “then when the real future arrives, you can adjust to make your next prediction better.” That meshes well with the second major theory being explored: that the brain’s feedback connections are there to guide learning. Indeed, computer simulations show that a struggle for improvement forces any system to build better and better models of the world. For example, Cox says, “you have to figure out how a face will appear if it turns.” And that, he says, may turn out to be a critical piece of the one-shot-learning puzzle.
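The predict-then-correct loop Cox describes can be illustrated with a minimal sketch. It is a toy under stated assumptions, not a model of cortex and not anything taken from MICrONS: a single hypothetical weight `w` predicts the next value of a signal from the current one, and each prediction error nudges the weight (a standard delta-rule update). The signal, learning rate, and names are all made up for illustration.

```python
def learn_to_predict(signal, learning_rate=0.1, epochs=50):
    """Fit next = w * current by correcting w after every prediction error."""
    w = 0.0
    for _ in range(epochs):
        for current, actual_next in zip(signal, signal[1:]):
            predicted_next = w * current          # best guess at the future
            error = actual_next - predicted_next  # the real future arrives
            w += learning_rate * error * current  # adjust the next prediction
    return w


if __name__ == "__main__":
    # Hypothetical signal: each value is 0.9 times the one before it.
    signal = [0.9 ** t for t in range(20)]
    print(f"learned weight: {learn_to_predict(signal):.4f}")
```

Because every value in this made-up signal is 0.9 times its predecessor, the weight settles close to 0.9: the system has built a small model of its input purely by comparing guesses with what actually happened.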

“When my daughter first saw a dog,” says Cox, “she didn’t have to learn about how shadows work, or how light bounces off surfaces.” She had already built up a rich reservoir of experience about such things, just from living in the world. “So when she got to something like ‘That’s a dog,’” he says, “she could add that information to a huge body of knowledge.”

If these ideas about the brain’s feedback are correct, they could show up in MICrONS’s detailed map of a brain’s form and function. The map could demonstrate what tricks the neural circuitry uses to implement prediction and learning. Eventually, new AI applications could mimic that process.

Even then, however, we will remain far from answering all the questions about the brain. Knowing neural circuitry won’t teach us everything. There are forms of cell-to-cell communication that don’t go through the synapses, including some performed by hormones and neurotransmitters floating in the spaces between the neurons. There is also the issue of scale. As big a leap as MICrONS may be, it is still just looking at a tiny piece of cortex for clues about what’s relevant to computation. And the cortex is just the thin outer layer of the brain. Critical command-and-control functions are also carried out by deep-brain structures such as the thalamus and the basal ganglia.

The good news is that MICrONS is already paving the way for future projects that map larger sections of the brain.

Much of the $100 million, Vogelstein says, is being spent on data collection technologies that won’t have to be invented again. At the same time, MICrONS teams are developing faster scanning techniques, including one that eliminates the need to slice tissue. Teams at Harvard, MIT, and the Cold Spring Harbor Laboratory have devised a way to uniquely label each neuron with a “bar-coding” scheme and then view the cells in great detail by saturating them with a special gel that very gently inflates them to dozens or hundreds of times their normal size.

“So the first cubic millimeter will be hard to collect,” Vogelstein says, “but the next will be much easier.”

M. Mitchell Waldrop is a freelance writer in Washington, D.C. He is the author of Complexity and The Dream Machine and was formerly an editor at Nature.
