Big Questions Around Facebook’s Suicide-Prevention Tools

It’s been almost a year since the general rollout of Facebook Live, which lets you broadcast live video to followers. In that time several people have killed themselves while broadcasting, including a 14-year-old Florida girl who hanged herself in a bathroom in a foster home in January.

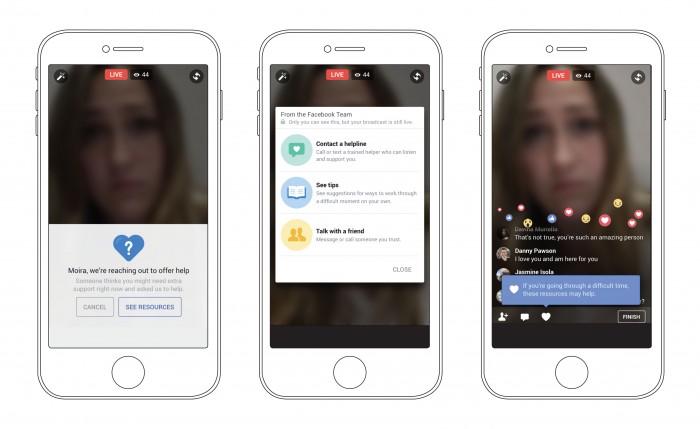

Facebook wants to avoid these tragedies, and on Wednesday it rolled out a handful of tools that it thinks may help. One lets viewers report a friend whose Facebook Live broadcast suggests they may be veering toward self-injury or a suicide attempt. The broadcaster will then see a message, while still shooting the live video, that offers resources such as contacting a help line or talking with a friend. These are the same kinds of tools Facebook already offers users when a friend on the site reports one of their status updates for similar concerns.

Can such an intervention help, though? Joe Franklin, an assistant professor at Florida State University who runs the school’s Technology and Psychopathology Lab, says it’s a move in the right direction, but there is little strong scientific evidence that such interventions work.

“I don’t think it’s a bad thing and I think we should study it,” he says. “But I would immediately have questions—I would not assume it would be effective.”

Willa Casstevens, an associate professor at North Carolina State University whose work includes studying suicide prevention, hopes such interventions might be well received by younger people in particular, since they’re used to interacting via social media.

“In the moment, a caring hand reached out can move mountains and work miracles,” she says. “The question would then be if they would still be in a position to take advantage of it.”

Facebook also said Wednesday that it’s testing the use of pattern recognition to figure out when a post may contain suicidal thoughts. A flagged post can then be reviewed by the site’s community operations team, which can decide whether to reach out to the person who wrote it.

Franklin, whose research includes studying how machine learning can mine health records to estimate a person’s risk of attempting suicide, sees this kind of method as the future of spotting suicidal behavior, particularly because it scales so easily (and, he thinks, can be more accurate than reports from other people). But in his work he has found that people often use words like “suicide” or phrases like “kill myself” colloquially, and algorithms struggle to distinguish that usage from cases where someone really means it.

Still, he says, “it’s a great step forward in terms of trying to identify people who are thinking about or considering suicide.”

