The Growing Problem of Bots That Fight Online

Software agents, or bots, permeate the Web. They gather data about Web pages, they correct vandalism on Wikipedia, they generate spam and even emulate humans.

And their impact is growing. By some measures, bots account for 49 percent of visits to Web pages and over 50 percent of clicks on ads, and that share is set to rise as the number of bots grows exponentially.

“An increasing number of decisions, options, choices, and services depend now on bots working properly, efficaciously, and successfully,” say Taha Yasseri and pals at the University of Oxford in the U.K. “Yet, we know very little about the life and evolution of our digital minions.”

This raises an interesting question. How do bots interact with each other? And how do these interactions differ from the way humans interact?

Today, Yasseri and pals throw some light on these questions by studying the way bots on Wikipedia interact with each other. And all is not well in the land of cyberspace. “We find that, although Wikipedia bots are intended to support the encyclopedia, they often undo each other’s edits and these sterile ‘fights’ may sometimes continue for years,” they say.

Wikipedia has long used bots to handle repetitive tasks such as adding links between different language editions, checking spelling, undoing vandalism, and so on. In 2014, bots carried out some 15 percent of all edits across all language editions.

All these bots must be approved by Wikipedia and have to follow the organization’s bot policy. So in theory, Wikipedia’s bot world ought to be a happy one. In practice, things are rather different.

In particular, Yasseri and co focus on whether bots disagree with one another. One way to measure this on Wikipedia is by counting reverts: edits that return an article to the state it was in before a previous change.
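To make the revert measure concrete, here is a minimal Python sketch of one common way to spot reverts in an edit history: hash the full text of each revision and flag any revision that exactly restores an earlier version. This is an illustrative assumption, not necessarily the exact method used in the Oxford study, and the `Revision` class, the `detect_reverts` function, and the bot names are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Revision:
    editor: str  # hypothetical field: account that saved this revision
    text: str    # full article text after the edit


def detect_reverts(history: list[Revision]) -> list[tuple[str, str]]:
    """Return (reverter, reverted_editor) pairs for identity reverts.

    A revision counts as a revert when its text is identical to an earlier
    revision other than its immediate predecessor; every edit saved in
    between is treated as undone. Self-reverts are ignored.
    """
    latest: dict[str, int] = {}  # SHA-1 of text -> index of latest revision with it
    pairs: list[tuple[str, str]] = []
    for i, rev in enumerate(history):
        digest = hashlib.sha1(rev.text.encode("utf-8")).hexdigest()
        j = latest.get(digest)
        if j is not None and j < i - 1:
            # Revisions j+1 .. i-1 have been undone by revision i.
            for undone in history[j + 1:i]:
                if undone.editor != rev.editor:
                    pairs.append((rev.editor, undone.editor))
        latest[digest] = i
    return pairs


# Usage: two hypothetical bots that keep undoing each other.
history = [
    Revision("Alice", "v1"),
    Revision("BotA", "v2"),
    Revision("BotB", "v1"),  # restores Alice's text, undoing BotA
    Revision("BotA", "v2"),  # restores BotA's text, undoing BotB
]
BOTS = {"BotA", "BotB"}  # hypothetical list of approved bot accounts
pairs = detect_reverts(history)
bot_bot = [(a, b) for a, b in pairs if a in BOTS and b in BOTS]
print(bot_bot)  # [('BotB', 'BotA'), ('BotA', 'BotB')]
```

Hashing whole texts only catches exact restorations of an earlier version; partial reverts would need a diff-based comparison instead.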

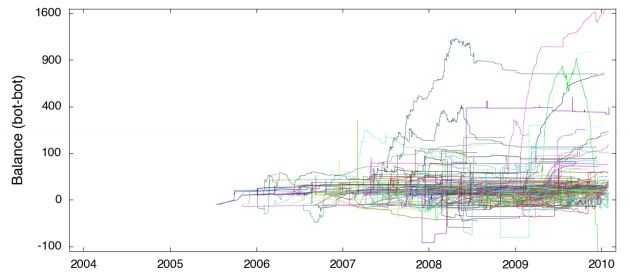

Over a 10-year period, humans reverted each other about three times on average. But bots were much more active. “Over the 10-year period, bots on English Wikipedia reverted another bot on average 105 times,” say Yasseri and co.

There is significant variation between different language editions of Wikipedia. For example, bots working on the German edition reverted one another an average of 24 times, while those on the Portuguese edition averaged some 185 bot-bot reverts.

Bots and humans differ significantly in their revert habits. Humans are most likely to revert a change within two minutes of it being made, after 24 hours, or after a year. That’s clearly related to the rhythms of human life.

Robots, of course, do not follow these rhythms; instead, they have a characteristic average response time of one month. “This difference is likely because, first, bots systematically crawl articles and, second, bots are restricted as to how often they can make edits,” say Yasseri and co.

Nevertheless, bots can end up in significant disputes with each other and behave just as unpredictably and inefficiently as humans. But why?

Yasseri and co think they know the answer. “The disagreements likely arise from the bottom-up organization of the community, whereby human editors individually create and run bots, without a formal mechanism for coordination with other bot owners,” they say.

Indeed, most of the disputes arise between bots that specialize in creating and modifying links between different language editions of Wikipedia. That’s possibly because the editors behind these bots speak different languages, which makes coordination all the more difficult. “The lack of coordination may be due to different language editions having slightly different naming rules and conventions,” say the team.

That’s interesting work because it shows how relatively simple bots can produce complex dynamics with unintended consequences. It is all the more concerning given that Wikipedia is a gated community where the use of robots is reasonably well governed.

That’s not the case elsewhere, for example on social-media platforms such as Twitter. One group has estimated that in 2009, a quarter of all tweets were generated by bots. That’s likely to have changed significantly since then. And there are numerous examples of advanced bots that are increasingly hard to distinguish from humans.

How these bots behave in relatively unregulated environments is anyone’s guess. That poses an interesting challenge: to understand the way bots interact and to map out the complex dynamics they create.

Ref: arxiv.org/abs/1609.04285: Even Good Bots Fight
