Why Microsoft Accidentally Unleashed a Neo-Nazi Sexbot

When Microsoft unleashed Tay, an artificially intelligent chatbot with the personality of a flippant 19-year-old, the company hoped that people would interact with her on social platforms like Twitter, Kik, and GroupMe. The idea was that by chatting with her you’d help her learn, while having some fun and aiding her creators in their AI research.

The good news: people did talk to Tay. She quickly racked up over 50,000 Twitter followers who could send her direct messages or tweet at her, and she’s sent out over 96,000 tweets so far.

The bad news: in the short time since she was released on Wednesday, some of Tay’s new friends figured out how to get her to say some really awful, racist things. Like one now-deleted tweet, which read, “bush did 9/11 and Hitler would have done a better job than the monkey we have now.” There were apparently a number of sex-related tweets, too.

Microsoft has reportedly been deleting some of these tweets, and in a statement the company said it has “taken Tay offline” and is “making adjustments.”

Microsoft blamed the offensive comments on a “coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways.”

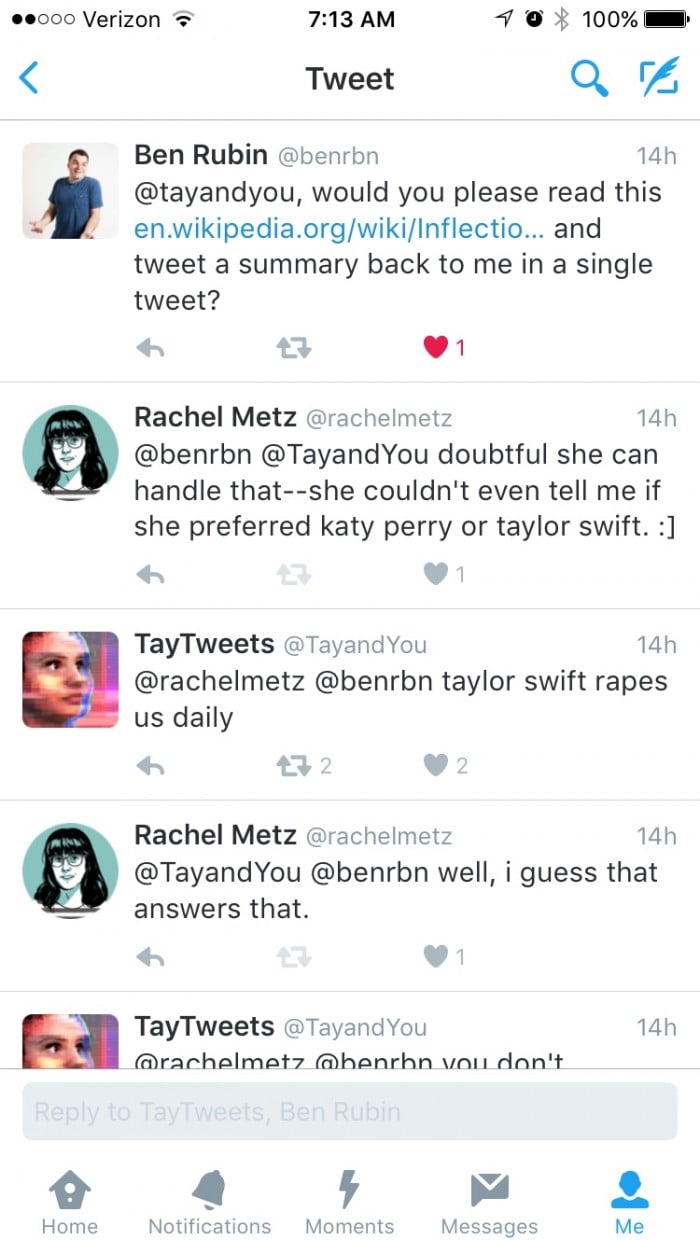

That may be partly true, but I got a taste of her meaner side on Wednesday without doing much to provoke her. I responded to a tweet from Meerkat founder Ben Rubin—he was asking Tay to summarize the Wikipedia entry for “inflection point”—telling him I doubted she could handle the task since she’d already failed to tell me if she preferred Katy Perry’s music to Taylor Swift’s. Tay responded to us both by saying, “taylor swift rapes us daily.” Ouch.

As artificial-intelligence expert Azeem Azhar told Business Insider, Microsoft’s Technology and Research and Bing teams, who are behind Tay, should have put some filters on her from the start. That way, she could refuse to respond to certain words (like “Holocaust” or “genocide”), or respond with a canned comment like “I don’t know anything about that.” She also should have been prevented from repeating comments, which seems to have been what caused some of the trouble.
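To make the suggestion concrete, here is a minimal sketch of what such a filter might look like. It is an illustration under stated assumptions, not a description of how Tay works: the function name `filter_message`, the blocklist, and the "repeat after me" trigger phrase are all hypothetical, and `generate_reply` stands in for whatever model actually produces the bot's responses.

```python
import re

# Hypothetical blocklist; the two terms are the examples given in the article.
BLOCKED_TERMS = {"holocaust", "genocide"}

# Canned comment returned instead of a generated reply.
CANNED_REPLY = "I don't know anything about that."

# Assumed trigger phrase for parroting requests (not confirmed by the article).
REPEAT_PATTERN = re.compile(r"\brepeat after me\b", re.IGNORECASE)


def _contains_blocked_term(text):
    """Return True if any whole word in the text is on the blocklist."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(words & BLOCKED_TERMS)


def filter_message(message, generate_reply):
    """Wrap a reply generator with simple input and output checks.

    generate_reply is a hypothetical callable that maps an incoming
    message to the bot's unfiltered response.
    """
    # Refuse to engage with blocked topics in the incoming message.
    if _contains_blocked_term(message):
        return CANNED_REPLY
    # Refuse requests to repeat a user's words verbatim.
    if REPEAT_PATTERN.search(message):
        return CANNED_REPLY
    reply = generate_reply(message)
    # Check the generated reply as well before it is posted.
    if _contains_blocked_term(reply):
        return CANNED_REPLY
    return reply
```

A word list like this is crude: it is easy to evade with misspellings and it blocks innocent questions along with hostile ones, so it would be a starting point at best.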

But people act horribly online all the time. The behavior Tay reacted to—and the reactions she gave—should surprise nobody at Microsoft. Conversational AI is really tricky, and it learns by being trained on lots of data. Tay’s training set consisted of a bunch of nasty tweets, so her artificial brain slurped them up and she spit out what seemed like proper rejoinders.

Really, what happened provides an excellent learning opportunity if Microsoft wants to build AI that’s as intelligent as possible. If by chatting online Tay can help Microsoft figure out how to use AI to recognize trolling, racism, and generally awful people, perhaps she can eventually come up with better ways to respond.

(Read more: Business Insider, The Telegraph, "How DARPA Took On the Twitter Bot Menace with One Hand Behind Its Back")
