Robot Art Raises Questions about Human Creativity

In July 2013, an up-and-coming artist had an exhibition at the Galerie Oberkampf in Paris. It lasted for a week, was attended by the public, received press coverage, and featured works produced over a number of years, including some created on the spot in the gallery. Altogether, it was a fairly typical art-world event. The only unusual feature was that the artist in question was a computer program known as “The Painting Fool.”

Even that was not such a novelty. Art made with the aid of artificial intelligence has been with us for a surprisingly long time. Since 1973, Harold Cohen—a painter, a professor at the University of California, San Diego, and a onetime representative of Britain at the Venice Biennale—has been collaborating with a program called AARON. AARON has been able to make pictures autonomously for decades; even in the late 1980s Cohen was able to joke that he was the only artist who would ever be able to have a posthumous exhibition of new works created entirely after his own death.

The unresolved questions about machine art are, first, what its potential is and, second, whether—irrespective of the quality of the work produced—it can truly be described as “creative” or “imaginative.” These are problems, profound and fascinating, that take us deep into the mysteries of human art-making.

The Painting Fool is the brainchild of Simon Colton, a professor of computational creativity at Goldsmiths College, London, who has suggested that if programs are to count as creative, they’ll have to pass something different from the Turing test. He suggests that rather than simply being able to converse in a convincingly human manner, as Turing proposed, an artificially intelligent artist would have to behave in ways that were “skillful,” “appreciative,” and “imaginative.”

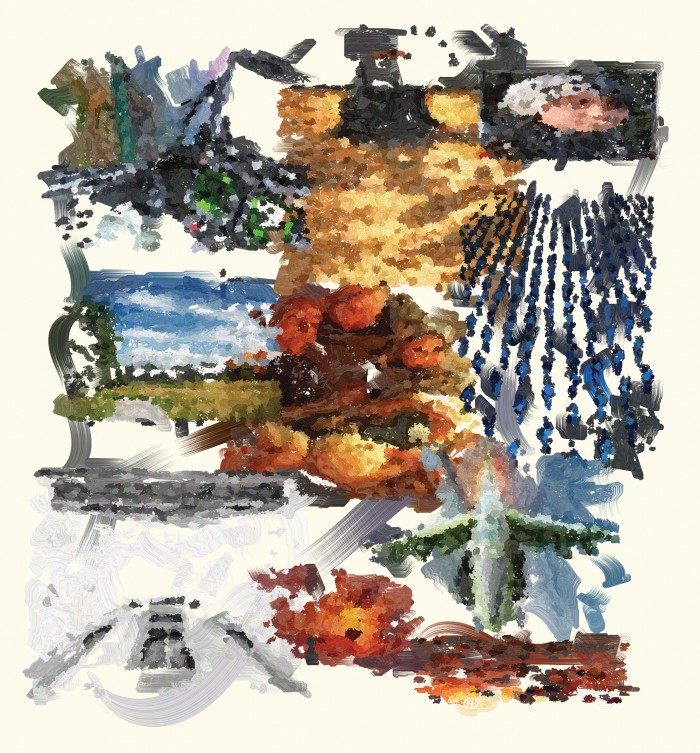

In the opening image, one of AARON’s compositions.

Thus far, the Painting Fool—described as “an aspiring painter” on its website—has made progress on all three fronts. By “appreciative,” Colton means responsive to emotions. An early work consisted of a mosaic of images in a medium resembling watercolor. The program scanned an article in the Guardian on the war in Afghanistan, extracted keywords such as “NATO,” “troops,” and “British,” and found images connected with them. Then it put these together to make a composite image reflecting the “content and mood” of the newspaper article.

The software had been designed to duplicate various painting and drawing media, to select the appropriate one, and also to evaluate the results. “This is a miserable failure,” it commented about one effort. A skeptic might doubt whether this and other statements are anything more than skillful digital ventriloquism. But the writing of poetry is mentioned on the website as a current project—so the Painting Fool apparently aspires to be an author as well as a painter.

In the Paris exhibition, the sitters for portraits faced not a human artist but a laptop, on whose screen the “painting” took place. The Painting Fool executed pictures of visitors in different moods, responding to emotional keywords derived from 10 articles read—once again—in the Guardian. If the tally of negativity was too great (always a danger with news coverage), Colton programmed the software to enter a state of despondency in which it refused to paint at all, a virtual equivalent of the artistic temperament.
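
The mood rule described above can be illustrated with a toy sketch. The article does not say how the Painting Fool actually scores its reading, so the word list, the threshold, and the function names here are all assumptions made for illustration.

```python
# Hypothetical word list and threshold; the article specifies neither.
NEGATIVE_WORDS = {"war", "death", "crisis", "attack", "fear", "loss"}
DESPONDENCY_THRESHOLD = 0.05  # assumed share of negative words


def negativity(articles):
    """Return the fraction of words across all articles that are negative."""
    words = [w.strip(".,;:!?\"'()").lower() for text in articles for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in NEGATIVE_WORDS)
    return hits / len(words)


def decide(articles):
    """Return 'refuse' if the news is too bleak, otherwise 'paint'."""
    return "refuse" if negativity(articles) > DESPONDENCY_THRESHOLD else "paint"
```

Fed a batch of gloomy headlines, `decide` returns `"refuse"`; fed cheerful ones, it returns `"paint"`. The real program presumably weighs emotion in a subtler way.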

Arguably, the images unveiled in June 2015 by Google’s Brain AI research team also display at least one aspect of human imagination: the ability to see one thing as something else. After some training in identifying objects from visual clues, and being fed photographs of skies and randomly shaped objects, the program began generating digital images suggesting the combined imaginations of Walt Disney and Pieter Bruegel the Elder, including hybrid creatures such as a “Pig-Snail,” a “Camel-Bird,” and a “Dog-Fish.”

Here is a digital equivalent of the mental phenomenon to which Mark Antony referred in Shakespeare’s Antony and Cleopatra: “Sometime we see a cloud that’s dragonish/A vapour sometime like a bear or lion.”

Leonardo da Vinci recommended gazing at stains on a wall or similar random marks as a stimulus to creative fantasy. There, an artist trying to “invent some scene” would find the swirling warriors of a battle or a landscape with “mountains, rivers, rocks, trees, great plains, valleys and hills.” This capacity might have been one of the triggers for prehistoric cave art. Quite often a painting or rock engraving seems to use a natural feature—a pebble in the wall that looks like an eye, for example. Perhaps the Cro-Magnon artist first discerned a lion or a bison in random marks, then made that resemblance clearer with paint or incised line.

Come to that, all representational pictures—not only paintings and drawings but also photographs—depend on a capacity to see one thing, shapes on a flat surface, as something else: something in the three-dimensional world. The artificial-intelligence systems developed by the Google team are good at that. The images were created using an artificial neural net, software that emulates the way layers of neurons in the brain process information. The software is trained, through analyzing millions of examples, to recognize objects in photos: a dumbbell, a dog, or a dragon.

The Google researchers discovered they could turn such systems into artists by doing something like what Leonardo suggested. The neural net is provided with an image made up of a blizzard of blotches and spots, and is asked to tweak the image to bring out any faint resemblance it detects in the noise to objects that the software has been trained to recognize. A sea of noise can become a tangle of ants or starfish. The technique can also be applied to photos, populating blue skies with ghostly dogs or reworking images in stylized strokes.
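
The idea of nudging an image toward whatever a detector faintly sees in it can be shown in miniature. This is only a sketch under loud assumptions: Google's system uses a deep neural network, whereas here a single made-up linear `template` stands in for a trained feature detector, and the "image" is a 4x4 grid of random values.

```python
import random


def response(image, template):
    """Detector score: how strongly the image matches the template."""
    return sum(p * t for p, t in zip(image, template))


def amplify(image, template, step=0.1, iterations=20):
    """Gradient ascent on the pixels to raise the detector score.

    For a linear detector, the gradient of the score with respect to each
    pixel is simply the matching template weight. Pixels are clipped to
    the range [0, 1] after every step.
    """
    pixels = list(image)
    for _ in range(iterations):
        pixels = [min(1.0, max(0.0, p + step * t)) for p, t in zip(pixels, template)]
    return pixels


rng = random.Random(0)
noise = [rng.random() for _ in range(16)]  # a 4x4 "blizzard of blotches"
template = [1, -1, -1, 1,
            -1, 1, 1, -1,
            -1, 1, 1, -1,
            1, -1, -1, 1]  # hypothetical pattern the detector responds to
dreamed = amplify(noise, template)
```

After the loop, `response(dreamed, template)` is higher than `response(noise, template)`: the noise has been pushed toward the pattern the detector was looking for. In a real network the gradient comes from backpropagation through many layers rather than from a fixed template.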

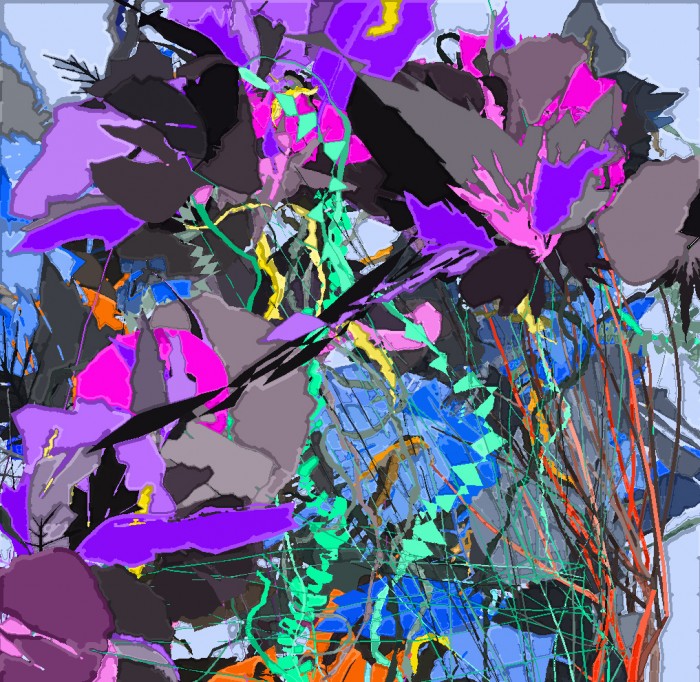

The software was just as adept as Mark Antony at discerning animals in clouds. The Google team dubbed the resulting artistic idiom “Inceptionism,” because the research project into neural-network architecture was code-named “Inception”—a reference to a 2010 movie of the same name about a man who penetrates deeper and deeper layers of other people’s dreams. Art-historically, you might classify Inceptionism as a variant of Surrealism. René Magritte, Salvador Dalí, and Max Ernst produced numerous works of a similar type—painting a sky of musical instruments or baguettes, for instance, instead of cumulonimbus.

How good, really, is Inceptionism? Some of the pictures are striking and can be perceived in various ways—including an emphatic linear mode vaguely reminiscent of the style of Van Gogh. In some cases, they are disturbing, suggesting the kind of hallucinations described by those suffering from bad trips or the DTs: a sky filled with cycling dogs, for example, or swirling architecture covered in peering eyes.

But Inceptionist works, so far, have been too kitschy and too evidently photo-based—to my taste, anyway—to give much competition to Dalí or Magritte. Nor have the Painting Fool or most similar programs yet progressed beyond a high school or amateur-art-club level of achievement. What about the potential of computer art? Can artificial intelligence add to the visual arts (or, for that matter, to music and other idioms at which computers are also already adept)?

Things Reviewed

The Painting Fool

AARON

Google’s Inceptionist photographs

Simon Colton is conscious of the criticism—a standard one aimed at computer art—that the works of the Painting Fool are actually creations of his own. We wouldn’t, he has pointed out, give the credit for a human painter’s work to that artist’s teacher. To which the answer is, that might depend on how far the pupil was following the teacher’s instructions. Generally, the credit for a painting from a Renaissance workshop goes to the master, not to the apprentices who may have done much of the work. But in the case of Verrocchio’s Baptism of Christ (c. 1475), we recognize the achievement of the workshop member Leonardo da Vinci, because the parts he painted—an angel and some landscape—are visibly different from the master’s work. Art historians therefore classify the picture as a joint effort.

In 17th-century Antwerp, similarly, Rubens had a small factory of highly trained assistants who to a greater or lesser extent painted most of his large-scale works. The normal procedure was that the master produced a small sketch that was then blown up, under his supervision, to the size of a ceiling or an altarpiece. Some scholars believe, however, that on occasion the studio turned out a “Rubens” when the great man never even provided an initial model.

Here, the example of AARON is intriguing. Are the pictures the evolving program has made over the last four decades really works by Harold Cohen, or independent creations by AARON itself, or perhaps collaborations between the two? It is a delicate problem. AARON has never moved far out of the general stylistic idiom in which Cohen himself worked in the 1960s, when he was a successful exponent of color field abstraction. Clearly, AARON is his pupil in that respect.

One aspect of Cohen’s earlier work was crucial to his taking an interest in artificial intelligence. He felt that “making art didn’t have to require ongoing, minute-by-minute decision making ... that it should be possible to devise a set of rules and then, almost without thinking, make the painting by following the rule.”

This approach is characteristic of a certain type of artist. The classic abstractions of Piet Mondrian from the 1920s and 1930s are a case in point. These were made according to a set of self-imposed regulations: only straight lines were allowed, which could meet only at right angles and could be depicted only in a palette of red, blue, and yellow (plus black and white).
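
Rules of this kind are simple enough to write down as a procedure. The sketch below is an illustration only, not Mondrian's or anyone else's actual method: the function name, the canvas dimensions, and the use of random placement are assumptions.

```python
import random

PALETTE = ["red", "blue", "yellow", "black", "white"]  # the palette named in the text


def compose(width, height, n_vertical, n_horizontal, seed=None):
    """Split a canvas with straight lines and color each cell from the palette.

    Lines run only vertically or horizontally, so they meet at right angles.
    Returns a list of (x0, y0, x1, y1, color) rectangles.
    """
    rng = random.Random(seed)
    xs = [0] + sorted(rng.sample(range(1, width), n_vertical)) + [width]
    ys = [0] + sorted(rng.sample(range(1, height), n_horizontal)) + [height]
    cells = []
    for y0, y1 in zip(ys, ys[1:]):
        for x0, x1 in zip(xs, xs[1:]):
            cells.append((x0, y0, x1, y1, rng.choice(PALETTE)))
    return cells
```

Calling `compose(100, 80, 3, 2)` yields twelve rectangles that obey every stated rule, yet nothing in the code addresses balance or the weighting of color.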

In a rare example of an art-historical experiment, the late art critic Tom Lubbock attempted to paint some Mondrians himself by following this recipe. He duly produced several abstractions that looked quite like Mondrian’s works, just not so good. The conclusion appeared to be that Mondrian was adding extra qualities—perhaps subtleties of visual balance and weighting of color—that weren’t formulated in the rules.

It is unusual for art critics to try anything as practical as Lubbock’s research. But lots of other people do the same kind of thing: they are called forgers, copyists, and pupils. A great deal of art consists, and always has consisted, of imitations of other work: pictures done in the manner of Mondrian, Monet, or some other great originator. Art historians spend their lives classifying artists into “circle of Botticelli,” “follower of Caravaggio,” etc. Already, it is clear that machines can work on this level: they can produce derivative art (which is all that 99.9 percent of human artists do). But can they do more than that?

Understandably, Cohen has thought a great deal about this. In a lecture from 2010, he posed it the other way around. Wasn’t it obvious that AARON is creative? After all, he went on, “with no further input from me, it can generate unlimited numbers of images, it’s a much better colorist than I ever was myself, and it typically does it all while I’m tucked up in bed.” What, in fact, he asked, was his own contribution? “Well, of course, I wrote the program. It isn’t quite right to say that the program simply follows the rules I gave it. The program is the rules.”

In a way, then, AARON is functioning like a Renaissance or Baroque studio. Under Cohen’s direction, it has developed to the point where it is equivalent to Rubens’s studio in autonomous mode—and perhaps more. In the early years, AARON was confined to drawing outlines; Cohen then selected and sometimes added color by hand. In the ’80s, Cohen began to teach it to work in color. Eventually, he developed a series of rules to enable it to compose coloristic harmonies, but he found this unsatisfactory. His first solution consisted of a long list of instructions based on what a human artist would do in certain situations. But this did not always work, partly because inevitably the list was open-ended.

Eventually, he found a way to teach AARON to use colors with a simple algorithm. We have limited ability to imagine differing chromatic arrangements, but our feedback system is terrific. A human artist can look at a picture as it evolves and decide exactly, say, what shade of yellow to add to a picture of sunflowers. AARON does not have a visual system at all, but Cohen devised a formula by which it can balance such factors as hue and saturation in any given image.

Can a machine ever be as creative as a Rembrandt or Picasso? To do that, Cohen argues, a robot would have to develop a sense of self. That may or may not happen, and “if it doesn’t, it means that machines will never be creative in the same sense that humans are creative.” The processes of such an artist involve an interplay between social, emotional, historical, psychological, and physiological factors that are dauntingly difficult to analyze, let alone replicate. This is what can give an image made by such an artist a deep level of meaning to a human eye.

One day, Cohen suggests, a machine might develop an equivalent sensibility, but even if that never comes to pass, “it doesn’t mean that machines have no part to play with respect to creativity.” As his own experience shows, artificial intelligence offers the artist something beyond an assistant or pupil: a new creative collaborator.

A new, expanded version of A Bigger Message, Martin Gayford’s book of conversations with David Hockney, will be published in May. His last story for MIT Technology Review was “Motion Pictures” (September/October 2015).
