Now You Can Use Emojis to Search for Cute Cat Videos

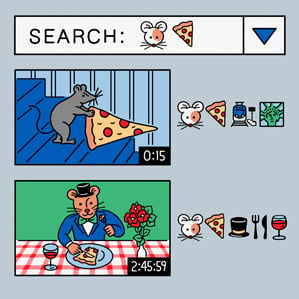

In a move that makes it possible to seamlessly combine your love of emojis with adorable animal videos, a group of researchers has built a prototype of a search engine that uses the tiny icons to search videos.

Called Emoji2Video, the search engine was made by researchers at the University of Amsterdam and Qualcomm Research to show how emojis can give a dense, easy-to-understand representation of what’s happening in images and videos, one that you can comprehend no matter what language you speak or whether you can read.

Visitors to the site can click on one or more emojis from a curated list of 385. The current full list maintained by the Unicode Consortium contains nearly 1,300 emojis, but the researchers excluded ones they didn’t think would be relevant to video searches, such as symbols and numbers, as well as ones that were somewhat duplicative. The pictograms you choose are used to search a data set of 45,000 YouTube videos for relevant matches.
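The article doesn’t say how the engine ranks matches for a multi-emoji query. As a purely illustrative sketch, assume each video has already been given a confidence score for each emoji; a query could then score every video by summing its confidences for the chosen emojis. The `search` function and the index layout below are hypothetical, not Emoji2Video’s actual method.

```python
def search(query, index, top_n=3):
    """Hypothetical ranking: score each video by its summed confidence
    for the emojis in the query, then return the best-scoring video IDs.

    query: iterable of emoji names the user clicked.
    index: dict mapping video_id -> dict of emoji name -> confidence in [0, 1].
    """
    wanted = set(query)
    scored = []
    for video_id, emoji_conf in index.items():
        # Emojis the video was never labeled with contribute 0.
        score = sum(emoji_conf.get(e, 0.0) for e in wanted)
        if score > 0:
            scored.append((score, video_id))
    # Highest score first; break ties by video ID so results are stable.
    scored.sort(key=lambda sv: (-sv[0], sv[1]))
    return [video_id for _, video_id in scored[:top_n]]


if __name__ == "__main__":
    toy_index = {
        "v1": {"cat": 0.9},
        "v2": {"cake": 0.8, "angry": 0.5},
        "v3": {"camel": 0.3},
    }
    print(search(["angry", "cake", "camel"], toy_index))
```

Under a scheme like this, a video that matches only some of the chosen emojis still gets a positive score, so the engine always returns its best partial matches rather than nothing.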

“It’s a way to drill down very quickly to the very specific thing you’re looking for,” says Spencer Cappallo, a graduate student at the University of Amsterdam and coauthor of the paper.

This week, the researchers are presenting Emoji2Video, along with a paper explaining the technology behind it, at a technology conference in Brisbane, Australia.

Emojis were created in Japan in the 1990s, and have gotten increasingly popular in recent years as smartphone makers have added them to virtual keyboards, making it easy to punctuate a text message with a tiny pink heart or an itty-bitty martini.

The icons are used so frequently that some online services already let users search via emojis: Microsoft’s Bing search engine will accept emoji queries, while Yelp will let you search for businesses by typing in, say, a chicken emoji rather than the word “chicken.” Cappallo says the researchers wanted to focus on video in particular because they hadn’t seen much work done on how to relate emojis to pictures and videos, and vice versa.

The researchers used deep-learning techniques to assign videos emoji labels that represent what’s in them (a baseball or a dog, for instance) and to estimate how likely it is that those things appear in a given frame. Cappallo says about one out of every 50 frames was analyzed; the emojis chosen for those frames are then averaged to produce a single short emoji list for the video, ordered by decreasing confidence.
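The summarizing step can be sketched roughly as follows, assuming the deep-learning model has already produced, for every frame, a mapping from emoji to confidence. Only the one-in-50 sampling and the averaging into a ranked list come from the description above; the function name, the dictionary layout, and the choice to treat an emoji missing from a frame as zero confidence are assumptions for illustration.

```python
from collections import defaultdict

FRAME_STRIDE = 50  # "about one out of every 50 frames", per the article


def summarize_video(frame_scores, top_k=5, stride=FRAME_STRIDE):
    """Hypothetical sketch: average per-frame emoji confidences over
    sampled frames and return a short list ranked by confidence.

    frame_scores: list of dicts, one per frame, mapping emoji name -> confidence.
    Returns a list of (emoji, mean_confidence), highest confidence first.
    """
    sampled = frame_scores[::stride]  # frames 0, stride, 2*stride, ...
    if not sampled:
        return []
    totals = defaultdict(float)
    for scores in sampled:
        for emoji, conf in scores.items():
            totals[emoji] += conf
    # Dividing by the number of sampled frames treats an emoji that is
    # absent from a frame as having confidence 0 in that frame.
    means = {emoji: total / len(sampled) for emoji, total in totals.items()}
    ranked = sorted(means.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    frames = [{"cat": 0.8, "grass": 0.4}, {"cat": 0.6}] * 50  # 100 frames
    print(summarize_video(frames))
```

Averaging confidences rather than counting how often an emoji is the single top label keeps weaker but persistent signals (say, grass visible throughout a cat video) in the summary list.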

I tried giving Emoji2Video a few queries of increasing difficulty, just to see where it would take me. First, I gave it a simple test: just the cat emoji. The results included a video of a cat licking grass in someone’s backyard, another advertising a cat toy, and so on. In another search, I used the bikini and bicycle emojis and got a video of two women in tiny two-pieces washing a Harley. In a third search, I tried three emojis at once: an angry face, a birthday cake, and a camel. The top result covered two of the three, as it depicted someone showing off an impressive “Angry Birds”-themed birthday cake.

Clearly, the search engine is still in its infancy, and it’s only searching a tiny fraction of the videos available on YouTube, but the researchers say it wouldn’t be difficult to add more, and they plan to do so. Cappallo says that at this point top results are sometimes not representative of the emoji you’re searching with because the data set simply doesn’t contain a video with that specific thing.

Beyond video, Cappallo says, the work could be useful for creating small, icon-driven summaries of photo albums, or for getting information from small displays, such as smart watches, where it can be tricky to type but might not be so hard to tap on a tiny image or two.

Tyler Schnoebelen, a linguist and cofounder of Idibon, a startup that processes unstructured language data (including emoticons and emojis), says the project could be useful for finding videos made by those who don’t speak the same language as you. But for him, the point of the project seems to be that it’s simply fun.

He also notes that the meanings behind an emoji can vary from one culture to another, which could make it harder to find direct translations (the eggplant emoji is a standout example, as it is often considered to represent a phallus, rather than a versatile fruit).

“The meaning of an emoji is more than just the noun that it visualizes,” he says.
