Computational Linguistics Reveals How Wikipedia Articles Are Biased Against Women

One of Wikipedia’s more embarrassing features is that its workforce is dominated by men. Back in 2011, the New York Times reported that only 13 percent of Wikipedia’s editors were women compared to just under half its readers.

As a consequence, the Wikimedia Foundation, which runs the website, set itself various goals to change this gender bias, including the target of increasing its proportion of female editors to 25 percent by 2015. Whether that will be achieved is not yet clear.

In the meantime, various researchers have kept a close eye on the way the gender bias among editors may be filtering through to the articles in the encyclopedia itself. Today, Claudia Wagner at the Leibniz Institute for the Social Sciences in Cologne, Germany, and pals at ETH Zurich, Switzerland and the University of Koblenz-Landau, say they have found evidence of serious bias in Wikipedia entries about women, suggesting that gender bias may be more deep-seated and engrained than previously imagined.

Wagner and co begin by comparing six different language versions of Wikipedia with three databases about notable men and women. These databases include Freebase, a database of 120,000 notable individuals, and Pantheon, a database of historical cultural popularity compiled by a team at MIT.

Wagner and co are interested in the proportion of men and women in these databases that are also covered by Wikipedia. And the results make for good reading at Wikipedia.

Wagner and co say that Wikipedia comes out well by this measure and that, if anything, women are overrepresented in all the language editions they studied. “We find that women on Wikipedia are covered well in all six Wikipedia language editions,” they say.
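The coverage measure described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the team's code: the function name `coverage_by_gender`, the data layout, and the placeholder people are all assumptions made for the example.

```python
from collections import Counter

def coverage_by_gender(reference_people, wikipedia_titles):
    """Share of people of each gender in a reference database
    who also have a Wikipedia article.

    reference_people: iterable of (name, gender) pairs (hypothetical layout).
    wikipedia_titles: set of article titles in one language edition.
    """
    totals = Counter()
    covered = Counter()
    for name, gender in reference_people:
        totals[gender] += 1
        if name in wikipedia_titles:
            covered[gender] += 1
    return {gender: covered[gender] / totals[gender] for gender in totals}

# Toy data with placeholder names; not taken from any real database.
reference_people = [
    ("Person A", "female"),
    ("Person B", "female"),
    ("Person C", "male"),
    ("Person D", "male"),
    ("Person E", "male"),
]
wikipedia_titles = {"Person A", "Person B", "Person C", "Person D"}

coverage = coverage_by_gender(reference_people, wikipedia_titles)
```

If the share for women is at least as high as the share for men, the edition covers notable women at least as well as notable men by this measure.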

What’s more, the team also looked at the proportion of articles about men and women that appear on the start page of the English Wikipedia and say that this does not favor one sex over the other. “The selection procedure of featured articles of the Wikipedia community does not suffer from gender bias,” they conclude.

By these measures Wikipedia is doing well. “These are encouraging findings suggesting that the Wikipedia editor community is sensible to gender inequalities and participates in affirmative action practices that are showing some signs of success,” report Wagner and co.

But there are other signs of a more insidious gender bias that will be much harder to change. “We also find that the way women are portrayed on Wikipedia starkly differs from the way men are portrayed,” they say.

This conclusion is the result of first studying the network of connections between articles on Wikipedia. It turns out that articles about women are much more likely to link to articles about men than vice versa, a finding that holds true for all six language versions of Wikipedia that the team studied.

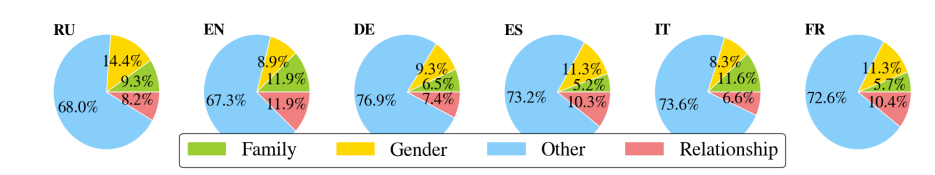
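One simple way to look for this kind of asymmetry is to count, for each gender, what fraction of outgoing links between biographies point to a person of the other gender. The Python sketch below does that on toy data. The function name `cross_gender_link_share` and the input format are assumptions for illustration, and the team's actual analysis may well differ, for example by correcting for the fact that there are many more articles about men to link to.

```python
def cross_gender_link_share(gender_of, links):
    """For each gender, the fraction of outgoing biography-to-biography
    links that point to an article about a person of a different gender.

    gender_of: dict mapping article title -> gender label (hypothetical layout).
    links: iterable of (source_title, target_title) directed links.
    """
    totals = {}
    cross = {}
    for source, target in links:
        # Ignore links that involve articles we have no gender label for.
        if source not in gender_of or target not in gender_of:
            continue
        source_gender = gender_of[source]
        totals[source_gender] = totals.get(source_gender, 0) + 1
        if gender_of[target] != source_gender:
            cross[source_gender] = cross.get(source_gender, 0) + 1
    return {g: cross.get(g, 0) / totals[g] for g in totals}

# Toy link graph with placeholder articles; not real Wikipedia data.
gender_of = {"A": "female", "B": "female", "C": "male", "D": "male"}
links = [
    ("A", "C"), ("A", "D"), ("B", "C"), ("B", "A"),  # links from women's articles
    ("C", "D"), ("D", "C"), ("C", "A"), ("D", "C"),  # links from men's articles
]

shares = cross_gender_link_share(gender_of, links)
```

In this toy graph, three of the four links from articles about women point to men, while only one of the four links from articles about men points to a woman.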

More serious is the difference in the way these articles refer to men and women, as revealed by computational linguistics. Wagner and co studied this by counting the number of words in each biographical article that emphasize the sex of the person involved.

Wagner and co say that articles about women tend to emphasize the fact that they are about women by overusing words like “woman,” “female,” or “lady” while articles about men tend not to contain words like “man,” “masculine,” or “gentleman.” Words like “married,” “divorced,” “children,” or “family” are also much more frequently used in articles about women, they say.
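A rough version of this word counting is easy to sketch in Python. The word lists below are just the examples quoted in this article, not the team's full vocabulary, and the function name `count_marked_terms` and the simple lowercase tokenizer are assumptions made for the example.

```python
import re
from collections import Counter

# Example terms taken from the article text; the study's real lists are not given here.
TERM_GROUPS = {
    "female": {"woman", "female", "lady"},
    "male": {"man", "masculine", "gentleman"},
    "family": {"married", "divorced", "children", "family"},
}

def count_marked_terms(text):
    """Count how often each group of terms appears in one article.

    Returns (counts_per_group, total_tokens) so callers can compute rates.
    """
    # Crude tokenizer: lowercase the text and keep runs of ASCII letters.
    tokens = re.findall(r"[a-z]+", text.lower())
    token_counts = Counter(tokens)
    group_counts = {
        group: sum(token_counts[term] for term in terms)
        for group, terms in TERM_GROUPS.items()
    }
    return group_counts, len(tokens)

# Made-up sentence for illustration, not a quote from Wikipedia.
sample = "She was the first woman to lead the lab and the first female professor."
group_counts, total_tokens = count_marked_terms(sample)
```

Comparing these counts, normalized by article length, between biographies of women and biographies of men gives a first impression of whether one group's articles mention gender or family more often.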

The team thinks this kind of bias is evidence for the practice among Wikipedia editors of considering maleness as the “null gender.” In other words, there is a tendency to assume an article is about a man unless otherwise stated. “This seems to be a plausible assumption due to the imbalance between articles about men and women,” they say.

That’s an interesting study, one that suggests the Wikimedia Foundation’s efforts to tackle gender bias are bearing fruit. But it also reveals how deep-seated gender bias can be and how hard it will be to root out.

The first step, of course, is to identify and characterize the problem at hand. Wagner and co’s work is an important step in that direction that will allow editors at Wikipedia to continue their vigilance.

Ref: arxiv.org/abs/1501.06307 : It’s a Man’s Wikipedia? Assessing Gender Inequality in an Online Encyclopedia
