A Speech Synthesizer Direct to the Brain

Could a person who is paralyzed and unable to speak, like physicist Stephen Hawking, use a brain implant to carry on a conversation?

That’s the goal of an expanding research effort at U.S. universities, which over the last five years has shown that recording devices placed under the skull can capture brain activity associated with speaking.

While results are preliminary, Edward Chang, a neurosurgeon at the University of California, San Francisco, says he is working toward building a wireless brain-machine interface that could translate brain signals directly into audible speech using a voice synthesizer.

The effort to create a speech prosthetic builds on the success of experiments in which paralyzed volunteers have used brain implants to manipulate robotic limbs with their thoughts (see “The Thought Experiment”). That technology works because scientists can roughly interpret the firing of neurons in the brain’s motor cortex and map it to arm or leg movements.

Chang’s team is now trying to do the same for speech. It’s a trickier task, in part because complex language is unique to humans and the technology can’t easily be tested in animals.

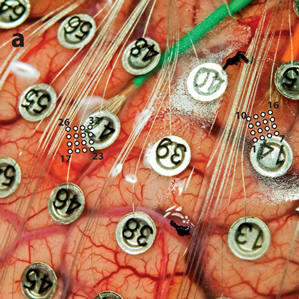

At UCSF, Chang has been carrying out speech experiments in connection with brain surgeries he performs on patients with epilepsy. A sheet of electrodes placed under the patients’ skulls records electrical activity from the surface of the brain. Patients wear the device, known as an electrocorticography array, for several days so that doctors can locate the exact source of seizures.

Chang has taken advantage of the opportunity to study brain activity as these patients speak or listen to speech. In a paper in Nature last year, he and his colleagues described how they used the electrode array to map patterns of electrical activity in an area of the brain called the ventral sensorimotor cortex as subjects pronounced sounds like “bah,” “dee,” and “goo.”

“There are several brain regions involved in vocalization, but we believe [this one] is important for the learned, voluntary control of speech,” Chang says.

The idea is to record the electrical activity in the motor cortex that causes speech-related movements of the lips, tongue, and vocal cords. By mathematically analyzing these patterns, Chang says, his team showed that “many key phonetic features” can be detected.
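The article doesn’t describe the analysis Chang’s team used. As a purely hypothetical illustration of the general idea (matching a pattern of activity across several electrodes to a speech sound), the sketch below uses a nearest-centroid classifier: it averages the training patterns recorded for each syllable, then labels a new pattern with whichever average it sits closest to. The electrode values, the syllable labels, and the function names `fit_centroids` and `decode` are all invented for this example; real recordings are far noisier and real decoders more elaborate.

```python
import math
from collections import defaultdict

# Hypothetical sketch: a nearest-centroid decoder, NOT the method used by
# Chang's team. Each "trial" is a list of activity values, one per electrode.

def fit_centroids(trials, labels):
    """Average the electrode activity pattern recorded for each label."""
    sums = {}
    counts = defaultdict(int)
    for pattern, label in zip(trials, labels):
        if label not in sums:
            sums[label] = [0.0] * len(pattern)
        sums[label] = [s + v for s, v in zip(sums[label], pattern)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }

def decode(pattern, centroids):
    """Return the label whose average pattern is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(pattern, centroids[label]))

# Made-up activity on three electrodes while a subject says each syllable.
train = [
    [1.0, 0.1, 0.0], [0.9, 0.2, 0.1],  # "bah"
    [0.1, 1.0, 0.2], [0.2, 0.9, 0.0],  # "dee"
    [0.0, 0.1, 1.0], [0.1, 0.2, 0.9],  # "goo"
]
labels = ["bah", "bah", "dee", "dee", "goo", "goo"]

centroids = fit_centroids(train, labels)
print(decode([0.8, 0.3, 0.1], centroids))  # prints bah
```

Nearest-centroid is used here only because it is short enough to read at a glance; the point it illustrates is that decoding amounts to comparing a new burst of brain activity against patterns learned from earlier recordings.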

One of the most terrifying consequences of diseases like amyotrophic lateral sclerosis (ALS) is that as paralysis spreads, people lose not only the ability to move but also the faculty of speech. Some ALS patients communicate with devices that exploit whatever residual movement they retain. Hawking, for instance, uses software that lets him spell out words, very slowly, by twitching his cheek. Other patients use eye trackers to control a computer cursor.

The idea of using a brain-machine interface to achieve near-conversational speech has been proposed before, most notably by Neural Signals, a company that since the 1980s has been testing technology that uses a single electrode to record directly from inside the brains of people with “locked-in” syndrome. In 2009, the company described efforts to decode speech from a 25-year-old paralyzed man who cannot move or speak at all (see “A Prosthesis for Speech”).

Another study, published this year by Marc Slutzky at Northwestern University, attempted to decode signals from the motor cortex as patients read aloud words containing all 39 English phonemes (the consonant and vowel sounds that make up speech). The team identified phonemes with an average accuracy of 36 percent. The study used the same type of surface electrodes as Chang’s.
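To put the 36 percent figure in perspective, compare it with a decoder that simply guessed one of the 39 phonemes at random. Assuming every phoneme is equally likely (a simplification: real phoneme frequencies are uneven, and the study’s own chance baseline isn’t given here), the arithmetic is:

$$
p_{\text{chance}} = \frac{1}{39} \approx 0.026 = 2.6\%, \qquad \frac{0.36}{p_{\text{chance}}} = 0.36 \times 39 \approx 14
$$

By that rough measure, the decoder did about 14 times better than random guessing.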

Slutzky says that while that accuracy may seem low, it was achieved with a relatively small sample of words spoken in a limited amount of time. “We expect to achieve much better decoding in the future,” he says. Scientists also say speech recognition software might help guess which words people are attempting to say.


MIT Technology Review
