Readying Robots for the Real World

In a faded yellow warehouse on Albany Street, the Atlas robot is powering on. This hulking humanoid machine, standing six feet two inches tall and weighing 330 pounds, fires up with a powerful, high-pitched hum from a pump that pressurizes fluid for its hydraulic joints. Suspended just above the ground by a tether, it begins to move its feet and arms, part of a routine to calibrate the actuators in its 28 joints.

“This is kind of like the morning stretch,” says Scott Kuindersma, a postdoc in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

As the robot is lowered to the floor, Pat Marion, a collaborating researcher who will begin working on his PhD in electrical engineering and computer science (EECS) this fall, sits in front of a set of computer monitors stationed several yards away and looks at the world through the robot’s eyes—or rather, cameras and lidars attached to its head and chest. One screen splices together a low-resolution fish-eye view of the room with a narrow, detailed image from a stereo camera. On another screen is a black-and-white rendering of the room created by a laser sensor. With a couple of clicks, Marion gives the robot a walking goal, indicating a spot on the screen ahead of its virtual image; the system responds with a suggested set of virtual footsteps on the screen. The robot slowly moves its feet, shifting its weight from side to side with each step as its hydraulic pump whines. After it reaches an overturned metal rack, Marion commands it to pick up a wooden board that is leaning against the rack. With a clawlike hand attachment, the robot carefully grips the board, lifts it up, and swings its arm to the right, letting the wood clatter to the ground. One task accomplished for the day.

The robot has been programmed by a team of students, postdocs, and faculty members led by EECS professor Seth Teller and Russ Tedrake, PhD ’04, an associate professor with dual appointments in EECS and aeronautics and astronautics. The team represents MIT in the U.S. Defense Advanced Research Projects Agency (DARPA) Robotics Challenge, a multi-year tournament designed to accelerate the development of robots that could aid humans in real-world disaster relief efforts and emergency scenarios—robots that could have gone into the Fukushima nuclear power plant after its triple meltdown, for instance, so that human workers wouldn’t be exposed to harmful radiation. The competition offered two tracks to the teams from academia, industry, and government: some built their own robots, while other teams, including MIT’s, focused on developing software to control the Atlas robot, designed and built by the MIT spinoff company Boston Dynamics. (Based in Waltham, Massachusetts, Boston Dynamics was cofounded by Marc Raibert, PhD ’77, a former EECS professor and member of MIT’s AI Lab. Google bought the company for an undisclosed amount last year.)

The DARPA Robotics Challenge (DRC) has three stages. In the first, the software teams used their programs to guide a simulated Atlas through several tasks in a Virtual Robotics Challenge held in June 2013. At the DRC Trials in December 2013, teams gathered at a racetrack in Florida to test the abilities of real robots—and to earn the right to compete for a $2 million prize in the third stage of the competition, the DRC Finals in 2015.

An Irresistible Challenge

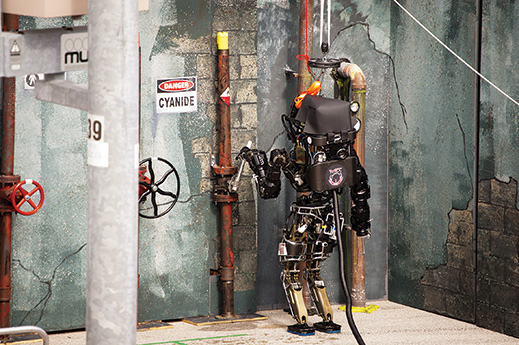

At first glance, the robots’ tasks for the trials seem surprisingly simple: open doors, climb a ladder, manipulate a hose, and operate valves, among others. A human first responder could do any of these things in seconds, and the powerful Atlas was certainly physically capable of accomplishing them. But it’s a huge challenge to design and program a robot that can navigate and manipulate objects in a disaster site, where conditions are messy and communication spotty. Though factory robots routinely perform complex tasks, they’re programmed to do one thing well in an environment explicitly designed for it.

“The real world is an unforgiving place,” says Teller. It’s impossible to anticipate every condition robots will encounter, so they need to become much more responsive and adaptable. “It’s not that you engineer the world to help the robot,” he says. “You engineer the robot to go out into the world to do the work that needs to be done.”

Teller and Tedrake decided to form a DRC team early in 2012, as chatter about the competition spread through the robotics community. Their skills are complementary. Teller, who heads the Robotics, Vision, and Sensor Networks group in CSAIL, focuses on helping machines sense their environments and interact with people; Tedrake, who leads CSAIL’s Robot Locomotion group, focuses on control of movements, particularly walking.

There was no shortage of researchers—and students—eager to help. “The DRC effort has all the excitement of a typical research project, along with the additional intensity of hard deadlines and high-visibility international competition on concrete tasks,” Teller says. With strict dates for competitions, there’s no time to reach perfection and little leeway if something doesn’t work properly. Teller and Tedrake recruited research scientist Maurice Fallon to lead the work on the robot’s perception and Kuindersma to be in charge of planning and control. Teller also tapped Matthew Antone ’95, MEng ’96, PhD ’01, who had worked with him on MIT’s 2006–’07 DARPA Urban Challenge team developing self-driving vehicles, while postdoc Sisir Karumanchi provided early support in developing software for manipulating objects. They and others from around MIT scrambled to respond to DARPA with a formal proposal by the end of May. (Marion, an accomplished software engineer, would join the team later to lead the development of the operator-robot interface.) In all, the team includes 12 students and 12 faculty, postdocs, and staff from CSAIL; the EECS, Mechanical Engineering, and Aero-Astro departments; and the Center for Ocean Engineering.

By the time the DRC officially began in October 2012 with the arrival of $375,000 in initial DARPA funding and a kickoff meeting at the agency, the MIT team had already spent six months raising funds, doing preliminary design and implementation studies, and investigating suitable lab spaces around campus.

The goal of the competition isn’t getting robots to think and act for themselves. But Teller says that from the beginning, the MIT team’s strategy focused on shifting many of the low-level decisions to the robot. Even though DARPA would provide explicit information about the setup of each task, he says, the team chose neither to preprogram the robot for each one nor to have a human “baby-sit” each movement.

Instead, they wanted a back-and-forth between human and robot. The operator would assess sensory information from the robot and decide what to do. The robot’s software would then develop a motion plan for accomplishing the task, which the human could approve or adjust. Every decision would be made on the fly.
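The exchange described above, in which the operator sets a goal, the robot’s software proposes a motion plan, and the human approves or adjusts it, can be sketched as a simple review loop. This is a minimal illustration only, assuming a planner, a review step, and an executor exposed as plain functions; every name here (`Plan`, `propose_plan`, `operator_review`, `execute`, `max_revisions`) is hypothetical and does not describe the MIT team’s actual software.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Plan:
    # Hypothetical plan representation: a goal plus an ordered list of motions.
    goal: str
    steps: list


def supervised_step(
    goal: str,
    propose_plan: Callable[[str], Plan],             # robot software: goal -> proposed plan
    operator_review: Callable[[Plan], Optional[Plan]],  # human: approve (same plan), adjust (new plan), or reject (None)
    execute: Callable[[Plan], bool],                 # robot carries out an approved plan
    max_revisions: int = 3,
) -> bool:
    """One round of the human-robot back-and-forth (illustrative sketch)."""
    plan = propose_plan(goal)
    for _ in range(max_revisions):
        reviewed = operator_review(plan)
        if reviewed is None:
            return False              # operator rejected the plan outright
        if reviewed == plan:
            return execute(plan)      # operator approved the plan unchanged
        plan = reviewed               # operator adjusted it; show the new plan for review
    return False                      # no agreement within the revision budget
```

The design choice the sketch highlights is that the human never specifies individual joint motions: the operator only accepts, edits, or rejects whole plans, which is how the low-level decisions shift to the robot.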

Because the real world is unpredictable, “you can’t simply have a canned plan and expect it to succeed,” Teller says. He and his teammates were confident that their flexible system would ultimately be most efficient, reducing the human brainpower required for each step. But they had a lot of work to do.

Stage One: Is Your Software Atlas-Worthy?

In the weeks leading up to the Virtual Robotics Challenge (VRC), team members camped out in the CSAIL lab in the Stata Center round the clock, subsisting on a rotating selection of Pop-Tarts, fruit, and takeout as they tried to anticipate problems that might come up in the simulation and develop workarounds. On June 18, 19, and 20, more than two dozen teams took part in VRC simulations in their own labs. At CSAIL, the operators for each task sequestered themselves in a side office and focused intently on a rendering of the virtual robot as they put their software through its paces; other team members watched their progress on a video link outside.

The team’s preparation paid off. For the most part, having the software plan the robot’s movements worked. But for good measure, a few movements were scripted into the software to help the virtual robot get out of tough situations. Graduate student Andrés Valenzuela, SM ’11, figured out how to get it to crawl, for instance, which turned out to be useful; it fell during the competition and crawled to the finish line just as time expired.

When DARPA announced the results of the virtual trials, MIT had placed third out of 26 teams. The researchers had cleared the first major hurdle, winning an additional $750,000 in funding and a berth at the December DRC Trials. Now they just had to translate their virtual success to a 330-pound machine.

Getting to Know the Robot

With the software trials behind them, members of the MIT team planned their summer vacations around the much-anticipated August unveiling of their hard-won Atlas. A team from Boston Dynamics arrived at the Albany Street warehouse (space borrowed from the Department of Earth, Atmospheric, and Planetary Sciences, since the machinery is too noisy for the CSAIL labs as well as too messy: it would drip hydraulic fluid on the carpets). They gently unwrapped the robot, which the MIT team had named Helios, and hoisted it from a large wooden shipping crate.

For all the team members, this was a marvelous chance to see the algorithms they had developed embodied in one of the most advanced humanoid robots ever created. In fact, that opportunity was a major draw for Tedrake himself. “I wanted to play with this robot,” he says. With a laboratory focused on designing control systems rather than building expensive hardware, he adds, he’d “never build something as beautiful as this robot.”

But time was short: there were just four months to prepare for the DRC Trials in Florida, which would take place in late December. The team would need to place in the top eight in those trials to receive $1 million in funding from DARPA to continue the work and compete for the $2 million prize in the DRC Finals.

Much of the software the researchers had developed for the simulator transferred seamlessly to the robot, though its low-level motor control systems required tweaking. But while they could get their simulated robot to crawl out of a bad situation, the delicate and expensive Atlas needed security tethers to keep it from falling. Occasionally, the robot would shoot pressurized hydraulic fluid through the air, so team members wore safety goggles and stood behind plexiglass. And it was incredibly loud: Tedrake bought noise-canceling headphones for people who needed a break.

One team member was designated as the primary operator of the robot for each task it would need to perform in Florida, while another was on hand to help the robot perceive its environment. Given the visual information from the robot’s cameras and sensors, this perception wingman could help it identify an object of interest, such as a drill or a door handle. The robot could then draw on preprogrammed information about drills and door handles, including how to grasp and manipulate them.

By the time the trials were a couple of weeks away, the software was in place, and the team focused on practicing. With many students juggling finals, the members gathered in the stuffy warehouse at seven o’clock each morning, timing themselves as they ran the robot through each task and hoping that what they accomplished in the lab would transfer to an outdoor setting. After a brief foray onto the Albany Street sidewalk on a below-freezing morning—the robot’s first operation outdoors—they packed Helios and their equipment carefully into a truck and sent it to Florida.

Stage Two: Robots at the Racetrack

The crowd gathered at the Homestead-Miami Speedway on December 20 and 21 was decidedly geekier than the NASCAR fans who usually filled the seats. But anticipation was just as high as spectators waited to see the world’s best roboticists and their machines duke it out. Each of the 16 teams (some funded by DARPA and some self-funded) had a control station set up in the pit lanes, where members remotely controlled their robot as it competed for points in eight different tasks: driving a vehicle, walking over bumpy terrain, climbing a ladder, clearing debris, opening a series of doors, cutting a shape in a wall with a cordless drill, turning a valve, and manipulating a hose. In each task, a team could earn one point for each of three subtasks, plus a bonus point for completing all three without intervention, for a maximum of 32 points overall. To simulate a disaster scenario, communication between the teams and their robots was periodically slowed down to a low-bandwidth signal.
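The scoring arithmetic can be made concrete with a short sketch. It encodes only the rule as summarized here (one point per subtask, three subtasks per task, a bonus point for all three without intervention, eight tasks); the official DARPA rules had more detail, and the function and constant names are illustrative assumptions.

```python
def task_score(subtasks_done: int, interventions: int) -> int:
    """Points for one task under the simplified rule described in the article:
    1 point per completed subtask (3 subtasks), plus 1 bonus point if all
    three were completed with no intervention."""
    if not 0 <= subtasks_done <= 3:
        raise ValueError("each task has exactly three subtasks")
    bonus = 1 if subtasks_done == 3 and interventions == 0 else 0
    return subtasks_done + bonus


NUM_TASKS = 8                          # eight tasks at the DRC Trials
MAX_PER_TASK = task_score(3, 0)        # 3 subtasks + 1 bonus = 4
MAX_TOTAL = NUM_TASKS * MAX_PER_TASK   # 8 x 4 = 32
```

Under this rule the ceiling is 8 × 4 = 32 points, which matches the total possible score reported for the trials; a task finished only after an intervention tops out at 3 points instead of 4.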

Even though the teams had received explicit descriptions of the tasks ahead of time so they could practice, the trials showed just how difficult things become when conditions aren’t perfectly controlled. The outdoor setting introduced new variables: bright sunlight, strong breezes, and temperatures surprisingly warm for December, which caused laptops to overheat.

Team Schaft, representing a University of Tokyo spinoff company that Google had also acquired just before the event, was the clear winner: the robot it designed sailed through tasks with smooth movements. For the most part, however, the trials were an important reality check for anyone used to seeing humanoid robots charging across movie screens. Though the event took place on a racetrack built for speed, it looked more like a competition in extremely slow tai chi. The machines stepped gingerly over debris, struggled to open doors, and climbed ladders with excruciating deliberateness.

Some things simply didn’t go as planned. In their first trial, Fallon and Marion were guiding Helios through a task that involved removing 10 pieces of wood to clear a path to a doorway (they’d get one point for the first five pieces and one for the second five). Fallon had just gotten the robot to clear the fifth board away when the wood bounced and dropped back in front of it. Time was too short to complete the task as planned, but he was able to take the robot off the planned course to get the stray wood out of the way and capture a point. “As small and insignificant as that sounds, it was a big achievement for us,” he says. Later, Helios fell while attempting to walk through three doorways and while ascending a ladder. But the team won the full set of points for the drill and valve tasks.

For the teams competing, demonstrating even some of these skills was gratifying. Gill Pratt ’83, SM ’87, PhD ’90, program manager in DARPA’s Defense Sciences Office, says that despite the difficulties the teams faced, the trials exceeded his expectations. “We expected maybe the best team would get about half the points,” he says. Team Schaft swept the competition with 27 out of 32 possible points. A team from the Florida Institute for Human and Machine Cognition (IHMC) won second place with an Atlas robot, and Carnegie Mellon University came in third with a robot called CHIMP (for “CMU highly intelligent mobile platform”). MIT came in fourth, winning 16 points. Some of the teams with self-designed robots, including one designed by NASA’s Johnson Space Center, failed to complete any of the tasks in time.

Though some of the press coverage of the Florida trials focused on how “smart” the robots were, in reality every move was being choreographed by human operators. “Just because the body looks similar to a human being or an animal does not mean the brain of the robot is anywhere near as good,” says Pratt. Though the robots are increasingly able to plan and execute the details of their motions on their own, humans are still doing the major thinking.

But different teams had different ways of managing the communication between human and machine. “MIT had a particularly well-designed human-machine interface,” Pratt says. In particular, the team had created software that allowed operators to quickly help the robot perceive important objects in its environment. When an interface is well designed, he says, the operator can accomplish more with less work. Whereas many other teams used joystick-like controls to manipulate the robot, Teller says, the MIT team took “a more computational, less manual approach.” The idea is to give the robot increasingly high-level commands and eventually develop machines that rely less and less on a highly skilled operator to pull the strings.

Though the trials were intense, Teller says they were surprisingly fun; it was the first time teams that had been working for months on their own projects had a chance to meet and put their work on display. Though they were competitors, they found themselves helping one another and lending parts back and forth. And even though the MIT robot was bested by some of the others, its strong finish won the team another $1 million to continue its work toward the DRC Finals, which will probably take place next spring.

Stage Three: Gearing Up for a Final Showdown

Helios is now back in its home on Albany Street, and the MIT team finally has an extended period of time to improve its system. For the upcoming finals, the team is pushing the robot’s autonomy even further. The communication links between humans and robots may be slowed more dramatically and cut entirely from time to time, so the researchers are trying to give their machine task-level autonomy, meaning it could manage to pick up a hose or open a door on its own. In one recent demonstration, Marion got the Atlas to approach a table, pick up four objects from it, and drop them in a bucket, all in response to a simple “go” command.

Pratt says the finals will be more difficult in other ways, too. Security tethers that kept robots upright if they lost their balance are likely to be gone; the robots will have to pick themselves up if they fall. Instead of being wired into a power source, the machines will have to carry their own power reserves. And they will have to navigate a more continuous set of tasks. This will require the MIT team to make Helios even more fluid in its movements and better able to perceive its environment.

Tedrake says that with time to develop more sophisticated algorithms, all the teams will be able to demonstrate movements in the finals that are faster and less halting—a little less like tai chi. “What has to happen to make the robots more dynamic, more graceful, is that they have to understand their own physics better and be able to reason about it faster,” he says. Teller, Tedrake, and team have already begun publishing papers about their approach, and they have made some of their source code available publicly.

Even with software that allows robots to move faster and accomplish more, there will still be times when the machines will stumble, miss a target, or have to scramble to the finish line. People “have this image of robots as perfection,” Teller says, but the image of those assembly-line machines operating in rigidly controlled conditions is “not the way these things are going to be when they’re deployed out in the real world.” Still, that’s part of the fun of designing robots that can manage the messiness of life. “We’re not out for perfection here,” he says. “We’re out for capability. Can people and machines, working together, get the job done?”


MIT Technology Review