Three things to know about the White House’s executive order on AI

Experts say its emphasis on content labeling, watermarking, and transparency represents an important step forward.

MIT Technology Review Explains: Let our writers untangle the complex, messy world of technology to help you understand what's coming next.

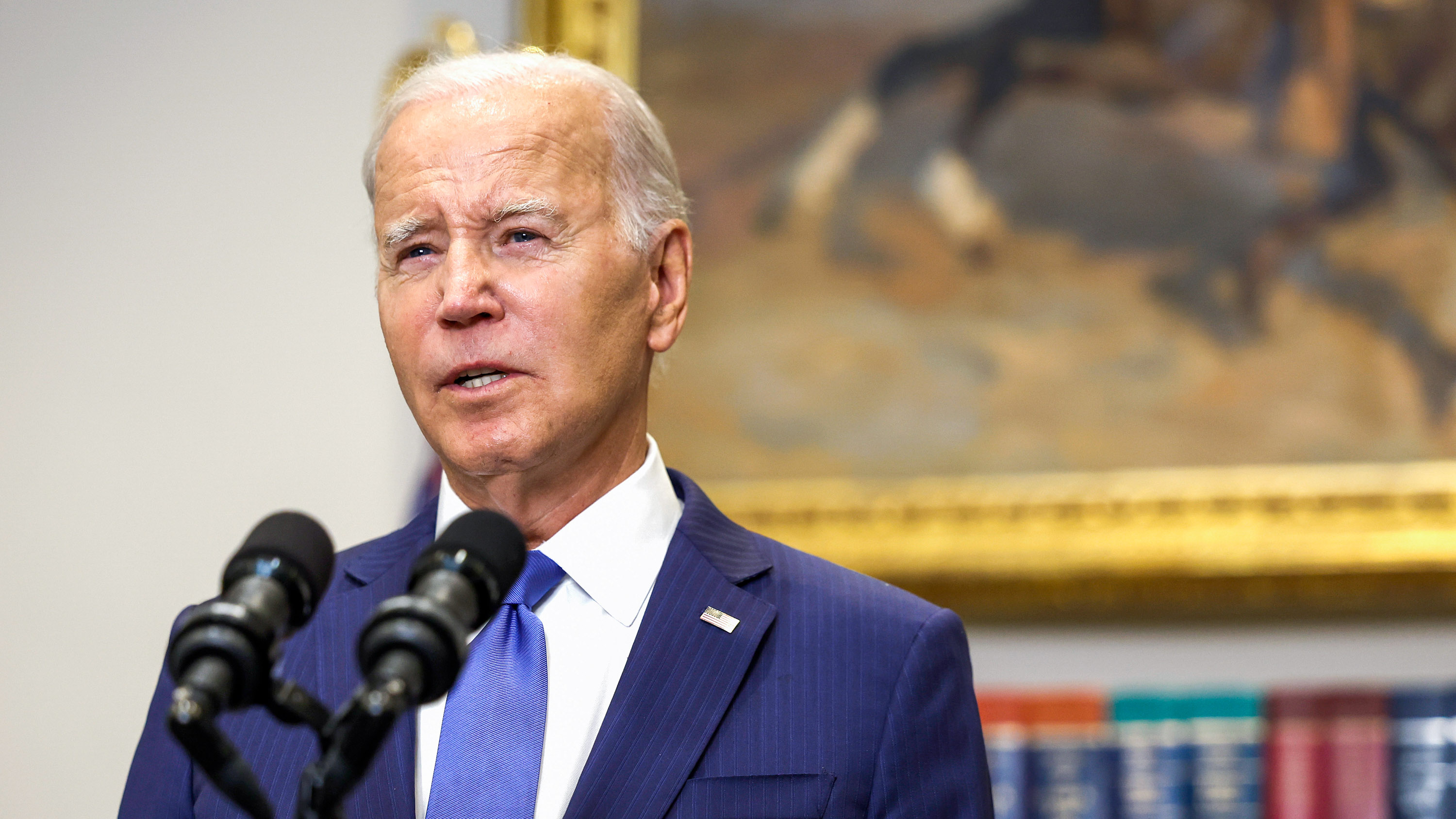

The US has set out its most sweeping set of AI rules and guidelines yet in an executive order issued by President Joe Biden today. The order will require more transparency from AI companies about how their models work and will establish a raft of new standards, most notably for labeling AI-generated content.

The goal of the order, according to the White House, is to improve “AI safety and security.” It also includes a requirement that developers share safety test results for new AI models with the US government if the tests show that the technology could pose a risk to national security. This is a surprising move that invokes the Defense Production Act, typically used during times of national emergency.

The executive order builds on the voluntary commitments on AI policy that the White House set back in August, though it lacks specifics on how the rules will be enforced. Executive orders are also vulnerable to being overturned at any time by a future president, and they lack the legitimacy of congressional legislation on AI, which looks unlikely in the short term.

“The Congress is deeply polarized and even dysfunctional to the extent that it is very unlikely to produce any meaningful AI legislation in the near future,” says Anu Bradford, a law professor at Columbia University who specializes in digital regulation.

Nevertheless, AI experts have hailed the order as an important step forward, especially thanks to its focus on watermarking and standards set by the National Institute of Standards and Technology (NIST). However, others argue that it does not go far enough to protect people against immediate harms inflicted by AI.

Here are the three most important things you need to know about the executive order and the impact it could have.

What are the new rules around labeling AI-generated content?

The White House’s executive order requires the Department of Commerce to develop guidance for labeling AI-generated content. AI companies will use this guidance to develop labeling and watermarking tools that the White House hopes federal agencies will adopt. “Federal agencies will use these tools to make it easy for Americans to know that the communications they receive from their government are authentic—and set an example for the private sector and governments around the world,” according to a fact sheet that the White House shared over the weekend.

The hope is that labeling the origins of text, audio, and visual content will make it easier for us to know what’s been created using AI online. These sorts of tools are widely proposed as a solution to AI-enabled problems such as deepfakes and disinformation, and in a voluntary pledge with the White House announced in August, leading AI companies such as Google and OpenAI committed to developing such technologies.

The trouble is that technologies such as watermarks are still very much works in progress. There currently are no fully reliable ways to label text or investigate whether a piece of content was machine generated. AI detection tools are still easy to fool.

The executive order also falls short of requiring industry players or government agencies to use these technologies.

On a call with reporters on Sunday, a White House spokesperson responded to a question from MIT Technology Review about whether any requirements are anticipated for the future, saying, “I can imagine, honestly, a version of a call like this in some number of years from now and there'll be a cryptographic signature attached to it that you know you’re actually speaking to [the White House press team] and not an AI version.” This executive order intends to “facilitate technological development that needs to take place before we can get to that point.”

The White House says it plans to push forward the development and use of these technologies with the Coalition for Content Provenance and Authenticity, called the C2PA initiative. As we’ve previously reported, the initiative and its affiliated open-source community have been growing rapidly in recent months as companies rush to label AI-generated content. The collective includes some major companies like Adobe, Intel, and Microsoft and has devised a new internet protocol that uses cryptographic techniques to encode information about the origins of a piece of content.
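To make the idea of cryptographically encoding a piece of content's origins more concrete, here is a minimal sketch in Python. It is not the C2PA protocol: real provenance systems rely on certificate-based public-key signatures, whereas this example substitutes a shared-secret HMAC from the standard library so that it runs without extra dependencies. The key, the function names, and the manifest fields are all hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for illustration only. Real provenance systems
# such as C2PA use certificate-based public-key signatures, not a shared key.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a provenance record bound to the content by its SHA-256 hash."""
    claim = {
        "generator": generator,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the signature is valid and the content is unchanged."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered or signed with another key
    actual_hash = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(actual_hash, manifest["claim"]["content_sha256"])


image_bytes = b"example image bytes"
manifest = make_manifest(image_bytes, generator="hypothetical-image-model")
print(verify_manifest(image_bytes, manifest))              # True
print(verify_manifest(image_bytes + b"edited", manifest))  # False
```

The point of the sketch is that the label travels with a hash of the content, so editing either the content or the label makes verification fail. It also shows the limit of the approach: a label like this can prove where signed content came from, but it says nothing about content that was never signed.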

The coalition does not have a formal relationship with the White House, and it’s unclear what that collaboration would look like. In response to questions, Mounir Ibrahim, the cochair of the governmental affairs team, said, “C2PA has been in regular contact with various offices at the NSC [National Security Council] and White House for some time.”

The emphasis on developing watermarking is good, says Emily Bender, a professor of linguistics at the University of Washington. She says she also hopes content labeling systems can be developed for text; current watermarking technologies work best on images and audio. “[The executive order] of course wouldn’t be a requirement to watermark, but even an existence proof of reasonable systems for doing so would be an important step,” Bender says.

Will this executive order have teeth? Is it enforceable?

While Biden’s executive order goes beyond previous US government attempts to regulate AI, it places far more emphasis on establishing best practices and standards than on how, or even whether, the new directives will be enforced.

The order calls on the National Institute of Standards and Technology to set standards for extensive “red team” testing (tests meant to break the models in order to expose vulnerabilities) before models are launched. NIST has already been somewhat effective at documenting how accurate or biased AI systems such as facial recognition are. In 2019, a NIST study of over 200 facial recognition systems revealed widespread racial bias in the technology.

However, the executive order does not require that AI companies adhere to NIST standards or testing methods. “Many aspects of the EO still rely on voluntary cooperation by tech companies,” says Bradford, the law professor at Columbia.

The executive order requires all companies developing new AI models whose computational size exceeds a certain threshold to notify the federal government when training the system and then share the results of safety tests in accordance with the Defense Production Act. This law has traditionally been used to intervene in commercial production at times of war or national emergencies such as the covid-19 pandemic, so this is an unusual way to push through regulations. A White House spokesperson says this mandate will be enforceable and will apply to all future commercial AI models in the US, but will likely not apply to AI models that have already been launched. The threshold is set at a point where all major AI models that could pose risks “to national security, national economic security, or national public health and safety” are likely to fall under the order, according to the White House’s fact sheet.

The executive order also calls for federal agencies to develop rules and guidelines for different applications, such as supporting workers’ rights, protecting consumers, ensuring fair competition, and administering government services. These more specific guidelines prioritize privacy and bias protections.

“Throughout, at least, there is the empowering of other agencies, who may be able to address these issues seriously,” says Margaret Mitchell, researcher and chief ethics scientist at AI startup Hugging Face. “Albeit with a much harder and more exhausting battle for some of the people most negatively affected by AI, in order to actually have their rights taken seriously.”

What has the reaction to the order been so far?

Major tech companies have largely welcomed the executive order.

Brad Smith, the vice chair and president of Microsoft, hailed it as “another critical step forward in the governance of AI technology.” Google’s president of global affairs, Kent Walker, said the company looks “forward to engaging constructively with government agencies to maximize AI’s potential—including by making government services better, faster, and more secure.”

“It’s great to see the White House investing in AI’s growth by creating a framework for responsible AI practices,” said Adobe’s general counsel and chief trust officer, Dana Rao.

The White House’s approach remains friendly to Silicon Valley, emphasizing innovation and competition rather than limitation and restriction. The strategy is in line with the policy priorities for AI regulation set forth by Senate Majority Leader Chuck Schumer, and it further crystallizes the lighter touch of the American approach to AI regulation.

However, some AI researchers say that sort of approach is cause for concern. “The biggest concern to me in this is it ignores a lot of work on how to train and develop models to minimize foreseeable harms,” says Mitchell.

Instead of preventing AI harms before deployment—for example, by making tech companies’ data practices better—the White House is using a “whack-a-mole” approach, tackling problems that have already emerged, she adds.

The highly anticipated executive order on artificial intelligence comes two days before the UK’s AI Safety Summit and attempts to position the US as a global leader on AI policy.

It will likely have implications outside the US, adds Bradford. It will set the tone for the UK summit and will likely embolden the European Union to finalize its AI Act, as the executive order sends a clear message that the US agrees with many of the EU’s policy goals.

“The executive order is probably the best we can expect from the US government at this time,” says Bradford.

Correction: A previous version of this story had Emily Bender's title wrong. This has now been corrected. We apologize for any inconvenience.
