The race to find a better way to label AI

An internet protocol called C2PA uses cryptography to label images, video, and audio

This article is from The Technocrat, MIT Technology Review's weekly tech policy newsletter about power, politics, and Silicon Valley. To receive it in your inbox every Friday, sign up here.

I recently wrote a short story about a project backed by some major tech and media companies trying to help identify content made or altered by AI.

With the boom of AI-generated text, images, and videos, both lawmakers and average internet users have been calling for more transparency. Simply adding a label might seem like a very reasonable ask (and it is), but it is not an easy one, and the existing solutions, like AI-powered detection and watermarking, have some serious pitfalls.

As my colleague Melissa Heikkilä has written, most of the current technical solutions “don’t stand a chance against the latest generation of AI language models.” Nevertheless, the race to label and detect AI-generated content is on.

That’s where this protocol comes in. Started in 2021, C2PA (named for the group that created it, the Coalition for Content Provenance and Authenticity) is a set of new technical standards and freely available code that securely labels content with information clarifying where it came from.

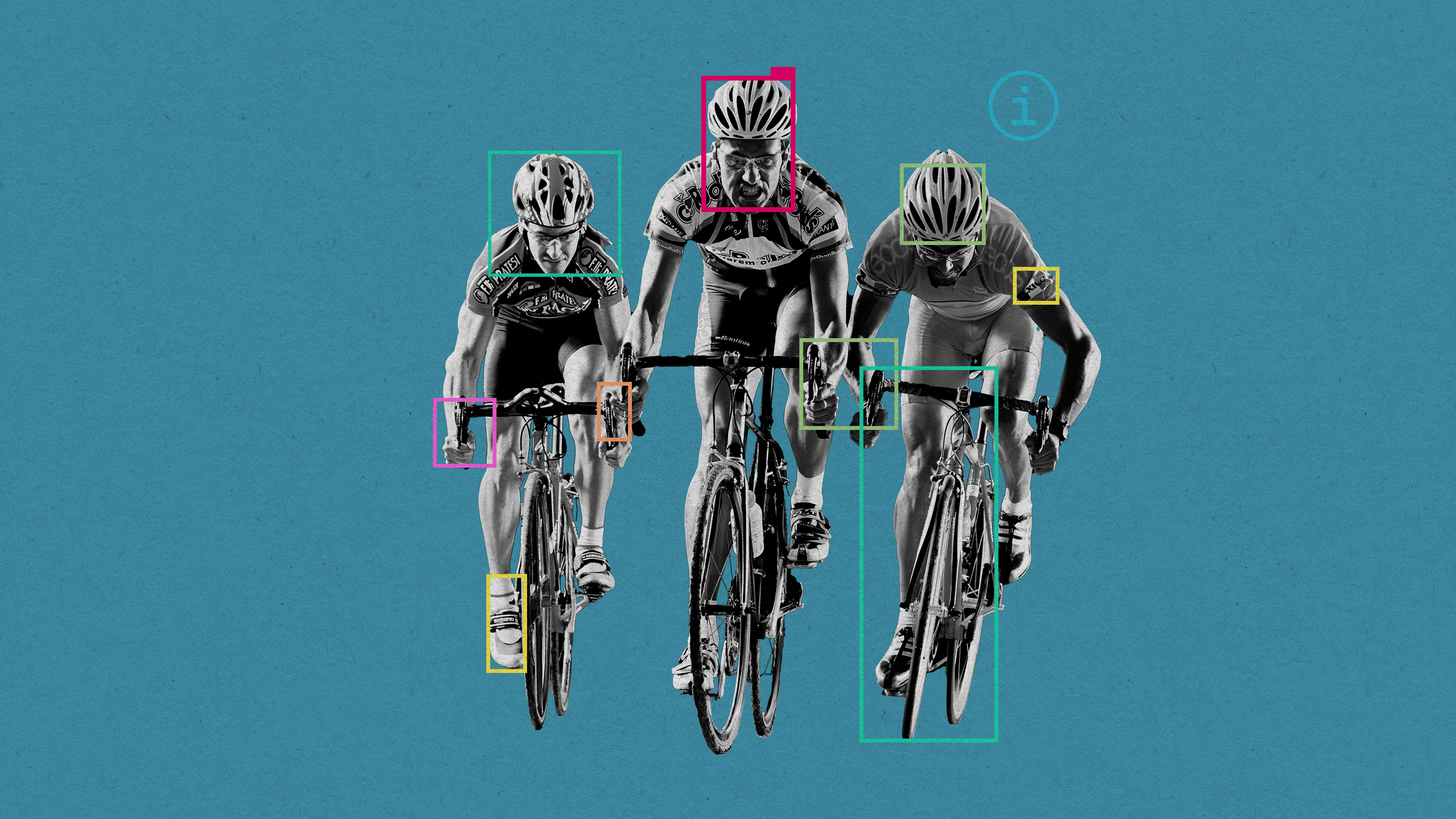

This means that an image, for example, is marked with information by the device it originated from (like a phone camera), by any editing tools (such as Photoshop), and ultimately by the social media platform that it gets uploaded to. Over time, this information creates a sort of history, all of which is logged.
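To make the idea of a logged, tamper-evident history concrete, here is a minimal sketch in Python. It is not the C2PA format or its reference code: the function names, the entry fields, and the shared `DEMO_KEY` are all hypothetical, and an HMAC stands in for the certificate-based digital signatures a real provenance system would use. It only illustrates the general principle that each step (camera, editor, platform) appends a signed record tied to the content and to the previous record.

```python
import hashlib
import hmac
import json

# Hypothetical shared key for illustration only; a real system would
# use per-signer private keys and certificates, not a shared secret.
DEMO_KEY = b"demo-signing-key"


def _digest(data: bytes) -> str:
    """SHA-256 fingerprint of the media bytes."""
    return hashlib.sha256(data).hexdigest()


def _sign(payload: dict) -> str:
    """Sign a record's fields (HMAC as a stand-in for a real signature)."""
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(DEMO_KEY, body, hashlib.sha256).hexdigest()


def add_entry(history: list, actor: str, action: str, content: bytes) -> list:
    """Return a new history with one more signed record appended."""
    payload = {
        "actor": actor,
        "action": action,
        "content_hash": _digest(content),
        # Link to the previous record so entries cannot be reordered or dropped.
        "prev": history[-1]["signature"] if history else "",
    }
    return history + [{**payload, "signature": _sign(payload)}]


def verify(history: list, content: bytes) -> bool:
    """Check every signature and link, and that the last record matches the content."""
    prev = ""
    for entry in history:
        payload = {k: v for k, v in entry.items() if k != "signature"}
        if payload.get("prev") != prev:
            return False
        if not hmac.compare_digest(entry["signature"], _sign(payload)):
            return False
        prev = entry["signature"]
    return bool(history) and history[-1]["content_hash"] == _digest(content)


# Example: a photo is captured, edited, then uploaded.
photo = b"raw pixels"
history = add_entry([], "phone camera", "captured", photo)
edited = photo + b" (cropped)"
history = add_entry(history, "photo editor", "cropped", edited)
history = add_entry(history, "social platform", "uploaded", edited)

# Someone rewrites the first record to claim a different origin.
forged = [{**history[0], "actor": "someone else"}] + history[1:]
```

In this sketch, `verify(history, edited)` succeeds, while changing the media bytes or rewriting any earlier record (as in `forged`) makes verification fail. That failure is what "tamper-evident" means here: alterations are not prevented, but they become detectable.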

The tech itself—and the ways in which C2PA is more secure than other AI-labeling alternatives—is pretty cool, though a bit complicated. I get more into it in my piece, but it’s perhaps easiest to think about it like a nutrition label (which is the preferred analogy of most people I spoke with). You can see an example of a deepfake video here with the label created by Truepic, a founding C2PA member, with Revel AI.

“The idea of provenance is marking the content in an interoperable and tamper-evident way so it can travel through the internet with that transparency, with that nutrition label,” says Mounir Ibrahim, the vice president of public affairs at Truepic.

When it first launched, C2PA was backed by a handful of prominent companies, including Adobe and Microsoft, but over the past six months, its membership has increased 56%. Just this week, the major media platform Shutterstock announced that it would use C2PA to label all of its AI-generated media.

It’s based on an opt-in approach, so groups that want to verify and disclose where content came from, like a newspaper or an advertiser, will choose to add the credentials to a piece of media.

One of the project’s leads, Andy Parsons, who works for Adobe, attributes the new interest in and urgency around C2PA to the proliferation of generative AI and the expectation of legislation, both in the US and the EU, that will mandate new levels of transparency.

The vision is grand—people involved admitted to me that real success here depends on widespread, if not universal, adoption. They said they hope all major content companies adopt the standard.

For that, Ibrahim says, usability is key: “You wanna make sure no matter where it goes on the internet, it’ll be read and ingested in the same way, much like SSL encryption. That’s how you scale a more transparent ecosystem online.”

This could be a critical development as we enter the US election season, when all eyes will be watching for AI-generated misinformation. Researchers on the project say they are racing to release new functionality and court more social media platforms before the expected onslaught.

Currently, C2PA works primarily on images and video, though members say that they are working on ways to handle text-based content. I get into some of the other shortcomings of the protocol in the piece, but what’s really important to understand is that even when the use of AI is disclosed, it might not stem the harm of machine-generated misinformation. Social media platforms will still need to decide whether to keep that information on their sites, and users will have to decide for themselves whether to trust and share the content.

It’s a bit reminiscent of initiatives by tech platforms over the past several years to label misinformation. Facebook labeled over 180 million posts as misinformation ahead of the 2020 election, and clearly there were still considerable issues. And though C2PA does not intend to assign indicators of accuracy to the posts, it’s clear that just providing more information about content can’t necessarily save us from ourselves.

What I am reading this week

- We published a handy roadmap that outlines how AI might impact domestic politics, and what milestones to watch for. It’s fascinating to think about AI submitting or contributing to a public testimony, for example.

- Vittoria Elliott wrote a very timely story about how watermarking, which is also meant to bring transparency to AI-generated content, is not sufficient to manage the threat of disinformation. She explains that experts say the White House needs to do more than just push voluntary agreements on AI.

- And here’s another story I thought was interesting on the race to develop better watermarking tech.

- Speaking of AI … our AI reporter Melissa also wrote about a new tool developed by MIT researchers that can help prevent photos from being manipulated by AI. It might help prevent problems like AI-generated porn that uses real photos of nonconsenting women.

- TikTok is dipping its toe further into e-commerce. New features on the app allow users to purchase products directly from influencers, leading some to complain about a feed that feels like a flood of sponsored content. It’s a mildly alarming development in the influencer economy and highlights the selling power of social media platforms.

What I learned this week

Researchers are still trying to sort out just how social media platforms, and their algorithms, affect our political beliefs and civic discourse. This week, four new studies about the impact of Facebook and Instagram on users’ politics during the 2020 election showed that the effects are quite complicated. The studies, published by researchers at the University of Texas, New York University, Princeton, and other institutions, found that while the news people read on the platforms showed a high degree of segregation by political views, removing reshared content from feeds on Facebook did not change political beliefs.

The size of the studies is making them sort of a big deal in the academic world this week, but the research is getting some scrutiny for its close collaboration with Meta.
