AI systems are deployed all the time, but it can take months or even years before it becomes clear whether, and how, they’re biased.

The stakes are often sky-high: unfair AI systems can cause innocent people to be arrested, and they can deny people housing, jobs, and basic services.

Today a group of AI and machine-learning experts are launching a new bias bounty competition, which they hope will speed the process of uncovering these kinds of embedded prejudice.

The competition, which takes inspiration from bug bounties in cybersecurity, calls on participants to create tools to identify and mitigate algorithmic biases in AI models.

It’s being organized by a group of volunteers who work at companies like Twitter, software company Splunk, and deepfake detection startup Reality Defender. They’ve dubbed themselves the “Bias Buccaneers.”

The first bias bounty competition is going to focus on biased image detection. It’s a common problem: in the past, for example, flawed image detection systems have misidentified Black people as gorillas.

Competitors will be challenged to build a machine-learning model that labels each image with the skin tone, perceived gender, and age group of the face it shows, which will make it easier to measure and spot biases in datasets. They will be given access to a dataset of around 15,000 images of synthetically generated human faces. Participants will be ranked on how accurately their model tags images and how long the code takes to run, among other metrics. The competition closes on November 30.
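To make the task concrete, here is a minimal sketch of how an entry of this kind might be evaluated. It is an illustration under stated assumptions, not the organizers' scoring code: the function names, the label values, and the idea of scoring each attribute separately are all hypothetical, and the competition's actual metrics are not spelled out beyond accuracy and run time.

```python
import time
from collections import Counter

# Hypothetical attribute names, chosen to mirror the three labels in the task.
ATTRIBUTES = ("skin_tone", "perceived_gender", "age_group")


def per_attribute_accuracy(predictions, ground_truth):
    """Return the fraction of correctly labeled images for each attribute."""
    if not ground_truth or len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must be non-empty and equal in length")
    scores = {}
    for attr in ATTRIBUTES:
        correct = sum(p[attr] == t[attr] for p, t in zip(predictions, ground_truth))
        scores[attr] = correct / len(ground_truth)
    return scores


def label_distribution(labels, attr):
    """Return the share of images carrying each value of one attribute.

    A heavily skewed distribution is one simple signal that a dataset
    under-represents some group.
    """
    counts = Counter(item[attr] for item in labels)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def timed_predict(model_fn, images):
    """Run a labeling function over all images and report elapsed seconds."""
    start = time.perf_counter()
    predictions = [model_fn(image) for image in images]
    return predictions, time.perf_counter() - start
```

Here `model_fn` stands in for whatever model a participant builds: any function that takes an image and returns a dictionary with the three labels. Once images carry labels like these, `label_distribution` shows why tagging helps auditors: it turns "is this dataset skewed?" into a simple count.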

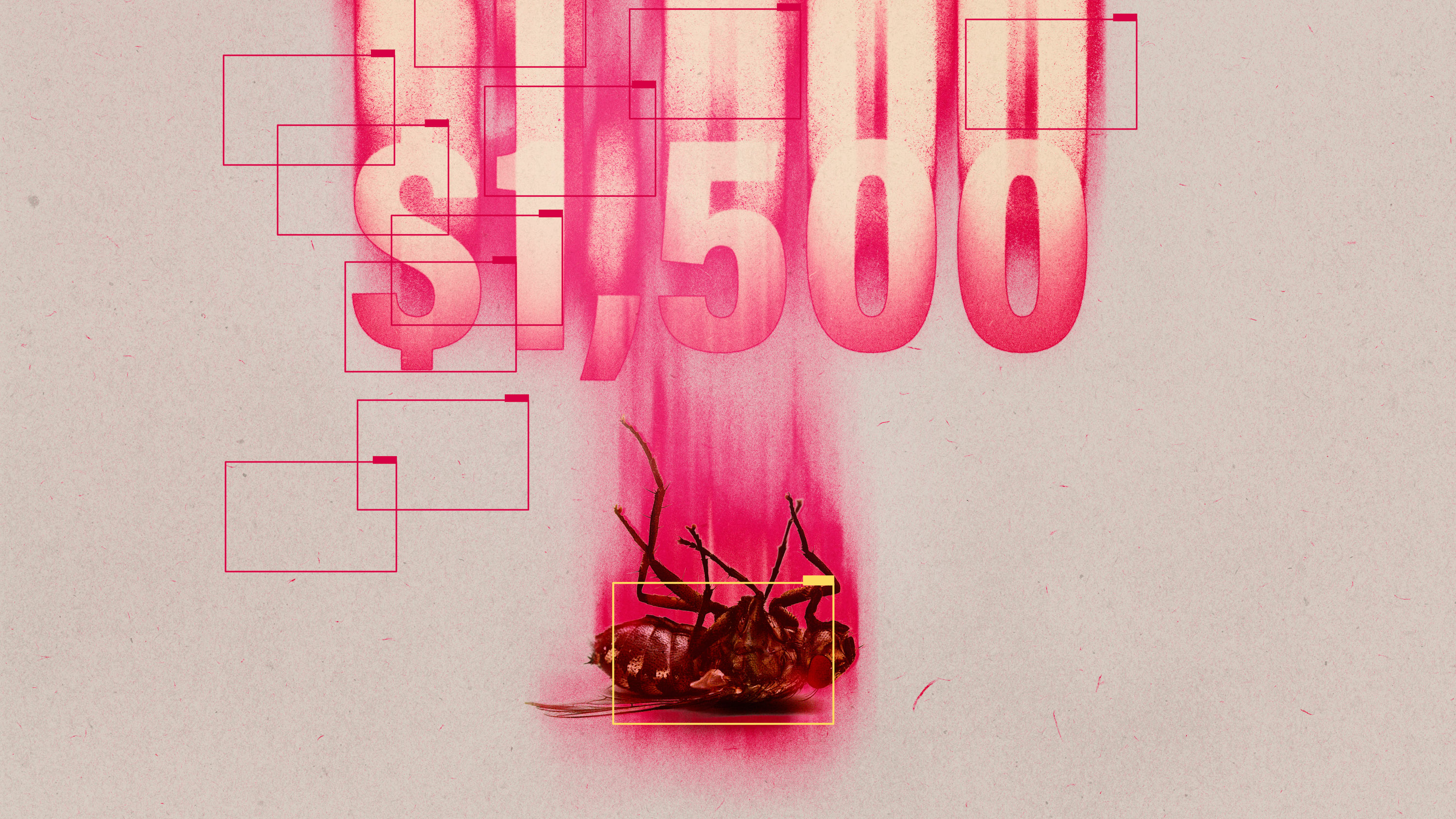

Microsoft and startup Robust Intelligence have committed prize money of $6,000 for the winner, $4,000 for the runner-up, and $2,000 for whoever comes third. Amazon has contributed $5,000 toward computing power for the first set of entrants.

The competition is an example of a budding industry that’s emerging in AI: auditing for algorithmic bias. Twitter launched the first AI bias bounty last year, and Stanford University just concluded its first AI audit challenge. Meanwhile, nonprofit Mozilla is creating tools for AI auditors.

These audits are likely to become more and more commonplace. They’ve been hailed by regulators and AI ethics experts as a good way to hold AI systems accountable, and they are going to become a legal requirement in certain jurisdictions.

The EU’s new content moderation law, the Digital Services Act, includes annual audit requirements for the data and algorithms used by large tech platforms, and the EU’s upcoming AI Act could also allow authorities to audit AI systems. The US National Institute of Standards and Technology also recommends AI audits as a gold standard. The idea is that these audits will act like the sorts of inspections we see in other high-risk sectors, such as chemical plants, says Alex Engler, who studies AI governance at the think tank the Brookings Institution.

The trouble is, there aren’t enough independent contractors out there to meet the coming demand for algorithmic audits, and companies are reluctant to give them access to their systems, argue researcher Deborah Raji, who specializes in AI accountability, and her coauthors in a paper from last June.

A larger pool of independent auditors is what these competitions want to cultivate. The hope in the AI community is that they’ll lead more engineers, researchers, and experts to develop the skills and experience to carry out these audits.

Much of the limited scrutiny in the world of AI so far comes either from academics or from tech companies themselves. The aim of competitions like this one is to create a new sector of experts who specialize in auditing AI.

“We are trying to create a third space for people who are interested in this kind of work, who want to get started or who are experts who don’t work at tech companies,” says Rumman Chowdhury, who directs Twitter’s team on ethics, transparency, and accountability in machine learning and leads the Bias Buccaneers. These people could include hackers and data scientists who want to learn a new skill, she says.

The team behind the Bias Buccaneers’ bounty competition hopes it will be the first of many.

Competitions like this not only create incentives for the machine-learning community to do audits but also advance a shared understanding of “how best to audit and what types of audits we should be investing in,” says Sara Hooker, who leads Cohere for AI, a nonprofit AI research lab.

The effort is “fantastic and absolutely much needed,” says Abhishek Gupta, the founder of the Montreal AI Ethics Institute, who was a judge in Stanford’s AI audit challenge.

“The more eyes that you have on a system, the more likely it is that we find places where there are flaws,” Gupta says.