Tomorrow’s computer, yesterday

Four decades ago at Endicott House, an MIT professor convened a conference that launched quantum computing.

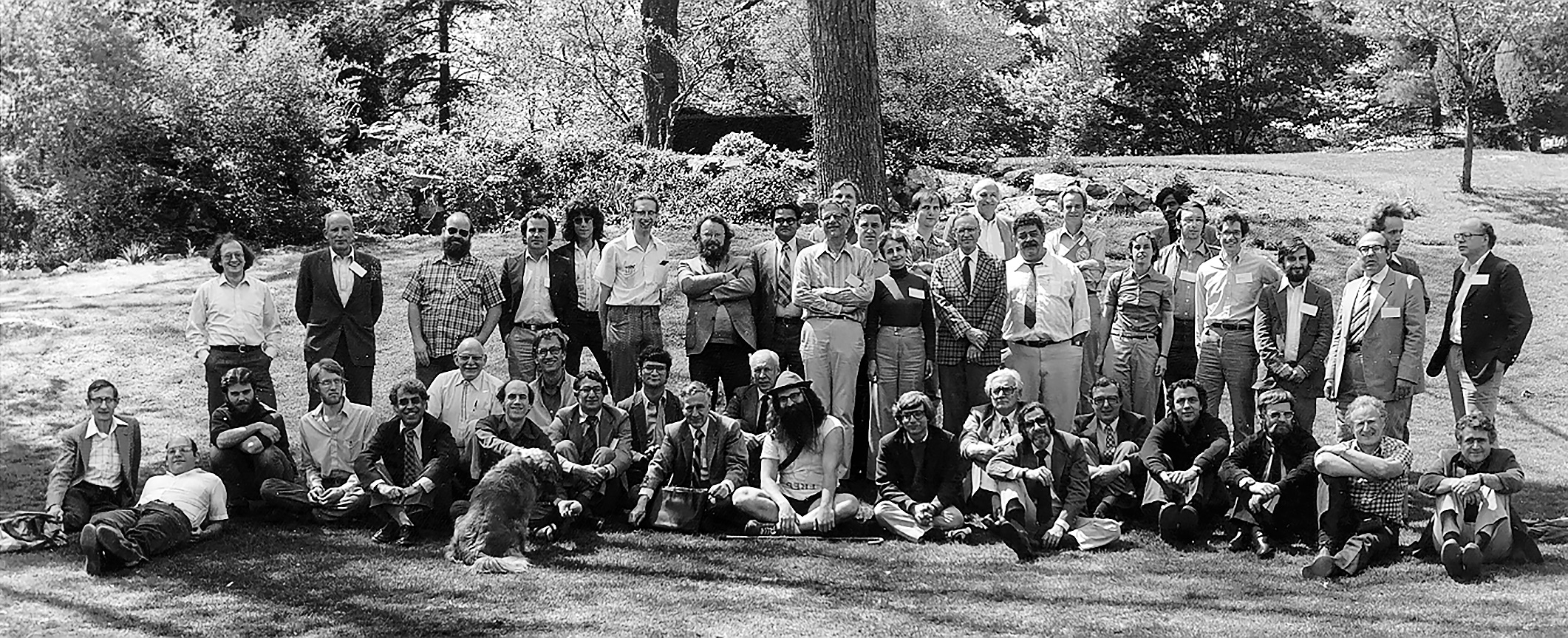

Quantum computing as we know it got its start 40 years ago this spring at the first Physics of Computation Conference, organized at MIT’s Endicott House by MIT and IBM and attended by nearly 50 researchers from computing and physics—two groups that rarely rubbed shoulders.

Twenty years earlier, in 1961, an IBM researcher named Rolf Landauer had found a fundamental link between the two fields: he proved that every time a computer erases a bit of information, a tiny bit of heat is produced, corresponding to the entropy increase in the system. In 1972 Landauer hired the theoretical computer scientist Charlie Bennett, who showed that the increase in entropy can be avoided by a computer that performs its computations in a reversible manner. Curiously, Ed Fredkin, the MIT professor who cosponsored the Endicott Conference with Landauer, had arrived at this same conclusion independently, despite never having earned even an undergraduate degree. Indeed, most retellings of quantum computing’s origin story overlook Fredkin’s pivotal role.
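To give a sense of how tiny that bit of heat is: the bound now known as Landauer's principle is usually written as below, where $k_B$ is Boltzmann's constant and $T$ is the temperature of the surroundings. Room temperature (300 K) is assumed here purely for illustration.

$$
Q_{\min} = k_B T \ln 2 \approx \left(1.38\times10^{-23}\ \mathrm{J\,K^{-1}}\right)\left(300\ \mathrm{K}\right)(0.693) \approx 2.9\times10^{-21}\ \mathrm{J},
\qquad
\Delta S = \frac{Q_{\min}}{T} = k_B \ln 2 .
$$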

Fredkin’s unusual career began when he enrolled at the California Institute of Technology in 1951. Although he did brilliantly on his entrance exams, he wasn’t interested in homework, and he had to work two jobs to pay tuition. Doing poorly in school and running out of money, he withdrew in 1952 and enlisted in the Air Force to avoid being drafted for the Korean War.

A few years later, the Air Force sent Fredkin to MIT Lincoln Laboratory to help test the nascent SAGE air defense system. He learned computer programming and soon became one of the best programmers in the world—a group that probably numbered only around 500 at the time.

Upon leaving the Air Force in 1958, Fredkin worked at Bolt, Beranek, and Newman (BBN), which he convinced to purchase its first two computers and where he got to know MIT professors Marvin Minsky and John McCarthy, who together had pretty much established the field of artificial intelligence. In 1962 he accompanied them to Caltech, where McCarthy was giving a talk. There Minsky and Fredkin met with Richard Feynman ’39, who would win the 1965 Nobel Prize in physics for his work on quantum electrodynamics. Feynman showed them a handwritten notebook filled with computations and challenged them to develop software that could perform symbolic mathematical computations.

Fredkin left BBN in 1962 and started Information International Incorporated (Triple-I), one of the world’s first AI startups. When Triple-I went public in 1968 and Fredkin became a millionaire, Minsky recruited him to become the associate director of his AI Lab at MIT. Three years later Fredkin became director of Project MAC, the progenitor of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). MIT made him a full professor, despite his lack of academic credentials. But Fredkin soon tired of that too, so in 1974 he headed back to Caltech to spend a year with Feynman. The deal was that Fredkin would teach Feynman computing, and Feynman would teach Fredkin quantum physics.

Fredkin came to understand quantum physics, but he didn’t believe it. He thought the fabric of reality couldn’t be based on anything continuous. Quantum mechanics holds that quantities like charge and mass are quantized (made up of discrete, countable units that cannot be subdivided) but that things like space, time, and the wave function are fundamentally continuous. Fredkin, in contrast, believed (and still believes) with almost religious conviction that space and time must be quantized as well, and that the fundamental building block of reality is thus computation. Reality must be a computer! In 1978 Fredkin taught a graduate course at MIT called Digital Physics, which explored ways of reworking modern physics along such digital principles.

Feynman, however, remained unconvinced that there were meaningful connections between computing and physics beyond using computers to compute algorithms. So when Fredkin asked his friend to deliver the keynote address at the 1981 conference, he initially refused. When promised that he could speak about whatever he wanted, though, Feynman changed his mind—and laid out his ideas for how to link the two fields in a detailed talk that proposed a way to perform computations using quantum effects themselves.


Feynman explained that computers are poorly equipped to help simulate, and thereby predict, the outcome of experiments in particle physics, something that’s still true today. Modern computers, after all, are deterministic: give them the same problem, and they come up with the same solution. Quantum physics, on the other hand, is probabilistic, and the number of possible configurations a simulation must keep track of grows exponentially with the number of particles. So as a simulation adds particles, it takes exponentially longer to perform the necessary computations on possible outputs. The way to move forward, Feynman asserted, was to build a computer that performed its probabilistic computations using quantum mechanics.
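The scaling problem Feynman described can be made concrete with a little arithmetic. The sketch below assumes the simplest possible model: n two-level particles (qubits) with no symmetry to exploit, whose full quantum state is stored as a dense list of complex amplitudes at 16 bytes each (double-precision complex numbers). The function names are illustrative only.

```python
def amplitudes_needed(n_particles: int) -> int:
    """Complex amplitudes in the full state vector of n two-level particles."""
    # Each added particle doubles the number of joint configurations.
    return 2 ** n_particles


def memory_bytes(n_particles: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for that state vector, assuming 16-byte double-precision complex numbers."""
    return amplitudes_needed(n_particles) * bytes_per_amplitude


for n in (10, 30, 50):
    print(f"{n} particles: {amplitudes_needed(n):,} amplitudes, {memory_bytes(n):.3e} bytes")
```

Under these assumptions, 30 particles already need about 17 GB of memory just to write the state down, and 50 particles need about 18 petabytes, before a single step of the simulation is computed. A quantum computer sidesteps this by letting the hardware hold the state itself.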

Feynman hadn’t prepared a formal paper for the conference, but with the help of Norm Margolus, PhD ’87, a graduate student in Fredkin’s group who recorded and transcribed what he said there, his talk was published in the International Journal of Theoretical Physics under the title “Simulating Physics with Computers.” This, along with Fredkin’s article “Conservative Logic,” coauthored with MIT research scientist Tommaso Toffoli (and based in part on a Digital Physics term paper written by William Silver ’75, SM ’80), formed the basis of the nascent field.
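The “Conservative Logic” approach modeled computation with gates that are reversible (no information is erased, so Landauer’s heat cost need not be paid) and that also conserve the number of 1s passing through them. Its best-known primitive, now called the Fredkin gate, is a controlled swap. The minimal Python sketch below (function name illustrative) checks both properties exhaustively over all eight 3-bit inputs, and shows how fixing one input to 0 turns the gate into an AND, at the cost of extra “garbage” outputs that are kept rather than erased.

```python
from itertools import product


def fredkin(c: int, a: int, b: int) -> tuple[int, int, int]:
    """Controlled swap: if control bit c is 1, swap a and b; otherwise pass them through."""
    return (c, b, a) if c else (c, a, b)


inputs = list(product((0, 1), repeat=3))
outputs = [fredkin(*bits) for bits in inputs]

# Reversible: the 8 input patterns map one-to-one onto the 8 output patterns...
assert sorted(outputs) == sorted(inputs)
# ...and the gate is its own inverse, so every input can be recovered.
assert all(fredkin(*fredkin(*bits)) == bits for bits in inputs)
# Conservative: the number of 1s going in equals the number coming out.
assert all(sum(fredkin(*bits)) == sum(bits) for bits in inputs)

# With b fixed to 0, the third output is c AND a; the other outputs are retained "garbage".
assert all(fredkin(c, a, 0)[2] == (c & a) for c, a in product((0, 1), repeat=2))
print("Fredkin gate is reversible and conservative")
```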

In 1983, Bennett and Gilles Brassard, a professor at the University of Montreal, invented what’s now called quantum cryptography—a way to use quantum mechanics to send information while preventing eavesdropping. Feynman, meanwhile, continued to develop his idea, as explained in a talk titled “Tiny Computers Obeying Quantum Mechanical Laws” that he gave at both Los Alamos National Laboratory and a 1984 conference on optics.

Still, quantum computers would likely have remained an intellectual plaything were it not for Peter Williston Shor, PhD ’85, who in 1994 came up with an approach that could use one of Feynman’s as-yet-unbuilt quantum computers and some clever number theory to quickly factor a large number. This caught the attention of governments and corporations, since the security of widely used public-key cryptosystems such as RSA depends on the fact that it is easy to multiply together two very large prime numbers but extraordinarily difficult to break the product back into its prime factors. With Shor’s algorithm (as it is now called) and a big enough quantum computer on which to run it, that task would become simple, and much of the world’s confidential data moving over the airwaves and across the Internet could be readily decrypted once intercepted.
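The quantum computer is needed for only one step of Shor’s method: finding the period, or order, r of a^x mod N, the smallest r > 0 with a^r ≡ 1 (mod N). The rest is classical number theory. In the minimal Python sketch below, a brute-force loop stands in for the quantum period-finding subroutine, so it only works for toy numbers; the function names are illustrative, not from any library.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force stands in for quantum period finding; classically this
    takes time exponential in the number of digits of n.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_reduction(n: int, a: int):
    """Try to split n using base a; return a factor pair, or None if this base fails."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: retry with a different a
    y = pow(a, r // 2, n)         # a^(r/2) mod n, a square root of 1 mod n
    if y == n - 1:
        return None               # a^(r/2) ≡ -1 (mod n): retry with a different a
    p = gcd(y - 1, n)             # n divides (y - 1)(y + 1) but neither factor alone
    return p, n // p


print(shor_classical_reduction(15, 7))
```

For N = 15 and a = 7, the order is 4, so 7² mod 15 = 4, and gcd(3, 15) recovers the factors 3 and 5. When a base fails, the method simply picks another a at random and tries again.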

Beyond spawning the seminal papers in the field, the 1981 conference also yielded a photo of some of the greatest thinkers in computing and physics alive at the time. Taken on the lawn of Endicott House, the photo includes Feynman and Fredkin; Freeman Dyson, one of the most talented physicists of the 20th century; Konrad Zuse, the German engineer who had built the world’s first fully programmable automatic digital computer in 1941; Hans Moravec, who had just built a robot that could navigate by sight; Danny Hillis ’78, SM ’81, PhD ’88, who went on to found Thinking Machines and hired Feynman as its first employee; and many others who are now household names (in the households of computer scientists and physicists, at least). It’s reminiscent of the famous 1927 photograph of the Fifth Solvay Conference on Electrons and Photons that shows Albert Einstein, Niels Bohr, Wolfgang Pauli, Werner Heisenberg, and other leading figures of the nascent field of quantum mechanics.

Alas, Charlie Bennett isn’t in the photo: he’s the person who snapped the picture.

Physics of Computation Conference, Endicott House, MIT, May 6–8, 1981.

1 Freeman Dyson, 2 Gregory Chaitin, 3 James Crutchfield, 4 Norman Packard, 5 Panos Ligomenides, 6 Jerome Rothstein, 7 Carl Hewitt, 8 Norman Hardy, 9 Edward Fredkin, 10 Tom Toffoli, 11 Rolf Landauer, 12 John Wheeler, 13 Frederick Kantor, 14 David Leinweber, 15 Konrad Zuse, 16 Bernard Zeigler, 17 Carl Adam Petri, 18 Anatol Holt, 19 Roland Vollmar, 20 Hans Bremermann, 21 Donald Greenspan, 22 Markus Buettiker, 23 Otto Floberth, 24 Robert Lewis, 25 Robert Suaya, 26 Stan Kugell, 27 Bill Gosper, 28 Lutz Priese, 29 Madhu Gupta, 30 Paul Benioff, 31 Hans Moravec, 32 Ian Richards, 33 Marian Pour-El, 34 Danny Hillis, 35 Arthur Burks, 36 John Cocke, 37 George Michaels, 38 Richard Feynman, 39 Laurie Lingham, 40 P. S. Thiagarajan, 41 Marin Hassner, 42 Gérard Vichniac, 43 Leonid Levin, 44 Lev Levitin, 45 Peter Gacs, 46 Dan Greenberger. (Photo courtesy Charles Bennett)

Editor’s note: Simson Garfinkel uncovered this story while researching his book Law and Policy for the Quantum Age, coauthored with Chris Hoofnagle (forthcoming from Cambridge University Press).
