Battle to Provide Chips for the AI Boom Heats Up

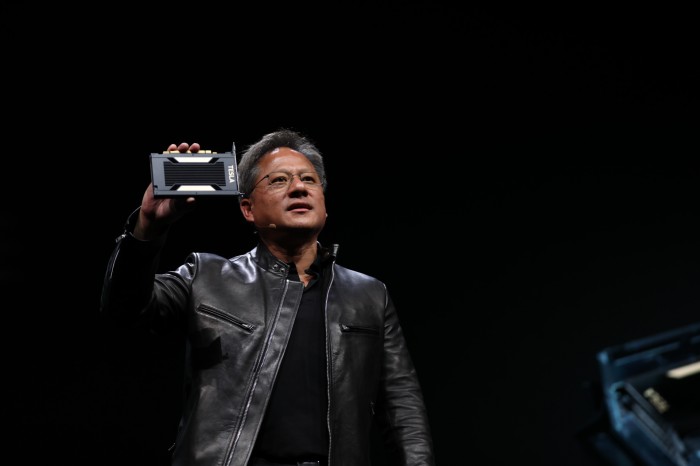

Jensen Huang beamed out over a packed conference hall in San Jose, California, on Wednesday as he announced his company’s new chip aimed at accelerating artificial intelligence algorithms. But metaphorically speaking, the CEO of chip maker Nvidia was looking over his shoulder.

Nvidia’s profits and stock have surged over the past few years because the graphics processors it invented to power gaming and graphics production have enabled many recent breakthroughs in machine learning (see “10 Breakthrough Technologies 2013: Deep Learning”). But as investment in artificial intelligence soars, Huang’s company now faces competition from Intel, Google, and others working on their own AI chips.

At Nvidia’s annual developer conference on Wednesday, Huang carefully avoided mentioning any competitors by name as he introduced Nvidia’s latest chip, named the Tesla V100. He referred to Google only as “some people,” for example. But he made clear swipes at the technology of Nvidia’s challengers, particularly when talking about the big opportunity opening up to supply AI chips for use in cloud computing.

Companies in many industries, such as health care and finance, are investing in machine-learning infrastructure. Leading cloud computing providers Google, Amazon, and Microsoft are all betting that many companies will want to pay them to run artificial intelligence software, and they plan to spend heavily on new hardware to support that demand.

Nvidia came to dominate the burgeoning AI chip market by smartly seizing on a lucky coincidence. The basic mathematical operations needed for computer graphics are the same as those underlying an approach to machine learning known as artificial neural networks. Starting in 2012, researchers showed that by putting new power behind that technique, graphics processors permitted software to become much, much smarter at tasks such as interpreting images or speech.
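The overlap between graphics and neural-network math can be illustrated with a minimal Python sketch. It is an illustration only, not Nvidia's code: the `matmul` helper and all the numbers are assumptions chosen for the example. The same matrix multiplication routine first rotates two points, as a graphics pipeline might, and then computes one layer of a small neural network.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows (hypothetical helper)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

# Graphics: rotate two 2D points by 90 degrees.
# Each column of `points` is one point: (1, 0) and (0, 1).
theta = math.pi / 2
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]
points = [[1.0, 0.0],   # x coordinates
          [0.0, 1.0]]   # y coordinates
rotated = matmul(rotation, points)

# Neural network: one dense layer is weights times inputs,
# followed by a simple nonlinearity (ReLU). Values are made up.
weights = [[0.5, -1.0],
           [2.0,  1.0]]
inputs = [[1.0],
          [3.0]]
pre_activation = matmul(weights, inputs)
activations = [[max(0.0, v) for v in row] for row in pre_activation]

print(rotated)
print(activations)
```

Both halves spend nearly all their work inside `matmul`, which is the kind of operation graphics processors run across many cores in parallel.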

As the AI market has grown, Nvidia has tweaked its chip designs with features to support neural networks. The new V100 chip announced this week is the culmination of that effort, and features a new core specialized for accelerating deep-learning math.

Huang said the V100’s power and energy efficiency would help companies and cloud providers dramatically upgrade their capacity to use AI. “You could increase the throughput of your data center by 15 times instead of having to build new data centers,” he said.

Nvidia’s new competitors argue that they can make hardware faster and more efficient at running AI software by designing chips tuned for the purpose from scratch instead of adapting graphics chip technology.

For example, Intel promises to release a chip for deep learning later this year built on top of technology acquired with startup Nervana in 2016 (see “Intel Outside as Other Companies Prosper from Graphics Chips”).

The company is also preparing to release a product to speed up deep learning based on technology from its $16.7 billion acquisition of Altera, which made chips called FPGAs that can be reconfigured to power specific algorithms. Microsoft has invested heavily in using FPGAs to power its machine-learning software and made them a core piece of its cloud platform, Azure.

Meanwhile, Google disclosed last summer that it was already using a chip customized for AI, developed in-house, called a Tensor Processing Unit, or TPU. The chips powered the AlphaGo software’s victory over a champion player of the board game Go last year. They aren’t for sale, but Google says companies that use its cloud computing services will get the benefits of their power and energy efficiency.

Several engineers who built Google’s chip have since left the company to form Groq, a startup with $10 million in funding that is building a specialized machine-learning chip. Other startups working on similar projects include Wave Computing, which says it is already letting customers test its hardware.

Huang claimed Wednesday that Nvidia’s technology hits a sweet spot other efforts don’t. Custom chips like Google’s TPU are too inflexible to work equally well with many different kinds of neural networks, he said, a significant downside given how quickly new ideas are being tested and adopted in AI. He claimed that FPGAs, which Microsoft favors and Intel is betting on, consume too much energy.

“We are creating the most productive platform for deep learning,” he said. As Huang’s rivals reveal more about their products this year, that claim will come under close scrutiny.
