Google Builds a Robotic Hive-Mind Kindergarten

How many robots does it take to open a door? If the robots are trying to figure out how to do the task from scratch, then it helps to have as many as possible involved.

In three separate research papers posted online Monday, researchers at Google and other Alphabet subsidiaries showed several ways in which robots can learn to perform simple tasks more quickly by sharing different types of learning experiences.

The researchers are training teams of industrial robots to perform simple tasks using a technique called reinforcement learning, which combines trial and error with positive feedback. For the moment, these tasks are extremely simple, like opening doors or nudging objects around. But such advances will be crucial if robots are ever going to be capable of helping with everyday chores like folding the laundry or doing the dishes.

Although robots are becoming cheaper and more capable, programming them to behave reliably in unpredictable everyday situations is a near impossible task. Reinforcement learning offers a solution, by letting robots essentially program themselves as they learn on the job. But it can be very time-consuming for an individual bot to try many different ways of performing a chore. Sharing the learning process, a technique often called cloud robotics, can help accelerate the process, although the idea remains at an early stage (see “10 Breakthrough Technologies: Robots That Teach Each Other”).

In the three papers released Monday, Sergey Levine, a research scientist at Google who is leading the robot learning effort, and colleagues detail several learning strategies that can be distributed across a group of robots.

In each case, the robots involved use neural networks that try to predict the result of different actions. Each robot varies its behavior slightly, and then reinforces the variations that give bigger rewards. These networks are then periodically fed back to a central server which builds a new neural network that combines all of the learned behavior, and that network is redistributed back to the robots for another round of training.

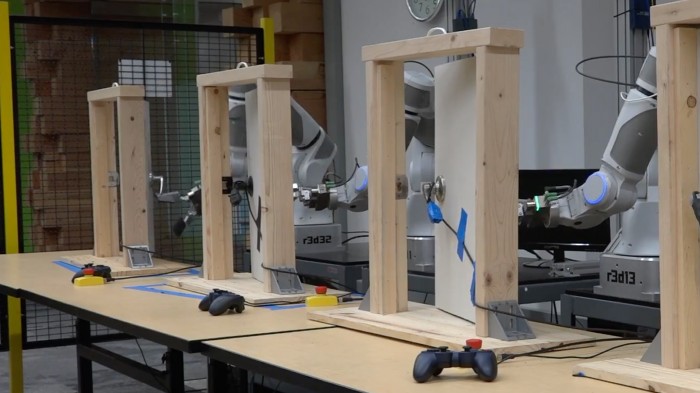

In the first experiment, the goal was turning a door handle and opening a door, and four different robots were set to work practicing on different doors and handle types. "Since the robots were trained on doors that look different from each other, the final policy succeeds on a door with a handle that none of the robots had seen before," Levine wrote in a blog post coauthored with Timothy Lillicrap, a researcher at Google DeepMind, and Mrinal Kalakrishnan, a researcher at X, Google's "moonshot" research facility.

In the second experiment, the robots' learning process was sped up thanks to the interactions of a person who guides a robot arm. And in a third, a robot figured out how to move and rotate objects using input from a camera and a learned ability to predict how actions would change the picture—what the researchers describe as a simple physical model of the world. Stephanie Tellex, an assistant professor at Brown University who studies robot learning, says this is an exciting idea. "Predicting the physical effects of actions like pushing is exciting because it enables the robot to understand something about how the world works," she says.

The company is evidently keen to make the most of what could be a coming revolution in the field thanks to the application of machine-learning techniques. Already some robotics manufacturers are exploring ways to use reinforcement learning to streamline the programming of their products.

"Of course, the kinds of behaviors that robots today can learn are still quite limited," the authors wrote. "However, as algorithms improve and robots are deployed more widely, their ability to share and pool their experiences could be instrumental for enabling them to assist us in our daily lives."

Keep Reading

Most Popular

Large language models can do jaw-dropping things. But nobody knows exactly why.

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

How scientists traced a mysterious covid case back to six toilets

When wastewater surveillance turns into a hunt for a single infected individual, the ethics get tricky.

The problem with plug-in hybrids? Their drivers.

Plug-in hybrids are often sold as a transition to EVs, but new data from Europe shows we’re still underestimating the emissions they produce.

Stay connected

Get the latest updates from

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.