The Emerging Challenge of Augmenting Virtual Worlds With Physical Reality

Augmented reality provides a live view of the real world with computer-generated elements superimposed. Pilots have long used head-up displays to access air speed data and other parameters while they fly. Some smartphone cameras can superimpose computer-generated characters on to the view of the real world. And emerging technologies such as Google Glass aim to superimpose useful information, such as navigation directions and personal data, on to a real-world view.

But there’s a related problem that most people will not yet have considered. Imagine wearing a virtual reality headset, immersed in a virtual world quite unlike the physical one around you. Now suppose you want to take a sip of water from a cup on the desk in front of you.

The only way to succeed is by feeling your way to the cup while still immersed in the virtual world or by removing the virtual reality headset and returning to the physical world. Neither of these is particularly good, say Pulkit Budhiraja and pals at the University of Illinois at Urbana-Champaign, who have come up with a solution.

These guys have been testing ways of superimposing physical reality onto a virtual reality experience. The goal is to find a way to allow users to interact with real physical objects while they remain immersed in a virtual world: a kind of augmented virtual reality.

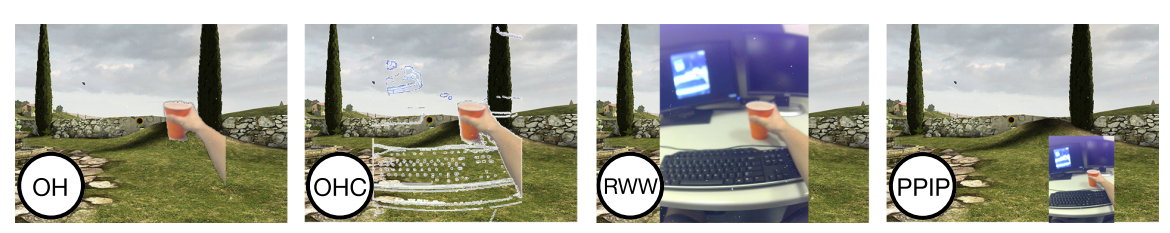

Budhiraja and co began by modifying an Oculus Rift virtual reality headset with a pair of cameras that produce a stereo view of the real world in front of the headset. They then came up with four different ways of superimposing the real world images onto the virtual world for the task of picking up and drinking from a cup, while remaining immersed.

The first superimposes the user’s hand and the cup onto the virtual world. The second also includes the outlines of nearby real objects such as a desk and keyboard to provide a context for any hand movements. The third creates a window in the centre of the view showing the real world, which obscures the virtual world. And the final option is a kind of picture-in-picture view of the real world in the bottom right-hand corner of the virtual world.
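To make the four options concrete, here is a minimal sketch of how each might be composited from a single camera frame and a single rendered frame. It is an illustration under stated assumptions, not the team's implementation: the function names, the mode names, the window and inset sizes, and the crude gradient-threshold edge detector are all hypothetical, and the hand-and-cup mask is assumed to come from some separate segmentation step that the article does not describe.

```python
import numpy as np

def edge_mask(gray, threshold=0.2):
    """Crude edge detector (an assumption): gradient magnitude above a threshold."""
    gy, gx = np.gradient(gray.astype(float))
    return np.hypot(gx, gy) > threshold

def composite(virtual, real, mode, object_mask=None):
    """Blend a real camera frame into a virtual frame.

    virtual, real: H x W x 3 float arrays with values in [0, 1].
    object_mask:   H x W boolean array marking hand and cup pixels
                   (assumed to be supplied by a separate segmentation step).
    """
    out = virtual.copy()
    h, w = virtual.shape[:2]
    if mode in ("objects", "objects_and_edges"):
        if object_mask is None:
            raise ValueError("object_mask is required for this mode")
        # Option 1: show only the hand and cup pixels.
        out[object_mask] = real[object_mask]
        if mode == "objects_and_edges":
            # Option 2: also draw the edges of nearby objects as white lines.
            edges = edge_mask(real.mean(axis=2)) & ~object_mask
            out[edges] = 1.0
    elif mode == "window":
        # Option 3: a central window onto the real world (half the view, assumed size).
        top, left = h // 4, w // 4
        out[top:top + h // 2, left:left + w // 2] = \
            real[top:top + h // 2, left:left + w // 2]
    elif mode == "picture_in_picture":
        # Option 4: a quarter-scale copy of the real view in the bottom right corner.
        ph, pw = h // 4, w // 4
        out[h - ph:, w - pw:] = real[::4, ::4][:ph, :pw]
    else:
        raise ValueError(f"unknown mode: {mode}")
    return out
```

Calling `composite(virtual, real, "objects_and_edges", mask)` on each pair of frames would give the second option, in which the virtual scene stays visible everywhere except the hand, the cup and a thin set of contextual outlines.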

The team recruited 10 volunteers to test each of these scenarios while immersed in three different types of virtual worlds. One world involved watching a film, which required no user interaction. Another was a first-person shooter game that required significant user involvement. And the final environment was a car racing game, which required high levels of user involvement.

In each case, the user had to reach out, pick up a cup of water and take a sip while remaining immersed.

The results were something of a surprise to Budhiraja and co, who expected people to prefer the first type of superimposition, showing just the cup and hand. They thought people would prefer this because it was not overly distracting.

Instead, most users preferred the option which also showed the context of any hand movement by superimposing the edges of salient objects such as desks, tables and so on.

One user described the reasons for the preferred choice like this: “The extra lines helped me find the cup and put it back, and it wasn’t distracting, it didn’t break my concentration on the game.” Another who also preferred the context over the hands and cup alone said: “I felt lost and had to feel the physical space around me to look for the cup.”

That’s interesting work on a problem that most of us have yet to experience—how to combine real world information in an immersive virtual environment. The answer is to include the relevant objects and the edges of other objects that provide context.

That’s what you might call a first world problem—not something that most people on the planet are likely to worry about in the near future. Nevertheless, virtual reality technology is evolving rapidly and devices such as the Oculus Rift are finally bringing it to the consumer.

So here’s a question for TR readers about the future of this technology: how long do you think it will be before half of your friends have experienced the problem this paper is designed to solve? Answers in the comments section please.

Ref: arxiv.org/abs/1502.04744 : Where’s My Drink? Enabling Peripheral Real World Interactions While Using HMDs
