The tech industry still forecasts new products based on an observation made in 1965 by Intel cofounder Gordon Moore. In what became known as Moore’s Law, he noticed that the number of transistors on a chip tended to double at a regular pace, a rate he later put at roughly every two years, and that has more or less held true ever since. More transistors have brought ever more computing power. But the transistors and other features of a chip can get only so much smaller. Here are other ways that computers can be made more useful.
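To make the doubling arithmetic concrete, here is a minimal Python sketch of what "doubling every two years" implies over time. The function name `projected_transistors` and the starting count of 1,000 transistors are hypothetical, chosen only for illustration; real chips do not follow the curve exactly.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming steady doubling.

    This is an idealized model of Moore's Law, not a description of
    any real product roadmap.
    """
    # Each doubling period multiplies the count by 2.
    return start_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Hypothetical starting point: a chip with 1,000 transistors.
    for years in (2, 10, 20):
        print(years, "years:", projected_transistors(1_000, years))
```

Under this idealized model, ten doublings over 20 years multiply the count by 1,024, which is why even a modest slowdown in the doubling period matters so much to forecasts.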

Resistive Memory

Rice University researchers say that porous silicon oxide is the magic ingredient that enables a technology called “resistive random-access memory” (RRAM), which could hold a terabyte of data on a device the size of a postage stamp, or more than 50 times the capacity of today’s flash memory devices. While RRAM usually has to be produced at high temperatures and at high cost, the researchers have demonstrated a way to make it at room temperature. The research was published online in the American Chemical Society’s journal Nano Letters in July.

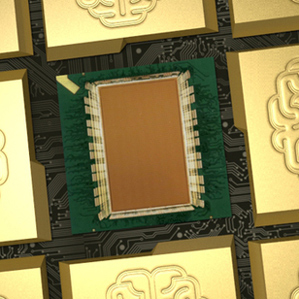

Brain Design

IBM and Cornell University published a paper in August documenting a new computer chip, called TrueNorth, whose architecture is based on that of the human brain. The chip uses a million digital neurons and 256 million synapse equivalents to perform tasks such as recognizing sensory data, including speech and images. IBM says that the chip can fit 16 times more simulated neurons than other brain-inspired chips. The chip is significant because it can be scaled up into larger systems that process sensory data while still using significantly less power than today’s microprocessors. The research was published in the journal Science.

Deeper Detection

To develop self-driving cars and better search tools, software must become better at recognizing not only where objects are but what they are. In a paper submitted as part of an annual academic contest called the “ImageNet Large Scale Visual Recognition Challenge,” Google researchers explain how an image detection method called “DeepMultiBox” could be important. The researchers say they’ve solved some of the shortcomings seen in one of the winning technologies from 2012. That model had trouble detecting multiple instances of the same object in a single image. Google’s researchers created a method that teaches the detection tool to identify objects similar to ones it has seen before, even if it has never been explicitly taught to identify them. For example, it could be trained to know what a dog is by seeing one breed, but then correctly identify the animal when looking at other breeds as well.

The takeaway:

Engineers continue to cram more transistors on chips—Intel has announced a new process to manufacture chips with features as small as 14 nanometers. However, those efforts can only go so far with today’s manufacturing technologies. With this in mind, scientists are increasingly looking to chips with new architectures.

Do you have a big question? Send suggestions to questionoftheweek@technologyreview.com.
