How to Spot a Social Bot on Twitter

Back in 2011, a team from Texas A&M University carried out a cyber sting to trap nonhuman Twitter users that were polluting the Twittersphere with spam. Their approach was to set up “honeypot” accounts which posted nonsensical content that no human user would ever be interested in. Any account that retweeted this content, or friended the owner, must surely be a nonhuman user known as a social bot.
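
The honeypot rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Texas A&M team's code: the `Interaction` record, the `flag_suspects` function, and all account names are hypothetical, and the sketch assumes an interaction log is already available.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    actor: str   # account that performed the action
    action: str  # "retweet" or "follow"
    target: str  # account that was retweeted or followed


def flag_suspects(interactions, honeypots):
    """Return every account that retweeted or followed a honeypot."""
    honeypots = set(honeypots)
    return {
        i.actor
        for i in interactions
        if i.target in honeypots
        and i.action in {"retweet", "follow"}
        and i.actor not in honeypots  # honeypots do not flag each other
    }


# Invented log entries, for illustration only.
log = [
    Interaction("alice", "retweet", "news_daily"),
    Interaction("spam4u", "retweet", "honeypot_01"),
    Interaction("xx_promo_xx", "follow", "honeypot_02"),
    Interaction("bob", "follow", "alice"),
]
suspects = flag_suspects(log, ["honeypot_01", "honeypot_02"])
print(sorted(suspects))
```

The rule is deliberately blunt: because the honeypot content is meaningless to people, any engagement with it is treated as evidence of automation.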

The team set up 60 honeypots and harvested some 36,000 potential social bot accounts. The result surprised many observers because of the sheer number of nonhuman accounts that were active. These bots were generally unsophisticated and simply retweeted more or less any content they came across.

Since then, social bots have become significantly more advanced. They search social networks for popular and influential people, follow them and capture their attention by sending them messages. These bots can identify keywords and find content accordingly and some can even answer inquiries using natural language algorithms.

That makes identifying social bots much more difficult. But today, Emilio Ferrara and pals at Indiana University in Bloomington say they have developed a way to spot sophisticated social bots and distinguish them from ordinary human users.

The technique is relatively straightforward. They started by gathering a set of social bots from the original group outed in 2011. They chose 15,000 of these and collected each account's 200 most recent tweets as well as the 100 most recent tweets mentioning it. That produced a dataset of some 2.6 million tweets. The team then gathered a similar dataset for 16,000 human users consisting of more than 3 million tweets.

Finally, the researchers created an algorithm called Bot or Not? to mine this data looking for significant differences between the properties of human users and social bots. The algorithm looked at over 1,000 features associated with these accounts, such as the number of tweets and retweets each user posted, the number of replies, mentions and retweets each received, the username length, and even the age of the account.

It turns out that there are significant differences between human accounts and bot accounts. Bots tend to retweet far more often than humans and they also have longer usernames and younger accounts. By contrast, humans receive more replies, mentions, and retweets.
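
To make the comparison concrete, here is a minimal Python sketch that computes a handful of the features named above and averages them for each group. It is not the Bot or Not? algorithm, which the article says examines more than 1,000 features; the `Account` record, the function names, and every number in the toy data are invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Account:
    screen_name: str
    tweets: int             # original tweets posted
    retweets: int           # retweets posted
    replies_received: int
    mentions_received: int
    retweets_received: int
    account_age_days: int


def features(a):
    """Compute a few per-account features mentioned in the article."""
    posted = a.tweets + a.retweets
    return {
        "retweet_ratio": a.retweets / posted if posted else 0.0,
        "username_length": len(a.screen_name),
        "account_age_days": a.account_age_days,
        "interactions_received": (
            a.replies_received + a.mentions_received + a.retweets_received
        ),
    }


def group_means(accounts):
    """Average each feature over a group of accounts."""
    rows = [features(a) for a in accounts]
    return {name: mean(r[name] for r in rows) for name in rows[0]}


# Toy data, invented to mirror the trends the article reports.
bots = [
    Account("deal_alerts_247_now", 10, 90, 0, 1, 0, 40),
    Account("free_followers_4u", 20, 80, 1, 0, 1, 60),
]
humans = [
    Account("maria_k", 80, 20, 15, 20, 5, 900),
    Account("jtanaka", 70, 30, 10, 12, 8, 1500),
]

bot_means = group_means(bots)
human_means = group_means(humans)
for name in bot_means:
    print(f"{name}: bots={bot_means[name]:.2f} humans={human_means[name]:.2f}")
```

In practice, feature vectors like these would be fed to a trained classifier rather than compared by eye; the article does not say which one the researchers use.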

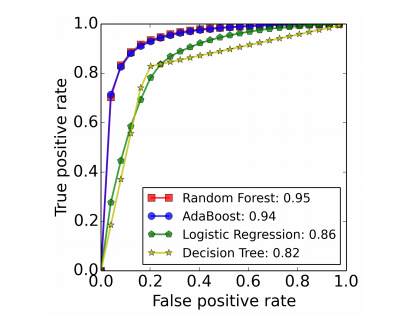

Together these factors create a kind of fingerprint that can be used to detect bots. “Bot or Not? achieves very promising detection accuracy,” say Ferrara and pals.

There are some limitations, however. First, the team trained on social bots originally identified in 2011, so it’s quite possible that there are now more advanced bots that are harder to detect.

And there are also borderline cases that contain posts from both humans and social bots, for example when humans lend their accounts to bots or when accounts have been hacked by bots. “Detecting these anomalies is currently impossible,” admit Ferrara and co.

Nevertheless, this is an interesting start in the process of identifying social bots. But it is a task that is likely to become more difficult as time goes on. With only 140 characters, Twitter places significant constraints on the type of communication that is possible. It is therefore much easier for a computer to recreate the very limited behavior that humans demonstrate in this space.

For those interested, Ferrara and co have made their Bot or Not? algorithm available at this website. Simply enter the screen name of the Twitter user and it will analyze its features and most recent posts to determine the likelihood of it being a social bot.

It wasn’t working at the time of writing, perhaps the victim of an aggrieved social bot. But if it is working now, give it a try and post your thoughts in the comments section below.

Ref: http://arxiv.org/abs/1407.5225 : The Rise of Social Bots
