The Shadowy World of Wikipedia’s Editing Bots

In a little over a decade, Wikipedia has evolved from an Internet experiment into a global crowdsourcing phenomenon. Today, this online encyclopedia provides free access to more than 30 million articles in 287 languages.

Less well known is Wikidata, an information repository designed to share basic facts for use on different language versions of Wikipedia. Wikidata therefore plays a crucial role in lubricating the flow of information between these online communities.

Maintaining all this data is a difficult job. It requires significant editing and polishing, mostly involving mindless, repetitive tasks such as formatting links and sources but also adding basic facts.

So much of this work is automated. Behind the scenes, bots scan Wikipedia and Wikidata pages, continually polishing the content for human consumption.

But that raises some important questions. How much bot activity is there? What are these bots doing, and how does their activity compare with that of humans?

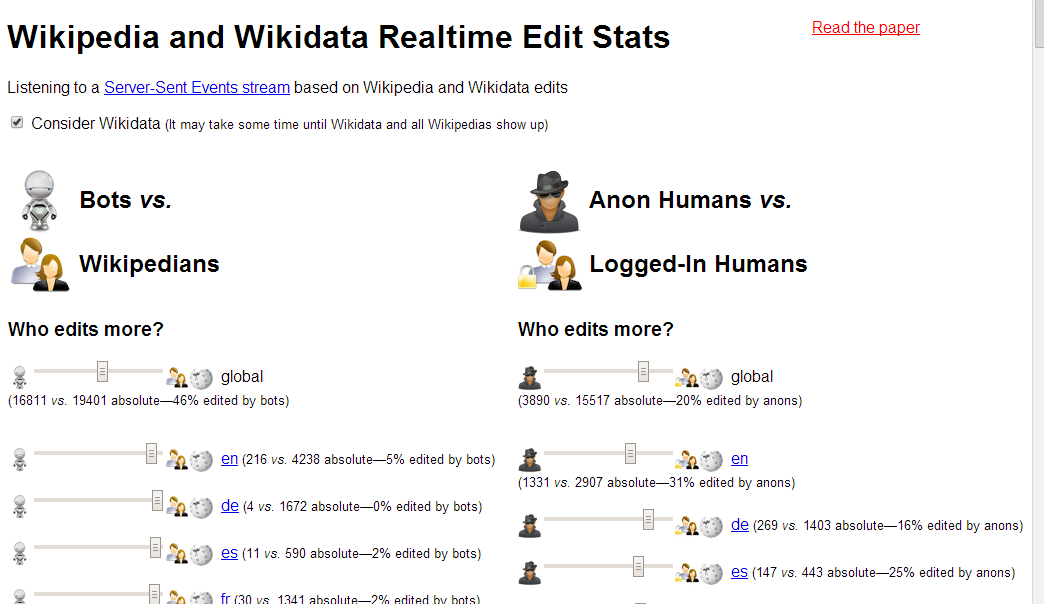

Today, we get an answer thanks to the work of Thomas Steiner at Google’s German operation in Hamburg. Steiner has created an application that monitors editing activity across all 287 language versions of Wikipedia and on Wikidata. And he publishes the results in real time online so that anybody can see exactly how many bots and humans are editing any of these sites at any instant.

For example, at the time of writing, across all language versions of Wikipedia there are 10,407 edits being carried out by bots and 11,148 by human Wikipedians. So that’s roughly a 48/52 split between bots and humans.

But a closer look at the data reveals some interesting variations. For example, only 5 percent of the edits to the English-language version of Wikipedia are being done by bots right now. By contrast, 94 percent of the edits to the Vietnamese version are by bots.

And on Wikidata, 77 percent of the 15,000 edits are being done by bots.
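Percentages like these come from a simple tally: count the edits on each site and note how many carry a bot flag. The sketch below is not Steiner's code. It assumes each edit arrives as a record with a `wiki` name and a boolean `bot` flag (field names chosen here to resemble Wikimedia's recent-changes feeds), and the sample records are made up for illustration.

```python
from collections import defaultdict

def bot_share_by_wiki(events):
    """Return {wiki: percentage of edits flagged as bot edits}."""
    totals = defaultdict(int)
    bots = defaultdict(int)
    for event in events:
        wiki = event["wiki"]
        totals[wiki] += 1
        if event["bot"]:
            bots[wiki] += 1
    return {wiki: 100.0 * bots[wiki] / totals[wiki] for wiki in totals}

# Made-up sample records, not real edit data.
sample_events = [
    {"wiki": "enwiki", "bot": False},
    {"wiki": "enwiki", "bot": False},
    {"wiki": "enwiki", "bot": True},
    {"wiki": "enwiki", "bot": False},
    {"wiki": "wikidatawiki", "bot": True},
    {"wiki": "wikidatawiki", "bot": True},
    {"wiki": "wikidatawiki", "bot": False},
    {"wiki": "wikidatawiki", "bot": True},
]

shares = bot_share_by_wiki(sample_events)
for wiki, share in sorted(shares.items()):
    print(f"{wiki}: {share:.0f} percent of edits by bots")
```

A live monitor would feed the same function from a stream of edits rather than a fixed list, but the counting is the same.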

Steiner’s page also lists the most active bots. Wikipedia and Wikidata have long recognized the damage that bots can do and so have strict guidelines about their behavior. Wikidata even lists bots with approved tasks.

What’s curious about the automated edits on Wikidata is that the most active bots are not on this list. For example, at the time of writing, a bot called Succubot is making 5,797 edits to Wikidata entries and yet appears to be unknown to Wikidata. What is this bot doing?
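Spotting bots like this amounts to comparing two lists: the bots seen editing and the bots with approved tasks. Here is a minimal sketch of that comparison. Only Succubot and its edit count come from the figures above. The other bot names, their counts, and the contents of the approved set are hypothetical placeholders.

```python
def unlisted_bots(edit_counts, approved):
    """Return (name, edits) pairs for bots absent from the approved set, busiest first."""
    missing = [(name, edits) for name, edits in edit_counts.items()
               if name not in approved]
    return sorted(missing, key=lambda pair: pair[1], reverse=True)

# Succubot's count is from the article; the other entries are hypothetical.
edit_counts = {"Succubot": 5797, "ExampleBotA": 1200, "ExampleBotB": 300}
approved = {"ExampleBotA"}  # hypothetical stand-in for Wikidata's approved list

flagged = unlisted_bots(edit_counts, approved)
for name, edits in flagged:
    print(f"{name}: {edits} edits, not on the approved list")
```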

Steiner’s page will give administrators a useful window into this seemingly shadowy behavior. In truth, any nefarious activity is usually spotted quickly and the perpetrator blocked. But this kind of oversight will still be hugely useful.

What’s more, Steiner has open-sourced the code so that anybody can use it to study the behavior of bots and humans in more detail.

An interesting corollary is that bots are becoming much more capable at producing articles of all kinds. The first Wikipedia bot, which was developed in 2002, automatically created entries for U.S. towns using a simple text template.

Today, there are automated feeds that produce stories about financial results and sporting results using simple templates: “Team A” beat “Team B” by “X amount” today in a match played at “Venue Y.” All that’s required is to cut and paste the relevant information into the correct places.
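To see how little is involved, here is a sketch of that match-report template using Python's standard `string.Template`. The field names (`winner`, `loser`, `margin`, `venue`) and the `write_report` helper are illustrative choices, not taken from any real news feed.

```python
from string import Template

# One fixed sentence with four slots, mirroring the example above.
MATCH_REPORT = Template(
    "$winner beat $loser by $margin today in a match played at $venue."
)

def write_report(result):
    """Fill the template; raises KeyError if any field is missing."""
    return MATCH_REPORT.substitute(result)

report = write_report({
    "winner": "Team A",
    "loser": "Team B",
    "margin": "X amount",
    "venue": "Venue Y",
})
print(report)
```

Swapping in a different dictionary produces a different story, which is all that the simplest of these feeds need to do.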

It’s not hard to see how this could become much more sophisticated. And while this kind of automated writing can be hugely useful, particularly for Wikipedia and its well-documented problems with manpower, it could also be used maliciously.

So ways of monitoring automated changes to text are likely to become more important in future.

Ref: arxiv.org/abs/1402.0412: Bots vs. Wikipedians, Anons vs. Logged-Ins
