Here’s how social-media firms should tackle online hate, according to physics

Policing online hate groups is like a never-ending game of whack-a-mole: moderators remove one neo-Nazi page on Facebook, only for another to appear hours later. It’s an approach that isn’t working, but a team of physicists has used network analysis to suggest several alternative strategies that might.

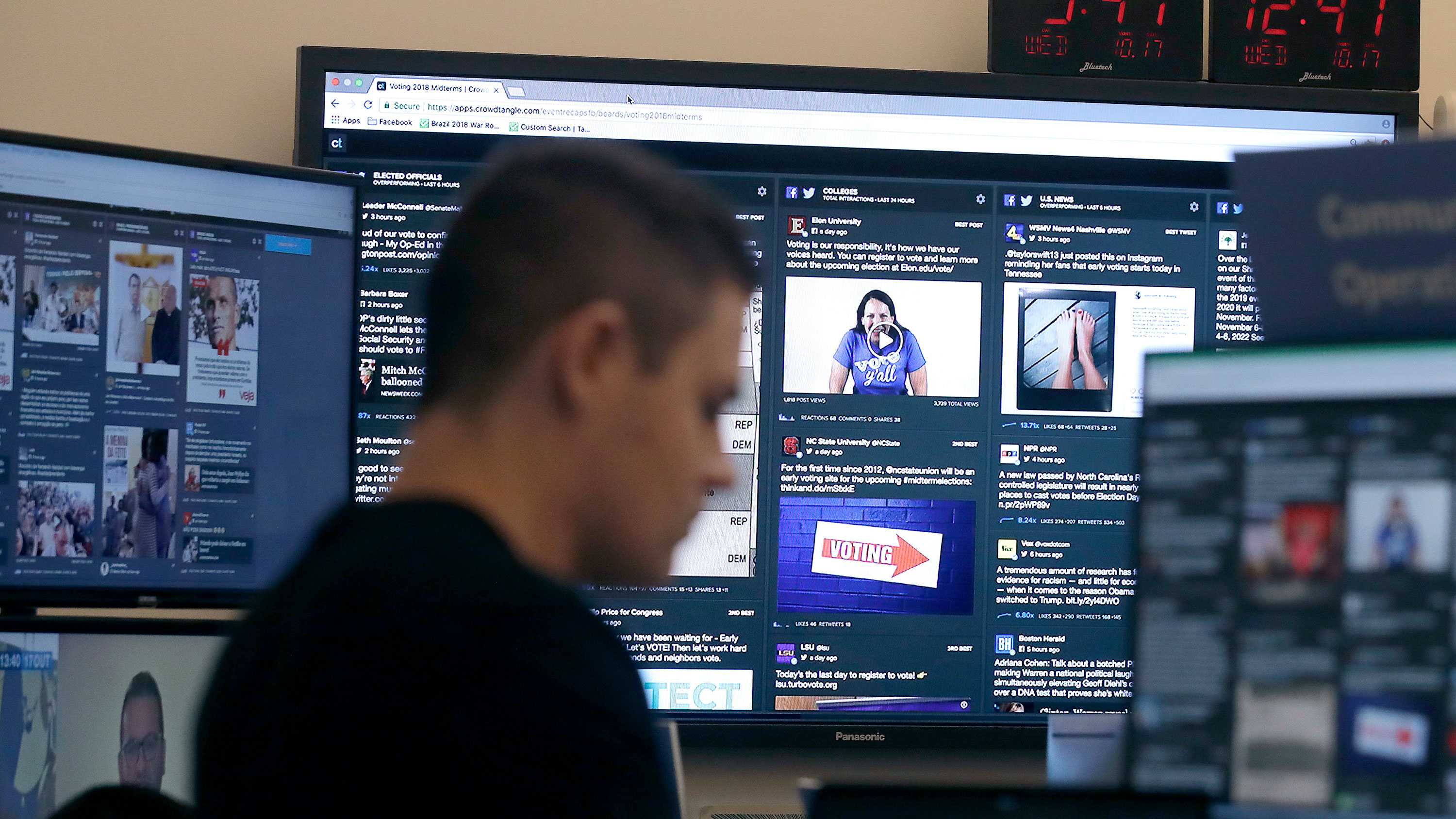

The scale of the problem: The team from George Washington University spent a few months examining the dynamics of “hate communities” (groups that organize individuals with similar views) on Facebook and on VKontakte, its Russian equivalent. They found that these networks are remarkably interconnected worldwide, crossing platforms and jumping between countries, continents, and languages, and that they are resilient at the micro level when attacked. Real-world evidence of this interconnectedness can be seen in the way white extremist attackers in Norway, New Zealand, and the US have explicitly drawn inspiration from one another.

The current approach is broken: The researchers’ mathematical model predicts that policing within a single platform, such as Facebook, can actually make the spread of hate speech worse and could eventually push it underground, where it’s even harder to study and combat. The team described its findings in a paper published in Nature this week.

What can be done? The researchers suggest four policies that social-media companies could implement:

— Ban relatively small hate clusters, rather than the largest. These are easier to locate, and eliminating them can help stop the larger clusters from forming in the first place.

— Ban a small number of users chosen at random from online hate clusters. This avoids banning whole groups of users, which can provoke outrage and allegations of speech suppression.

— Encourage clusters of “anti-hate” users to form; they can counteract hate clusters.

— Since many online hate groups hold opposing views, platform administrators could introduce an artificial group of users to sow division between them. The researchers found that such battles could bring down large hate clusters with opposing views.

How likely is it? Some of the policies, especially the last two, are pretty radical. But since current approaches are so profoundly ineffective, it’s surely worthwhile for social-media companies to try them out. Over to you, Mark Zuckerberg.

MIT Technology Review

Discover special offers, top stories, upcoming events, and more.