The geek

When Chris Schmandt was an undergraduate at MIT in 1977, he saw an advertisement for a campus job doing “graphics programming in PL/I.” It paid the same amount as his burger-flipping gig in the cafeteria, he’d learned the PL/I programming language as a computer science and engineering major, and he liked the idea of a job that didn’t require showering at the end of each shift. So he went to work for Nicholas Negroponte’s Architecture Machine Group (AMG), the forerunner of the MIT Media Lab.

The job involved programming a Model 85 frame buffer, which was one of the first computer graphics systems that could render a “frame” of colored pixels, allowing the machine to display text, graphics, diagrams, and even color photos. But first, somebody needed to write the low-level microcode for the frame buffer’s controller. Somebody had to procure fonts and code them up, too. That person was Schmandt. “I started painting pixels on the screen and I was hooked,” he says.

That job had huge ramifications, not just for Schmandt but for all of us: his work ultimately led to the development of modern navigation systems like the Garmin GPS and Google Maps, as well as the voice interfaces that people are increasingly using to interact with computers.

“I remember very clearly that Nicholas was telling everybody that someday every computer would have one of these built in,” said Schmandt of the frame buffer at his retirement celebration in December 2018. “It was prescient, but he didn’t say that every phone would have one!”

A circuitous route to the lab

Schmandt’s groundbreaking work integrating speech and computing almost didn’t happen. He started at MIT in 1969, and had he graduated with his entering class in 1973, he might never have encountered the frame buffer. But by 1971, he’d declared a major in urban studies, fallen in love with linguistics, and nearly completed a humanities degree … and was doing meteorology research through the MIT Undergraduate Research Opportunities Program (UROP). “I decided I didn’t know what I was doing,” he says.

So he withdrew from school to figure it out and turned his UROP into a full-time job in the meteorology department, transporting punch cards to the computer center in a shopping cart. Before long, he’d learned enough about programming to fix typos on the cards, which allowed him to avoid schlepping across campus a second time so his boss could fix them. But Schmandt soon got wanderlust and set out on what would be a five-year journey. He traveled—mainly hitchhiking—from London to Spain, Morocco, Algeria, Niger, Cameroon, Zaire, Uganda, and Kenya, where he was berated by the cops for not speaking Swahili, and scrambled up to 19,000 feet on a ridge leading to the summit of Everest. “You’ve never seen anything like it—huge, snowy peaks all around you, looking down on three glaciers,” he says. “And at night the glaciers sing and groan as they slowly grind down the valleys.”

Traveling along what was known as the Hippie Trail in India, he met some British and Australian programmers, who convinced him that programming was a good livelihood one could pursue anywhere. So in 1977, he returned to MIT and reenrolled as an electrical engineering and computer science major.

Getting to work on the frame buffer convinced Schmandt that this had been a good decision. The frame buffer was one of the first computer graphics systems that didn’t flash when the image on the screen got more complex—a feat it pulled off through buffering, or storing, the entire screen in dedicated memory. With 400 rows of 512 pixels each, the frame buffer required more than 200,000 bytes of random-access memory, which was fantastically expensive at the time. So Schmandt had to write a program to run the Model 85 that would fit in the limited memory it had available.
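The memory figure can be checked with a quick back-of-the-envelope calculation. The sketch below assumes one byte (8 bits) per pixel, which the article does not state; the function name and the `bits_per_pixel` parameter are illustrative, not part of the Model 85.

```python
def frame_buffer_bytes(rows: int, cols: int, bits_per_pixel: int = 8) -> int:
    """Bytes needed to hold every pixel of one frame in dedicated memory."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


# 400 rows of 512 pixels, assuming 8 bits per pixel (an assumption)
size = frame_buffer_bytes(400, 512)
print(size)  # 204800
```

At one byte per pixel the total is 204,800 bytes, consistent with the "more than 200,000 bytes" cited above.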

This was “really geeky stuff,” even for the AMG, Schmandt recalls. To rib him, one of his labmates eventually hacked the lab’s synthesizer, programming it to produce a short musical fanfare and say “Geek!” when Schmandt logged in. He cheerfully accepted his new nickname.

Negroponte’s lab was creating a computing environment called the Spatial Data Management System (SDMS) that would let a person use gaze, gestures, and voice to interact with a database containing text, photos, maps, and even video. Located in the Department of Architecture and handsomely supported by the US Defense Advanced Research Projects Agency (DARPA) and corporate sponsors, the AMG was overflowing with equipment, ideas, and money. So when Schmandt completed his undergraduate degree in 1979, he stayed on to get a master’s in architecture in 1980.

“The lab had a bunch of really cool stuff because of the SDMS project and the support from DARPA,” he says. “The NEC DP-100 voice recognizer was $100K. Nobody knew what the light valve—it was the first video projection system—cost. I could sit and play with a million dollars’ worth of hardware.”

Put That There

The learning curve required to master each piece of equipment in the AMG was steep, so Negroponte assigned each device a “mother” who would be responsible for writing the software or building the hardware to help it grow into something useful. For his master’s thesis, Schmandt adopted a little box that, when strapped to your wrist, would tell the lab’s interactive computer where your wrist was and where it was pointing. Known as a six-degree-of-freedom device, it had been developed to mount on the helmets of helicopter pilots, who used such devices in simulators for training and over the battlefield for targeting; today low-cost versions are in many virtual-reality headsets.

Eric Hulteen ’80, SM ’82, was given the task of developing software for the NEC voice recognizer. Working together, Schmandt and Hulteen created Put That There, a drawing system controlled by voice and gestures that they first demoed in 1979.

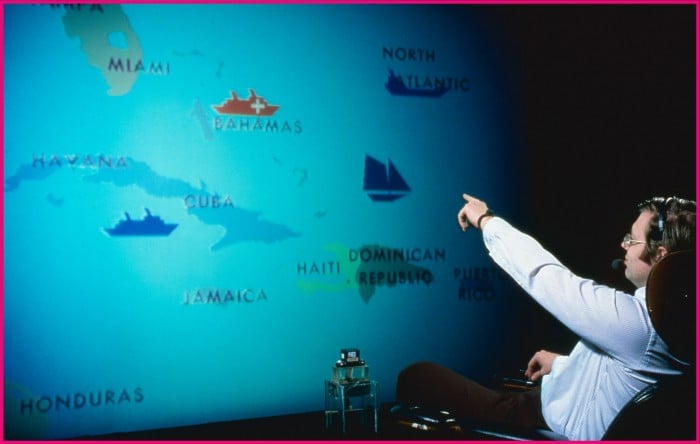

To run Put That There, you’d sit in a leather Eames chair in the lab’s soundproof “media room” and point your hand at the room’s wall-sized rear-projection screen, which was illuminated by the light valve, a projector on the other side of the wall. With the sensor and the microphones set up, you could say “Put a yellow circle there,” and the computer would obey. If you didn’t specify where the circle should go, the computer would ask “Where?” and wait for you to point and say “There!” Schmandt says it was one of the first two conversational systems ever written.

In 1980, Schmandt and Hulteen gave Put That There an upgrade: they turned the background into a map of the Caribbean and taught the computer to respond to commands like “Create a green freighter there” and “Move the cruise ship north of the Dominican Republic.” This made the program even more popular with the lab’s military sponsors, who could sit in the Eames chair and play out fantasies like surrounding Cuba with battleships and frigates.

“Jerry Wiesner was president of MIT, and his office was one floor below us,” Negroponte recalled at Schmandt’s retirement dinner. “When he went out to lunch, he would bring his friends and guests and say, ‘Oh, let’s stop by and see the Architecture Machine.’ … I don’t know how many people sat in that chair, but there were lots of them.”

Schmandt had no interest in getting a PhD. (“I didn’t want to spend two years working on a project,” he says.) But the research kept him at MIT. He joined AMG’s staff and before long had started a research group focused on developing ways for people to interact with computers using speech. The goal was to make computer technology available outside an office environment.

Back Seat Driver

In 1985 AMG morphed into the Media Lab. As a founding member, Schmandt has had many students over the years. But a project with one of the first, Jim Davis ’77, PhD ’89, would prove one of the most far-reaching. Davis had also had a UROP at the AMG in the 1970s, and he worked at a few companies before returning to the Media Lab in 1985. During a stint at the Cambridge supercomputer startup Thinking Machines the summer after starting his PhD, he developed a program called Direction Assistance that could take a detailed street map of a city, a starting point, and a destination, and provide turn-by-turn instructions in English for an efficient route. So the logical thing for Davis to do at the lab was to make Direction Assistance run in a car and deliver directions using a synthetic voice.

“At that time, GPS was only available to the military,” says Davis; civilians had access to a version with degraded precision. NEC, a lab sponsor, had developed an in-car navigation system that used dead reckoning instead of GPS: it noted the distance traveled and fit the car’s movements to a computerized map.
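The idea behind dead reckoning with map fitting can be sketched in a few lines. This is not NEC's algorithm, which the article does not describe. It is a minimal illustration that assumes the car also reports a compass heading (the article mentions only distance traveled) and that roads are straight line segments. All function names are hypothetical.

```python
import math


def advance(x, y, heading_deg, distance):
    """Dead-reckoning step: move `distance` along a compass heading
    (0 = north, 90 = east) from the last known position."""
    rad = math.radians(heading_deg)
    return x + distance * math.sin(rad), y + distance * math.cos(rad)


def snap_to_segment(p, a, b):
    """Return the point on road segment a-b that is closest to p."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return a  # degenerate segment: both ends coincide
    # Project p onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)


def snap_to_map(p, segments):
    """Fit an estimated position to the nearest road on the map."""
    candidates = (snap_to_segment(p, a, b) for a, b in segments)
    return min(candidates, key=lambda q: math.dist(p, q))
```

Because each step adds a little error, the raw estimate drifts over time; snapping it back onto a known road is one simple way to keep that drift in check.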

“It only worked with the Acura Legend, a high-end car,” Davis says. “So to do the research, the Media Lab needed an Acura Legend!” That was quite a step up for them: Schmandt was driving a Datsun 210 station wagon so he could transport his skis, and Davis didn’t even have a car.

NEC installed the equipment, which displayed a map with a dot showing the car’s location on a screen built into the center of the dashboard. “That’s horrible,” Schmandt explains. “Because if anything moves in your peripheral visual field, you have a very strong reflex to look at it—it might be a wild animal about to pounce on you!” Schmandt worried that a moving screen would distract drivers from keeping their eyes on the road. “It was seeing [the NEC map] that got me convinced that this is where speech was going to make it,” he says. “And we were right—just 30 years ahead of time.”

The system that Schmandt and Davis built sent the car’s position (as determined by the NEC map) by cell phone to a program running on a powerful computer at the Media Lab. The program calculated the next set of street directions required, taking into account the driver’s position, the speed of the car, and how long it would take to speak the computed utterance. These directions were then fed into an early speech synthesizer called a DECtalk and sent back to the car over a second cell phone. Spotty reliability of cellular coverage caused a lot of problems, so the second version of the system moved the computer directly into the trunk. Schmandt and Davis called the system Back Seat Driver.
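The timing problem described here, deciding when to begin speaking so an instruction finishes before the turn, can be illustrated with a small sketch. It is not the Back Seat Driver code. The speaking rate, the reaction-time margin, and the function names are all assumptions made for illustration.

```python
def estimate_utterance_seconds(text, words_per_minute=150.0):
    """Rough time to speak `text`, assuming a fixed speaking rate."""
    return len(text.split()) / words_per_minute * 60.0


def should_start_speaking(distance_to_turn_m, speed_mps, utterance_s, reaction_s=2.0):
    """True once the car is close enough that the instruction, plus a
    margin for the driver to react, just fits before the turn."""
    lead_distance_m = speed_mps * (utterance_s + reaction_s)
    return distance_to_turn_m <= lead_distance_m


instruction = "Turn left at the next light"
duration = estimate_utterance_seconds(instruction)

# At 15 m/s, check whether it is time to speak at two distances from the turn.
print(should_start_speaking(200.0, 15.0, duration))  # False
print(should_start_speaking(60.0, 15.0, duration))   # True
```

The faster the car or the longer the sentence, the earlier the system has to start talking, which is why both speed and utterance length enter the calculation.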

“I’m really pleased that we did Back Seat Driver,” Schmandt says. “It was a sweet application of voice.” The patent he and Davis filed on it, which he says was liberally cribbed from Davis’s dissertation, had been cited by 523 other patents as of February 2019, according to the US Patent and Trademark Office. “For some period of time it was the most highly cited patent in MIT’s portfolio,” he says. Yet they never made any money on it. All the patents that cited theirs go to great lengths to explain how they are different, he says, adding that this is how the patent game is played.

“You don’t always know where great ideas are going to come from,” Schmandt says, adding that he learned over the years to listen to the students. “My job is to help them hone their ideas—figure out what part of the idea is the good part. Where is the real novelty? How can you build this, how can you explain this?”

Cindy Hsin-Liu Kao, PhD ’18, says Schmandt’s encouragement was crucial to her research, which involved user interfaces that attach to the body with skin-friendly materials. “Thank you for always believing in me,” she said at his retirement dinner, which she attended virtually, using a handheld computer with built-in speech recognition, from the top of St. Peter’s Basilica in Rome. “When I was thinking to go in a direction that was more conventional or to explore something really weird, you were always saying to go do something weird.” Today, Kao is an assistant professor of design and environmental analysis at Cornell University. And her wireless video feed at Schmandt’s dinner surprised no one in attendance: today everybody does indeed have a frame buffer in their pocket.
