How to mod a smartphone camera so it shoots a million frames per second

Back in the 1930s, a young electrical engineer named Harold Edgerton began experimenting with electronic flash photography at MIT. He famously used it to freeze the action of everyday objects such as a stream of water, a bursting balloon, and a bullet hitting an apple.

Edgerton went on to pioneer a wide range of single and multiflash techniques (as well as many other imaging techniques). Many of these are now available as standard on popular cameras and smartphones.

Since then, shutter speeds have edged higher. Some iPhones can snap single images with a shutter speed of 1/10,000th of a second. Specialist cameras can record images at the rate of 40,000 per second. But this kind of gear is complex and expensive, so these techniques are inaccessible for most people.

Today, that looks set to change thanks to the work of Sam Dillavou at Harvard University and a couple of colleagues, who have developed a technique that allows ordinary electronic cameras and smartphones to record images at the breathtaking rate of millions of frames per second.

First, some background. Modern cameras use electronic pixels to record the light that hits them. Each pixel registers the intensity on a fixed number of levels, which is set by the pixel's bit depth. Take a scale of 16 levels: during an exposure, each pixel can record black, white, or any of 14 shades of gray in between.
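To make the arithmetic concrete, here is a small Python sketch relating bit depth to the number of intensity levels. The function names are illustrative, and the 4-bit depth is an assumption: it is simply the depth that yields the 16 levels used in this explanation.

```python
def intensity_levels(bit_depth: int) -> int:
    """Number of distinct intensity values a pixel of this bit depth can store."""
    return 2 ** bit_depth


def intermediate_grays(bit_depth: int) -> int:
    """Levels that lie strictly between pure black and pure white."""
    return intensity_levels(bit_depth) - 2


if __name__ == "__main__":
    # A 4-bit pixel (assumed here) gives 16 levels, 14 of them gray.
    print(intensity_levels(4), intermediate_grays(4))
```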

But for many types of imaging this level of detail is unnecessary—all that’s needed is a simple black or white image. In that case, the extra gray detail is redundant.

That gave Dillavou and colleagues an idea for teasing single images apart into multiple shorter exposures.

Here’s how. Imagine a white wall covered by a black curtain. Now open the curtain from left to right to reveal the wall, and record the movement in a single exposure.

During the exposure, the pixels on the left-hand side of the image first record the black curtain but then the white wall as the curtain moves across the frame. This averages out to a light gray.

By contrast, pixels recording the right-hand side of the image see the black curtain for most of the exposure. Only at the end of the exposure is the white wall revealed. In that case, the average is a dark gray.

Similarly, the pixels in the middle of the image will record a mid-gray. And the final image appears as a graduated gray, which represents the movement of the curtain.
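The curtain example can be simulated in a few lines of Python. This is only a toy model, assuming a single row of pixels and a curtain edge that moves at constant speed; the function name and parameters are made up for illustration.

```python
def expose_curtain(num_pixels: int, num_steps: int) -> list[float]:
    """Time-averaged brightness of each pixel (0.0 = black, 1.0 = white).

    The curtain starts closed and opens from left to right at constant
    speed over `num_steps` equal time slices of one exposure.
    """
    white_time = [0] * num_pixels
    for step in range(num_steps):
        # Position of the curtain edge during this time slice.
        edge = (step + 1) * num_pixels / num_steps
        for x in range(num_pixels):
            if x < edge:  # pixel is left of the edge, so it sees the wall
                white_time[x] += 1
    return [t / num_steps for t in white_time]


if __name__ == "__main__":
    row = expose_curtain(num_pixels=8, num_steps=8)
    print(row)  # brightest on the left, darkest on the right
```

Pixels on the left spend most of the exposure looking at the white wall, so they come out light; pixels on the right come out dark, giving the graduated gray described above.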

The key idea of Dillavou and colleagues is that this single image can be thought of as many black-and-white snapshots of the moving curtain superimposed on each other. Indeed, the number of snapshots that can be teased out of a single image depends on the number of intensity levels the pixels can record.

Armed with this insight, they developed a mathematical technique to extract these snapshots from a single image, an approach they call the virtual frame technique. With 16 intensity levels, it can create 16 images of the curtain moving across the wall.
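One way to picture the extraction is as simple thresholding: a brighter pixel must have turned white earlier in the exposure. The Python sketch below illustrates that idea only. It is not the procedure from the paper, and the function name, the integer gray scale, and the threshold convention are all assumptions.

```python
def virtual_frames(image: list[int], levels: int = 16) -> list[list[int]]:
    """Split one gray exposure into `levels` black-and-white frames.

    Assumes every pixel went from black to white at most once, so a gray
    value v (0 .. levels - 1) means the pixel was white for roughly
    v / (levels - 1) of the exposure.
    """
    frames = []
    for k in range(levels):
        # Frame k marks a pixel white (1) if it switched before time
        # k / (levels - 1); brighter pixels switched earlier.
        threshold = levels - 1 - k
        frames.append([1 if v > threshold else 0 for v in image])
    return frames


if __name__ == "__main__":
    gray_row = [15, 12, 8, 4, 0]  # hypothetical curtain image, left to right
    for frame in virtual_frames(gray_row):
        print(frame)
```

Under this convention the first frame is all black, and a pixel that recorded level 0 stays black in every frame because it never saw the wall.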

This technique has the potential to turn almost any electronic camera into a high-speed marvel. The team has tested it by comparing the virtual frames with those taken by a high-speed camera recording at 40,000 frames per second.

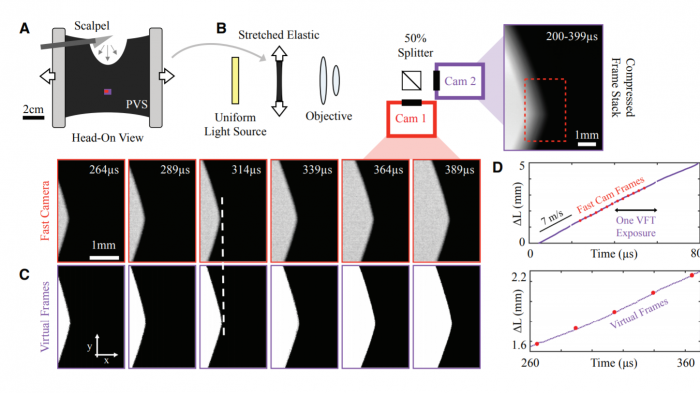

They placed a stretched rubber sheet in front of a beam splitter that sent the light to both cameras, so both had the same view of the sheet. The team set up the lighting so that the rubber sheet appeared black while the background appeared white. They used a scalpel to nick the rubber sheet and recorded the way it split with both cameras as the fracture raced across the sheet.

Finally, the team used the virtual frame technique to extract high-frame-rate images and compare them with those from the high-speed camera shooting at 40,000 frames per second. A video of this comparison is downloadable here.

The results suggest that the virtual frame technique works well. First, both sets of images show a similar fracturing process, which supports the approach. But it also has a number of significant advantages. It records 1.3 megapixels in each image, compared with the high-speed camera’s 60 kilopixels. This dramatically increases the field of view and therefore the distance over which the fracture can be recorded.

The virtual frame rate is much faster, too. “The effective frame rate achieved using [the virtual framing technique] is 1MHz,” say Dillavou and co.

They go on to study how applying the new technique to a variety of different cameras increases their frame rates. The numbers are eye-opening. “As an example, the Nikon D850 records virtual frames at 16MHz while maintaining a resolution over 50Mpx,” they say. Even an iPhone X is capable of recording at up to a million frames per second using this technique.
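As a rough guide to where such rates come from: if one exposure is split into a number of virtual frames, the frames are spaced by the exposure time divided by that number. The Python sketch below computes this idealized figure. It ignores noise and everything else that limits a real sensor, so it does not reproduce the numbers quoted from the paper; the function name and inputs are illustrative assumptions.

```python
def virtual_frame_rate(levels: int, shutter_denominator: int) -> int:
    """Idealized virtual frames per second.

    `levels` virtual frames are assumed to be spread evenly over one
    exposure lasting 1 / shutter_denominator seconds.
    """
    return levels * shutter_denominator


if __name__ == "__main__":
    # 16 levels over a 1/10,000 s exposure (figures taken from the text above).
    print(virtual_frame_rate(16, 10_000))
```

The real figure for a given camera depends on its actual bit depth, its fastest shutter speed, and how many levels can be told apart reliably.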

This should be easy to put into practice, since all the processing is done after the image is taken. “This enhanced virtual frame rate does not require any change in the operation of the camera,” say the team.

However, there are some important limits to the types of phenomena that can be recorded in this way. First, the object of interest must be black against a white background (or vice versa). Any grays in the scene will ruin the results.

And the phenomenon itself must be what Dillavou and co call monotonic. In other words, any change during an exposure must proceed from black to white (or vice versa) but not back again. In the curtain example, the virtual frame technique works if the curtain moves in one direction but not if it moves back and forth.
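A short Python sketch shows why monotonic change matters. It treats one pixel's history during an exposure as a list of 0s (black) and 1s (white); the helper names are hypothetical and the model is deliberately simplified.

```python
def is_monotonic(samples: list[int]) -> bool:
    """True if the pixel only ever gets brighter, or only ever gets darker."""
    pairs = list(zip(samples, samples[1:]))
    rising = all(a <= b for a, b in pairs)
    falling = all(a >= b for a, b in pairs)
    return rising or falling


def recorded_value(samples: list[int]) -> float:
    """What the camera stores: the average brightness over the exposure."""
    return sum(samples) / len(samples)


if __name__ == "__main__":
    one_way = [0, 0, 1, 1]         # curtain opens once
    back_and_forth = [0, 1, 0, 1]  # curtain opens, closes, opens again
    print(is_monotonic(one_way), recorded_value(one_way))
    print(is_monotonic(back_and_forth), recorded_value(back_and_forth))
```

Both histories leave the same gray value in the image, so the camera alone cannot tell them apart. Only when the change is known to be one-way does the gray value pin down when the pixel switched.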

Nevertheless, the virtual frame technique is a significant advance for photography. It has the potential to turn the smartphone camera in your back pocket into a high-speed recorder, the likes of which would have impressed even Doc Edgerton.

Ref: arxiv.org/abs/1811.02936 : Virtual Frame Technique: Ultrafast Imaging with Any Camera
