Why humans learn faster than AI—for now

In 2013, DeepMind Technologies, then a little-known company, published a groundbreaking paper showing how a neural network could learn to play 1980s video games the way humans do—by looking at the screen. These networks then went on to thrash the best human players.

A few months later, Google bought the company for $400 million. DeepMind has since gone on to apply deep learning in a range of situations, most famously to outperform humans in the ancient game of Go.

But while this work is impressive, it highlights one of the significant limitations of deep learning. Compared with humans, machines using this technology take a huge amount of time to learn. What is it about human learning that allows us to perform so well with relatively little experience?

Today we get an answer of sorts thanks to the work of Rachit Dubey and colleagues at the University of California, Berkeley. They have studied the way humans interact with video games to find out what kind of prior knowledge we rely on to make sense of them.

It turns out that humans use a wealth of background knowledge whenever we take on a new game. And this makes the games significantly easier to play. But faced with games that make no use of this knowledge, humans flounder, whereas machines plod along in exactly the same way.

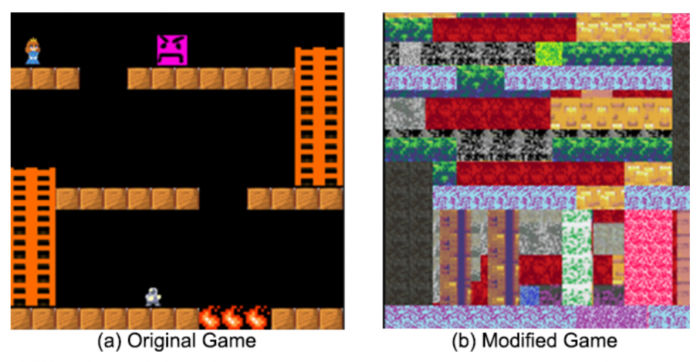

Take a look at the computer game shown above on the left (the original game). This game is based on a classic called Montezuma’s Revenge, originally released for the Atari 8-bit computer in 1984.

There is no manual and no instructions; you aren’t even told which “sprite” you control. And you get feedback only if you successfully finish the game.

Would you be able to do so? How long would it take? You can try it at this website (along with the other games mentioned in the paper).

In all likelihood, the game will take you about a minute, and in the process you’ll probably make about 3,000 keyboard actions. That’s what Dubey and co found when they gave the game to 40 workers from Amazon’s crowdsourcing site Mechanical Turk, who were offered $1 to finish it.

“This is not overly surprising as one could easily guess that the game’s goal is to move the robot sprite towards the princess by stepping on the brick-like objects and using ladders to reach the higher platforms while avoiding the angry pink and the fire objects,” the researchers say.

By contrast, the game is hard for machines: many standard deep-learning algorithms couldn’t solve it at all, because there is no way for an algorithm to evaluate progress inside the game when feedback comes only from finishing.
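To see why end-only feedback is so unhelpful to a learner, consider a toy sketch. It is not the researchers' game or any algorithm from the paper: the corridor, the random-walk agent, and names such as `run_episode` are all assumptions made for illustration. The agent gets a reward of 1 only on reaching the far end, so every unfinished attempt looks identical from the reward signal alone.

```python
import random

def run_episode(length=10, max_steps=50, seed=None):
    """Random walk along a corridor; the only reward comes at the far end."""
    rng = random.Random(seed)
    position = 0
    rewards = []
    for _ in range(max_steps):
        # Step left or right at random, never moving below cell 0.
        position = max(0, position + rng.choice([-1, 1]))
        finished = position >= length
        # Sparse feedback: zero on every step except the finishing one.
        rewards.append(1.0 if finished else 0.0)
        if finished:
            break
    return position, rewards

for seed in range(5):
    final_position, rewards = run_episode(seed=seed)
    # An attempt that got most of the way scores the same as one that
    # never left the start, unless it actually reached the goal.
    print(seed, final_position, sum(rewards))
```

An attempt that stalls one cell short of the goal earns the same total as one that never moves, which is the sense in which the algorithm cannot evaluate progress.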

The best machine performer was a curiosity-based reinforcement-learning algorithm that took some four million keyboard actions to finish the game. That’s equivalent to about 37 hours of continuous play.
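The size of that gap is easy to work out from the figures quoted here. The snippet below is back-of-the-envelope arithmetic on the article's rounded numbers, not a calculation from the paper, and the variable names are illustrative.

```python
# Rounded figures quoted in the article.
human_actions = 3_000         # keyboard actions for a typical human player
human_minutes = 1             # roughly a minute of play
machine_actions = 4_000_000   # best curiosity-based algorithm
machine_hours = 37            # equivalent continuous play

# How many times more actions and wall-clock time the machine needed.
action_ratio = machine_actions / human_actions
time_ratio = (machine_hours * 60) / human_minutes

print(f"actions: about {action_ratio:.0f} times as many")
print(f"time:    about {time_ratio:.0f} times as long")
```

On these rounded figures the machine needs more than a thousand times as many actions as a person does.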

So what makes humans so much better? It turns out that we do not approach this game with a blank slate. A human will see that he or she has control over the robot, and that the robot should avoid fire, climb ladders, jump over gaps, and avoid a frowning enemy to reach the princess. All this is thanks to prior knowledge that certain objects are good while others (with frowns or flames) are bad, that platforms support objects while ladders can be climbed, that things that look the same behave in the same way, that gravity pulls objects down, and even what “objects” are: things that are separate from other things and have different properties.

By contrast, a machine knows none of this.

So Dubey and co refashioned the game to make this prior information irrelevant and then measured how long it took human Turkers to finish. The team took any increase in completion time as a proxy for the importance of the information that had been removed.

“We created different versions of the video game by re-rendering various entities such as ladders, enemies, keys, platforms etc. using alternate textures,” they explain. They chose these textures to mask various forms of prior knowledge, and they changed physical properties of the game, such as the effect of gravity, and the way the agent interacts with its environment. In every version, the underlying dynamics of the game were the same.

The results make for fascinating reading. “We find that removal of some prior knowledge causes a drastic degradation in the speed with which human players solve the game,” say Dubey and co. Indeed, the time it takes for humans to solve the game increases from a minute to over 20 minutes as different kinds of prior information are removed.

By contrast, removing this information usually makes no difference to the rate at which the machine algorithm learns.

The team is even able to rank different kinds of information in importance by the increase in time that removing it causes. Removing object semantics, such as a frowning face or fire symbol, requires human players to spend longer before finishing. But masking the concept of “object” makes things so much harder that many Turkers simply refused to play. “We had to increase the pay to $2.25 to encourage participants not to quit,” say Dubey and co.
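The ranking idea can be sketched in a few lines. This is a minimal illustration, not the team's analysis: the function `rank_by_slowdown` is hypothetical, and the completion times are made-up placeholders with neutral labels, not results from the paper.

```python
def rank_by_slowdown(baseline_minutes, variant_minutes):
    """Order game variants by how much longer they take than the original."""
    slowdowns = {
        name: minutes / baseline_minutes
        for name, minutes in variant_minutes.items()
    }
    # Largest slowdown first: the masked knowledge mattered most there.
    return sorted(slowdowns.items(), key=lambda item: item[1], reverse=True)

# Placeholder values only, chosen to show the mechanics.
placeholder_times = {"variant_a": 2.0, "variant_b": 20.0, "variant_c": 5.0}
ranking = rank_by_slowdown(1.0, placeholder_times)

for name, slowdown in ranking:
    print(f"{name}: {slowdown:.1f} times slower than the original")
```

The variant at the top of the list is the one whose masked knowledge players leaned on most heavily.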

This ranking has an interesting link to the way humans learn. Psychologists have found that at two months old, babies possess a primitive notion of objects that they expect to move as connected wholes. But at this age, babies do not recognize object categories.

By three to five months of age, infants learn to recognize object categories; by 18 to 24 months, they learn to recognize individual objects. At about this time, they also learn the properties of objects (object affordances, as psychologists call them), and thus they learn the difference between a walkable step along flat ground and an unwalkable step off a cliff.

It turns out that Dubey and co’s experiments rank this kind of learned information in exactly the same order that babies learn it. “It is quite interesting to note that the order in which infants increase their knowledge matches the importance of different object priors,” they say.

“Our work takes first steps toward quantifying the importance of various priors that humans employ in solving video games and in understanding how prior knowledge makes humans good at such complex tasks,” they add.

That suggests an interesting way forward for computer scientists working on machine intelligence—to program their charges with the same basic knowledge that humans pick up at an early age. In this way, machines should be able to catch up with humans in their speed of learning, and maybe even outperform them.

We look forward to seeing the results.

Ref: arxiv.org/abs/1802.10217: Investigating Human Priors for Playing Video Games
