Bias already exists in search engine results, and it’s only going to get worse

The internet might seem like a level playing field, but it isn’t. Safiya Umoja Noble came face to face with that fact one day when she used Google’s search engine to look for subjects her nieces might find interesting. She entered the term “black girls” and got back pages dominated by pornography.

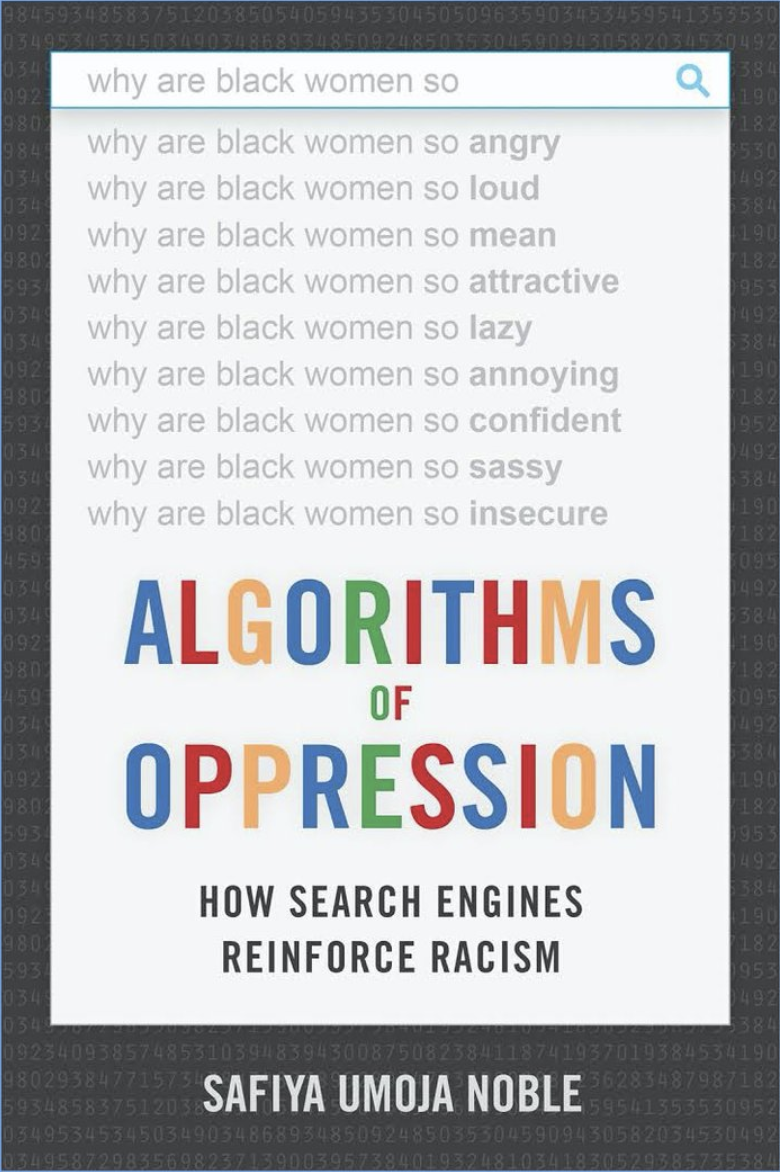

Noble, a USC Annenberg communications professor, was horrified but not surprised. For years she has been arguing that the values of the web reflect its builders—mostly white, Western men—and do not represent minorities and women. Her latest book, Algorithms of Oppression, details research she started after that fateful Google search, and it explores the hidden structures that shape how we get information through the internet.

The book, out this month, argues that search engine algorithms aren’t as neutral as Google would like you to think. Algorithms promote some results above others, and even a seemingly neutral piece of code can reflect society’s biases. What’s more, without any insight into how the algorithms work or what the broader context is, searches can unfairly shape the discussion of a topic like black girls.

Noble spoke to MIT Technology Review about the problems inherent in the current system, how Google could do better, and how artificial intelligence might make things worse.

What do people get wrong about how search engines work?

If we’re looking for the closest Starbucks, a specific quote, or something very narrow that is easily understood, it works fine. But when we start getting into more complicated concepts around identity, around knowledge, this is where search engines start to fail us. This wouldn’t be so much of a problem except that the public really relies upon search engines to give them what they think will be the truth, or something vetted, or something that’s credible. This is where, I think, we have the greatest misunderstanding in the public about what search engines are.

To address bias, Google normally suppresses certain results. Is there a better approach?

We could think about pulling back on such an ambitious project of organizing all the world’s knowledge, or we could reframe and say, “This is a technology that is imperfect. It is manipulatable. We’re going to show you how it’s being manipulated. We’re going to make those kinds of dimensions of our product more transparent so that you know the deeply subjective nature of the output.” Instead, the position for many companies—not just Google—is that [they are] providing something that you can trust, and that you can count on, and this is where it becomes quite difficult.

How might machine learning perpetuate some of the racism and sexism you write about?

I've been arguing that artificial intelligence, or automated decision-making systems, will become a human rights issue this century. I strongly believe that, because machine-learning algorithms and projects are using data that is already biased, incomplete, flawed, and [we are] teaching machines how to make decisions based on that information. We know [that’s] going to lead to a variety of disparate outcomes. Let me just add that AI will be harder and harder to intervene upon because it will become less clear what data has been used to inform the making of that AI, or the making of those systems. There are many different kinds of data sets, for example, that are not standardized, that are coalescing to make decisions.

Since you first searched for “black girls” in 2010, have you seen things get better or worse?

Since I started writing about and speaking publicly about black girls in particular being associated with pornography, things have changed. Now the pornography and hypersexualized content is not on the first page, so I think that was a quiet improvement that didn’t come about with a lot of fanfare. But other communities, like Latina and Asian girls, are still highly sexualized in search results.
