A Startup Uses Quantum Computing to Boost Machine Learning

A company in California just proved that an exotic and potentially game-changing kind of computer can be used to perform a common form of machine learning.

The feat raises hopes that quantum computers, which exploit the logic-defying principles of quantum physics to perform certain types of calculations at ridiculous speeds, could have a big impact on the hottest area of the tech industry: artificial intelligence.

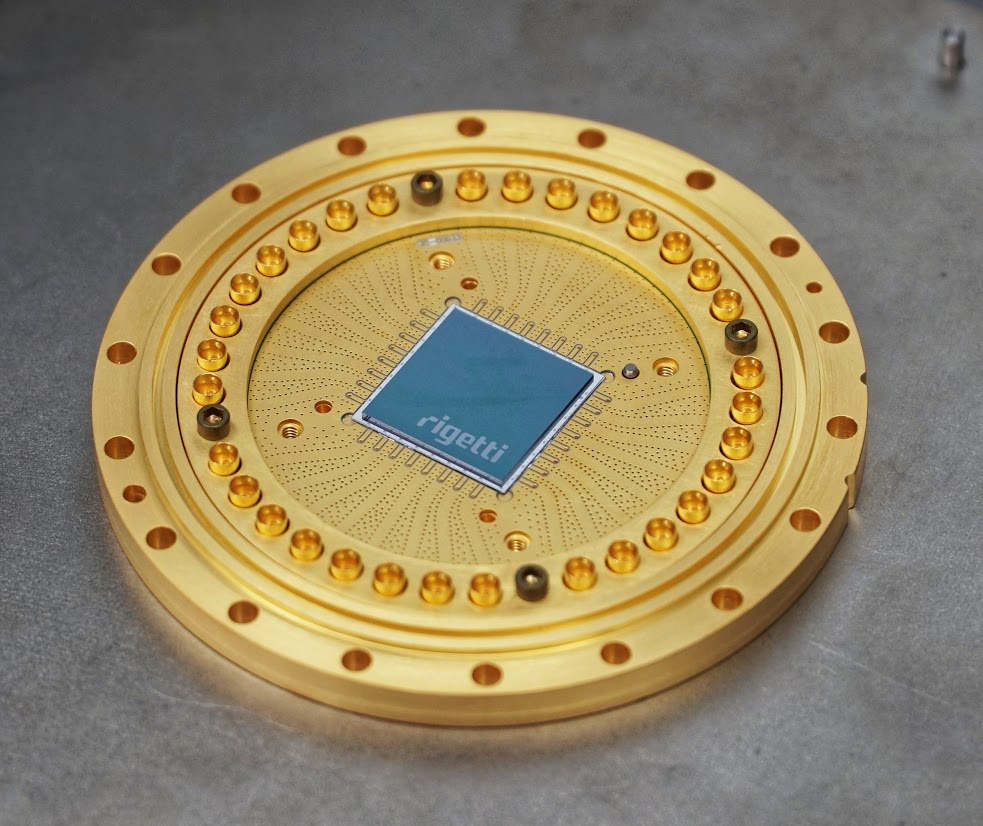

Researchers at Rigetti Computing, a company based in Berkeley, California, used one of its prototype quantum chips—a superconducting device housed within an elaborate super-chilled setup—to run what’s known as a clustering algorithm. Clustering is a machine-learning technique used to organize data into similar groups. Rigetti is also making the new quantum computer—which can handle 19 quantum bits, or qubits—available through its cloud computing platform, called Forest, today.
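The article does not describe how Rigetti's quantum clustering algorithm works. For readers new to clustering itself, here is a minimal sketch of the general idea using k-means, a standard classical clustering method. It is not Rigetti's algorithm. The function name, the toy data, and the farthest-first starting rule are illustrative assumptions.

```python
import math

def kmeans(points, k, iterations=20):
    """Group 2-D points into k clusters with classical k-means (Lloyd's algorithm)."""
    # Farthest-first initialization: start at the first point, then repeatedly
    # add the point that lies farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return labels, centroids

# Hypothetical toy data: one group near (0, 0) and one near (10, 10).
data = [(0.0, 0.1), (0.2, -0.1), (-0.1, 0.0), (10.0, 10.2), (9.8, 10.0), (10.1, 9.9)]
labels, centroids = kmeans(data, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm alternates between assigning points to their nearest group center and moving each center to the middle of its group, stopping here after a fixed number of rounds.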

The demonstration does not, however, mean quantum computers are poised to revolutionize AI. Quantum computers are so exotic that no one quite knows what the killer apps might be. Rigetti’s algorithm, for instance, isn’t of any practical use, and it isn’t entirely clear how useful it would be to perform clustering tasks on a quantum machine.

Still, Will Zeng, head of software and applications at Rigetti, argues that the work represents a key step toward building a quantum machine. “This is a new path toward practical applications for quantum computers,” Zeng says. “Clustering is a really fundamental and foundational mathematical problem. No one has ever shown you can do this.”

There is currently a remarkable amount of excitement surrounding efforts to develop practical quantum computers. Big technology companies, including IBM, Google, Intel, and Microsoft, as well as a few well-funded startups are racing to build exotic machines that promise to usher in a fundamentally new form of computing.

First dreamed up by physicists almost 40 years ago, quantum computers do not handle information using binary 1s and 0s. Instead, they exploit two quantum phenomena—superposition and entanglement—to perform calculations on large quantities of data at once. The nature of quantum physics means that a computer with just 100 qubits should be capable of calculations on a mind-boggling scale.
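One way to see why qubit counts matter: writing down the general state of n qubits on a conventional computer takes 2^n complex numbers (amplitudes). The sketch below, in plain Python, builds a two-qubit entangled "Bell" state to show superposition and entanglement, then counts how many amplitudes larger systems would need. The 16-bytes-per-amplitude figure is an assumption about a naive simulator, and a quantum computer cannot simply read all of those numbers out.

```python
import math

def bell_state():
    """Return the 4 amplitudes of (|00> + |11>)/sqrt(2), a maximally entangled 2-qubit state."""
    # Amplitudes are indexed by basis state |00>, |01>, |10>, |11>.
    a = 1 / math.sqrt(2)
    return [a, 0.0, 0.0, a]

def amplitudes_needed(n_qubits):
    """A general n-qubit state needs 2**n complex amplitudes to write down classically."""
    return 2 ** n_qubits

state = bell_state()
# Measurement probabilities are squared amplitude magnitudes:
# 50% |00>, 50% |11>, never |01> or |10>, so the two qubits always agree.
probs = [abs(x) ** 2 for x in state]
print([round(p, 3) for p in probs])  # [0.5, 0.0, 0.0, 0.5]

for n in (2, 19, 50, 100):
    count = amplitudes_needed(n)
    # Assumes 16 bytes per amplitude (two 64-bit floats), as in a naive state-vector simulator.
    print(n, count, f"{count * 16:.3e} bytes")
```

At 100 qubits the count is 2^100, roughly 1.27 x 10^30 amplitudes, far beyond the memory of any conventional machine.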

Rigetti is something of an underdog in the race. IBM recently announced that it has built a quantum computer with 50 qubits, and Google is widely rumored to have a device of similar scale. Still, Rigetti has plenty of boosters. The company has raised around $70 million from investors including Andreessen Horowitz, one of Silicon Valley’s most prominent firms.

Having more qubits doesn’t necessarily equate to superiority, though. Maintaining quantum states and manipulating qubits reliably represent formidable challenges.

Like some others, Rigetti uses a hybrid approach, meaning its quantum machine works in concert with a conventional one to make programming more straightforward. Zeng says the company’s systems are also more modular than its rivals’, which may offer a significant edge when it comes to scaling machines up further.
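The article does not say exactly how Rigetti divides work between its quantum and conventional hardware. One widely used pattern for hybrid programs is a variational loop: the quantum processor runs a short circuit with tunable parameters, and a conventional computer adjusts those parameters based on the results. The sketch below simulates a one-qubit "quantum" step in ordinary Python. The function names, starting angle, and learning rate are hypothetical, and none of this is Rigetti's Forest API.

```python
import math

def quantum_expectation(theta):
    """Stand-in for the quantum step: prepare RY(theta)|0> and return the expectation of Z.

    Simulated classically here. The state is cos(theta/2)|0> + sin(theta/2)|1>,
    so <Z> = cos^2(theta/2) - sin^2(theta/2) = cos(theta). A real device would
    estimate this value from many repeated measurements instead.
    """
    p0 = math.cos(theta / 2) ** 2
    p1 = math.sin(theta / 2) ** 2
    return p0 - p1

def hybrid_minimize(theta=0.1, learning_rate=0.4, steps=100):
    """Classical loop that tunes the circuit's parameter to minimize <Z>."""
    for _ in range(steps):
        # Parameter-shift rule: the gradient comes from two extra circuit runs.
        grad = (quantum_expectation(theta + math.pi / 2)
                - quantum_expectation(theta - math.pi / 2)) / 2
        theta -= learning_rate * grad  # ordinary gradient-descent update on the classical side
    return theta, quantum_expectation(theta)

theta, energy = hybrid_minimize()
print(round(theta, 4), round(energy, 4))  # converges toward theta = pi, <Z> = -1
```

The appeal of this split is that the quantum hardware only has to run short circuits, while the bookkeeping and optimization stay on familiar conventional machines.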

Quantum computing has tremendous potential, in theory. There is good evidence that quantum machines can be used to solve cryptographic challenges and to simulate new materials. And there is hope that algorithms such as Rigetti's will eventually transform the world of machine learning and AI.

Quantum computers are just now reaching a scale where they can perform work that would be very difficult, if not impossible, to run on even the most powerful conventional supercomputer. The race to demonstrate this threshold with a functioning machine, sometimes referred to as “quantum supremacy,” has become symbolic of the current hype. Physicists agree that it will be several more years before quantum computers, and the algorithms that run on them, show their worth.

Christopher Monroe, an experimental physicist at the University of Maryland and chief scientist at another quantum computing startup, IonQ, says it is too early to suggest quantum computing will change machine learning. “We don’t really understand how and why classical machine learning works, so it seems that applying it to quantum might just further obfuscate an already obfuscated field,” he says.

Monroe, however, raises an interesting reverse possibility. He suggests that machine learning might play a key role in making quantum computers more reliable. “The growing complexity of classical control systems for large quantum computers may need a different approach,” he points out. So he speculates that “perhaps non-quantum machine learning will be used” to manage the complex behavior inside these machines.

Scott Aaronson, who directs the Quantum Information Center at the University of Texas, says he expects quantum computing to speed up some machine-learning approaches in the future, although more work will be needed to show how valuable that is.

Both Aaronson and Monroe agree that making quantum computers accessible via the cloud, as Rigetti, IBM, and Google are all doing, will be crucial to advancing the field. Applications will likely emerge as engineers and programmers start to experiment on these systems.

Providing access to early adopters may also provide a valuable revenue stream for startups like Rigetti. IBM recently announced a range of partners for its quantum project, including JPMorgan Chase, Daimler AG, Samsung, Hitachi, and Oak Ridge National Laboratory. These companies want to see what quantum machines might be able to do in a range of applications including financial modeling, chemistry, and route optimization.

Aaronson wonders whether the growing hype might eventually hold back real progress, although it may prove difficult to separate hype from progress. "On the other hand," he says, "it's a genuinely exciting time."
