AI Can Be Made Legally Accountable for Its Decisions

Artificial intelligence is set to play a significantly greater role in society, and that raises the issue of accountability. If we rely on machines to make increasingly important decisions, we will need mechanisms for redress if the results turn out to be unacceptable or difficult to understand.

But making AI systems explain their decisions is not entirely straightforward. One problem is that explanations are not free; they require considerable resources both in the development of the AI system and in the way it is interrogated in practice.

Another concern is that explanations can reveal trade secrets by forcing developers to publish the AI system’s inner workings. Moreover, one advantage of these systems is that they can make sense of complex data in ways that are not accessible to humans. So making their explanations understandable to humans might require a reduction in performance.

How, then, are we to make AI accountable for its decisions without stifling innovation?

Today, we get an answer of sorts thanks to the work of Finale Doshi-Velez, Mason Kortz, and others at Harvard University in Cambridge, Massachusetts. These folks are computer scientists, cognitive scientists, and legal scholars who have together explored the legal issues that AI systems raise, identified key problems, and suggested potential solutions. “Together, we are experts on explanation in the law, on the creation of AI systems, and on the capabilities and limitations of human reasoning,” they say.

They begin by defining “explanation.” “When we talk about an explanation for a decision, we generally mean the reasons or justifications for that particular outcome, rather than a description of the decision-making process in general,” they say.

The distinction is important. Doshi-Velez and co point out that it is possible to explain how an AI system makes decisions in the same sense that it is possible to explain how gravity works or how to bake a cake. This is done by laying out the rules the system follows, without referring to any specific falling object or cake.

It is this kind of general explanation that worries industrialists, because laying out the rules could expose workings of their AI systems that they want to keep secret to protect their commercial advantage.

But this kind of transparency is not necessary in many cases. Explaining why an object fell in an industrial accident, for example, does not normally require an explanation of gravity. Instead, explanations are usually required to answer questions like these: What were the main factors in a decision? Would changing a certain factor have changed the decision? Why did two similar-looking cases lead to different decisions?

Answering these questions does not necessarily require a detailed explanation of an AI system’s workings.
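To make the second question concrete, here is a minimal Python sketch, assuming the AI system can only be queried as a black box. The scoring rule, factor names, and threshold are hypothetical, invented purely for illustration; the point is that a counterfactual answer comes from re-running the system on an altered input, not from publishing its inner workings.

```python
from typing import Callable, Dict

# A case maps factor names to values (hypothetical factors, for illustration only).
Case = Dict[str, float]

def opaque_decision(case: Case) -> bool:
    """Hypothetical stand-in for a proprietary AI system; treated as a black box."""
    score = (0.5 * case["income"] / 10_000
             + 2.0 * case["years_employed"]
             - 3.0 * case["missed_payments"])
    return score >= 5.0  # True means "approve"

def flips_decision(decide: Callable[[Case], bool], case: Case,
                   factor: str, new_value: float) -> bool:
    """Answer 'would changing this factor have changed the decision?'
    using only queries to `decide`, never its internals."""
    altered = dict(case)          # copy so the original case is left untouched
    altered[factor] = new_value
    return decide(altered) != decide(case)

applicant = {"income": 40_000, "years_employed": 1, "missed_payments": 1}
print(opaque_decision(applicant))                                        # False
print(flips_decision(opaque_decision, applicant, "missed_payments", 0))  # False
print(flips_decision(opaque_decision, applicant, "years_employed", 3))   # True
```

Here clearing the missed payment would not have changed the outcome, but two more years of employment would have, and an explanation of that kind requires no description of how the scoring works.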

So when should explanations be given? Essentially, when the benefit outweighs the cost. “We find that there are three conditions that characterize situations in which society considers a decision-maker is obligated—morally, socially, or legally—to provide an explanation,” say Doshi-Velez and co.

The team say the decision must have an impact on a person other than the decision-maker. There must be value to knowing if the decision was made erroneously. And there must be some reason to believe that an error has occurred (or will occur) in the decision-making process.

For example, observers might suspect that a decision was influenced by some irrelevant factor, such as a surgeon refusing to perform an operation because of the phase of the moon. Or they may distrust a system if it made the same decision in two entirely different sets of circumstances. In that case, they might suspect that it has failed to take an important factor into account. Another concern arises with decisions that appear to benefit one group unfairly, as when corporate directors make decisions that benefit themselves at the expense of their shareholders.

In other words, there must be good reason to think a decision is improper before demanding an explanation. But there can also be other reasons to give explanations, such as attempting to increase trust with consumers.

So Doshi-Velez and co look at concrete legal situations in which explanations are required. They point out that reasonable minds can and do differ about whether it is morally justifiable or socially desirable to demand an explanation. “Laws on the other hand are codified, and while one might argue whether a law is correct, at least we know what the law is,” they say.

Under U.S. law, explanations are required in a wide variety of situations and in varying levels of detail. For example, explanations are required in cases of strict liability, divorce, or discrimination; for administrative decisions; and for judges and juries. But the level of detail varies hugely.

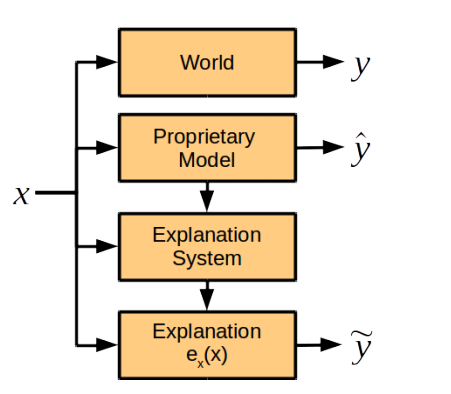

All that has important implications for AI systems. Doshi-Velez and co conclude that legally feasible explanations are possible for AI systems. This is because the explanation for a decision can be made separately from a description of its inner workings. What’s more, the team say that an explanation system should be considered distinct from the AI system.

That’s a significant result. It does not mean that satisfactory explanations will always be easy to generate. For example, how can we show that a security system that uses images of a face as input does not discriminate on the basis of gender? That is only possible using an alternate face that is similar in every way except for gender, say the team.

But explanations for the decisions AI systems make are generally possible. And that leads the team to a clear conclusion. “We recommend that for the present, AI systems can and should be held to a similar standard of explanation as humans currently are,” they say.

But our use and understanding of AI is likely to change in ways we do not yet understand (and perhaps never will). For that reason, this approach will need to be revisited. “In the future we may wish to hold an AI to a different standard,” say Doshi-Velez and co.

Quite!

Ref: arxiv.org/abs/1711.01134 : Accountability of AI Under the Law: The Role of Explanation
