Secret Ultrasonic Commands Can Control Your Smartphone, Say Researchers

Voice-controlled digital assistants are increasingly common and sophisticated. Google, Apple, Amazon, numerous car manufacturers, and others all allow their devices to be controlled by voice. And that, of course, gives malicious attackers a tempting way of targeting these devices.

Many people with voice-activated devices will have experienced anomalous activations as the device reacts to other people’s voices or interprets external noise as a command. So it’s not hard to imagine how a hacker might attempt an attack using a nearby speaker. However, such attacks are easily foiled by an alert user who can also hear the commands.

But that raises an interesting question: is it possible to activate voice-controlled assistants using inaudible commands?

Today, we get an answer thanks to the work of Guoming Zhang and Chen Yan and a few pals at Zhejiang University in China. They've developed a system called DolphinAttack that uses ultrasonic messages to activate digital assistants, such as Siri, Google Now, and the Audi voice-controlled navigation system. These messages are entirely inaudible to the human ear and represent a significant new threat, says the team.

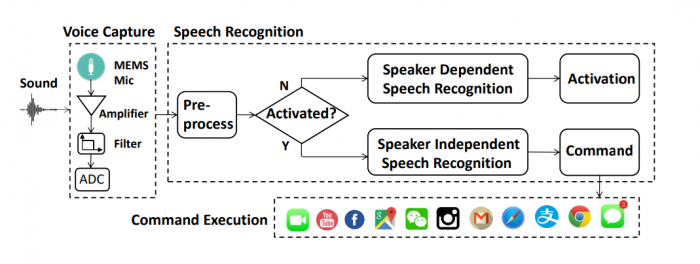

The principle behind the attack is simple. The microphones used in modern electronic devices are designed to convert audible sound into electronic signals. This is sound with a frequency lower than about 20 kHz, the generally accepted limit of human hearing.

Microphones have a deformable membrane that vibrates when struck by sound waves. This vibration changes the capacitance of an internal circuit, thereby converting the sound into electronic form.

By design, these membranes are not particularly sensitive to ultrasonic frequencies. And in any case, higher frequency signals are filtered out by the device electronics.

But Guoming and co have found a way to game these devices. The trick is to take an ultrasonic carrier wave and amplitude-modulate it with an ordinary speech signal, so that the ultrasound carries the spoken message.

It turns out that the microphone membranes can pick up such signals, even though they are inaudible to humans.
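
To see how an inaudible carrier could still deliver an audible command, here is a minimal numerical sketch in Python with NumPy. It is not the researchers' code. It assumes a single 1 kHz tone as a stand-in for speech, a 30 kHz carrier, a 192 kHz sample rate, and a toy microphone whose response includes a small quadratic term. That nonlinearity is a common textbook explanation for how such signals get demodulated, and it is an assumption here, since the mechanism is not spelled out above. All function names and constants are hypothetical.

```python
import numpy as np

FS = 192_000               # sample rate in Hz (assumed; high enough to represent the carrier)
CARRIER_HZ = 30_000        # ultrasonic carrier (assumed value above the ~20 kHz hearing limit)
TONE_HZ = 1_000            # stand-in for speech: a single audible tone
AUDIBLE_LIMIT_HZ = 20_000  # rough upper limit of human hearing


def amplitude_modulate(baseband, fs=FS, carrier_hz=CARRIER_HZ, depth=1.0):
    """Return (1 + depth * baseband) * carrier; baseband is expected in [-1, 1]."""
    t = np.arange(len(baseband)) / fs
    return (1.0 + depth * baseband) * np.cos(2 * np.pi * carrier_hz * t)


def nonlinear_microphone(signal, a1=1.0, a2=0.1):
    """Toy microphone model (assumed): a linear term plus a small quadratic term."""
    return a1 * signal + a2 * signal ** 2


def low_pass(signal, fs=FS, cutoff_hz=AUDIBLE_LIMIT_HZ):
    """Brick-wall low-pass filter via FFT, standing in for the device's filtering."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))


def band_energy(signal, lo_hz, hi_hz, fs=FS):
    """Sum of squared spectral magnitude between lo_hz and hi_hz (inclusive)."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return float(power[(freqs >= lo_hz) & (freqs <= hi_hz)].sum())


t = np.arange(FS) / FS                          # one second of samples
speech_like = np.sin(2 * np.pi * TONE_HZ * t)   # the "command"
transmitted = amplitude_modulate(speech_like)   # what the ultrasonic speaker emits
recovered = low_pass(nonlinear_microphone(transmitted))  # what the device ends up with

# Energy a human could hear in the air, versus the tone recovered inside the device.
audible_in_air = band_energy(transmitted, 20, AUDIBLE_LIMIT_HZ)
tone_after_mic = band_energy(recovered, TONE_HZ - 50, TONE_HZ + 50)

print(f"audible-band energy in transmitted signal: {audible_in_air:.3e}")
print(f"1 kHz energy after toy microphone + filter: {tone_after_mic:.3e}")
```

Under these assumptions, the transmitted signal has essentially no energy below 20 kHz, yet the quadratic term recreates the 1 kHz tone in the audible band, where the low-pass filter lets it through. Real microphones and real speech are messier, so treat the numbers as illustrative only.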

That opens a Pandora’s Box of potential mischief. Guoming and co tested the approach on devices including various Apple iPhones, an LG Nexus 5X, a Samsung Galaxy S6 edge, an Amazon Echo, and an Audi Q3 navigation system.

Some of these assistants, such as Amazon’s Echo, work with any voice. Others can only be activated by a specific voice, although commands after this can be in any voice. In this case, the Chinese team says it is straightforward to try a number of different voices to find one similar enough to complete the activation.

(A common observation is that people with similar voices can activate each other’s voice-controlled phone.)

The team then used a Samsung Galaxy S6 edge connected to an ultrasonic loudspeaker to test the attack both in the lab and in the real world with background noise.

The results are unnerving. “We show that the DolphinAttack voice commands, though totally inaudible and therefore imperceptible to humans, can be received by the audio hardware of devices and correctly understood by speech recognition systems,” say Guoming and co.

The team used the system to persuade Siri to initiate a FaceTime call on an iPhone, to force Google Now to switch a phone to airplane mode, and even to manipulate the navigation system in an Audi automobile.

More advanced commands are clearly possible. “We believe this list is by far not comprehensive,” say the researchers.

That will make for uncomfortable reading for security-conscious owners of these devices. It’s not hard to imagine how a malicious user might exploit this weakness to secretly initiate calls that eavesdrop on conversations, to switch off a phone to prevent it receiving data, or to repurpose the navigation system on a voice-controlled car.

And as voice-controlled systems become more capable, the threat becomes greater and more worrying. Google’s latest Assistant is significantly more capable than previous incarnations, for example.

Guoming and co say the threat can be mitigated. The most obvious way is to redesign the microphones to reduce their sensitivity to ultrasonic carrier waves. This should be straightforward but it does not help the millions of people who already own a smartphone, assistant, or car that is at risk.

For this group, a software-based solution is more practical. Ultrasonic commands differ from natural voice signals in several distinctive ways that should be easy to spot. Developing such a system shouldn’t be too tricky, although delivering it to millions of users will be.

In the meantime, a modified smartphone that can carry out this kind of attack is easy to build. Which means that millions of smart devices around the globe are at risk.

Ref: arxiv.org/abs/1708.09537: DolphinAttack: Inaudible Voice Commands
