How Machine Learning Is Helping Neuroscientists Crack Our Neural Code

Whenever you move your hand or finger or eyeball, the brain sends a signal to the relevant muscles containing the information that makes this movement possible. This information is encoded in a special way that allows it to be transmitted through neurons and then actioned correctly by the relevant muscles.

Exactly how this code works is something of a mystery. Neuroscientists have long been able to record these signals as they travel through neurons. But understanding them is much harder. Various algorithms exist that can decode some of these signals, but their performance is patchy. So a better way of decoding neural signals is desperately needed.

Today, Joshua Glaser at Northwestern University in Chicago and a few pals say they have developed just such a technique using the new-fangled technology of machine learning. They say their decoder significantly outperforms existing approaches. Indeed, it is so much better that the team says it should become the standard method for analyzing neural signals in future.

First some background. Information travels along nerve fibers in the form of voltage spikes, or action potentials. Neuroscientists believe that the pattern of spikes encodes data about external stimuli, such as touch, sight, and sound. The brain encodes information about muscle movement in a similar way.

Understanding this code is an important goal. It allows neuroscientists to better understand the information that is sent to and processed by the brain. It also helps explain how the brain controls muscles.

Engineers would dearly love to have better brain-machine interfaces for controlling wheelchairs, prosthetic limbs, and video games. “Decoding is a critical tool for understanding how neural signals relate to the outside world,” say Glaser and co.

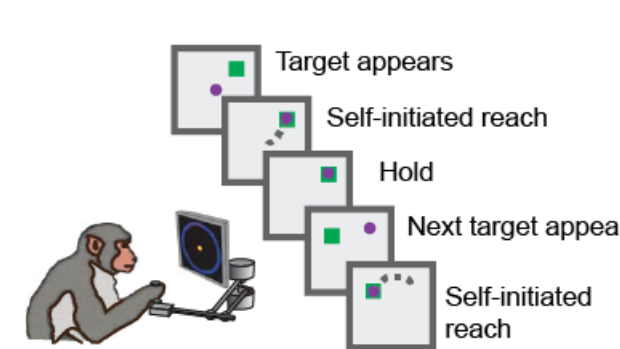

Their method is straightforward. They’ve trained macaque monkeys to move a screen cursor toward a target using a kind of computer mouse. In each test, the cursor and target appear on a screen at random locations, and the monkey has to move the cursor horizontally and vertically to reach the goal.

Having trained the animals, Glaser and co recorded the activity of dozens of neurons in the parts of their brains that control movement: the primary motor cortex, the dorsal premotor cortex, and the primary somatosensory cortex. The recordings lasted for around 20 minutes, which is about the attention span of the monkeys … and the experimenters.

The job of a decoding algorithm is to determine the horizontal and vertical distance that the monkey moves the cursor in each test, using only the neural data.

Glaser and co’s goal was to find out which kind of decoding algorithm does this best. So they fed the data into a variety of conventional algorithms and several new machine-learning algorithms.

The conventional algorithms work using a statistical technique known as linear regression. This involves fitting a straight-line relationship between the neural activity and the movement, choosing the parameters that minimize the error between the predicted and the actual movement. It is widely used in neural decoding in techniques such as Kalman filters and Wiener cascades.
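To make the idea concrete, here is a minimal sketch of a linear-regression decoder in plain Python. It is not Glaser and co's code: the function names, the two "neurons," and the spike counts are all invented for illustration, and a real analysis would use a numerical library and many more neurons and time bins.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    # Build the augmented matrix [A | b] without modifying the inputs.
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_linear_decoder(spike_counts, movement):
    """Least-squares fit: movement ~ w0 + w1*count_1 + w2*count_2 + ..."""
    X = [[1.0] + [float(c) for c in row] for row in spike_counts]
    k = len(X[0])
    # Normal equations: (X^T X) w = X^T y
    XtX = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    Xty = [sum(x[i] * y for x, y in zip(X, movement)) for i in range(k)]
    return solve_linear_system(XtX, Xty)


def decode(weights, spike_counts):
    """Predict movement from spike counts using the fitted weights."""
    return [weights[0] + sum(w * c for w, c in zip(weights[1:], row))
            for row in spike_counts]


# Hypothetical data: spike counts of two neurons in five time bins, and a
# movement that (by construction) depends linearly on those counts.
spike_counts = [[0, 1], [1, 0], [2, 3], [3, 1], [4, 4]]
movement = [0.5 + 2.0 * a - 1.0 * b for a, b in spike_counts]

weights = fit_linear_decoder(spike_counts, movement)
predicted = decode(weights, spike_counts)
```

Because the toy data is exactly linear, the fitted weights recover the values used to generate it. Real neural recordings are noisy, so a fit of this kind would only approximate the movement.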

Glaser and co compared these techniques to a variety of machine-learning approaches based on neural networks. These included a Long Short Term Memory Network, a recurrent neural network, and a feedforward neural network.

All these learn from annotated data sets, and the bigger the data set, the better they learn. Training and evaluating them generally involves dividing the data set in two, with 80 percent used to train the algorithm and the other 20 percent used to test it.
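An 80/20 split of this kind might look like the following sketch. The function name is hypothetical, and cutting the recording at a single point in time (rather than shuffling) is an assumption made here because the data is a time series; the article does not say how the split was made.

```python
def split_train_test(samples, train_fraction=0.8):
    """Split an ordered sequence into a training part and a held-out test part."""
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]


# Hypothetical example: ten consecutive time bins of a recording.
time_bins = list(range(10))
train_bins, test_bins = split_train_test(time_bins)
```

The decoder only ever sees `train_bins` while learning, so its score on `test_bins` reflects how well it generalizes to data it has not seen.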

The results are convincing. Glaser and co say that the machine-learning techniques significantly outperformed the conventional analyses. “For instance, for all of the three brain areas, a Long Short Term Memory Network decoder explained over 40% of the unexplained variance from a Wiener filter,” they say. “These results suggest that modern machine-learning techniques should become the standard methodology for neural decoding.”
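One plausible reading of "explained over 40% of the unexplained variance" is that the new decoder closes that share of the gap between the baseline's score and a perfect score. The sketch below works through that arithmetic using the standard R² measure of explained variance. The function names and the scores of 0.70 and 0.82 are invented for illustration and are not figures from the paper.

```python
def r_squared(actual, predicted):
    """Fraction of the variance in `actual` that `predicted` accounts for."""
    mean = sum(actual) / len(actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_total = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_residual / ss_total


def share_of_unexplained_variance(r2_baseline, r2_new):
    """How much of the variance the baseline missed does the new decoder explain?"""
    return (r2_new - r2_baseline) / (1.0 - r2_baseline)


# Hypothetical scores: a baseline decoder with R^2 = 0.70 leaves 30 percent
# of the variance unexplained. A decoder with R^2 = 0.82 recovers 12 of
# those 30 points, which is roughly 40 percent of what was left over.
gain = share_of_unexplained_variance(0.70, 0.82)
```

On this reading, a 40 percent figure is a statement about the remaining error, not a 40-point jump in raw accuracy.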

In some ways, it’s not surprising that machine-learning techniques do so much better. Neural networks were originally inspired by the architecture of the brain, so the fact that they can better model how it works is expected.

The downside of neural nets is that they generally need large amounts of training data. But Glaser and co deliberately reduced the amount of training data they fed to the algorithms and found the neural nets still outperformed the conventional techniques.

That’s probably because the team used smaller networks than are conventionally used for techniques such as face recognition. “Our networks have on the order of 100 thousand parameters, while common networks for image classification can have on the order of 100 million parameters,” they say.
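To get a feel for what "on the order of 100 thousand parameters" means, the sketch below counts the weights and biases in a small fully connected network. The layer sizes are hypothetical and chosen only to land in that range; they are not the architecture Glaser and co used.

```python
def count_dense_parameters(layer_sizes):
    """Count weights plus biases in a fully connected feedforward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 500 inputs (say, 50 neurons x 10 time bins),
# two hidden layers of 150 units, and 2 outputs (horizontal and vertical).
hypothetical_layers = [500, 150, 150, 2]
total_parameters = count_dense_parameters(hypothetical_layers)  # 98,102
```

A network of this size has about a thousand times fewer parameters to fit than the image classifiers mentioned in the quote, which is consistent with it needing far less training data.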

The work opens the way for others to build on this analysis. Glaser and co have made their code available for the community so that existing neural data sets can be reanalyzed in the same way.

There is plenty to do. Perhaps the most significant task will be in finding a way to carry out the neural decoding in real time. All of Glaser and co’s work was done offline after the recordings had been made. But it would clearly be useful to be able to learn on the fly and predict movement as it happens.

This is a powerful approach that has significant potential. In other areas of science where machine learning has been applied for the first time, researchers have stumbled across much low-hanging fruit. It would be a surprise if the same weren’t true of neural decoding.

Ref: arxiv.org/abs/1708.00909: Machine Learning for Neural Decoding
