Machine-Vision Algorithm Learns to Judge People by Their Faces

Social psychologists have long known that humans make snap judgments about each other based on nothing more than the way we look and, in particular, our faces. We use these judgments to determine whether a new acquaintance is trustworthy or clever or dominant or sociable or humorous and so on.

These decisions may or may not be right and are by no means objective, but they are consistent. Given the same face in the same conditions, people tend to judge it in the same way.

And that raises an interesting possibility. Rapid advances in machine vision and facial recognition have made it straightforward for computers to recognize a wide range of human facial expressions and even to rate faces by attractiveness. So is it possible for a machine to look at a face and get the same first impressions that humans make?

Today, we get an answer thanks to the work of Mel McCurrie at the University of Notre Dame and a few buddies. They’ve trained a machine-learning algorithm to decide whether a face is trustworthy or dominant in the same way as humans do.

Their method is straightforward. The first step in any machine-learning process is to create a data set that the algorithm can learn from. That means a set of pictures of faces labeled with the way people judge them—whether trustworthy, dominant, clever, and so on.

McCurrie and co create this using a website called TestMyBrain.org, a kind of citizen science project that measures various psychological attributes of the people who visit. The site is one of the most popular brain testing sites on the Web, with over 1.6 million participants.

The team asked participants to rate 6,300 black and white pictures of faces. Each face was rated by 32 different people for trustworthiness and dominance and by 15 people for IQ and age.
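To make the labeling step concrete, here is a minimal sketch of how many individual ratings might be collapsed into one label per face. It assumes the label is simply the mean rating, which the article does not state; the face IDs, the rating values, and the `aggregate_ratings` helper are all hypothetical.

```python
from statistics import mean, pstdev

def aggregate_ratings(ratings_by_face):
    """Collapse each face's list of ratings into a mean, a spread, and a count."""
    labels = {}
    for face_id, ratings in ratings_by_face.items():
        if not ratings:
            raise ValueError(f"no ratings for {face_id}")
        labels[face_id] = {
            "mean": mean(ratings),      # assumed training label
            "spread": pstdev(ratings),  # how much the raters disagreed
            "n": len(ratings),
        }
    return labels

# Hypothetical trustworthiness ratings (the real study collected 32 per face).
ratings = {
    "face_001": [2, 4, 4, 6],
    "face_002": [5, 5, 5, 5],
}
labels = aggregate_ratings(ratings)
```

Keeping the spread next to the mean is a design choice: it records how consistent the raters were, which is the property the whole approach relies on.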

An interesting feature of these ratings is that there is no objective answer—the test simply records the opinion of the evaluator. Of course, it is possible to measure IQ and age and work out how well people are able to guess these values. But McCurrie and co are not interested in this. All they want to measure is the range of people’s impressions and then train a machine to reproduce the same results.

Having gathered this data, the team used 6,000 of the images to train their machine-vision algorithm. They used a further 200 images to fine-tune the machine-vision parameters. All this trains the machine to judge faces in the same way that humans do.

McCurrie and co save the last 100 images to test the machine-vision algorithm—in other words, to see whether it jumps to the same conclusions that humans do.
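The 6,000 / 200 / 100 division described above is a standard train, validation, and test split. The sketch below shows one way to produce it. The random shuffle, the fixed seed, and the `split_dataset` helper are assumptions for illustration; the article does not say how the team chose which images went where.

```python
import random

def split_dataset(items, n_train=6000, n_val=200, n_test=100, seed=0):
    """Shuffle items and cut them into train / validation / test lists."""
    if len(items) < n_train + n_val + n_test:
        raise ValueError("not enough items for the requested split")
    shuffled = list(items)                 # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a repeatable split
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    return train, val, test

# Stand-in IDs for the 6,300 rated face images.
image_ids = list(range(6300))
train, val, test = split_dataset(image_ids)
```

The point of holding back the final 100 images is that the machine never sees them during training or tuning, so its ratings on them are a fair test.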

The results make for interesting reading. Of course, the machine reproduces the same behavior that it has learned from humans. When presented with a face, the machine gives more or less the same values for trustworthiness, dominance, age, and IQ as a human would.
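"More or less the same values" can be put into numbers. The sketch below compares machine scores with human scores on held-out faces using the mean absolute error and the Pearson correlation. Both metrics are choices made here for illustration, not necessarily the ones reported in the paper, and the scores are made up.

```python
from math import sqrt

def mean_absolute_error(human, machine):
    """Average size of the gap between paired human and machine scores."""
    if len(human) != len(machine) or not human:
        raise ValueError("need two non-empty lists of equal length")
    return sum(abs(h - m) for h, m in zip(human, machine)) / len(human)

def pearson(xs, ys):
    """Pearson correlation: 1.0 means the two lists rise and fall together."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(vx * vy)

# Hypothetical scores for four held-out faces.
human_scores = [1.0, 2.0, 3.0, 4.0]
machine_scores = [1.5, 2.5, 3.5, 4.5]
mae = mean_absolute_error(human_scores, machine_scores)
r = pearson(human_scores, machine_scores)
```

In this toy case the machine ranks the faces exactly as the humans do but scores each one half a point higher, so the correlation is perfect while the error is not zero.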

McCurrie and co are able to tease apart how the machine does this. For example, they can tell which parts of the face the machine is using to make its judgments.

The team does this by covering different parts of a face and asking the machine to make its judgment. If the outcome is significantly different from the usual value, they assume this part of the face must be important. In this way, they can tell which parts of the face the machine relies on most when making its judgment.
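This covering procedure is often called occlusion sensitivity. The sketch below illustrates the idea on a toy example: it blanks one square patch at a time and records how far the score moves. The `toy_score` function stands in for the trained model, and the patch size, fill value, and image size are all assumptions, not details from the paper.

```python
def occlusion_map(image, score_fn, patch=8, fill=0.0):
    """Cover one patch at a time and record the absolute change in score."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heat = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            covered = [list(r) for r in image]  # fresh copy for each patch
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    covered[y][x] = fill
            row.append(abs(score_fn(covered) - baseline))
        heat.append(row)
    return heat

def toy_score(image):
    """Hypothetical model that only looks at a 'mouth' region low in the image."""
    region = [image[y][x] for y in range(24, 32) for x in range(8, 24)]
    return sum(region) / len(region)

# A 32x32 all-white stand-in for a grayscale face image.
face = [[1.0] * 32 for _ in range(32)]
heat = occlusion_map(face, toy_score)
```

Here only the two bottom-center patches change the score, so the resulting map points straight at the region the toy model depends on.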

Curiously, these turn out to be similar to the parts of the face that humans rely on. Social psychologists know that humans tend to look at the mouth when assessing trustworthiness and that a lowered brow is often associated with dominance.

And these are exactly the areas that the machine-vision algorithm learns to look at from the training data. “These observations indicate that our models have learned to look in the same places that humans do, replicating the way we judge high-level attributes in each other,” say McCurrie and co.

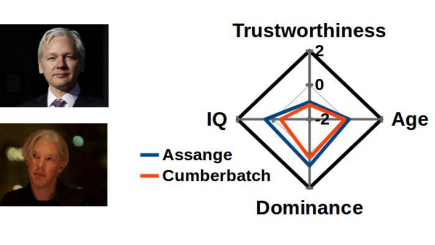

That leads to a number of interesting applications. McCurrie and co first apply it to acting. They use the machine to assess the trustworthiness and dominance of Edward Snowden and Julian Assange from pictures of their faces. They then use the machine to make the same assessment of the actors who play them in two recent movies: Joseph Gordon-Levitt and Benedict Cumberbatch, respectively.

In effect, this predicts how a crowd might assess the similarity between an actor and the person he or she portrays.

The results are clear. It turns out that the machine rates both actors in a similar way to the humans they portray—all score poorly in trustworthiness, for example. “Our models output remarkably similar predictions between the subjects and their actors, attesting to the accuracy of the portrayals in the films,” say McCurrie and co.

But the team can go further. They apply the machine-vision algorithm to each frame in a movie, which allows them to see how the ratings change over time. This provides a measure of the way people’s perceptions might change over time. And that’s something that could be used in research, marketing, political campaigning, and so on.
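Frame-by-frame scoring can be sketched as follows: score each frame independently, then smooth the resulting series so that the trend is easier to read. The smoothing step is an addition made here, not something the article describes, and the one-pixel frames and `score_fn` are hypothetical stand-ins for real video frames and the trained model.

```python
def score_frames(frames, score_fn):
    """Apply the scoring function to every frame, in order."""
    return [score_fn(frame) for frame in frames]

def moving_average(values, window=3):
    """Trailing moving average; early entries use however many values exist."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

# Toy one-pixel "frames"; the scorer just reads that pixel.
frames = [[[v]] for v in (0.0, 3.0, 6.0, 9.0)]
scores = score_frames(frames, lambda frame: frame[0][0])
trend = moving_average(scores, window=2)
```

A trailing window is used so that each point depends only on frames already seen, which would also suit live video.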

The work also suggests future avenues to pursue. One possibility is to test how first impressions change between cultural or demographic groups.

All this makes it possible to start teasing apart the factors that contribute to our preconceptions, which often depend on subtle social cues. It may also allow robots to predict and repeat them.

A fascinating corollary to this is how this kind of research could influence human behavior. If somebody discovered that their face is perceived as untrustworthy, how might that person react? Might it be possible to learn how to change this perception, perhaps by changing facial expressions? Interesting work!

Ref: arxiv.org/abs/1610.08119: Predicting First Impressions with Deep Learning
