Software Dreams Up New Molecules in Quest for Wonder Drugs

What do you get if you cross aspirin with ibuprofen? Harvard chemistry professor Alán Aspuru-Guzik isn’t sure, but he’s trained software that could give him an answer by suggesting a molecular structure that combines properties of both drugs.

The AI program could help the search for new drug compounds. Pharmaceutical research tends to rely on two kinds of tools: software that exhaustively crawls through giant pools of candidate molecules using rules written by chemists, and simulations that try to identify or predict useful structures. The former relies on humans thinking of everything, while the latter is limited by the accuracy of simulations and the computing power required.

Aspuru-Guzik’s system can dream up structures more independently of humans and without lengthy simulations. It leverages its own experience, built up by training machine-learning algorithms with data on hundreds of thousands of drug-like molecules.

"It explores more intuitively, using chemical knowledge it learned, like a chemist would," says Aspuru-Guzik. "Humans could be better chemists with this kind of software as their assistant." Aspuru-Guzik was named to MIT Technology Review’s list of young innovators in 2010.

The new system was built using a machine-learning technique called deep learning, which has become pervasive in computing companies but is less established in the natural sciences. It uses a design known as a generative model, which takes in a trove of data and uses what it learned to generate plausible new data of its own.

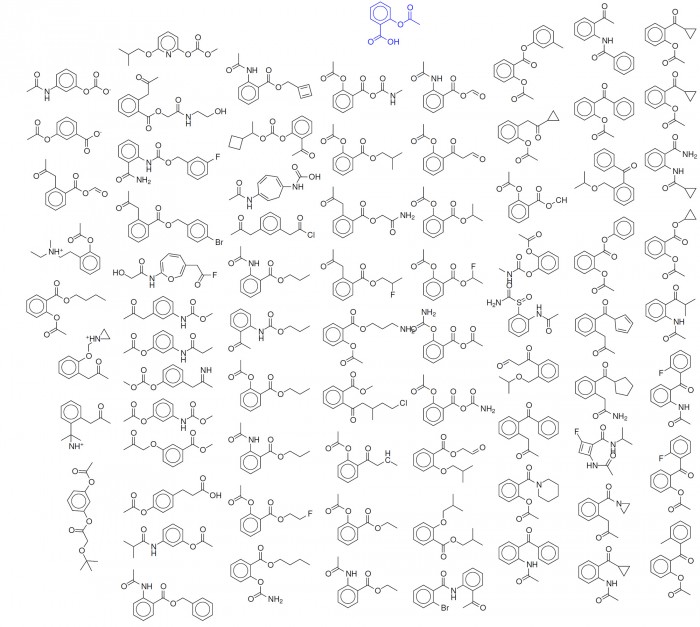

Generative models are more typically used to create images, speech, or text, for example in the case of Google’s Smart Reply feature that suggests responses to e-mails. But last month Aspuru-Guzik and colleagues at Harvard, the University of Toronto, and the University of Cambridge published results from creating a generative model trained on 250,000 drug-like molecules.

The system could generate plausible new structures by combining properties of existing drug compounds, and could be asked to suggest molecules that strongly displayed particular properties, such as being soluble and easy to synthesize.
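One common way a generative model can "combine" two inputs is to blend their internal numeric representations and then decode the blend back into a structure. The sketch below illustrates only the blending step, and it is an assumption about how such a system might work rather than a description of the researchers' code. The function name `interpolate` and the four-number vectors are hypothetical; a trained model would produce its own, much longer vectors.

```python
from typing import List


def interpolate(z_a: List[float], z_b: List[float], t: float) -> List[float]:
    """Linearly blend two latent vectors: t=0 returns z_a, t=1 returns z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


# Hypothetical latent codes, invented for illustration only.
# In a real system a trained encoder would map each molecule to its vector.
z_aspirin = [0.0, 1.0, 2.0, -1.0]
z_ibuprofen = [1.0, 0.0, 4.0, 1.0]

# The point halfway between the two molecules in the model's internal space.
midpoint = interpolate(z_aspirin, z_ibuprofen, 0.5)
print(midpoint)
```

In a full system, a decoder would turn `midpoint` into a candidate structure, which might or might not be chemically valid.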

Vijay Pande, a professor of chemistry at Stanford and partner with venture capital firm Andreessen Horowitz, says the project adds to the growing evidence that new ideas in machine learning will transform scientific research (see “Stopping Breast Cancer with Help from AI”).

It suggests that deep-learning software can internalize a kind of chemical knowledge, and use it to help scientists, he says. “I think this could be very broadly applicable,” says Pande. “It could play a role in finding or optimizing lead drug candidates, or other areas like solar cells or catalysts.”

The researchers have already experimented with training their system on a database of organic LED molecules, which are important for displays. But making the technique into a practical tool will require improving its chemistry skills, because the structures it suggests are sometimes nonsensical.

Pande says one challenge for asking software to learn chemistry may be that researchers have not yet identified the best data format to use to feed chemical structures into deep-learning software. Images, speech, and text have proven to be a good fit—as evidenced by software that rivals humans at image and speech recognition and translation—but existing ways of encoding chemical structures may not be quite right.
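To make the data-format question concrete, here is a minimal sketch of one possible encoding: writing each molecule as a line of text and turning every character into a one-hot vector. The article does not say which format the researchers used; the choice of SMILES, a widely used text notation for molecules, is an assumption made for illustration, and the helper names `build_vocab` and `one_hot` are hypothetical.

```python
from typing import Dict, List

# SMILES strings for the two drugs mentioned at the top of the article.
ASPIRIN = "CC(=O)OC1=CC=CC=C1C(=O)O"
IBUPROFEN = "CC(C)CC1=CC=C(C=C1)C(C)C(=O)O"


def build_vocab(strings: List[str]) -> Dict[str, int]:
    """Assign an integer index to every distinct character in the inputs."""
    chars = sorted(set("".join(strings)))
    return {ch: i for i, ch in enumerate(chars)}


def one_hot(s: str, vocab: Dict[str, int], length: int) -> List[List[int]]:
    """Encode s as `length` rows, each with a single 1.

    Positions past the end of s use an extra padding column, so every
    molecule becomes a grid of the same shape.
    """
    pad_index = len(vocab)
    rows = []
    for pos in range(length):
        row = [0] * (len(vocab) + 1)
        index = vocab[s[pos]] if pos < len(s) else pad_index
        row[index] = 1
        rows.append(row)
    return rows


molecules = [ASPIRIN, IBUPROFEN]
vocab = build_vocab(molecules)
max_len = max(len(m) for m in molecules)
encoded = [one_hot(m, vocab, max_len) for m in molecules]
```

An encoding like this treats a molecule as a flat sequence of symbols, which illustrates Pande's concern: atoms that are close together in the actual structure can sit far apart in the string.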

Aspuru-Guzik and his colleagues are thinking about that, along with adding new features to their system to reduce its chemical blooper rate.

He also hopes that giving his system more data, to broaden its chemistry knowledge, will improve its power, in the same way that databases of millions of photos have helped image recognition become useful. The American Chemical Society’s database records around 100 million published chemical structures. Before long, Aspuru-Guzik hopes to feed all of them to a version of his AI program.
