Self-Driving Cars Can Learn a Lot by Playing Grand Theft Auto

Spending thousands of hours playing Grand Theft Auto might have questionable benefits for humans, but it could help make computers significantly more intelligent.

Several research groups are now using the hugely popular game, which features fast cars and various nefarious activities, to train algorithms that might enable a self-driving car to navigate a real road.

There’s little chance of a computer learning bad behavior by playing violent computer games. But the stunningly realistic scenery found in Grand Theft Auto and other virtual worlds could help a machine perceive elements of the real world correctly.

A technique known as machine learning is enabling computers to do impressive new things, like identifying faces and recognizing speech as well as a person can. But the approach requires huge quantities of curated data, and gathering enough can be challenging and time-consuming. The scenery in many games is so realistic that it can be used to generate training data as good as data drawn from real-world imagery.

Some researchers already build 3-D simulations using game engines to generate training data for their algorithms (see “To Get Truly Smart, AI Might Need to Play More Video Games”). However, off-the-shelf computer games, featuring hours of photorealistic imagery, could provide an easier way to gather large quantities of training data.

A team of researchers from Intel Labs and Darmstadt University in Germany has developed a clever way to extract useful training data from Grand Theft Auto.

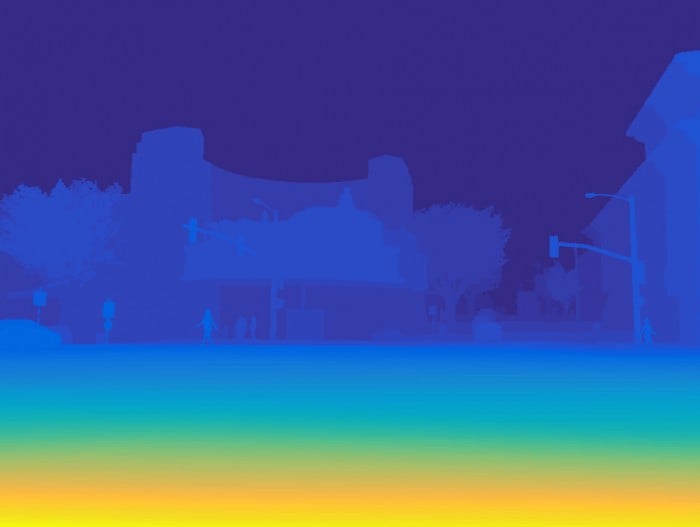

The researchers created a software layer that sits between the game and a computer’s hardware, automatically classifying different objects in the road scenes shown in the game. This provides the labels that can then be fed to a machine-learning algorithm, allowing it to recognize cars, pedestrians, and other objects shown, either in the game or on a real street. According to a paper posted by the team recently, it would be nearly impossible to have people label all of the scenes with similar detail manually. The researchers also say that real training images can be improved with the addition of some synthetic imagery.
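The article does not describe how the layer works internally, so the following is only an illustrative sketch of the general idea: if each pixel in a rendered frame can be tied to the game resource that drew it, a small lookup table can turn a frame into per-pixel labels. The resource IDs, the `RESOURCE_TO_CLASS` table, and both function names are hypothetical and are not taken from the researchers' software.

```python
from collections import Counter

# Hypothetical table: which game resource (by ID) belongs to which class.
# In practice a person would need to label each resource only once.
RESOURCE_TO_CLASS = {
    101: "road",
    202: "car",
    303: "pedestrian",
}


def label_frame(resource_ids, resource_to_class, unknown="unlabeled"):
    """Map a 2-D grid of per-pixel resource IDs to a grid of class names."""
    return [
        [resource_to_class.get(rid, unknown) for rid in row]
        for row in resource_ids
    ]


def class_pixel_counts(label_map):
    """Count how many pixels carry each class label."""
    return Counter(label for row in label_map for label in row)


# A toy 3x3 "frame" of resource IDs (assumed input; 999 is an unknown resource).
frame = [
    [101, 101, 202],
    [101, 303, 202],
    [101, 101, 999],
]

labels = label_frame(frame, RESOURCE_TO_CLASS)
counts = class_pixel_counts(labels)
```

Because the lookup is per resource rather than per image, one annotation could in principle carry over to every frame in which that resource appears, which is one plausible reason such labeling would be far cheaper than marking up each scene by hand.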

One of the big challenges in AI is how to slake the thirst for data exhibited by the most powerful machine-learning algorithms. This is especially problematic for real-world tasks like automated driving. It takes thousands of hours to collect real street imagery, and thousands more to label all of those images. It’s also impractical to go through every possible scenario in real life, like crashing a car into a brick wall at high speed.

“Annotating real-world data is an expensive operation and the current approaches do not scale up easily,” says Alireza Shafaei, a PhD student at the University of British Columbia who recently coauthored a paper showing how video games can be used to train a computer vision system, in some cases as well as real data can. Together with Mark Schmidt, an assistant professor at UBC, and Jim Little, a professor at UBC, Shafaei showed that video games also provide an easy way to vary the environmental conditions found in training data.

“With artificial environments we can effortlessly gather precisely annotated data at a larger scale with a considerable amount of variation in lighting and climate settings,” Shafaei says. “We showed that this synthetic data is almost as good, or sometimes even better, than using real data for training.”

AI researchers already use simple games as a way to test the learning capabilities of their algorithms (see “Google’s AI Masters Space Invaders” and “Minecraft Is a Testing Ground for Human-AI Collaboration”). But there is growing interest in using game scenery to feed algorithms visual training data. A group at Johns Hopkins University in Baltimore, for instance, is developing a tool that can be used to connect a machine-learning algorithm to any environment built using the popular game engine Unreal. This includes games such as KiteRunner and Hellblade, but also many spectacular architectural visualizations.

Rockstar Games, the studio behind the Grand Theft Auto franchise, declined the opportunity to comment for this piece.
